80,000 hours should remove OpenAI from the Job Board (and similar EA orgs should do similarly)

By Raemon @ 2024-07-03T20:34 (+263)

I haven't shared this post with other relevant parties – my experience has been that private discussion of this sort of thing is more paralyzing than helpful. I might change my mind in the resulting discussion, but, I prefer that discussion to be public.

I think 80,000 hours should remove OpenAI from its job board, and similar EA job placement services should do the same.

(I personally believe 80k shouldn't advertise Anthropic jobs either, but I think the case for that is somewhat less clear)

I think OpenAI has demonstrated a level of manipulativeness, recklessness, and failure to prioritize meaningful existential safety work, that makes me think EA orgs should not be going out of their way to give them free resources. (It might make sense for some individuals to work there, but this shouldn't be a thing 80k or other orgs are systematically funneling talent into)

There plausibly should be some kind of path to get back into good standing with the AI Risk community, although it feels difficult to imagine how to navigate that, given how adversarial OpenAI's use of NDAs was, and how difficult that makes it to trust future commitments.

The things that seem most significant to me:

- They promised the superalignment team 20% of their compute-at-the-time (which AFAICT wasn't even a large fraction of their compute over the coming years), but didn't provide anywhere close to that, and then disbanded the team when Leike left.

- Their widespread use of non-disparagement agreements, with non-disclosure clauses, which generally makes it hard to form accurate impressions about what's going on at the organization.

- Helen Toner's description of how Sam Altman wasn't forthright with the board. (i.e. "The board was not informed about ChatGPT in advance and learned about ChatGPT on Twitter. Altman failed to inform the board that he owned the OpenAI startup fund despite claiming to be an independent board member, giving false information about the company’s formal safety processes on multiple occasions. And relating to her research paper, that Altman in the paper’s wake started lying to other board members in order to push Toner off the board.")

- Hearing from multiple ex-OpenAI employees that OpenAI safety culture did not seem on track to handle AGI. Some of these are public (Leike, Kokotajlo), others were in private.

This is before getting into more open-ended arguments like "it sure looks to me like OpenAI substantially contributed to the world's current AI racing" and "we should generally have a quite high bar for believing that the people running a for-profit entity building transformative AI are doing good, instead of causing vast harm, or at best, being a successful for-profit company that doesn't especially warrant help from EAs."

I am generally wary of AI labs (i.e. Anthropic and Deepmind), and think EAs should be less optimistic about working at large AI orgs, even in safety roles. But, I think OpenAI has demonstrably messed up, badly enough, publicly enough, in enough ways that it feels particularly wrong to me for EA orgs to continue to give them free marketing and resources.

I'm mentioning 80k specifically because I think their job board seemed like the largest funnel of EA talent, and because it seemed better to pick a specific org than a vague "EA should collectively do something." (see: EA should taboo "EA should"). I do think other orgs that advise people on jobs or give platforms to organizations (i.e. the organization fair at EA Global) should also delist OpenAI.

My overall take is something like: it is probably good to maintain some kind of intellectual/diplomatic/trade relationships with OpenAI, but bad to continue giving them free extra resources, or treat them as an org with good EA or AI safety standing.

It might make sense for some individuals to work at OpenAI, but doing so in a useful way seems very high skill, and high-context – not something to funnel people towards in a low-context job board.

I also want to clarify: I'm not against 80k continuing to list articles like Working at an AI Lab, which are more about how to make the decisions, and go into a fair bit of nuance. I disagree with that article, but it seems more like "trying to lay out considerations in a helpful way" than "just straightforwardly funneling people into positions at a company." (I do think that article seems out of date and worth revising in light of new information. I think "OpenAI seems inclined towards safety" now seems demonstrably false, or at least less true in the ways that matter. And this should update you on how true it is for the other labs, or how likely it is to remain true)

FAQ / Appendix

Some considerations and counterarguments which I've thought about, arranged as a hypothetical FAQ.

Q: It seems that, like it or not, OpenAI is a place transformative AI research is likely to happen, and having good people work there is important.

Isn't it better to have alignment researchers working there, than not? Are you sure you're not running afoul of misguided purity instincts?

I do agree it might be necessary to work with OpenAI, even if they are reckless and negligent. I'd like to live in the world where "don't work with companies causing great harm" was a straightforward rule to follow. But we might live in a messy, complex world where some good people may need to work with harmful companies anyway.

But: we've now had two waves of alignment people leave OpenAI. The second wave has multiple people explicitly saying things like "quit OpenAI due to losing confidence that it would behave responsibly around the time of AGI."

The first wave, my guess is they were mostly under non-disclosure/non-disparagement agreements, and we can't take their lack of criticism as much evidence.

It looks to me, from the outside, like OpenAI is just not really structured or encultured in a way that makes it that viable for someone on the inside to help improve things much. I don't think it makes sense to continue trying to improve OpenAI's plans, at least until OpenAI has some kind of credible plan (backed up by actions) of actually seriously working on existential safety.

I think it might make sense for some individuals to go work at OpenAI anyway, who have an explicit plan for how to interface with the organizational culture. But I think this is a very high context, high skill job. (i.e. skills like "keeping your eye on the AI safety ball", "interfacing well with OpenAI staff/leadership while holding onto your own moral/strategic compass", "knowing how to prioritize research that differentially helps with existential safety, rather than mostly amounting to near-term capabilities work.")

I don't think this is the sort of thing you should just funnel people into on a jobs board.

I think it makes a lot more sense to say "look, you had your opportunity to be taken on faith here, you failed. It is now OpenAI's job to credibly demonstrate that it is worthwhile for good people to join there trying to help, rather than for them to take that on faith."

Q: What about jobs like "security research engineer"?

That seems straightforwardly good for OpenAI to have competent people for, and probably doesn't require a good "Safety Culture" to pay off?

The argument for this seems somewhat plausible. I still personally think it makes sense to fully delist OpenAI positions unless they've made major changes to the org (see below).

I'm operating here from a cynical/conflict-theory-esque stance. I think OpenAI has exploited the EA community and it makes sense to engage with them from more of a cynical "conflict theory" stance. I think it makes more sense to say, collectively, "knock it off", and switch to default "apply pressure." I think if OpenAI wants to find good security people, that should be their job, not EA organizations.

But, I don't have a really slam dunk argument that this is the right stance to take. For now, I list it as my opinion, but acknowledge there are other worldviews where it's less clear what to do.

Q: What about offering a path towards "good standing" to OpenAI?

It seems plausibly important to me to offer some kind of roadmap back to good standing. I do kinda think regulating OpenAI from the outside isn't likely to be sufficient, because it's too hard to specify what actually matters for existential AI safety.

So, it feels important to me not to fully burn bridges.

But, it seems pretty hard to offer any particular roadmap. We've got three different lines of OpenAI leadership breaking commitments, and being manipulative. So we're long past the point where "mere words" would reassure me.

Things that would reassure me are costly actions that are extremely unlikely in worlds where OpenAI would (intentionally or no) lure more people in and then still turn out to, nope, just be taking advantage of them for safety-washing / regulatory capture reasons.

Such actions seem pretty unlikely by now. Most of the examples I can think to spell out seem too likely to be gameable (i.e. if OpenAI were to announce a new Superalignment-equivalent team, or commitments to participate in eval regulations, I would guess they would only do the minimum necessary to look good, rather than a real version of the thing).

An example that'd feel pretty compelling is if Sam Altman actually really, for real, left the company, that would definitely have me re-evaluating my sense of the company. (This seems like a non-starter, but, listing for completeness).

I wouldn't put much stock in a Sam Altman apology. If Sam is still around, the most I'd hope for is some kind of realistic, real-talk, arms-length negotiation where it's common knowledge that we can't really trust each other but maybe we can make specific deals.

I'd update somewhat if Greg Brockman and other senior leadership (i.e. people who seem to actually have the respect of the capabilities and product teams), or maybe new board members, made clear statements indicating:

- they understand: how OpenAI messed up (in terms of not keeping commitments, and the manipulativeness of non-disclosure non-disparagement agreements)

- they take some actions that are holding Sam (and maybe themselves in some cases) accountable.

- they take existential risk seriously on a technical level. They have real cruxes for what would change their current scaling strategy. This is integrated into org-wide decisionmaking.

This wouldn't make me think "oh everything's fine now." But would be enough of an update that I'd need to evaluate what they actually said/did and form some new models.

Q: What if we left up job postings, but with an explicit disclaimer linking to a post saying why people should be skeptical?

This idea just occurred to me as I got to the end of the post. Overall, I think this doesn't make sense given the current state of OpenAI, but thinking about it opens up some flexibility in my mind about what might make sense, in worlds where we get some kind of costly signals or changes in leadership from OpenAI.

(My actual current guess is this sort of disclaimer makes sense for Anthropic and/or DeepMind jobs. This feels like a whole separate post though)

My actual range of guesses here is more cynical than this post focuses on. I'm focused on things that seemed easy to legibly argue for.

I'm not sure who has decisionmaking power at 80k, or most other relevant orgs. I expect many people to feel like I'm still bending over backwards being accommodating to an org we should have lost all faith in. I don't have faith in OpenAI, but I do still worry about escalation spirals and polarization of discourse.

When dealing with a potentially manipulative adversary, I think it's important to have backbone and boundaries and actual willingness to treat the situation adversarially. But also, it's important to leave room to update or negotiate.

But, I wanted to end with explicitly flagging the hypothesis that OpenAI is best modeled as a normal profit-maximizing org, that they basically co-opted EA into being a lukewarm ally it could exploit, when it'd have made sense to treat OpenAI more adversarially from the start (or at least be more "ready to pivot towards treating adversarially").

I don't know that that's the right frame, but I think the recent revelations should be an update towards that frame.

Conor Barnes @ 2024-07-03T22:20 (+96)

Hi, I run the 80,000 Hours job board, thanks for writing this out!

I agree that OpenAI has demonstrated a significant level of manipulativeness and have lost confidence in them prioritizing existential safety work. However, we don’t conceptualize the board as endorsing organisations. The point of the board is to give job-seekers access to opportunities where they can contribute to solving our top problems or build career capital to do so (as we write in our FAQ). Sometimes these roles are at organisations whose mission I disagree with, because the role nonetheless seems like an opportunity to do good work on a key problem.

For OpenAI in particular, we’ve tightened up our listings since the news stories a month ago, and are now only posting infosec roles and direct safety work – a small percentage of jobs they advertise. See here for the OAI roles we currently list. We used to list roles that seemed more tangentially safety-related, but because of our reduced confidence in OpenAI, we limited the listings further to only roles that are very directly on safety or security work. I still expect these roles to be good opportunities to do important work. Two live examples:

- Infosec

  - Even if we were very sure that OpenAI was reckless and did not care about existential safety, I would still expect them to not want their model to leak out to competitors, and importantly, we think it's still good for the world if their models don't leak! So I would still expect people working on their infosec to be doing good work.

- Non-infosec safety work

  - These still seem like potentially very strong roles with the opportunity to do very important work. We think it’s still good for the world if talented people work in roles like this!

  - This is true even if we expect them to lack political power and to play second fiddle to capabilities work and even if that makes them less good opportunities vs. other companies.

We also include a note on their 'job cards' on the job board (also DeepMind’s and Anthropic’s) linking to the Working at an AI company article you mentioned, to give context. We’re not opposed to giving more or different context on OpenAI’s cards and are happy to take suggestions!

Raemon @ 2024-07-04T05:29 (+84)

Nod, thanks for the reply.

I won't argue more for removing infosec roles at the moment. As noted in the post, I think this is at least a reasonable position to hold. I (weakly) disagree, but for reasons that don't seem worth getting into here.

The things I'd argue here:

- Safetywashing is actually pretty bad, for the world's epistemics and for EA and AI safety's collective epistemics. I think it also warps the epistemics of the people taking the job, so while they might be getting some career experience... they're also likely getting a distorted view of what AI safety is, and becoming worse researchers than they would otherwise.

- As previously stated – it's not that I don't think anyone should take these jobs, but I think the sort of person who should take them is someone who has a higher degree of context and skill than I expect the 80k job board to filter for.

- Even if you disagree with those points, you should have some kind of crux for "what would distinguish an 'impactful AI safety job?'" vs a fake safety-washed role. It should be at least possible for OpenAI to make a role so clearly fake that you notice and stop listing it.

- If you're set on continuing to list OpenAI Alignment roles, I think the current disclaimer is really inadequate and misleading. (Partly because of the object-level content in Working at an AI company, which I think wrongly characterizes OpenAI, and partly because what disclaimers you do have are deep in that post. On the top-level job ad, there's no indication that applicants should be skeptical about OpenAI.)

Re: cruxes for safetywashing

You'd presumably agree, OpenAI couldn't just call any old job 'Alignment Science' and have it automatically count as worth listing on your site.

Companies at least sometimes lie, and they often use obfuscating language to mislead. OpenAI's track record is such that, we know that they do lie and mislead. So, IMO, your prior here should be moderately high.

Maybe you only think it's, like, 10%? (or less? IMO less than 10% feels pretty strained to me). But, at what credence would you stop listing it on the job board? And what evidence would increase your odds?

Taking the current Alignment Science researcher role as an example:

We are seeking Researchers to help design and implement experiments for alignment research. Responsibilities may include:

- Writing performant and clean code for ML training

- Independently running and analyzing ML experiments to diagnose problems and understand which changes are real improvements

- Writing clean non-ML code, for example when building interfaces to let workers interact with our models or pipelines for managing human data

- Collaborating closely with a small team to balance the need for flexibility and iteration speed in research with the need for stability and reliability in a complex long-lived project

- Understanding our high-level research roadmap to help plan and prioritize future experiments

- Designing novel approaches for using LLMs in alignment research

You might thrive in this role if you:

- Are excited about OpenAI’s mission of building safe, universally beneficial AGI and are aligned with OpenAI’s charter

- Want to use your engineering skills to push the frontiers of what state-of-the-art language models can accomplish

- Possess a strong curiosity about aligning and understanding ML models, and are motivated to use your career to address this challenge

- Enjoy fast-paced, collaborative, and cutting-edge research environments

- Have experience implementing ML algorithms (e.g., PyTorch)

- Can develop data visualization or data collection interfaces (e.g., JavaScript, Python)

- Want to ensure that powerful AI systems stay under human control.

Is this an alignment research role, or a capabilities role that pays token lip service to alignment? My guess (60%) based on my knowledge of OpenAI is it's more like the latter.

It says "Designing novel approaches for using LLMs in alignment research", but that's only useful if you think OpenAI uses the phrase "alignment research" to mean something important. We know they eventually coined the term "superalignment", distinguished from most of what they'd been calling "alignment" work (where "superalignment" is closer to what was originally meant by the term).

If OpenAI was creating jobs that weren't really helpful at all, but labeling them "alignment" anyway, how would you know?

Conor Barnes @ 2024-07-04T20:24 (+8)

Re: On whether OpenAI could make a role that feels insufficiently truly safety-focused: there have been and continue to be OpenAI safety-ish roles that we don’t list because we lack confidence they’re safety-focused.

For the alignment role in question, I think the team description given at the top of the post gives important context for the role’s responsibilities:

OpenAI’s Alignment Science research teams are working on technical approaches to ensure that AI systems reliably follow human intent even as their capabilities scale beyond human ability to directly supervise them.

With the above in mind, the role responsibilities seem fine to me. I think this is all pretty tricky, but in general, I’ve been moving toward looking at this in terms of the teams:

Alignment Science: Per the above team description, I’m excited for people to work there – though, concerning the question of what evidence would shift me, this would change if the research they release doesn’t match the team description.

Preparedness: I continue to think it’s good for people to work on this team, as per the description: “This team … is tasked with identifying, tracking, and preparing for catastrophic risks related to frontier AI models.”

Safety Systems: I think roles here depend on what they address. I think the problems listed in their team description include problems I definitely want people working on (detecting unknown classes of harm, red-teaming to discover novel failure cases, sharing learning across industry, etc), but it’s possible that we should be more restrictive in which roles we list from this team.

I don’t feel confident giving a probability here, but I do think there’s a crux here around me not expecting the above team descriptions to be straightforward lies. It’s possible that the teams will have limited resources to achieve their goals, and with the Safety Systems team in particular, I think there’s an extra risk of safety work blending into product work. However, my impression is that the teams will continue to work on their stated goals.

I do think it’s worthwhile to think of some evidence that would shift me against listing roles from a team:

- If a team doesn’t publish relevant safety research within something like a year.

- If a team’s stated goal is updated to have less safety focus.

Other notes:

- We’re actually in the process of updating the AI company article.

- The top-level disclaimer: Yeah, I think this needs updating to something more concrete. We put it up while ‘everything was happening’ but I’ve neglected to change it, which is my mistake, and I will probably prioritize fixing it over the next few days.

- Thanks for diving into the implicit endorsement point. I acknowledge this could be a problem (and if so, I want to avoid it or at least mitigate it), so I’m going to think about what to do here.

Raemon @ 2024-07-05T19:54 (+35)

Thanks.

Fwiw while writing the above, I did also think "hmm, I should also have some cruxes for what would update me towards 'these jobs are more real than I currently think.'" I'm mulling that over and will write up some thoughts soon.

It sounds like you basically trust their statements about their roles. I appreciate you stating your position clearly, but, I do think this position doesn't make sense:

- we already have evidence of them failing to uphold commitments they've made in clear cut ways. (i.e. I'd count their superalignment compute promises as basically a straightforward lie, and if not a "lie", it at least clearly demonstrates that their written words don't count for much. This seems straightforwardly relevant to the specific topic of "what does a given job at OpenAI entail?", in addition to being evidence about their overall relationship with existential safety)

- we've similarly seen OpenAI change its stated policies, such as removing restrictions on military use. Or, initially being a nonprofit and converting into "for-profit-managed by non-profit" (where the "managed by nonprofit board" part turned out to be pretty ineffectual) (not sure if I endorse this, mulling over Habryka's comment)

Surely, this at least updates you downward on how trustworthy their statements are? How many times do they have to "say things that turned out not to be true" before you stop taking them at face value? And why is that "more times than they have already"?

Separate from straightforward lies, and/or altering of policy to the point where any statements they make seem very unreliable, there are plenty of degrees of freedom of "what counts as alignment." They are already defining alignment in a way that is pretty much synonymous with short-term capabilities. I think the plan of "iterate on 'alignment' with nearterm systems as best you can to learn and prepare" is not necessarily a crazy plan. There are people I respect who endorse it, who previously defended it as an OpenAI approach, although notably most of those people have now left OpenAI (sometimes still working on similar plans at other orgs).

But, it's very hard to tell the difference from the outside between:

- "iterating on nearterm systems, contributing to AI race dynamics in the process, in a way that has a decent chance of teaching you skills that will be relevant for aligning superintelligences"

- "iterating on nearterm systems, in a way that you think/hope will teach you skills for navigating superintelligence... but, you're wrong about how much you're learning, and whether it's net positive"

- "iterating on nearterm systems, and calling it alignment because it makes for better PR, but not even really believing that it's particularly necessary to navigate superintelligence."

When recommending jobs for organizations that are potentially causing great harm, I think 80k has a responsibility to actually form good opinions on whether the job makes sense, independent of what the organization says it's about.

You don't just need to model whether OpenAI is intentionally lying, you also need to model whether they are phrasing things ambiguously, and you need to model whether they are self-deceiving about whether these roles are legitimate alignment work, or valuable enough work to outweigh the risks. And, you need to model that they might just be wrong and incompetent at longterm alignment development (or: "insufficiently competent to outweigh risks and downsides"), even if their heart were in the right place.

I am very worried that this isn't already something you have explicit models about.

Habryka @ 2024-07-06T01:02 (+9)

we've similarly seen OpenAI change its stated policies, such as removing restrictions on military use.

As I've discussed in the comments on a related post, I don't think OpenAI meaningfully changed any of its stated policies with regards to military usage. I don't think OpenAI really ever promised anyone they wouldn't work with militaries, and framing this as violating a past promise weakens the ability to hold them accountable for promises they actually made.

What OpenAI did was to allow more users to use their product. It's similar to LessWrong allowing crawlers or jurisdictions that we previously blocked to now access the site. I certainly wouldn't consider myself to have violated some promise by allowing crawlers or companies to access LessWrong that I had previously blocked (or for a closer analogy, let's say we were currently blocking AI companies from crawling LW for training purposes, and I then change my mind and do allow them to do that, I would not consider myself to have broken any kind of promise or policy).

Raemon @ 2024-07-06T01:06 (+2)

Mmm, nod. I will look into the actual history here more, but, sounds plausible. (edited the previous comment a bit for now)

Elizabeth @ 2024-07-04T21:40 (+26)

The arguments you give all sound like reasons OpenAI safety positions could be beneficial. But I find them completely swamped by all the evidence that they won't be, especially given how much evidence OpenAI has hidden via NDAs.

But let's assume we're in a world where certain people could do meaningful safety work at OpenAI. What are the chances those people need 80k to tell them about it? OpenAI is the biggest, most publicized AI company in the world; if Alice only finds out about OpenAI jobs via 80k that's prima facie evidence she won't make a contribution to safety.

What could the listing do? Maybe Bob has heard of OAI but is on the fence about applying. An 80k job posting might push him over the edge to applying or accepting. The main way I see that happening is via a halo effect from 80k. The mere existence of the posting implies that the job is aligned with EA/80k's values.

I don't think there's a way to remove that implication with any amount of disclaimers. The job is still on the board. If anything disclaimers make the best case scenarios seem even better, because why else would you host such a dangerous position?

So let me ask: what do you see as the upside to highlighting OAI safety jobs on the job board? Not of the job itself, but the posting. Who is it that would do good work in that role, and the 80k job board posting is instrumental in them entering it?

Conor Barnes @ 2024-07-05T19:43 (+8)

Update: We've changed the language in our top-level disclaimers: example. Thanks again for flagging! We're now thinking about how to best minimize the possibility of implying endorsement.

Raemon @ 2024-07-05T23:51 (+9)

Following up my other comment:

To try to be a bit more helpful rather than just complaining and arguing: when I model your current worldview, and try to imagine a disclaimer that helps a bit more with my concerns but seems like it might work for you given your current views, here's a stab. Changes bolded.

OpenAI is a frontier AI research and product company, with teams working on alignment, policy, and security. We recommend specific opportunities at OpenAI that we think may be high impact. We recommend applicants pay attention to the details of individual roles at OpenAI, and form their own judgment about whether the role is net positive. We do not necessarily recommend working at other positions at OpenAI.

You can read considerations around working at a frontier AI company in our career review on the topic.

(it's not my main crux, but "frontier" felt both like a more up-to-date term for what OpenAI does, and also feels more specifically like it's making a claim about the product than generally awarding status to the company the way "leading" does)

Raemon @ 2024-07-05T19:46 (+2)

Thanks. This still seems pretty insufficient to me, but, it's at least an improvement and I appreciate you making some changes here.

Linda Linsefors @ 2024-07-24T09:20 (+1)

I can't find the disclaimer. Not saying it isn't there. But it should be obvious from just skimming the page, since that is what most people will do.

Owen Cotton-Barratt @ 2024-07-04T11:16 (+55)

I think that given the 80k brand (which is about helping people to have a positive impact with their career), it's very hard for you to have a jobs board which isn't kinda taken by many readers as endorsement of the orgs. Disclaimers help a bit, but it's hard for them to address the core issue — because for many of the orgs you list, you basically do endorse the org (AFAICT).

I also think it's a pretty different experience for employees to turn up somewhere and think they can do good by engaging in a good faith way to help the org do whatever it's doing, and for employees to not think that but think it's a good job to take anyway.

My take is that you would therefore be better splitting your job board into two sections:

- In one section, only include roles at orgs where you basically feel happy standing behind them, and think it's straightforwardly good for people to go there and help the orgs be better

- You can be conservative about inclusion here — and explain in the FAQ that non-inclusion in this list doesn't mean that it wouldn't be good to straightforwardly help the org, just that this isn't transparent enough to 80k to make the recommendation

- In another more expansive section, you could list all roles which may be impactful, following your current strategy

- If you only have this section (as at present), which I do think is a legit strategy, I feel you should probably rebrand it to something other than "80k job board", and it should live somewhere other than the 80k website — even if you continue to link it prominently from the 80k site

- Otherwise I think that you are in part spending 80k's reputation in endorsing these organizations

- If you have both of the sections I'm suggesting, I still kind of think it might be better to have this section not under the 80k brand — but I feel that much less strongly, since the existence of the first section should help a good amount in making it transparent that listing doesn't mean endorsement of the org

- (If the binary in/out seems too harsh, you could potentially include gradations, like "Fully endorsed org", "Partially endorsed org", and so on)

Edited to add: I want to acknowledge that at a gut level I'm very sympathetic to "but we explained all of this clearly!". I do think if people misunderstand you then that's partially on them. But I also think that it's sort of predictable that they will misunderstand in this way, and so it's also partially on you.

I should also say that I'm not confident in this read. If someone did a survey and found that say <10% of people browsing the 80k job board thought that 80k was kind of endorsing the orgs, I'd be surprised but I'd also update towards thinking that your current approach was a good one.

Habryka @ 2024-07-04T18:48 (+27)

I am generally very wary of trying to treat your audience as unsophisticated this way. I think 80k taking on the job of recommending the most impactful jobs, according to the best of their judgement, using the full nuance and complexity of their models, is much clearer and more straightforward than a recommendation which is something like "the most impactful jobs, except when we don't like being associated with something, or where the case for it is a bit more complicated than our other jobs, or where our funders asked us to not include it, etc.".

I do think that doing this well requires the ability to sometimes say harsh things about an organization. I think communicating accurately about job recommendations will inevitably require being able to say "we think working at this organization might be really miserable and might involve substantial threats, adversarial relationships, and you might cause substantial harm if you are not careful, but we think it's overall still a good choice if you take that into account". And I think those judgements need to be made on an organization-by-organization level (and can't easily be captured by generic statements in the context of the associated career guide).

Owen Cotton-Barratt @ 2024-07-04T19:55 (+28)

I don't think you should treat your audience as unsophisticated. But I do think you should acknowledge that you will have casual readers who will form impressions from a quick browse, and think it's worth doing something to minimise the extent to which they come away misinformed.

Separately, there is a level of blunt which you might wisely avoid being in public. Your primary audience is not your only audience. If you basically recommend that people treat a company as a hostile environment, then the company may reasonably treat the recommender as hostile, so now you need to recommend that they hide the fact they listened to you (or reveal it with a warning that this may make the environment even more hostile) ... I think it's very reasonable to just skip this whole dynamic.

Habryka @ 2024-07-04T21:34 (+10)

But I do think you should acknowledge that you will have casual readers who will form impressions from a quick browse, and think it's worth doing something to minimise the extent to which they come away misinformed.

Yeah, I agree with this. I like the idea of having different kinds of sections, and I am strongly in favor of making things be true at an intuitive glance as well as on closer reading (I like something in the vicinity of "The Onion Test" here)

Separately, there is a level of blunt which you might wisely avoid being in public. Your primary audience is not your only audience. If you basically recommend that people treat a company as a hostile environment, then the company may reasonably treat the recommender as hostile, so now you need to recommend that they hide the fact they listened to you (or reveal it with a warning that this may make the environment even more hostile) ... I think it's very reasonable to just skip this whole dynamic.

I feel like this dynamic is just fine? I definitely don't think you should recommend that they hide the fact they listened to you, that seems very deceptive. I think you tell people your honest opinion, and then if the other side retaliates, you take it. I definitely don't think 80k should send people to work at organizations as some kind of secret agent, and I think responding by protecting OpenAI's reputation by not disclosing crucial information about the role feels like straightforwardly giving in to an unjustified threat.

Owen Cotton-Barratt @ 2024-07-04T22:02 (+11)

Hmm at some level I'm vibing with everything you're saying, but I still don't think I agree with your conclusion. Trying to figure out what's going on there.

Maybe it's something like: I think the norms prevailing in society say that in this kind of situation you should be a bit courteous in public. That doesn't mean being dishonest, but it does mean shading the views you express towards generosity, and sometimes gesturing at rather than flat expressing complaints.

With these norms, if you're blunt, you encourage people to read you as saying something worse than is true, or to read you as having an inability to act courteously. Neither of which are messages I'd be keen to send.

And I sort of think these norms are good, because they're softly de-escalatory in terms of verbal spats or ill feeling. When people feel attacked it's easy for them to be a little irrational and vilify the other side. If everyone is blunt publicly I think this can escalate minor spats into major fights.

Habryka @ 2024-07-04T22:48 (+18)

Maybe it's something like: I think the norms prevailing in society say that in this kind of situation you should be a bit courteous in public. That doesn't mean being dishonest, but it does mean shading the views you express towards generosity, and sometimes gesturing at rather than flat expressing complaints.

I don't really think these are the prevailing norms, especially not with regard to an adversary who has leveraged illegal threats of destroying millions of dollars of value to prevent negative information from getting out.

Separately from whether these are the norms, I think the EA community plays a role in society where being honest and accurate about our takes on other people is important. There were a lot of people who took what the EA community said about SBF and FTX seriously and this caused enormous harm. In many ways the EA community (and 80k in particular) are playing the role of a rating agency, and as a rating agency you need to be able to express negative ratings, otherwise you fail at your core competency.

As such, even if there are some norms in society about withholding negative information here, I think the EA and AI-safety communities in particular cannot hold themselves to these norms within the domains of their core competencies and responsibilities.

Owen Cotton-Barratt @ 2024-07-04T23:12 (+13)

I don't regard the norms as being about withholding negative information, but about trying to err towards presenting friendly frames while sharing what's pertinent, or something?

Honestly I'm not sure how much we really disagree here. I guess we'd have to concretely discuss wording for an org. In the case of OpenAI, I imagine it being appropriate to include some disclaimer like:

OpenAI is a frontier AI company. It has repeatedly expressed an interest in safety and has multiple safety teams. However, some people leaving the company have expressed concern that it is not on track to handle AGI safely, and that it wasn't giving its safety teams resources they had been promised. Moreover, it has a track record of putting inappropriate pressure on people leaving the company to sign non-disparagement agreements. [With links]

I largely agree with the rating-agency frame.

Habryka @ 2024-07-05T06:34 (+25)

I don't regard the norms as being about withholding negative information, but about trying to err towards presenting friendly frames while sharing what's pertinent, or something?

I agree with some definitions of "friendly" here, and disagree with others. I think there is an attractor here towards Orwellian language that is intentionally ambiguous about what it's trying to say, in order to seem friendly or non-threatening (because in some sense it is), and that kind of "friendly" seems pretty bad to me.

I think the paragraph you have would strike me as somewhat too Orwellian, though it's not too far off from what I would say. Something closer to what seems appropriate to me:

OpenAI is a frontier AI company, and as such it's responsible for substantial harm by assisting in the development of dangerous AI systems, which we consider among the biggest risks to humanity's future. In contrast to most of the jobs in our job board, we consider working at OpenAI more similar to working at a large tobacco company, hoping to reduce the harm that the tobacco company causes, or leveraging this specific tobacco company's expertise with tobacco to produce more competitive and less harmful variations of tobacco products.

To its credit, it has repeatedly expressed an interest in safety and has multiple safety teams, which are attempting to reduce the likelihood of catastrophic outcomes from AI systems.

However, many people leaving the company have expressed concern that it is not on track to handle AGI safely, that it wasn't giving its safety teams resources they had been promised, and that the leadership of the company is untrustworthy. Moreover, it has a track record of putting inappropriate pressure on people leaving the company to sign non-disparagement agreements. [With links]

We explicitly recommend against taking any roles not in computer security or safety at OpenAI, and consider those substantially harmful under most circumstances (though exceptions might exist).

I feel like this is currently a bit too "edgy" or something, and I would massage some sentences for longer, but it captures the more straightforward style that I think would be less likely to cause people to misunderstand the situation.

Owen Cotton-Barratt @ 2024-07-05T08:35 (+13)

So it may be that we just have some different object-level views here. I don't think I could stand behind the first paragraph of what you've written there. Here's a rewrite that would be palatable to me:

OpenAI is a frontier AI company, aiming to develop artificial general intelligence (AGI). We consider poor navigation of the development of AGI to be among the biggest risks to humanity's future. It is complicated to know how best to respond to this. Many thoughtful people think it would be good to pause AI development; others think that it is good to accelerate progress in the US. We think both of these positions are probably mistaken, although we wouldn't be shocked to be wrong. Overall we think that if we were able to slow down across the board that would probably be good, and that steps to improve our understanding of the technology relative to absolute progress with the technology are probably good. In contrast to most of the jobs in our job board, therefore, it is not obviously good to help OpenAI with its mission. It may be more appropriate to consider working at OpenAI as more similar to working at a large tobacco company, hoping to reduce the harm that the tobacco company causes, or leveraging this specific tobacco company's expertise with tobacco to produce more competitive and less harmful variations of tobacco products.

I want to emphasise that this difference is mostly not driven by a desire to be politically acceptable (although the inclusion/wording of the "many thoughtful people ..." clauses are a bit for reasons of trying to be courteous), but rather a desire not to give bad advice, nor to be overconfident on things.

Rohin Shah @ 2024-07-06T08:23 (+12)

... That paragraph doesn't distinguish at all between OpenAI and, say, Anthropic. Surely you want to include some details specific to the OpenAI situation? (Or do your object-level views really not distinguish between them?)

Owen Cotton-Barratt @ 2024-07-06T13:52 (+6)

I was just disagreeing with Habryka's first paragraph. I'd definitely want to keep content along the lines of his third paragraph (which is pretty similar to what I initially drafted).

Habryka @ 2024-07-05T17:07 (+4)

Yeah, this paragraph seems reasonable (I disagree, but like, that's fine, it seems like a defensible position).

Raemon @ 2024-07-05T17:57 (+2)

Yeah same. (although, this focuses entirely on their harm as an AI organization, and not manipulative practices)

I think it leaves the question "what actually is the above-the-fold-summary" (which'd be some kind of short tag).

D0TheMath @ 2024-07-04T17:43 (+14)

Otherwise I think that you are in part spending 80k's reputation in endorsing these organizations

Agree on this. For a long time I've had a very low opinion of 80k's epistemics[1] (both podcast, and website), and having orgs like OpenAI and Meta on there was a big contributing factor[2].

[1] In particular that they try to both present as an authoritative source on strategic matters concerning job selection, while not doing the necessary homework to actually claim such status & using articles (and parts of articles) that empirically nobody reads & I've found are hard to find to add in those clarifications, if they ever do.

[2] Probably second to their horrendous SBF interview.

calebp @ 2024-07-03T22:40 (+30)

I think this is a good policy and broadly agree with your position.

It's a bit awkward to mention, but as you've said that you've delisted other roles at OpenAI and that OpenAI has acted badly before - I think you should consider explicitly saying on the OpenAI job board cards that you don't necessarily endorse other roles at OpenAI and suspect that some other roles may be harmful.

I'm a little worried about people seeing OpenAI listed on the board and inferring that the 80k recommendation somewhat transfers to other roles at OpenAI (which, imo is a reasonable heuristic for most companies listed on the board - but fails in this specific case).

Lucie Philippon @ 2024-07-04T00:00 (+14)

I think this halo effect could be reduced by making small UI changes:

- Removing the OpenAI logo

- Replacing the "OpenAI" name in the search results by "Harmful frontier AI Lab" or similar

- Starting with a disclaimer on why this specific job might be good despite the overall org being bad

I would be all for a cleanup of 80k material to remove mentions of OpenAI as a place to improve the world.

calebp @ 2024-07-04T00:35 (+8)

The first two bullets don't seem like small UI changes to me; the second, in particular, seems too adversarial imo.

Raemon @ 2024-07-04T05:31 (+11)

fwiw I don't think replacing the OpenAI logo or name makes much sense.

I do think it's pretty important to actively communicate that even the safety roles shouldn't be taken at face value.

yanni kyriacos @ 2024-07-03T23:36 (+7)

I agree with your second point Caleb, which is also why I think 80k need to stop having OpenAI (or similar) employees on their podcast.

Why? Because employer brand Halo Effects are real and significant.

calebp @ 2024-07-04T00:31 (+8)

Fwiw, I don't think that being on the 80k podcast is much of an endorsement of the work that people are doing. I think the signal is much more like "we think this person is impressive and interesting", which is consistent with other "interview podcasts" (and I suspect that it's especially true of podcasts that are popular amongst 80k listeners).

I also think having OpenAI employees discuss their views publicly with smart and altruistic people like Rob is generally pretty great, and I would personally be excited for 80k to have more OpenAI employees (particularly if they are willing to talk about why they do/don't think AIS is important and talk about their AI worldview).

Having a line at the start of the podcast making it clear that they don't necessarily endorse the org the guest works for would mitigate most concerns - though I don't think it's particularly necessary.

Rebecca @ 2024-07-04T07:04 (+44)

I would agree with this if 80k didn’t make it so easy for the podcast episodes to become PR vehicles for the companies: some time back 80k changed their policy and now they send all questions to interviewees in advance, and let them remove any answers they didn’t like upon reflection. Both of these make it very straightforward for the companies’ PR teams to influence what gets said in an 80k podcast episode, and remove any confidence that you’re getting an accurate representation of the researcher’s views, rather than what the PR team has approved them to say.

Habryka @ 2024-07-04T06:56 (+28)

These still seem like potentially very strong roles with the opportunity to do very important work. We think it’s still good for the world if talented people work in roles like this!

I think given that these jobs involved being pressured via extensive legal blackmail into signing secret non-disparagement agreements that forced people to never criticize OpenAI, at great psychological stress and at substantial cost to many outsiders who were trying to assess OpenAI, I don't agree with this assessment.

Safety people have been substantially harmed by working at OpenAI, and safety work at OpenAI can have substantial negative externalities.

peterbarnett @ 2024-07-04T17:44 (+20)

Insofar as you are recommending the jobs but not endorsing the organization, I think it would be good to be fairly explicit about this in the job listing. The current short description of OpenAI seems pretty positive to me:

OpenAI is a leading AI research and product company, with teams working on alignment, policy, and security. You can read more about considerations around working at a leading AI company in our career review on the topic. They are also currently the subject of news stories relating to their safety work.

I think this should say something like "We recommend jobs at OpenAI because we think these specific positions may be high impact. We would not necessarily recommend working at other jobs at OpenAI (especially jobs which increase AI capabilities)."

I also don't know what to make of the sentence "They are also currently the subject of news stories relating to their safety work." Is this an allusion to the recent exodus of many safety people from OpenAI? If so, I think it's misleading and gives far too positive an impression.

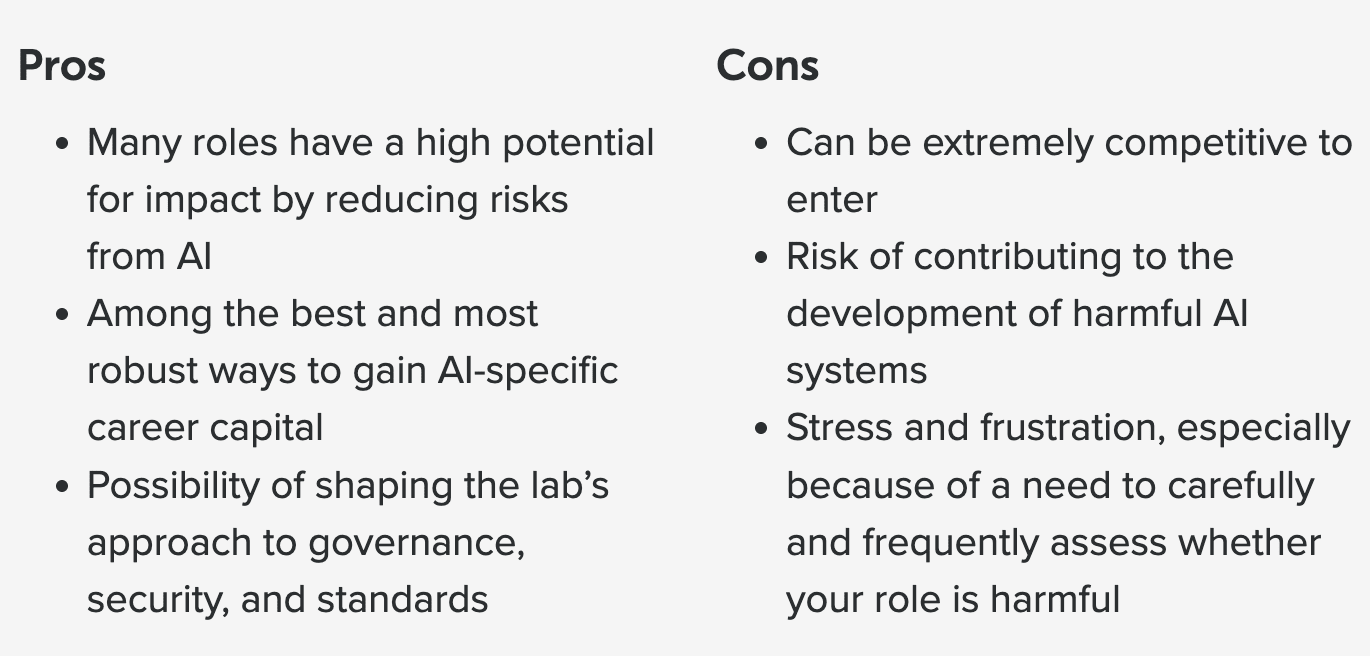

peterbarnett @ 2024-07-04T17:49 (+39)

Relatedly, I think that the "Should you work at a leading AI company?" article shouldn't start with a pros and cons list which sort of buries the fact that you might contribute to building extremely dangerous AI.

I think "Risk of contributing to the development of harmful AI systems" should at least be at the top of the cons list. But overall this sort of reminds me of my favorite graphic from 80k:

Rohin Shah @ 2024-07-06T08:29 (+9)

?? It's the second bullet point in the cons list, and reemphasized in the third bullet?

If you're saying "obviously this is the key determinant of whether you should work at a leading AI company so there shouldn't even be a pros / cons table", then obviously 80K disagrees given they recommend some such roles (and many other people (e.g. me) also disagree so this isn't 80K ignoring expert consensus). In that case I think you should try to convince 80K on the object level rather than applying political pressure.

Habryka @ 2024-07-06T17:06 (+15)

This thread feels like a fine place for people to express their opinion as a stakeholder.

Like, I don't even know how to engage with 80k staff on this on the object level, and seems like the first thing to do is to just express my opinion (and like, they can then choose to respond with argument).

Conor Barnes @ 2024-07-04T20:25 (+1)

(Copied from reply to Raemon)

Yeah, I think this needs updating to something more concrete. We put it up while ‘everything was happening’ but I’ve neglected to change it, which is my mistake, and I will probably prioritize fixing it over the next few days.

Yonatan Cale @ 2024-07-04T06:36 (+10)

Hey Conor!

Regarding

we don’t conceptualize the board as endorsing organisations.

And

contribute to solving our top problems or build career capital to do so

It seems like EAs expect the 80k job board to suggest high impact roles, and this has been a misunderstanding for a long time (consider looking at that post if you haven't). The disclaimers were always there, but EAs (including myself) still regularly looked at the 80k job board as a concrete path to impact.

I don't have time for a long comment, just wanted to say I think this matters.

Jeff Kaufman @ 2024-07-04T11:43 (+19)

I don't read those two quotes as in tension? The job board isn't endorsing organizations, it's endorsing roles. An organization can be highly net harmful while the right person joining to work on the right thing can be highly positive.

I also think "endorsement" is a bit too strong: the bar for listing a job shouldn't be "anyone reading this who takes this job will have significant positive impact" but instead more like "under some combinations of values and world models that the job board runners think are plausible, this job is plausibly one of the highest impact opportunities for the right person".

Yonatan Cale @ 2024-07-04T06:39 (+11)

My own intuition on what to do with this situation is to stop trying to change your reputation using disclaimers.

There's a lot of value in having a job board with high impact job recommendations. One of the challenging parts is getting a critical mass of people looking at your job board, and you already have that.

Rebecca @ 2024-07-04T07:42 (+4)

What are the relevant disclaimers here? Conor is saying 80k does think that alignment roles at OpenAI are impactful. Your article mentions the career development tag, but the roles under discussion don’t have that tag, right?

Yonatan Cale @ 2024-07-06T09:23 (+2)

- If Conor thinks these roles are impactful then I'm happy we agree on listing impactful roles. (The discussion on whether alignment roles are impactful is separate from what I was trying to say in my comment)

- If the career development tag is used (and is clear to typical people using the job board) then - again - seems good to me.

Rebecca @ 2024-07-06T16:39 (+3)

I’m still confused about what the misunderstanding is

Remmelt @ 2024-07-19T14:44 (+9)

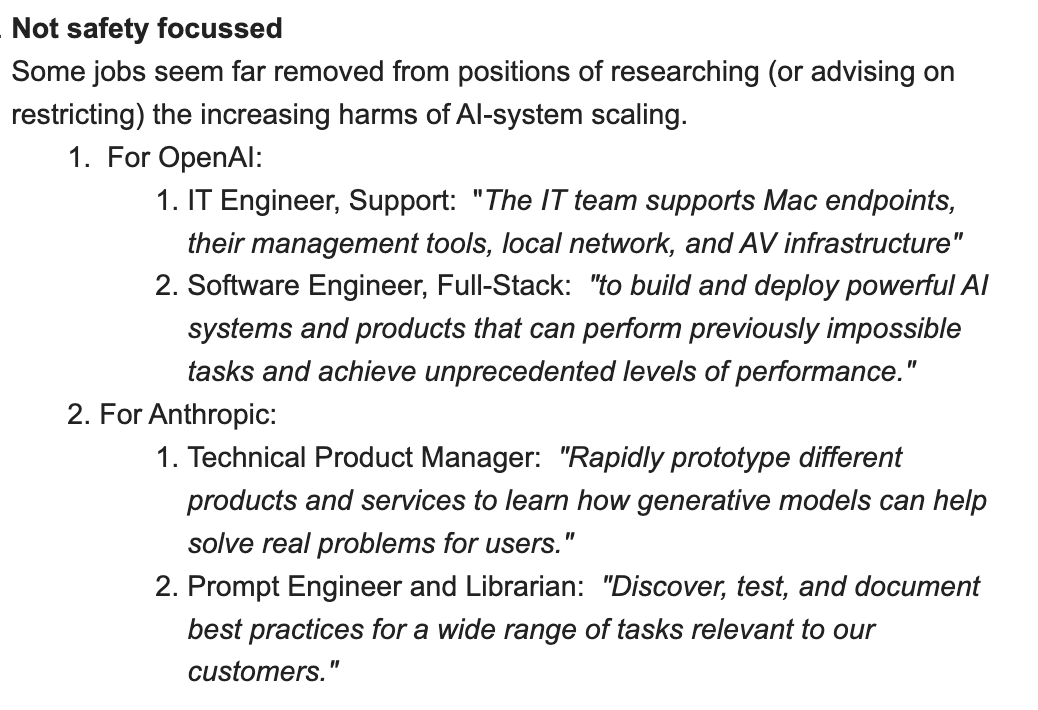

We used to list roles that seemed more tangentially safety-related, but because of our reduced confidence in OpenAI...

This misses aspects of what used to be 80k's position:

❝ In fact, we think it can be the best career step for some of our readers to work in labs, even in non-safety roles. That’s the core reason why we list these roles on our job board.

– Benjamin Hilton, February 2024

❝ Top AI labs are high-performing, rapidly growing organisations. In general, one of the best ways to gain career capital is to go and work with any high-performing team — you can just learn a huge amount about getting stuff done. They also have excellent reputations more widely. So you get the credential of saying you’ve worked in a leading lab, and you’ll also gain lots of dynamic, impressive connections.

– Benjamin Hilton, June 2023 - still on website

80k was listing some non-safety related jobs:

– From my email on May 2023:

– From my comment on February 2024:

Rebecca @ 2024-07-20T03:01 (+2)

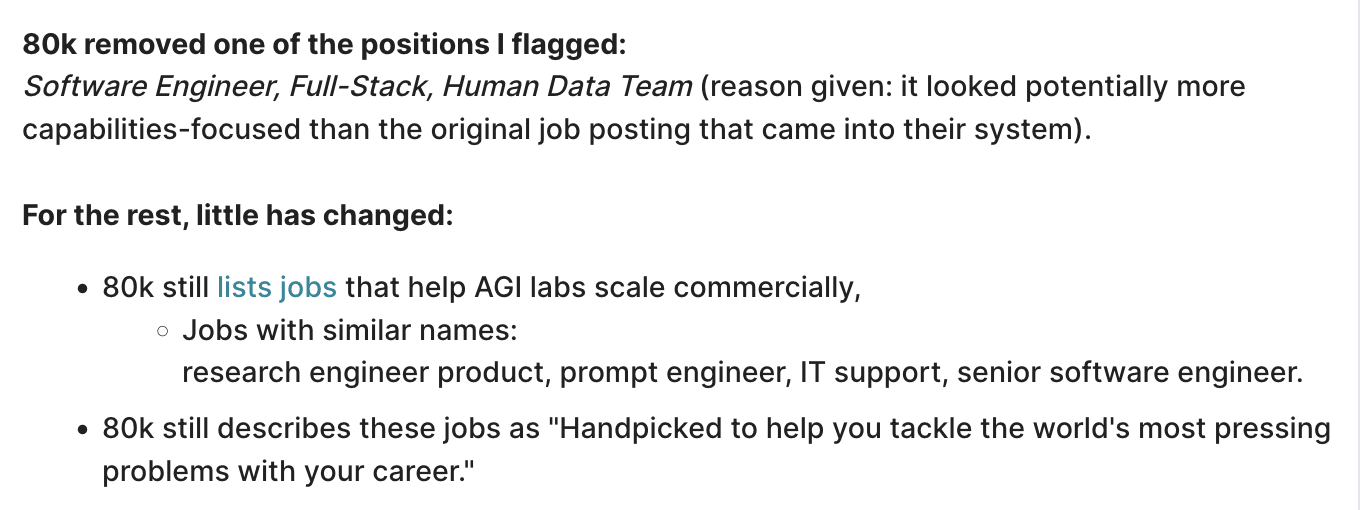

Where do they say the handpicked line?

Remmelt @ 2024-07-27T02:42 (+2)

Could you quote which line you mean? Then I can mention where you can find it back

Rebecca @ 2024-07-27T11:53 (+4)

It’s the last line in your second screenshot

Remmelt @ 2024-07-28T05:59 (+2)

They mentioned that line at the top of the 80k Job board.

Still do, I see.

“Handpicked to help you tackle the world's most pressing problems with your career.”

Rebecca @ 2024-07-04T07:28 (+8)

Echoing Raemon, it’s still a value judgement about an organisation to say that 80k believes that a given role is one where, as you say, “they can contribute to solving our top problems or build career capital to do so”. You are saying that you have sufficient confidence that the organisation is run well enough that someone with little context of internal politics and pressures that can’t be communicated via a job board can come in and do that job impactfully.

But such a person would be very surprised to learn that previous people in their role or similar ones at the company have not been able to do their job due to internal politics, lies, obfuscation etc, and that they may not be able to do even the basics of their job (see the broken promise of dedicated compute supply).

It’s difficult to even build career capital as a technical researcher when you’re not given the resources to do your job and instead find yourself having to upskill in alliance building and interpersonal psychology.

Raemon @ 2024-07-04T19:07 (+6)

I have slightly complex thoughts about the "is 80k endorsing OpenAI?" question.

I'm generally on the side of "let people make individual statements without treating it as a blanket endorsement."

In practice, I think the job postings will be read as an endorsement by many (most?) people. But I think the overall policy of "social-pressure people to stop making statements that could be read as endorsements" is net harmful.

I think you should at least be acknowledging the implication-of-endorsement as a cost you are paying.

I'm a bit confused about how to think about it here, because I do think listing people on the job site, with the sorts of phrasing you use, feels more like some sort of standard corporate political move than a purely epistemic move.

I do want to distinguish the question of "how does this job-ad funnel social status around?" from "does this job-ad communicate clearly?". I think it's still bad to force people to only speak words that can't be inaccurately read into, but, I think this is an important enough area to put extra effort in.

An accurate job posting, IMO, would say "OpenAI-in-particular has demonstrated that they do not follow through on safety promises, and we've seen people leave due to not feeling effectual."

I think you maybe both disagree with that object level fact (if so, I think you are wrong, and this is important), as well as, well, that'd be a hell of a weird job ad. Part of why I am arguing here is I think it looks, from the outside, like 80k is playing a slightly confused mix of relating to orgs politically and making epistemic recommendations.

I kind of expect at this point you to leave the job ad up, and maybe change the disclaimer slightly in a way that leaves some sort of plausibly-deniable veneer.

Conor Barnes @ 2024-08-08T18:09 (+42)

Hi there, I'd like to share some updates from the last month.

Text during last update (July 5)

- OpenAI is a leading AI research and product company, with teams working on alignment, policy, and security. We recommend specific positions at OpenAI that we think may be high impact. We do not necessarily recommend working at other jobs at OpenAI. You can read more about considerations around working at a leading AI company in our career review on the topic.

Text as of today:

- OpenAI is a frontier AI research and product company, with teams working on alignment, policy, and security. We post specific opportunities at OpenAI that we think may be high impact. We do not necessarily recommend working at other positions at OpenAI. You can read concerns about doing harm by working at a frontier AI company in our career review on the topic. Note that there have also been concerns around OpenAI's HR practices.

The thinking behind these updates has been:

- We continue to get negative updates concerning OpenAI, so it's good for us to update our guidance accordingly.

- While it's unclear exactly what's going on with the NDAs (are they cancelled or are they not?), it's pretty clear that it's in the interest of users to know there's something they should look into with regard to HR practices.

- We've tweaked the language to "concerns about doing harm" instead of "considerations" for all three frontier labs to indicate more strongly that these are potentially negative considerations to make before applying.

- We don't go into much detail for the sake of length / people not glazing over them -- my guess is that the current text is the right length to have people notice it and then look into it more with our newly updated AI company article and the Washington Post link.

This is thanks to discussions within 80k and thanks to some of the comments here. While I suspect, @Raemon, that we still don't align on important things, I nonetheless appreciate the prompt to think this through more and I believe that it has led to improvements!

Raemon @ 2024-08-08T18:25 (+14)

Yeah, this does seem like an improvement. I appreciate you thinking about it and making some updates.

Neel Nanda @ 2024-08-13T06:30 (+9)

This is a great update, thanks!

Re the "concerns around HR practices" link, I don't think that Washington Post article is the best thing to link. That focuses on clauses stopping people talking to regulators which, while very bad, seems less "holy shit WTF" to me than the threatening people's previously paid compensation over non-disparagements thing. I think the best article on that is Kelsey Piper's (though OpenAI have seemingly mostly released people from those and corresponding threats, and Kelsey's article doesn't link to follow-ups discussing those).

My metric here is roughly "if a friend of mine wanted to join OpenAI, what would I warn them about" rather than "which is objectively worse for the world", and I think 'they are willing to threaten millions of dollars of stock you have already been paid' is much more important to warn about.

Raemon @ 2024-08-13T18:19 (+3)

In the context of an EA jobs list it seems like both are pretty bad. (there's the "job list" part, and the "EA" part)

Neel Nanda @ 2024-08-13T22:17 (+5)

I'm pro including both, but was just commenting on which I would choose if only including one for space reasons

yanni kyriacos @ 2024-07-03T23:52 (+36)

I think an assumption 80k makes is something like "well if our audience thinks incredibly deeply about the Safety problem and what it would be like to work at a lab and the pressures they could be under while there, then we're no longer accountable for how this could go wrong. After all, we provided vast amounts of information on why and how people should do their own research before making such a decision".

The problem is, that is not how most people make decisions. No matter how much rational thinking is promoted, we're first and foremost emotional creatures that care about things like status. So, if 80k decides to have a podcast with the Superalignment team lead, then they're effectively promoting the work of OpenAI. That will make people want to work for OpenAI. This is an inescapable part of the Halo effect.

Lastly, 80k is explicitly targeting very young people who, no offense, probably don't have the life experience to imagine themselves in a workplace where they have to resist incredible pressures to not conform, such as not sharing interpretability insights with capabilities teams.

The whole exercise smacks of naivety and I'm very confident we'll look back and see it as an incredibly obvious mistake in hindsight.

Elizabeth @ 2024-07-07T00:53 (+34)

Alignment concerns aside, I think a job board shouldn't host companies that have taken already-earned compensation hostage. Especially without noting this fact. That's a primary thing about good employers, they don't retroactively steal stock they already gave you.

Jeff Kaufman @ 2024-07-08T11:40 (+7)

I agree that was pretty terrible behavior, but there are lots of anti-employee things an organization could do which are orthogonal (especially if you know this going in, which OpenAI employees previously didn't but we're talking about new ones here) to whether the work is impactful. There are lots of hard lines that seem like they would make sense, but I'm not in favor of them: at some point there will be a job worth listing where it really is very impactful despite serious downsides.

For example, I think good employers pay you enough for a reasonably comfortable life, but if, say, some key government role is extremely poorly paid it may still make sense to take it if you have savings you're willing to spend down to support yourself.

Or, I think graduate school is often pretty bad for people, where PIs have far more power than corporate world bosses, but while you should certainly think hard about this before going to grad school it's not determinative.

Elizabeth @ 2024-07-10T00:55 (+8)

No argument from me that it's sometimes worth it to take low paying or miserable jobs. But low pay isn't a surprise fact you learn years into working for a company, it's written right on the tin[1]. The issue for me isn't that OpenAI paid undermarket rates, it's that it lied about material facts of the job. You could put up a warning that OpenAI equity is ephemeral, but the bigger issue is that OpenAI can't be trusted to hold to any deal.

- ^

The power PIs hold can be a surprise, and I'm disappointed 80k's article on PhDs doesn't cover that issue.

Jeff Kaufman @ 2024-07-10T01:57 (+3)

the bigger issue is that OpenAI can't be trusted to hold to any deal

I agree that's a big issue and it's definitely a mark against it, but I don't think that should firmly rule out working there or listing it as a place EAs might consider working.

Elizabeth @ 2024-07-10T18:42 (+3)

I don't think the dishonesty entirely rules out working at OpenAI. Whether or not OpenAI safety positions should be on the 80k job board depends on the exact mission of the job board. I have my models, but let me ask you: who is it you think will have their plans changed for the better by seeing OpenAI safety positions[1] on 80k's board?

- ^

I'm excluding IS positions from this question because it seems possible someone skilled in IS would not think to apply to OpenAI. I don't see how anyone qualified for OpenAI safety positions could need 80k to inform them the positions exist.

Jeff Kaufman @ 2024-07-10T19:02 (+2)

I don't object to dropping OpenAI safety positions from the 80k job board on the grounds that the people who would be highly impactful in those roles don't need the job board to learn about them, especially when combined with the other factors we've been discussing.

In this subthread I'm pushing back on your broader "I think a job board shouldn't host companies that have taken already-earned compensation hostage".

Elizabeth @ 2024-07-11T19:43 (+2)

I still think the question of "who is the job board aimed at?" is relevant here, and would like to hear your answer.

Jeff Kaufman @ 2024-07-12T01:47 (+3)

As I tried to communicate in my previous comment, I'm not convinced there is anyone who "will have their plans changed for the better by seeing OpenAI safety positions on 80k's board", and am not arguing for including them on the board.

EDIT: after a bit of offline messaging I realize I misunderstood Elizabeth; I thought the parent comment was pushing me to answer the question posed in the great grandcomment but actually it was accepting my request to bring this up a level of generality and not be specific to OpenAI. Sorry!

I think the board should generally list jobs that, under some combinations of values and world models that the job board runners think are plausible, are plausibly one of the highest impact opportunities for the right person. I think in cases like working in OpenAI's safety roles where anyone who is the "right person" almost certainly already knows about the role, there's not much value in listing it but also not much harm.

I think this mostly comes down to a disagreement over how sophisticated we think job board participants are, and I'd change my view on this if it turned out that a lot of people reading the board are new-to-EA folks who don't pay much attention to disclaimers and interpret listing a role as saying "someone who takes this role will have a large positive impact in expectation".

If there did turn out to be a lot of people in that category I'd recommend splitting the board into a visible-by-default section with jobs where conditional on getting the role you'll have high positive impact in expectation (I'd biasedly put the NAO's current openings in this category) and a you-need-to-click-show-more section with jobs where you need to think carefully about whether the combination of you and the role is a good one.

Ben Millwood @ 2024-07-03T23:29 (+20)

Thanks for raising this. I'm kind of on board with 80k's current strategy, but I think it's useful to have a public discussion like this nevertheless.

To the extent the proposed move is a political / diplomatic move, how much leverage do we actually have here? Does OpenAI nontrivially value their jobs being listed in our job boards? Are they still concerned about having a good relationship with us at all at this point? If I were running the job board, I'd probably imagine no-one much at OpenAI really losing sleep over my decision either way, so I'd tend to do just whatever seemed best to me in terms of the direct consequences.

Raemon @ 2024-07-04T05:39 (+10)

I do basically agree we don't have bargaining power, and that they most likely don't care about having a good relationship with us.

The reason for the diplomatic "line of retreat" in the OP is more because:

- it's hard to be sure how adversarial a situation you're in, and it just seems like generally good practice to be clear on what would change your mind (in case you have overestimated the adversarialness)

- it's helpful for showing others, who might not share exactly my worldview, that I'm "playing fairly."

I'd probably imagine no-one much at OpenAI really losing sleep over my decision either way, so I'd tend to do just whatever seemed best to me in terms of the direct consequences.

I'm not sure about "direct consequences" being quite the right frame. I agree the particular consequence-route of "OpenAI changes their policies because of our pushback" isn't plausible enough to be worth worrying about, but, I think indirect consequences on our collective epistemics are pretty important.

Remmelt @ 2024-07-19T14:13 (+13)

I haven't shared this post with other relevant parties – my experience has been that private discussion of this sort of thing is more paralyzing than helpful.

Fourteen months ago, I emailed 80k staff with concerns about how they were promoting AGI lab positions on their job board.

The exchange:

- I offered specific reasons and action points.

- 80k staff replied by referring to their website articles about why their position on promoting jobs at OpenAI and Anthropic was broadly justified (plus they removed one job listing).

- Then I pointed out what those articles were specifically missing.

- Then staff stopped responding (except to say they were "considering prioritising additional content on trade-offs").

It was not a meaningful discussion.

Five months ago, I posted my concerns publicly. Again, 80k staff removed one job listing (why did they not double-check before?). Again, staff referred to their website articles as justification to keep promoting OpenAI and Anthropic safety and non-safety roles on their job board. Again, I pointed out what's missing or off about their justifications in those articles, with no response from staff.

It took the firing of the entire OpenAI superalignment team before 80k staff "tightened up [their] listings". That is, three years after the first wave of safety researchers left OpenAI.

80k is still listing 33 Anthropic jobs, even as Anthropic has clearly been competing to extend "capabilities" for over a year.

Raemon @ 2024-07-04T19:12 (+12)

I do want to acknowledge:

I refer to Jan Leike's and Daniel Kokotajlo's comments about why they left, and reference other people leaving the company.

I do think this is important evidence.

I want to acknowledge I wouldn't actually bet that Jan and Daniel would endorse everyone else leaving OpenAI, and only weakly bet that they'd endorse not leaving up the current 80k-ads as written.

I am grateful to them for having spoken up publicly, but I know that a reason people hesitate to speak publicly about this sort of thing is that it's easier for soundbite words to get taken and run away with by people arguing for positions stronger than you endorse, and I don't want them to regret that.

I know at least one person who has less negative (but mixed) feelings who left OpenAI for somewhat different reasons, and another couple people who still work at OpenAI I respect in at least some domains.

(I haven't chatted with either of them about this recently)

Chris Leong @ 2024-07-03T23:40 (+10)

I wonder if it would be worthwhile for a bunch of AI Safety societies at elite universities to make some kind of public commitment about something in this vein. This probably has more weight/influence than 80,000 Hours. However, while it would be more valuable if we were trying to influence them, it's less valuable since we probably don't have any plausibly satisfiable asks so long as Sam is there.

JackM @ 2024-07-04T18:27 (+5)

If OpenAI doesn't hire an EA they will just hire someone else. I'm not sure if you tackle this point directly (sorry if I missed it) but doesn't it straightforwardly seem better to have someone safety-conscious in these roles rather than someone who isn't safety-conscious?

To reiterate, it's not like if we remove these roles from the job board that they will less likely be filled. They would still definitely be filled, just by someone less safety-conscious in expectation. And I'm not sure the person who would get the role would be "less talented" in expectation because there are just so many talented ML researchers - so I'm not sure removing roles from the job board would slow down capabilities development much if at all.

I get a sense that your argument is somewhat grounded in deontology/virtue ethics (i.e. "don't support a bad organization") but perhaps not so much in terms of consequentialism?

Raemon @ 2024-07-04T18:50 (+11)

I attempted to address this in the "Isn't it better to have alignment researchers working there, than not? Are you sure you're not running afoul of misguided purity instincts?" FAQ section.

I think the evidence we have from OpenAI is that it isn't very helpful to "be a safety conscious person there." (i.e. combo of people leaving who did not find it tractable to be helpful there, and NDAs making it hard to reason about, and IMO better to default assume bad things rather than good things given the NDAs)

I think it's especially not helpful if you're a low-context person, who reads an OpenAI job board posting, and isn't going in with a specific plan to operate in an adversarial environment.

If the job posting literally said "to be clear, OpenAI has a pretty bad track record and seems to be an actively misleading environment, take this job if you are prepared to deal with that", that'd be a different story. (But, that's also a pretty weird job ad, and OpenAI would be rightly skeptical of people coming from that funnel. I think taking jobs at OpenAI that are net helpful to the world requires a mix of a very strong moral and epistemic backbone, and nonetheless still able to make good faith positive sum trades with OpenAI leadership. Most of the people I know who maybe had those skills have left the company)

I expect the object-level impact of a person joining OpenAI to be slightly harmful on net (although realistically close to neutral because of replaceability effects). I expect them to be slightly harmful on net because OpenAI is good at hiring competent people, and good at funneling them into harmful capabilities work. So, the fact that you got hired is evidence you are slightly better at it than the next person.

JackM @ 2024-07-04T19:19 (+1)

I think the evidence we have from OpenAI is that it isn't very helpful to "be a safety conscious person there."

It's insanely hard to have an outsized impact in this world. Of course it's hard to change things from inside OpenAI, but that doesn't mean we shouldn't try. If we succeed it could mean everything. You're probably going to have lower expected value pretty much anywhere else IMO, even if it does seem intractable to change things at OpenAI.

I think it's especially not helpful if you're a low-context person, who reads an OpenAI job board posting, and isn't going in with a specific plan to operate in an adversarial environment.

Surely this isn't the typical EA though?

Raemon @ 2024-07-04T19:21 (+3)

Surely this isn't the typical EA though?

I think job ads in particular are a filter for "being more typical."

I expect the people who have a chance of doing a good job to be well connected to previous people who worked at OpenAI, with some experience under their belt navigating organizational social scenes while holding onto their own epistemics. I expect such a person to basically not need to see the job ad.

JackM @ 2024-07-04T19:25 (+2)

You're referring to job boards generally but we're talking about the 80K job board which is no typical job board.

I would expect someone who will do a good job to be someone going in wanting to stop OpenAI destroying the world. That seems to be someone who would read the 80K Hours job board. 80K is all about preserving the future.