I'm interviewing historian Ian Morris on the intelligence explosion — what should I ask him this time?

By Robert_Wiblin @ 2023-07-21T12:33 (+12)

Next week I'm again interviewing Ian Morris, historian and author of 'Why The West Rules—For Now'.

My first interview with him — Ian Morris on what big picture history teaches us — is one of the most popular interviews I've ever done.

In 'Why The West Rules—For Now' Ian argues in passing that studying long-term history should cause us to project some form of intelligence explosion/singularity during the 21st century, and that by 2200 humanity will either be extinct, or the world will be completely unrecognisable to those of us alive today.

He briefly describes the economic explosion idea in this talk from 12 years ago.

Ian is also the author of:

- 'Foragers, Farmers, and Fossil Fuels: How Human Values Evolve'

- 'War! What is it Good For? Conflict and the Progress of Civilization from Primates to Robots' and

- 'Geography Is Destiny: Britain and the World: A 10,000-Year History'.

What should I ask him?

Jackson Wagner @ 2023-07-21T18:05 (+7)

- Since "Why The West Rules" is pretty big on understanding history through quantifying trends and then trying to understand when/why/how important trends sometimes hit a ceiling or reverse, it might be interesting to ask some abstract questions about how much we can really infer from trend extrapolation, what dangers to watch out for when doing such analysis, etc. Or as Slate Star Codex entertainingly put it, "Does Reality Drive Straight Lines On Graphs, Or Do Straight Lines On Graphs Drive Reality?" How often are historical events overdetermined? (Why The West Rules is already basically about this question as applied to the Industrial Revolution. But I'd be interested in getting his take more broadly -- e.g., if the Black Death had never happened, would Europe have been overdue for some kind of pandemic or Malthusian population collapse eventually? And of course, looking forward, is our civilization's exponential acceleration of progress/capability/power overdetermined even if AI somehow turns out to be a dud? Or is it plausible that we'll hit some new ceiling of stagnation?)

- On the idea of an intelligence explosion in particular, it would be fascinating to get Morris's take on some of the prediction / forecasting frameworks that rationalists have been using to understand AI, like Tom Davidson's "compute-centric framework" (the Astral Codex Ten popularization that I read has a particularly historical flavor!), or Ajeya Cotra's Bio Anchors report. It would probably be way too onerous to ask Ian Morris to read through these reports before coming on the show, but maybe you could try to extract some key questions that would still make sense even without the full CCF or Bio Anchors framework?

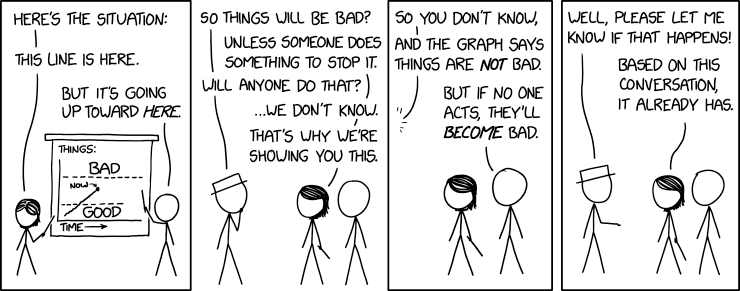

- Not sure exactly how to phrase this question, but I might also ask him how likely he thinks it is that the world will react to the threat of AI in appropriate ways -- will governments recognize the problem and cooperate to try and solve it? (As, for instance, governments were somewhat able to adapt to nuclear weapons, adopting an uneasy norm that all wars must now be "limited wars" and mostly cooperating on an international regime of nuclear nonproliferation enforcement.) Will public opinion recognize the threat of AI and help push government actions in a good direction before it's too late? In light of previous historical crises (pandemics both old and recent, world wars, nuclear weapons, climate change, etc.), to what extent does Morris think humanity will "rise to the challenge", versus how often do societies just run into a problem that is way beyond their state capacity to solve? TL;DR I would basically like you to ask Ian Morris about this xkcd comic:

- Personally if I had plenty of time, I would also shoot the shit about AI's near-term macro impacts on the economy. (Will the labor market see "technological unemployment" or will the economy run hotter than ever? Which sectors might see lots of innovation, versus which might be difficult for AI to have an impact on? etc.) I don't really expect Morris to have any special expertise here, but I am just personally confused about this and always enjoy hearing people's takes.

- Not AI-related, but it would be interesting to get a macro-historical perspective on the current geopolitical outlook and the trajectories of major powers -- China, the USA, Europe, Russia, India, etc. How does he see the relationship between China and the USA evolving over coming decades? What does the Ukraine war mean for Russia's long-term future? Will Europe ever be able to rekindle enough dynamism to catch back up with the USA? And how might these traditional geopolitical concerns affect and/or be affected by the impacts of an ongoing slow-takeoff intelligence explosion? (Again, not really expecting Morris to be an impeccable world-class geopolitical strategist, but he probably knows more than I do and it would be interesting to hear a macro-historical perspective.)

- Not a question to ask Ian Morris, but if you've read "Why The West Rules", you might appreciate this short (and loving) parody I wrote, chronicling a counterfactual Song Dynasty takeoff fueled by uranium ore: "Why The East Rules -- For Now".

Finally, thanks so much for running the 80,000 Hours podcast! I have really appreciated it over the years, and learned a lot.