Person-affecting intuitions can often be money pumped

By Rohin Shah @ 2022-07-07T12:23 (+94)

This is a short reference post for an argument I wish was better known. Note that it is primarily about person-affecting intuitions that normal people have, rather than a serious engagement with the population ethics literature, which contains many person-affecting views not subject to the argument in this post.

EDIT: Turns out there was a previous post making the same argument.

A common intuition people have is that our goal is "Making People Happy, not Making Happy People". That is:

- Making people happy: if some person Alice will definitely exist, then it is good to improve her welfare

- Not making happy people: it is neutral to go from "Alice won't exist" to "Alice will exist"[1]. Intuitively, if Alice doesn't exist, she can't care that she doesn't live a happy life, and so no harm was done.

This position is vulnerable to a money pump[2], that is, there is a set of trades that it would make that would achieve nothing and lose money with certainty. Consider the following worlds:

- World 1: Alice won't exist in the future.

- World 2: Alice will exist in the future, and will be slightly happy.

- World 3: Alice will exist in the future, and will be very happy.

(The worlds are the same in every other aspect. It's a thought experiment.)

Then this view would be happy to make the following trades:

- Receive $0.01[3] to move from World 1 to World 2 ("Not making happy people")

- Pay $1.00 to move from World 2 to World 3 ("Making people happy")

- Receive $0.01 to move from World 3 to World 1 ("Not making happy people")

The net result is to lose $0.98 to move from World 1 to World 1.
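The three trades above can be sketched as a small simulation. This is a minimal illustration, not part of the original argument: the welfare numbers, the one-dollar-per-welfare-unit exchange rate, and the names `ALICE_WELFARE`, `accepts`, and `run_pump` are all assumptions made for the example. The agent uses a local rule that evaluates each trade in isolation.

```python
from fractions import Fraction

# Alice's welfare in each world (None means she never exists).
# The numbers are illustrative assumptions; the post only says
# "slightly happy" and "very happy".
ALICE_WELFARE = {1: None, 2: Fraction(1), 3: Fraction(10)}


def accepts(current, target, payment):
    """Local rule: judge a single trade, ignoring any future trades.

    `payment` is the money received (negative when the agent pays).
    Assumption: one unit of welfare is worth one dollar to the agent.
    """
    before, after = ALICE_WELFARE[current], ALICE_WELFARE[target]
    if before is None or after is None:
        # "Not making happy people": creating or un-creating Alice
        # is neutral, so only the money matters.
        welfare_change = Fraction(0)
    else:
        # "Making people happy": Alice exists either way.
        welfare_change = after - before
    return welfare_change + payment > 0


# (from_world, to_world, money received) for trades 1, 2 and 3.
TRADES = [
    (1, 2, Fraction("0.01")),
    (2, 3, Fraction("-1.00")),
    (3, 1, Fraction("0.01")),
]


def run_pump(start=1):
    world, money = start, Fraction(0)
    for source, target, payment in TRADES:
        if world != source or not accepts(world, target, payment):
            break  # the pump stops as soon as a trade is declined
        world, money = target, money + payment
    return world, money


final_world, net_money = run_pump()
print(final_world, f"{float(net_money):.2f}")  # prints: 1 -0.98
```

Under these assumptions the agent accepts every trade, ends in the world it started in, and is $0.98 poorer.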

FAQ

Q. Why should I care if my preferences lead to money pumping?

This is a longstanding debate that I'm not going to get into here. I'd recommend Holden's series on this general topic, starting with Future-proof ethics.

Q. In the real world we'd never have such clean options to choose from. Does this matter at all in the real world?

See previous answer.

Q. What if we instead have <slight variant on a person-affecting view>?

Often these variants are also vulnerable to the same issue. For example, if you have a "moderate view" where making happy people is not worthless but is discounted by a factor of (say) 10, the same example works with slightly different numbers:

Let's say that "Alice is very happy" has an undiscounted worth of 2 utilons. Then you would be happy to (1) move from World 1 to World 2 for free, (2) pay 1 utilon to move from World 2 to World 3, and (3) receive 0.5 utilons to move from World 3 to World 1.
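The arithmetic for the moderate view can be checked with a short sketch. The value of 0.1 utilons for "slightly happy" is an assumption (the post does not give a number; anything between 0 and 1 works), and the function names are hypothetical.

```python
from fractions import Fraction

DISCOUNT = Fraction(1, 10)  # creating a happy person counts for 1/10

# Undiscounted worth of Alice's life in each world (None = no Alice).
# World 3's value of 2 is from the post; World 2's 0.1 is assumed.
ALICE_WELFARE = {1: None, 2: Fraction(1, 10), 3: Fraction(2)}


def value_of_move(current, target):
    """Value the moderate view assigns to moving between two worlds."""
    before, after = ALICE_WELFARE[current], ALICE_WELFARE[target]
    if before is None and after is None:
        return Fraction(0)
    if before is None:
        return DISCOUNT * after    # creating Alice: discounted gain
    if after is None:
        return -DISCOUNT * before  # un-creating Alice: discounted loss
    return after - before          # Alice exists either way: full weight


# (from_world, to_world, utilons received) for steps (1), (2), (3).
TRADES = [
    (1, 2, Fraction(0)),
    (2, 3, Fraction(-1)),
    (3, 1, Fraction(1, 2)),
]


def run_trades(start=1):
    world, utilons = start, Fraction(0)
    for source, target, payment in TRADES:
        # Stop if the trade is not strictly attractive to the agent.
        if world != source or value_of_move(source, target) + payment <= 0:
            break
        world, utilons = target, utilons + payment
    return world, utilons


final_world, net_utilons = run_trades()
print(final_world, net_utilons)  # prints: 1 -1/2
```

With these numbers each step looks strictly good in isolation (by 0.01, 0.9, and 0.3 utilons respectively), yet the agent ends up back in World 1 having paid a net 0.5 utilons.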

The philosophical literature does consider person-affecting views to which this money pump does not apply. I've found these views to be unappealing for other reasons but I have not considered all of them and am not an expert in the topic.

If you're interested in this topic, Arrhenius proves an impossibility result that applies to all possible population ethics (not just person-affecting views), so you need to bite at least one bullet.

EDIT: Adding more FAQs based on comments:

Q. Why doesn't this view anticipate that trade 2 will be available, and so reject trade 1?

You can either have a local decision rule that doesn't take into account future actions (and so excludes this sort of reasoning), or you can have a global decision rule that selects an entire policy at once. I'm talking about the local kind.

You could have a global decision rule that compares worlds and ignores happy people who don't exist in all worlds. In that case you avoid this money pump, but have other problems -- see Chapter 4 of On the Overwhelming Importance of Shaping the Far Future.

You could also take the local decision rule and try to turn it into a global decision rule by giving it information about what decisions it would make in the future. I'm not sure how you'd make this work but I don't expect great results.

Q. This is a very consequentialist take on person-affecting views. Wouldn't a non-consequentialist version (e.g. this comment) make more sense?

Personally I think of non-consequentialist theories as good heuristics that approximate the hard-to-compute consequentialist answer, and so I often find them irrelevant when thinking about theories applied in idealized thought experiments. If you are instead sympathetic to non-consequentialist theories as being the true answer, then the argument in this post probably shouldn't sway you too much. If you are in a real-world situation where you have person-affecting intuitions, those intuitions are there for a reason and you probably shouldn't completely ignore them until you know that reason.

Q. Doesn't total utilitarianism also have problems?

Yes! While I am more sympathetic to total utilitarianism than person-affecting views, this post is just a short reference post about one particular argument. I am not defending claims like "this argument demolishes person-affecting views" or "total utilitarianism is the correct theory" in this post.

Further resources

- On the Overwhelming Importance of Shaping the Far Future (Nick Beckstead's thesis)

- An Impossibility Theorem for Welfarist Axiologies (Arrhenius paradox, summarized in Section 2 of Impossibility and Uncertainty Theorems in AI Value Alignment)

[1] For this post I'll assume that Alice's life is net positive, since "asymmetric" views say that if Alice would have a net negative life, then it would be actively bad (rather than neutral) to move Alice from "won't exist" to "will exist".

[2] A previous version of this post incorrectly called this a Dutch book.

[3] By giving it $0.01, I'm making it so that it strictly prefers to take the trade (rather than being indifferent to the trade, as it would be if there was no money involved).

elliottthornley @ 2022-07-08T11:48 (+35)

My impression is that each family of person-affecting views avoids the Dutch book here.

Here are four families:

(1) Presentism: only people who presently exist matter.

(2) Actualism: only people who will exist (in the actual world) matter.

(3) Necessitarianism: only people who will exist regardless of your choice matter.

(4) Harm-minimisation views (HMV): Minimize harm, where harm is the amount by which a person's welfare falls short of what it could have been.

Presentists won't make trade 2, because Alice doesn't exist yet.

Actualists can permissibly turn down trade 3, because if they turn down trade 3 then Alice will actually exist and her welfare matters.

Necessitarians won't make trade 2, because it's not the case that Alice will exist regardless of their choice.

HMVs won't make trade 1, because Alice is harmed in World 2 but not World 1.

Rohin Shah @ 2022-07-08T21:55 (+6)

I agree that most philosophical literature on person-affecting views ends up focusing on transitive views that can't be Dutch booked in this particular way (I think precisely because not many people want to defend intransitivity).

I think the typical person-affecting intuitions that people actually have are better captured by the view in my post than by any of these four families of views, and that's the audience to which I'm writing. This wasn't meant to be a serious engagement with the population ethics literature; I've now signposted that more clearly.

EDIT: I just ran these positions (except actualism, because I don't understand how you make decisions with actualism) by someone who isn't familiar with population ethics, and they found all of them intuitively ridiculous. They weren't thrilled with the view I laid out but they did find it more intuitive.

elliottthornley @ 2022-07-11T10:26 (+3)

Okay, that seems fair. And I agree that the Dutch book is a good argument against the person-affecting intuitions you lay out. But the argument only shows that people initially attracted to those person-affecting intuitions should move to a non-Dutch-bookable person-affecting view. If we want to move people away from person-affecting views entirely, we need other arguments.

The person-affecting views endorsed by philosophers these days are more complex than the families I listed. They're not so intuitively ridiculous (though I think they still have problems; I have a couple of draft papers on this).

Also a minor terminological note, you've called your argument a Dutch book and so have I. But I think it would be more standard to call it a money pump. Dutch books are a set of gambles all taken at once that are guaranteed to leave a person worse off. Money pumps are a set of trades taken one after the other that are guaranteed to leave a person worse off.

Rohin Shah @ 2022-07-11T13:03 (+7)

If we want to move people away from person-affecting views entirely, we need other arguments.

Fwiw, I wasn't particularly trying to do this. I'm not super happy with any particular view on population ethics and I wouldn't be that surprised if the actual view I settled on after a long reflection was pretty different from anything that exists today, and does incorporate something vaguely like person-affecting intuitions.

I mostly notice that people who have some but not much experience with longtermism are often very aware of the Repugnant Conclusion and other objections to total utilitarianism, and conclude that actually person-affecting intuitions are the right way to go. In at least two cases they seemed to significantly reconsider when I presented this argument. It seems to me like, amongst the population of people who haven't engaged with the population ethics literature, critiques of total utilitarianism are much better known than critiques of person-affecting intuitions. I'm just trying to fix that discrepancy.

Also a minor terminological note, you've called your argument a Dutch book and so have I. But I think it would be more standard to call it a money pump.

Thanks, I've changed this.

elliottthornley @ 2022-07-12T11:02 (+24)

I'm just trying to fix that discrepancy.

I see. That seems like a good thing to do.

Here's another good argument against person-affecting views that can be explained pretty simply, due to Tomi Francis.

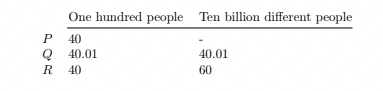

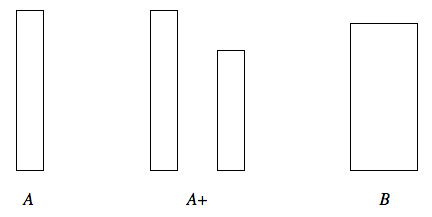

Person-affecting views imply that it's not good to add happy people. But Q is better than P, because Q is better for the hundred already-existing people, and the ten billion extra people in Q all live happy lives. And R is better than Q, because moving to R makes one hundred people's lives slightly worse and ten billion people's lives much better. Since betterness is transitive, R is better than P. R and P are identical except for the extra ten billion people living happy lives in R. Therefore, it's good to add happy people, and person-affecting views are false.

MichaelStJules @ 2022-09-08T18:40 (+3)

There are also Parfit's original Mere Addition argument and Huemer's Benign Addition argument for the Repugnant Conclusion. They're the familiar A≤A+<B arguments, adding a large marginally positive welfare population, and then redistributing the welfare evenly, except with Huemer's, A<A+, strictly, because those in A are made slightly better off in A+.

Huemer's is here: https://philpapers.org/rec/HUEIDO

I think this kind of argument can be used to show that actualism endorses the RC and Very RC in some cases, because the original world without the extra people does not maximize "self-conditional value" (if the original people in A are better off in A+, via benign addition), whereas B does, using additive aggregation.

I think the Tomi Francis example also only has R maximizing self-conditional value, among the three options, when all three are available. And we could even make the original 100 people worse off than 40 each in R, and this would still hold.

Voting methods extending from pairwise comparisons don't seem to avoid the problem either: https://forum.effectivealtruism.org/posts/fqynQ4bxsXsAhR79c/teruji-thomas-the-asymmetry-uncertainty-and-the-long-term?commentId=ockB2ZCyyD8SfTKtL

I guess HMVs, presentist and necessitarian views may work to avoid the RC and VRC, but AFAICT, you only get the procreation asymmetry by assuming some kind of asymmetry with these views. And they all have some pretty unusual prescriptions I find unintuitive, even as someone very sympathetic to person-affecting views.

Frick’s conditional interests still seem promising and could maybe be used to justify the procreation asymmetry for some kind of HMV or negative axiology.

Rohin Shah @ 2022-07-13T07:44 (+3)

Nice, I hadn't seen this argument before.

MichaelStJules @ 2022-07-08T17:55 (+3)

This all seems right if all the trades are known to be available ahead of time and we're making all these decisions before Alice would be born. However, we can specify things slightly differently.

Presentists and necessitarians who have made trade 1 will make trade 2 if it's offered after Alice is born, but then they can turn down trade 3 at that point, as trade 3 would mean killing Alice or an impossible world where she was never born. However, if they anticipate trade 2 being offered after Alice is born, then I think they shouldn't make trade 1, since they know they'll make trade 2 and end up in World 3 minus some money, which is worse than World 1 for presently existing people and necessary people before Alice is born.

HMVs would make trade 1 if they don't anticipate trade 2/World 3 minus some money being an option, but end up being wrong about that.

Ben Millwood @ 2024-03-20T16:30 (+2)

However, if they anticipate trade 2 being offered after Alice is born, then I think they shouldn't make trade 1, since they know they'll make trade 2 and end up in World 3 minus some money, which is worse than World 1 for presently existing people and necessary people before Alice is born.

I think it's pretty unreasonable for an ethical system to:

- change its mind about whether something is good or bad, based only on time elapsing, without having learned anything new (say, you're offered trade 2, and you know that Alice's mother has just gone into labour, and now you want to call the hospital to find out if she's given birth yet? or you made trade 2 ten years ago, and it was a mistake if Alice is 8 years old now, but not if she's 12?)

- as a consequence, act to deliberately frustrate its own future choices, so that it will be later unable to pick some option that would have seemed the best to it

I haven't come up with much of an argument beyond incredulity, but I nevertheless find myself incredulous.

(I'm mindful that this comment is coming 2 years later and some things have happened in between. I came here by looking at the person-affecting forum wiki tag after feeling that not all of my reasons for rejecting such views were common knowledge.)

MichaelStJules @ 2024-03-21T02:43 (+2)

Only presentists have the problem in the first bullet with your specific example.

There's a similar problem that necessitarians have if the identity of the extra person isn't decided yet, i.e. before conception. However, they do get to learn something new, i.e. the identity. If a necessitarian knew the identity ahead of time, there would be no similar problem. (And you can modify the view to be insensitive to the identity of the child by matching counterparts across possible worlds.)

The problem in the second bullet, basically against burning bridges or "resolute choice", doesn't seem that big of a deal to me. You run into similar problems with Parfit's hitchhiker and unbounded utility functions.

Maybe I can motivate this better? Say you want to have a child, but being a good parent (and ensuring high welfare for your child) seems like too much trouble and seems worse to you than not having kids, even though, conditional on having a child, it would be best.

Your options are:

1. No child.

2. Have a child, but be a meh parent. You're better off than in 1, and the child has a net positive but just okay life.

3. Have a child, but work much harder to be a good parent. You're worse off than in 2, but the child is much better off than in 2, and this outcome is better than 2 in a pairwise comparison.

In binary choices:

- 1 < 2, because 2 is better for you and no worse for your child (person-affecting).

- 2 < 3, impartially by assumption.

- 3 < 1, because 1 is better for you and no worse for your child (person-affecting).

With all three options available, I'd opt for 1, because 2 wouldn't be impartially permissible if 3 is available, and I prefer 1 to 3. 2 is not really an option if 3 is available.

It seems okay for me to frustrate my own preference for 2 over 1 in order to avoid 3, which is even worse for me than 1. No one else is worse off for this (in a person-affecting way); the child doesn't exist to be worse off, so has no grounds for complaint. So it seems to me to be entirely my own business.
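The structure of this example (a cycle in pairwise comparisons, plus a choice among all three options that depends on which alternatives are available) can be sketched in a few lines. This is only an illustration under stated assumptions: the encoding of the verdicts as `(worse, better)` pairs and the names `PAIRWISE`, `has_cycle`, and `choose` are hypothetical, and the rule that option 2 is ruled out whenever option 3 is available is hard-coded from the reasoning above.

```python
# Pairwise verdicts from the example, written as (worse, better).
PAIRWISE = {(1, 2), (2, 3), (3, 1)}


def has_cycle(pairs):
    """Return True if the 'is worse than' relation contains a cycle."""
    better_than = {}
    for worse, better in pairs:
        better_than.setdefault(worse, set()).add(better)

    def reachable(start, goal, seen):
        for nxt in better_than.get(start, ()):
            if nxt == goal:
                return True
            if nxt not in seen:
                seen.add(nxt)
                if reachable(nxt, goal, seen):
                    return True
        return False

    return any(reachable(option, option, set()) for option in better_than)


def choose(available):
    """Pick the options that survive when `available` is the full menu."""
    permissible = set(available)
    # Option 2 is treated as impermissible whenever option 3 is available.
    if 2 in permissible and 3 in permissible:
        permissible.discard(2)
    # Keep options that no remaining option beats in a pairwise comparison.
    return sorted(
        option
        for option in permissible
        if not any((option, other) in PAIRWISE for other in permissible)
    )


print(has_cycle(PAIRWISE))  # prints: True
print(choose({1, 2, 3}))    # prints: [1]
print(choose({1, 2}))       # prints: [2]
```

The choice between 1 and 2 flips depending on whether 3 is on the menu, which is the dependence on seemingly irrelevant alternatives discussed elsewhere in this thread.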

elliottthornley @ 2022-07-11T09:53 (+1)

Agreed

Michael_Wiebe @ 2022-07-08T16:46 (+3)

Is the difference between actualism and necessitarianism that actualism cares about both (1) people who exist as a result of our choices, and (2) people who exist regardless of our choices; whereas necessitarianism cares only about (2)?

elliottthornley @ 2022-07-11T09:54 (+3)

Yup!

Michael_Wiebe @ 2022-07-11T16:31 (+3)

Hm, then I find necessitarianism quite strange. In practice, how do we identify people who exist regardless of our choices?

elliottthornley @ 2022-07-12T11:18 (+5)

I think in ordinary cases, necessitarianism ends up looking a lot like presentism. If someone presently exists, then they exist regardless of my choices. If someone doesn't yet exist, their existence likely depends on my choices (there's probably something I could do to prevent their existence).

Necessitarianism and presentism do differ in some contrived cases, though. For example, suppose I'm the last living creature on Earth, and I'm about to die. I can either leave the Earth pristine or wreck the environment. Some alien will soon be born far away and then travel to Earth. This alien's life on Earth will be much better if I leave the Earth pristine. Presentism implies that it doesn't matter whether I wreck the Earth, because the alien doesn't exist yet. Necessitarianism implies that it would be bad to wreck the Earth, because the alien will exist regardless of what I do.

MichaelStJules @ 2022-07-07T18:52 (+11)

More generally, Arrhenius proves an impossibility result that applies to all possible population ethics (not just person-affecting views), so (if you want consistency) you need to bite at least one of those bullets.

That result (The Impossibility Theorem), as stated in the paper, has some important assumptions not explicitly mentioned in the result itself which are instead made early in the paper and assume away effectively all person-affecting views before the 6 conditions are introduced. The assumptions are completeness, transitivity and the independence of irrelevant alternatives. You could extend the result to include incompleteness, intransitivity, dependence on irrelevant alternatives or being in principle Dutch bookable/money pumpable as alternative "bullets" you could bite on top of the 6 conditions. (Intransitivity, dependence on irrelevant alternatives and maybe incompleteness imply Dutch books/money pumps, so you could just add Dutch books/money pumps and maybe incompleteness.)

MichaelStJules @ 2022-07-07T19:25 (+6)

There are some other similar impossibility results that apply to I think basically all aggregative views, person-affecting or not (although there are non-aggregative views which avoid them). See Spears and Budolfson:

- https://philpapers.org/rec/BUDWTR

- http://www.stafforini.com/docs/Spears & Budolfson - Repugnant conclusions.pdf

The results are basically that all aggregative views in the literature allow small changes in individual welfares in a background population to outweigh the replacement of an extremely high positive welfare subpopulation with a subpopulation with extremely negative welfare, an extended very repugnant conclusion. The size and welfare levels of the background population, the size of the small changes and the number of small changes will depend on the exact replacement and view. The result is roughly:

For any positive welfare population and negative welfare population, there exists some background population + small average welfare changes to it such that the negative welfare population + the changes to the background population are preferred to the positive welfare population (without the changes to the background population).

This is usually through a much much larger number of small changes to the background population than the number of replaced individuals, or the small changes happening to individuals who are extremely prioritized (as in lexical views and some person-affecting views).

(I think the result actually also adds a huge marginally positive welfare population along with the negative welfare one, but I don't think this is necessary or very interesting.)

Rohin Shah @ 2022-07-07T20:05 (+4)

You could extend the result to include incompleteness, intransitivity, dependence on irrelevant alternatives or being in principle Dutch bookable/money pumpable as alternative "bullets" you could bite on top of the 6 conditions.

Yeah, this is what I had in mind.

JP Addison @ 2022-07-07T20:12 (+10)

Mod note: I've enabled agree-disagree voting on this thread. This is still in the experimental phase, see the first time we did so here. Still very interested in feedback.

RedStateBlueState @ 2022-07-07T12:46 (+10)

Maybe I have the wrong idea about what “person-affecting view” refers to, but I thought a person-affecting view was a non-consequentialist ideology that would not take trade 3, i.e. it is neutral about moving from no person to happy person but actively dislikes moving from happy person to no person.

Lukas_Gloor @ 2022-07-07T13:56 (+30)

Wouldn't the view dislike it if the happy person was certain to be born, but not in the situation where the happy person's existence is up to us? But I agree strongly with person-affecting views working best in a non-consequentialist framework!

I think I find step 1 the most dubious – Receive $0.01 to move from World 1 to World 2 ("Not making happy people").

If we know that world 3 is possible, we're accepting money for creating a person under conditions that are significantly worse than they could be. That seems quite bad even if Alice would rather exist than not exist.

My reply violates the independence of irrelevant(-seeming) alternatives condition. I think that's okay.

To give an example, imagine some millionaire (who uses 100% of their money selfishly) would accept $1,000 to bring a child into existence that will grow up reasonably happy but have a lot of struggles – let's say she'll only have the means of a bottom-10%-income American household. Seems bad if the millionaire could instead bring a child into existence that is better positioned to do well in life and achieve her goals!

Now imagine if a bottom-10%-income American family wants to bring a child into existence, and they will care for the child with all their resources (and are good parents, etc.). Then, it seems neutral rather than bad.

I think of person-affecting principles not as "parts of a consequentialist theory of value" but rather as part of a set of non-consequentialist principles – something like "population ethics as a set of appeals or principles by which newly created people/beings can hold their creators accountable."

Rohin Shah @ 2022-07-08T06:26 (+6)

Added an FAQ:

Q. This is a very consequentialist take on person-affecting views. Wouldn't a non-consequentialist version (e.g. this comment) make more sense?

Personally I think of non-consequentialist theories as good heuristics that approximate the hard-to-compute consequentialist answer, and so I often find them irrelevant when thinking about theories applied in idealized thought experiments. If you are instead sympathetic to non-consequentialist theories as being the true answer, then the argument in this post probably shouldn't sway you too much. If you are in a real-world situation where you have person-affecting intuitions, those intuitions are there for a reason and you probably shouldn't completely ignore them until you know that reason.

In your millionaire example, I think the consequentialist explanation is "if people generally treat it as bad when Bob takes action A with mildly good first-order consequences when Bob could instead have taken action B with much better first-order consequences, that creates an incentive through anticipated social pressure for Bob to take action B rather than A when otherwise Bob would have taken A rather than B".

(Notably, this reason doesn't apply in the idealized thought experiment where no one ever observes your decisions and there is no difference between the three worlds other than what was described.)

Lukas_Gloor @ 2022-07-08T08:28 (+3)

if people generally treat it as bad when Bob takes action A with mildly good first-order consequences when Bob could instead have taken action B with much better first-order consequences,

On my favored view, this isn't the case. I think of creating new people/beings as a special category.

I also am mostly on board with consequentialism applied to limited domains of ethics, but I'm against treating all of ethics under consequentialism, especially if people try to do the latter in a moral realist way where they look for a consequentialist theory that defines everyone's standards of ideally moral conduct.

I am working on a post titled "Population Ethics Without an Objective Axiology." Here's a summary from that post:

- The search for an objective axiology assumes that there’s a well-defined “impartial perspective” that determines what’s intrinsically good/valuable. Within my framework, there’s no such perspective.

- Another way of saying this goes as follows. My framework conceptualizes ethics as being about goals/interests. [There are, I think, good reasons for this – see my post Dismantling Hedonism-inspired Moral Realism for why I object to ethics being about experiences, and my post Against Irreducible Normativity on why I don’t think ethics is about things that we can’t express in non-normative terminology.] Goals can differ between people and there’s no correct goal for everyone to adopt.

- In fixed-population contexts, a focus on goals/interests can tell us exactly what to do: we best benefit others by doing what these others (people/beings) would want us to do.

- In population ethics, this approach no longer works so well – it introduces ambiguities. Creating new people/beings changes the number of interests/goals to look out for. Relatedly, creating people/beings of type A instead of type B changes the types of interests/goals to look out for. In light of these options, a “focus on interests/goals” leaves many things under-defined.

- To gain back some clarity, we can note that population ethics has two separate perspectives: that of existing people/beings and that of newly created people/beings. (Without an objective axiology, these perspectives cannot be unified.)

- Population ethics from the perspective of existing people is analogous to settlers standing in front of a giant garden: There’s all this unused land and there’s a long potential future ahead of us – what do we want to do with it? How do we address various tradeoffs?

- In practice, newly created beings are at the whims of their creators. However, “might makes right” is not an ideal that altruistically-inclined/morally-motivated creators would endorse. Population ethics from the perspective of newly created people/beings is like a court hearing: newly created people/beings speak up for their interests/goals. (Newly created people/beings have the opportunity to appeal to their creators' moral motivations and altruism, or at least hold them accountable to some minimal standards of pro-social conduct.)

- The degree to which someone’s life goals are self-oriented vs. inspired by altruism/morality produces a distinction between minimalist morality and maximally ambitious morality. Minimalist morality is where someone respects both population-ethical perspectives sufficiently to avoid harm on both of them, while following self-oriented interests/goals otherwise. By contrast, effective altruists want to spend (at least a portion of) their effort and resources to “do what’s most moral/altruistic.” They’re interested in maximally ambitious morality.

- Without an objective axiology, the placeholder “do what’s most moral/altruistic” is under-defined. In particular, there’s a tradeoff where cashing out “doing what’s most moral/altruistic” primarily according to the perspective of existing people leaves less room for altruism on the second perspective (that of newly created people), and vice versa.

- Besides, what counts as “doing what’s most moral/altruistic” according to the second perspective is under-defined. Without an objective axiology, the interests of newly created people/beings depend on who we create. (E.g., some newly created people would rather not be created than be at a small risk of experiencing intense suffering; others would gladly take significant risks and care immensely about a chance of a happy existence. It is impossible to do right from both perspectives.)

--

Some more thoughts to help make the above intelligible:

I think there's an incongruence behind how people think of population ethics in the standard way. (The standard way being something like: look for an objective axiology, something that has "intrinsic value," then figure out how we are to relate to that value/axiology and whether to add extra principles around it.)

The following two beliefs seem incongruent:

- There’s an objective axiology

- People’s life goals are theirs to choose: they aren’t making a mistake of rationality if they don’t all share the same life goal

There’s a tension between these beliefs – if there was an objective axiology, wouldn’t the people who don’t orient their goals around that axiology be making a mistake?

I expect that many effective altruists would hesitate to say “One of you must be wrong!” when two people discuss their self-oriented life goals and one cares greatly about living forever and the other doesn’t.

The claim “people are free to choose their life goals” may not be completely uncontroversial. Still, I expect many effective altruists to already agree with it. To the degree that they do, I suggest they lean in on this particular belief and explore what it implies for comparing the “axiology first” framework to my framework “population ethics without an objective axiology.” I expect that leaning in on the belief “people are free to choose their life goals” makes my framework more intuitive and gives a better sense of what the framework is for, what it’s trying to accomplish.

To help understand what I mean by minimalist morality vs. maximally ambitious morality, I'll now give some examples of how to think about procreation. These will closely track common sense morality. I'll indicate for each example whether it arises from minimalist morality or some person's take on maximally ambitious morality. Some examples also arise from there not being an objective axiology:

- Parents are obligated to provide a very high standard of care for their children (principle from minimalist morality).

- People are free to decide against becoming parents (“there’s no objective axiology”).

- Parents are free to want to have as many children as possible (“there’s no objective axiology”), as long as the children are happy in expectation (principle from minimalist morality).

- People are free to try to influence other people’s moral stances and parenting choices (“there’s no objective axiology”) – for instance, Joanne could promote anti-natalism and Marianne could promote totalism (their respective interpretations of “doing what’s most moral/altruistic”) – as long as they remain within the boundaries of what is acceptable in a civil society (principle from minimalist morality).

So, what's the role for (something like) person-affecting principles in population ethics? Basically, if you only want minimalist morality and otherwise want to pursue self-oriented goals, person-affecting principles seem like a pretty good answer to "what should be ethical constraints to your option space for creating new people/beings." In addition, I think person-affecting principles have some appeal even for specific flavors of "doing what's most moral/altruistic," but only in people who lean toward interpretations of this that highlight benefitting people who already exist or will exist regardless of your actions. As I said in the bullet point summary, there's a tradeoff where cashing out “doing what’s most moral/altruistic” primarily according to the perspective of existing people leaves less room for altruism on the second perspective (that of newly created people), and vice versa. (For the "vice versa," note that, e.g., a totalist classical utilitarian would be leaving less room for benefitting already existing people. They would privilege an arguably defensible but certainly not 'objectively correct' interpretation of what it means to benefit newly created people, and they would lean in on that perspective more so than they lean into the perspective "What are existing people's life goals and how do I benefit them.")

Rohin Shah @ 2022-07-08T09:38 (+5)

I can't tell what you mean by an objective axiology. It seems to me like you're equivocating between a bunch of definitions:

- An axiology is objective if it is universally true / independent of the decision-maker / not reliant on goals / implied by math. (I'm pointing to a cluster of intuitions rather than giving a precise definition.)

- An axiology is objective if it provides a decision for every possible situation you could be in. (I would prefer to call this a "complete" axiology, perhaps.)

- An axiology is objective if its decisions can be computed by taking each world, summing some welfare function over all the people in that world, and choosing the decision that leads to the world with a higher number. (I would prefer to call this an "aggregative" axiology, perhaps.)

Examples of definition 1:

The search for an objective axiology assumes that there’s a well-defined “impartial perspective” that determines what’s intrinsically good/valuable. [...]

if there was an objective axiology, wouldn’t the people who don’t orient their goals around that axiology be making a mistake?

Examples of definition 2:

Without an objective axiology, the placeholder “do what’s most moral/altruistic” is under-defined. [...]

I think there's an incongruence behind how people think of population ethics in the standard way. (The standard way being something like: look for an objective axiology, something that has "intrinsic value," then figure out how we are to relate to that value/axiology and whether to add extra principles around it.)

Examples of definition 3:

we can note that population ethics has two separate perspectives: that of existing people/beings and that of newly created people/beings. (Without an objective axiology, these perspectives cannot be unified.)

I don't think I'm relying on an objective-axiology-by-definition-1. Any time I say "good" you can think of it as "good according to the decision-maker" rather than "objectively good". I think this doesn't affect any of my arguments.

It is true that I am imagining an objective-axiology-by-definition-2 (which I would perhaps call a "complete axiology"). I don't really see from your comment why this is a problem.

I agree this is "maximally ambitious morality" rather than "minimal morality". Personally if I were designing "minimal morality" I'd figure out what "maximally ambitious morality" would recommend we design as principles that everyone could agree on and follow, and then implement those. I'm skeptical that if I ran through such a procedure I'd end up choosing person-affecting intuitions in the sense of "Making People Happy, Not Making Happy People" (I think I plausibly would choose something along the lines of "if you create new people, make sure they have lives well-beyond-barely worth living"). Other people might differ from me, since they have different goals, but I suspect not.

I agree that if your starting point is "I want to ensure that people's preferences are satisfied" you do not yet have a complete axiology, and in particular there's an ambiguity about how to make decisions about which people to create. If this is your starting point then I think my post is saying "if you resolve this ambiguity in this particular way, you get Dutch booked". I agree that you could avoid the Dutch book by resolving the ambiguity as "I will only create individuals whose preferences I have satisfied as best as I can".

Lukas_Gloor @ 2022-07-08T12:37 (+5)

Personally if I were designing "minimal morality" I'd figure out what "maximally ambitious morality" would recommend we design as principles that everyone could agree on and follow, and then implement those.

I think this is a crux between us (or at least an instance where I didn't describe very well how I think of "minimal morality"). (A lot of the other points I’ve been making, I see mostly as “here’s a defensible alternative to Rohin’s view” rather than “here’s why Rohin is wrong to not find (something like) person-affecting principles appealing.”)

In my framework, it wouldn’t be fair to derive minimal morality from a specific take on maximally ambitious morality. People who want to follow some maximally ambitious morality (this includes myself) won’t all pick the same interpretation of what that means. Not just for practical reasons, but fundamentally: for maximally ambitious morality, different interpretations are equally philosophically defensible.

Some people may have the objection "Wait, if maximally ambitious morality is under-defined, why adopt confident and specific views for how you want things to be? Why not keep your views on it under-defined, too?” (See Richard Ngo’s post on Moral indefinability.) I have answered this objection in this section of my post The Moral Uncertainty Rabbit Hole, Fully Excavated. In short, I give an analogy between "doing what's maximally moral" and "becoming ideally athletically fit." In the analogy, someone grows up with the childhood dream of becoming “ideally athletically fit” in a not-further-specified way. They then have the insight that "becoming ideally athletically fit" has different defensible interpretations – e.g., the difference between a marathon runner and a 100m sprinter (or someone who is maximally fit in reducing heart attack risks – which are actually elevated for professional athletes!). Now, it is an open question for them whether to care about a specific interpretation of the target concept or whether to embrace under-definedness. My advice to them for resolving this question is “think about which aspects of fitness you feel most drawn to, if any.”

Minimal morality is the closest we can come to something “objective” in the sense that it’s possible for philosophically sophisticated reasoners to all agree on it (your first interpretation). (This is precisely because minimal morality is unambitious – it only tells us to not be jerks; it doesn’t give clear guidance for what else to do.)

Minimal morality will feel unsatisfying to anyone who finds effective altruism appealing, so we want to go beyond it in places. However, within my framework, we can only go beyond it by forming morality/altruism-inspired life goals that, while we try to make them impartial/objective, have to inevitably lock in subjective judgment calls. (E.g., “Given that you can’t be both at once, do you want to be maximally impartially altruistic towards existing people or towards newly created people?” or “Assuming the latter, given that different types of newly created people will have different views on what’s good or bad for them, how will you define for yourself what it means to maximally benefit (which?) newly created people?”)

It is true that I am imagining an objective-axiology-by-definition-2 (which I would perhaps call a "complete axiology"). I don't really see from your comment why this is a problem.

I agree it’s not a problem as long as you’re choosing that sort of success criterion (that you want a complete axiology) freely, rather than thinking it’s a forced move. (My sense is that you already don't think of it as a forced move, so I should have been more clear that I wasn't necessarily arguing against your views.)

I agree that if your starting point is "I want to ensure that people's preferences are satisfied" you do not yet have a complete axiology, and in particular there's an ambiguity about how to make decisions about which people to create. If this is your starting point then I think my post is saying "if you resolve this ambiguity in this particular way, you get Dutch booked". I agree that you could avoid the Dutch book by resolving the ambiguity as "I will only create individuals whose preferences I have satisfied as best as I can".

Yes, that describes it very well!

That said, I’m mostly arguing for a framework* for how to think about population ethics rather than a specific, object-level normative theory. So, I’m not saying the solution to population ethics is “existing people’s life goals get comparatively a lot of weight.” I’m only pointing out how that seems like a defensible position, given that the alternative would be to somewhat arbitrarily give them comparatively very little weight.

*By “framework,” I mean a set of assumptions for thinking about a domain, answering questions like “What am I trying to figure out?”, “What makes for a good solution?” and “What are the concepts I want to use to reason successfully about this domain?”

I can't tell what you mean by an objective axiology. It seems to me like you're equivocating between a bunch of definitions:

I like the three interpretations of "objective" that you distilled!

I use the word “objective” in the first sense, but you noted correctly that I’m arguing as though rejecting “there’s an objective axiology” in that sense implies other things, too. (I should make these hidden inferences explicit in future versions of the summary!)

I’d say I’ve been arguing hard against the first interpretation of “objective axiology” and softly against your second and third descriptions of desirable features of an axiology/"answer to population ethics."

By “arguing hard” I mean “anyone who holds this view is wrong.” By “arguing softly” I mean “that may be defensible, but there are other defensible alternatives.”

So, on the question of success criteria for answers to population ethics (whether we're looking for a complete axiology, per your 2nd description, and whether the axiology should "fall out" naturally from world states, rather than be specific to histories or to "who's the person with the choice?", per your 3rd description)... On those questions, I think it's perfectly defensible to end up with answers that satisfy each respective criterion, but I think it's important to keep the option space open while we're discussing population ethics within a community, so we aren't prematurely locking in that "solutions to population ethics" need to be of a specific form. (It shouldn't become uncool within EA to conceptualize things differently, if the alternatives are well formed / well argued.)

I think there's a practical effect where people who think “ethics is objective” (in the first sense) might prematurely restrict their option space. (This won't apply to you.) I think they're looking for the sort of (object-level) normative theories that can fulfill the steep demands of objectivity – theories that all philosophically sophisticated others could agree on despite the widespread differences in people’s moral intuitions. With this constraint, one is likely to view it as a positive feature that a theory is elegantly simple, even if it demands a lot of “bullet biting.” (Moral reasoners couldn’t agree on the same answer if they all relied too much on their moral intuitions, which are different from person to person.)

In other words, if we thought that morality was a coordination game where we try to guess what everyone else is trying to guess will be the answer everyone converges on (and we also have priors that the answer is about “altruism” and “impartiality”), then we’d come up with different solutions than if we started without the "coordination game" assumption.

In any case, theories that fit your second and third description tend to be simpler, so they're more appealing to people who endorse "ethics is objective" (in the first sense). That's the link I see between the three descriptions. It’s no coincidence that the examples I gave in my previous comment (moral issues around procreation) track common sense ethics. The less we think “morality is objective” (in the first sense), the more alternatives we have to biting specific bullets.

Rohin Shah @ 2022-07-07T13:54 (+6)

Yeah, you could modify the view I laid out to say that moving from "happy person" to "no person" has a disutility equal in magnitude to the welfare that the happy person would have had. This new view can't be Dutch booked because it never takes trades that decrease total welfare.

My objection to it is that you can't use it for decision-making because it depends on what the "default" is. For example, if you view x-risk reduction as preventing a move from "lots of happy people to no people" this view is super excited about x-risk reduction, but if you view x-risk reduction as a move from "no people to lots of happy people" this view doesn't care.

(You can make a similar objection to the view in the post though it isn't as stark. In my experience, people's intuitions are closer to the view in the post, and they find the Dutch book argument at least moderately convincing.)
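A minimal sketch of the modified view and its dependence on the default, under stated assumptions: welfare is measured in dollar-equivalent units, the welfare levels (1.0, 10.0, 100.0) are hypothetical, and the names `move_value` and `accepts` are illustrative rather than anything from the post.

```python
# Hypothetical welfare levels in dollar-equivalent units (assumptions for illustration).
SLIGHTLY_HAPPY = 1.0
VERY_HAPPY = 10.0


def move_value(welfare_before, welfare_after):
    """Value of a move under the modified view; None means the person does not exist."""
    if welfare_before is None:
        return 0.0  # creating a person (or leaving them non-existent) is neutral
    if welfare_after is None:
        return -welfare_before  # removing a happy person costs the welfare they would have had
    return welfare_after - welfare_before  # changing an existing person's welfare


def accepts(welfare_before, welfare_after, payment):
    """Accept a trade if the move's value plus the payment received is positive."""
    return move_value(welfare_before, welfare_after) + payment > 0


# The three trades from the post: the modified view refuses trade 3.
print(accepts(None, SLIGHTLY_HAPPY, 0.01))         # trade 1
print(accepts(SLIGHTLY_HAPPY, VERY_HAPPY, -1.00))  # trade 2
print(accepts(VERY_HAPPY, None, 0.01))             # trade 3

# The same x-risk intervention, valued under two different "defaults".
print(-move_value(100.0, None))  # default "lots of happy people": value of preventing their loss
print(move_value(None, 100.0))   # default "no people": value of bringing them about
```

With these numbers the view accepts trades 1 and 2 but refuses trade 3, and it assigns a large value to x-risk reduction under the first framing and zero under the second.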

Erich_Grunewald @ 2022-07-07T14:59 (+1)

My objection to it is that you can't use it for decision-making because it depends on what the "default" is. For example, if you view x-risk reduction as preventing a move from "lots of happy people to no people" this view is super excited about x-risk reduction, but if you view x-risk reduction as a move from "no people to lots of happy people" this view doesn't care.

That still seems somehow like a consequentialist critique though. Maybe that's what it is and was intended to be. Or maybe I just don't follow?

From a non-consequentialist point of view, whether a "no people to lots of happy people" move (like any other move) is good or not depends on other considerations, like the nature of the action, our duties or virtue. I guess what I want to say is that "going from state A to state B"-type thinking is evaluating world states in an outcome-oriented way, and that just seems like the wrong level of analysis for those other philosophies.

From a consequentialist point of view, I agree.

Rohin Shah @ 2022-07-07T15:04 (+2)

I totally agree this is a consequentialist critique. I don't think that negates the validity of the critique.

From a non-consequentialist point of view, whether a "no people to lots of happy people" move (like any other move) is good or not depends on other considerations, like the nature of the action, our duties or virtue. I guess what I want to say is that "going from state A to state B"-type thinking is evaluating world states in an outcome-oriented way, and that just seems like the wrong level of analysis for those other philosophies.

Okay, but I still don't know what the view says about x-risk reduction (the example in my previous comment)?

Erich_Grunewald @ 2022-07-07T15:23 (+1)

I don't think that negates the validity of the critique.

Agreed -- I didn't mean to imply it did.

Okay, but I still don't know what the view says about x-risk reduction (the example in my previous comment)?

By "the view", do you mean the consequentialist person-affecting view you argued against, or one of the non-consequentialist person-affecting views I alluded to?

If the former, I have no idea.

If the latter, I guess it depends on the precise view. On the deontological view I find pretty plausible we have, roughly speaking, a duty to humanity, and that'd mean actions that reduce x-risk are good (and vice versa). (I think there are also other deontological reasons to reduce x-risk, but that's the main one.) I guess I don't see any way that changes depending on what the default is? I'll stop here since I'm not sure this is even what you were asking about ...

Rohin Shah @ 2022-07-07T20:00 (+2)

Oh, to be clear, my response to RedStateBlueState's comment was considering a new still-consequentialist view, that wouldn't take trade 3. None of the arguments in this post are meant to apply to e.g. deontological views. I've clarified this in my original response.

RedStateBlueState @ 2022-07-07T14:05 (+1)

Right, the “default” critique is why people (myself included) are consequentialists. But I think the view outlined in this post is patently absurd and nobody actually believes it. Trade 3 means that you would have no reservations about killing a (very) happy person for a couple utilons!

Rohin Shah @ 2022-07-07T14:31 (+6)

Oh, the view here only says that it's fine to prevent a happy person from coming into existence, not that it's fine to kill an already existing person.

MichaelStJules @ 2022-07-07T14:57 (+8)

I don't actually think Dutch books and money pumps are very practically relevant in charitable/career decision-making. To the extent that they are, you should aim to anticipate others attempting to Dutch book or money pump you and model sequences of decisions, just like you should aim to anticipate any other manipulation or exploitation. EDIT: You don't need to commit to views or decision procedures which are in principle not Dutch bookable/money pumpable. Furthermore, "greedy" (as in "greedy algorithm") or short-sighted EV maximization is also suboptimal in general, since you should in general consider what options will be available in the future depending on your decisions.

Also, it's worth mentioning that, in principle, EV maximization with* unbounded utility/social welfare functions can be Dutch booked/money pumped and violate the sure-thing principle, so if such arguments undermine person-affecting views, they also undermine total utilitarianism. Or at least the typical EV-maximizing unbounded versions, but you can apply a bounded squashing function to the sum of utilities before taking expected values, which will then be incompatible with Harsanyi's theorem, one of the main arguments for utilitarianism. Or you can assume, I think unreasonably, fixed bounds to total value, or that only finitely many outcomes from any given choice are possible, (EDIT) or otherwise assign 0 probability to the problematic possibilities.

*Added in an edit.

Rohin Shah @ 2022-07-08T05:31 (+6)

I don't think the case for caring about Dutch books is "maybe I'll get Dutch booked in the real world". I like the Future-proof ethics series on why to care about these sorts of theoretical results.

I definitely agree that there are issues with total utilitarianism as well.

Derek Shiller @ 2022-07-07T15:28 (+4)

unbounded social welfare functions can be Dutch booked/money pumped and violate the sure-thing principle

Do you have an example?

MichaelStJules @ 2022-07-07T16:15 (+3)

See this comment by Paul Christiano on LW based on St. Petersburg lotteries (and my reply).

Derek Shiller @ 2022-07-07T16:45 (+1)

Interesting. It reminds me of a challenge for denying countable additivity:

God runs a lottery. First, he picks two integers at random (each integer has an equal and 0 probability of being picked, violating countable additivity.) Then he shows one of the two at random to you. You know in advance that there is a 50% chance you'll see the higher one (maybe he flips a coin), but no matter what it is, after you see it you'll be convinced it is the lower one.

I'm inclined to think that this is a problem with infinities in general, not with unbounded utility functions per se.

MichaelStJules @ 2022-07-07T22:41 (+2)

I'm inclined to think that this is a problem with infinities in general, not with unbounded utility functions per se.

I think it's a problem for the conjunction of allowing some kinds of infinities and doing expected value maximization with unbounded utility functions. I think EV maximization with bounded utility functions isn't vulnerable to "isomorphic" Dutch books/money pumps or violations of the sure-thing principle. E.g., you could treat the possible outcomes of a lottery as all local parts of a larger single universe to aggregate, but then conditioning on the outcome of the first St. Petersburg lottery and comparing to the second lottery would correspond to comparing a local part of the first universe to the whole of the second universe, but the move from the whole first universe to the local part of the first universe can't happen via conditioning, and the arguments depend on conditioning.

Bounded utility functions have problems that unbounded utility functions don't, but these are in normative ethics and about how to actually assign values (including in infinite universes), not about violating plausible axioms of (normative) rationality/decision theory.

RedStateBlueState @ 2022-07-07T16:31 (+1)

After reading the linked comment I think the view that total utilitarianism can be Dutch booked is fairly controversial (there is another unaddressed comment I quite agree with), and on a page like this one I think it's misleading to state as fact in a comment that total utilitarianism can be Dutch booked in a similar way that person-affecting views can be Dutch booked.

MichaelStJules @ 2022-07-07T16:52 (+2)

I should have specified EV maximization with an unbounded social welfare function, although the argument applies somewhat more generally; I've edited this into my top comment.

Looking at Slider's reply to the comment I linked, assuming that's the one you meant (or did you have another in mind?):

- Slider probably misunderstood Christiano about truncation, because Christiano meant that you'd truncate the second lottery at a point that depends on the outcome of the first lottery. For any actual value outcome X of the original St. Petersburg lottery, half St. Petersburg can be truncated at some point and still have a finite expected value greater than X. (EDIT: However, I guess the sure-thing principle isn't relevant here with conditional truncation, since we aren't comparing only two fixed options anymore.)

- I don't understand what Slider meant in the second paragraph, and I think it's probably missing the point.

- The third paragraph misses the point: once the outcome is decided for the first St. Petersburg lottery, it has finite value, and half St. Petersburg still has infinite expected value, which is greater than a finite value.
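The truncation point can be illustrated with a short calculation. This is a sketch under assumptions not spelled out in this thread: the St. Petersburg lottery is taken to pay 2^k with probability 2^-k for k = 1, 2, ..., and "half St. Petersburg" is taken to pay half as much in each outcome. The function names are hypothetical.

```python
from fractions import Fraction


def truncated_half_st_petersburg_ev(n_terms):
    """Expected value of half St. Petersburg cut off after n_terms outcomes.

    Outcome k (k = 1..n_terms) pays 2**(k - 1) with probability 2**-k;
    the remaining probability mass is assumed to pay 0.
    """
    return sum(Fraction(2 ** (k - 1), 2 ** k) for k in range(1, n_terms + 1))


def truncation_point(x):
    """Smallest number of terms whose truncated expected value strictly exceeds x."""
    n = 1
    while truncated_half_st_petersburg_ev(n) <= x:
        n += 1
    return n


# Suppose the first lottery happened to pay out 8.
n = truncation_point(8)
print(n, truncated_half_st_petersburg_ev(n))
```

Each outcome contributes exactly 1/2 to the expected value, so the truncated lottery is worth n/2 and some finite cutoff beats any realized payout X, while the untruncated sum grows without bound.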

RedStateBlueState @ 2022-07-07T17:15 (+1)

Yes, I should have thought more about Slider's reply before posting, I take back my agreement. Still, I don't find Dutch booking convincing in Christiano's case.

The reason to reject a theory based on Dutch booking is that there is no logical choice to commit to, in this case to maximize EV. I don't think this applies to the Paul Christiano case, because the second lottery does not have higher EV than the first. Yes, once you play the first lottery and find out that it has a finite value the second one will have higher EV, but until then the first one has higher EV (in an infinite way) and you should choose it.

But again I think there can be reasonable disagreement about this, I just think equating Dutch booking for the person-affecting view and for the total utilitarianism view is misleading. These are substantially different philosophical claims.

MichaelStJules @ 2022-07-08T01:05 (+2)

Yes, once you play the first lottery and find out that it has a finite value the second one will have higher EV, but until then the first one has higher EV (in an infinite way) and you should choose it.

I think a similar argument can apply to person-affecting views and the OP's Dutch book argument:

Yes, starting with World 1, once you make trade 1 to get World 2 and find out that trade 2 to World 3 is available, trade 1 will have negative value, but until then trade 1 has positive value and you should choose it.

MichaelStJules @ 2022-07-07T18:21 (+2)

I agree that you can give different weights to different Dutch book/money pump arguments. I do think that if you commit 100% to complete preferences over all probability distributions over outcomes and invulnerability to Dutch books/money pumps, then expected utility maximization over each individual decision with an unbounded utility function is ruled out.

As you mention, one way to avoid this St. Petersburg Dutch book/money pump is to just commit to sticking with A, if A>B ex ante, and regardless of the actual outcome of A (+ some other conditions, e.g. A and B both have finite value under all outcomes, and A has infinite expected value), but switching to C under certain other conditions.

You may have similar commitment moves for person-affecting views, although you might find them all less satisfying. You could commit to refusing one of the 3 types of trades in the OP, or doing so under specific conditions, or just never completing the last step in any Dutch book, even if you'd know you'd want to. I think those with person-affecting views should usually refuse moves like trade 1, if they think they're not too unlikely to make moves like trade 2 after, but this is messier, and depends on your distributions over what options will become available in the future depending on your decisions. The above commitments for St. Petersburg-like lotteries don't depend on what options will be available in the future or your distributions over them.

Jacy @ 2022-07-07T12:55 (+8)

Trade 3 is removing a happy person, which is usually bad in a person-affecting view, possibly bad enough that accepting it would require at least $0.99 in compensation, in which case the view is not Dutch booked.

Rohin Shah @ 2022-07-07T13:55 (+4)

Responded in the other comment thread.

MichaelStJules @ 2022-07-07T18:38 (+5)

In practice, I think those with person-affecting views should refuse moves like trade 1 if they "expect" to subsequently make moves like trade 2, because World 1 ≥ World 3*. This would depend on the particulars of the numbers, credences and views involved, though.

EDIT: Lukas discussed and illustrated this earlier here.

*EDIT2: replaced > with ≥.

Rohin Shah @ 2022-07-08T05:26 (+4)

In practice, I think those with person-affecting views should refuse moves like trade 1 if they "expect" to subsequently make moves like trade 2, because World 1 > World 3.

You can either have a local decision rule that doesn't take into account future actions (and so excludes this sort of reasoning), or you can have a global decision rule that selects an entire policy at once. I was talking about the local kind.

You could have a global decision rule that compares worlds and ignores happy people who will only exist in some of the worlds. In that case I'd refer you to Chapter 4 of On the Overwhelming Importance of Shaping the Far Future.
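The contrast between the two kinds of rule can be sketched in code. This is an illustration under assumptions: the welfare numbers are hypothetical and in dollar-equivalent units, policies are restricted to "accept the first n trades", and the global rule shown is the one described above, which ignores Alice's welfare because she exists in only some of the worlds.

```python
# (world_before, world_after, payment_received) for the three trades in the post.
TRADES = [(1, 2, 0.01), (2, 3, -1.00), (3, 1, 0.01)]

# Hypothetical welfare for Alice in each world; None means she does not exist.
ALICE_WELFARE = {1: None, 2: 1.0, 3: 10.0}


def local_value(before, after):
    """Pairwise value of a move under the view in the post."""
    w_before, w_after = ALICE_WELFARE[before], ALICE_WELFARE[after]
    if w_before is None or w_after is None:
        return 0.0  # moving between existence and non-existence is neutral
    return w_after - w_before


def run_local_rule():
    """Evaluate each trade on its own, ignoring what may be offered later."""
    world, money = 1, 0.0
    for before, after, payment in TRADES:
        if world == before and local_value(before, after) + payment > 0:
            world, money = after, money + payment
    return world, round(money, 2)


def run_global_rule():
    """Choose among whole policies by final outcome, ignoring contingent people."""
    world, money = 1, 0.0
    outcomes = [(world, money)]  # the policy of accepting no trades
    for _, after, payment in TRADES:
        world, money = after, money + payment
        outcomes.append((world, round(money, 2)))
    # With Alice's welfare ignored, only money distinguishes the outcomes.
    return max(outcomes, key=lambda outcome: outcome[1])


print(run_local_rule())   # final (world, net money) under the local rule
print(run_global_rule())  # final (world, net money) under the global rule
```

The local rule walks through all three trades and ends in World 1 with less money, while this particular global rule stops after trade 1.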

EDIT: Added as an FAQ.

(Nitpick: Under the view I laid out World 1 is not better than World 3? You're indifferent between the two.)

MichaelStJules @ 2022-07-08T09:22 (+2)

You can either have a local decision rule that doesn't take into account future actions (and so excludes this sort of reasoning), or you can have a global decision rule that selects an entire policy at once. I was talking about the local kind.

Thanks, it's helpful to make this distinction explicit.

Aren't such local decision rules generally vulnerable to Dutch book arguments, though? I suppose PAVs with local decision rules are vulnerable to Dutch books even when the future options are fixed (or otherwise don't depend on past choices or outcomes), whereas EU maximization with a bounded utility function isn't.

I don't think anyone should aim towards a local decision rule as an ideal, though, so there's an important question of whether your Dutch book argument undermines person-affecting views much at all relative to alternatives. Local decision rules will underweight option value, value of information, investments for the future, and basic things we need to do to survive. We'd underinvest in research, and individuals would underinvest in their own education. Many people wouldn't work, since they only do it for their future purchases. Acquiring food and eating it are separate actions, too.

(Of course, this also cuts against the problems for unbounded utility functions I mentioned.)

You could have a global decision rule that compares worlds and ignores happy people who will only exist in some of the worlds. In that case I'd refer you to Chapter 4 of On the Overwhelming Importance of Shaping the Far Future.

I'm guessing you mean this is a bad decision rule, and I'd agree. I discuss some alternatives (or directions) I find more promising here.

(Nitpick: Under the view I laid out World 1 is not better than World 3? You're indifferent between the two.)

Whoops, fixed.

Rohin Shah @ 2022-07-08T09:51 (+4)

I don't think anyone should aim towards a local decision rule as an ideal, though, so there's an important question of whether your Dutch book argument undermines person-affecting views much at all relative to alternatives. Local decision rules will underweight option value, value of information, investments for the future, and basic things we need to do to survive.

I think it's worth separating:

1. How to evaluate outcomes

2. How to make decisions under uncertainty

3. How to make decisions over time

The argument in this post is just about (1). Admittedly I've illustrated it with a sequence of trades (which seems more like (3)) but the underlying principle is just that of transitivity which is squarely within (1). When thinking about (1) I'm often bracketing out (2) and (3), and similarly when I think about (2) or (3) I often ignore (1) by assuming there's some utility function that evaluates outcomes for me. So I'm not saying "you should make decisions using a local rule that ignores things like information value"; I'm more saying "when thinking about (1) it is often a helpful simplifying assumption to consider local rules and see how they perform".
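The transitivity failure can be checked mechanically. The sketch below assumes hypothetical welfare levels in dollar-equivalent units and represents each outcome as a (world, net money) pair; `strictly_better` and `transitivity_violations` are illustrative names.

```python
from itertools import permutations

# Hypothetical welfare for Alice in each world; None means she does not exist.
WELFARE = {"W1": None, "W2": 1.0, "W3": 10.0}


def strictly_better(a, b):
    """True if outcome b is strictly preferred to outcome a in a pairwise comparison."""
    (world_a, money_a), (world_b, money_b) = a, b
    w_a, w_b = WELFARE[world_a], WELFARE[world_b]
    # Existence vs non-existence is neutral; otherwise count the welfare change.
    welfare_gain = 0.0 if w_a is None or w_b is None else w_b - w_a
    return welfare_gain + (money_b - money_a) > 0


def transitivity_violations(outcomes):
    """All triples (a, b, c) where b beats a and c beats b, but c does not beat a."""
    return [
        (a, b, c)
        for a, b, c in permutations(outcomes, 3)
        if strictly_better(a, b) and strictly_better(b, c) and not strictly_better(a, c)
    ]


A = ("W1", 0.00)   # start
B = ("W2", 0.01)   # after trade 1
C = ("W3", -0.99)  # after trade 2

for violation in transitivity_violations([A, B, C]):
    print(violation)
```

Every rotation of the cycle A, B, C shows up as a violation, which is the property the sequence of trades exploits.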

It's plausible that an effective theory will actually need to think about these areas simultaneously -- in particular, I feel somewhat compelled by arguments from (2) that you need to have a bounded mechanism for (1), which is mixing those two areas together. But I think we're still at the stage where it makes sense to think about these things separately, especially for basic arguments when getting up to speed (which is the sort of post I was trying to write).

MichaelStJules @ 2022-07-08T16:14 (+2)

Do you think the Dutch book still has similar normative force if the person-affecting view is transitive within option sets, but violates IIA? I think such views are more plausible than intransitive ones, and any intransitive view can be turned into a transitive one that violates IIA using voting methods like beatpath/Schulze. With an intransitive view, I'd say you haven't finished evaluating the options if you only make the pairwise comparisons.
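As a rough illustration of the beatpath idea, the sketch below applies a Schulze-style strongest-path computation to the cycle from the post. The pairwise strengths are hypothetical (net dollar-equivalent gains derived from assumed welfare levels), and using them in place of vote counts is an adaptation for illustration, not a standard application of the method.

```python
def beatpath_ranking(options, strength):
    """Rank options by Schulze-style strongest paths.

    strength[(a, b)] is the hypothetical strength with which a is preferred
    to b in a pairwise comparison; missing pairs count as 0.
    """
    # Start from direct pairwise strengths, restricted to the available options.
    p = {(a, b): strength.get((a, b), 0.0) for a in options for b in options if a != b}
    # Widest-path variant of Floyd-Warshall: a path is as strong as its weakest link.
    for k in options:
        for i in options:
            for j in options:
                if len({i, j, k}) == 3:
                    p[(i, j)] = max(p[(i, j)], min(p[(i, k)], p[(k, j)]))
    # a beats b if the strongest path from a to b is stronger than the reverse.
    wins = {a: sum(p[(a, b)] > p[(b, a)] for b in options if b != a) for a in options}
    return sorted(options, key=lambda a: -wins[a])


# A = World 1 with $0, B = World 2 with +$0.01, C = World 3 with -$0.99.
STRENGTH = {("B", "A"): 0.01, ("C", "B"): 8.0, ("A", "C"): 0.99}

print(beatpath_ranking(["A", "B", "C"], STRENGTH))  # ranking with all three available
print(beatpath_ranking(["A", "B"], STRENGTH))       # ranking when C is unavailable
```

With all three options available the ranking is transitive and puts A first, but with C removed B is ranked above A, so the order of A and B depends on whether C is in the option set. That is the IIA violation.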

The options involved might look the same, but now you have to really assume you're changing which options are actually available over time, which, under one interpretation of an IIA-violating view, fails to respect the view's assumptions about how to evaluate options: the options or outcomes available will just be what they end up being, and their value will depend on which are available. Maybe this doesn't make sense, because counterfactuals aren't actual?

Against an intransitive view, it's just not clear which option to choose, and we can imagine deliberating from World 1 to World 1 minus $0.98 following the Dutch book argument if we're unlucky about the order in which we consider the options.

MichaelStJules @ 2022-07-08T07:58 (+2)

Suppose that if I take trade 1, I have a p≤100% subjective probability that trade 2 will be available, will definitely take it if it is, and conditional on taking trade 2, a q≤100% subjective probability that trade 3 will be available and will definitely take it if it is. There are two cases:

- If p=q=100%, then I stick with World 1 and don't make any trade. No Dutch book. (I don't think p=q=100% is reasonable to assume in practice, though.)

- Otherwise, p<100% or q<100% (or generally my overall probability of eventually taking trade 3 is less than 100%; I don't need to definitely take the trades if they're available). Based on my subjective probabilities, I'm not guaranteed to make both trades 2 and 3, so I'm not guaranteed to go from World 1 to World 1 but poorer. When I do end up in World 1 but poorer, this isn't necessarily so different from the kinds of mundane errors that EU maximizers can make, too, e.g. if they find out that an option they selected was worse than they originally thought and switch to an earlier one at a cost.

- A more specific person-affecting approach that handles uncertainty is in Teruji Thomas, 2019. The choices can be taken to be between policy functions for sequential decisions instead of choices between immediate decisions; the results are only sensitive to the distributions over final outcomes, anyway.

- Alternatively (or maybe this is a special case of Thomas's work), as long as you guarantee transitivity within each set of possible definite outcomes from your corresponding policy functions (even at the cost of IIA), e.g. by using voting methods like Schulze/beatpath, then you can always avoid (strictly) statewise dominated policies as part of your decision procedure*. This rules out the kinds of Dutch books that guarantee that you're no better off in any state but worse off in some state. I'm not sure whether or not this approach will be guaranteed to avoid (strict) stochastically dominated options under the more plausible extensions of stochastic dominance when IIA is violated, but this will depend on the extension.

*over a finite set of policies to choose from. Say outcome distribution $X$ (strictly) statewise dominates outcome distribution $Y$ given the set of alternative outcome distributions $S$ (including $X$ and $Y$) if

$$P[X >_S Y] = 1,$$

where the inequality is evaluated statewise by fixing a state $\omega$ for $X$, $Y$ and all the alternatives in $S$, i.e. $X(\omega) >_{S(\omega)} Y(\omega)$ for state $\omega$, with respect to a probability measure $P$ over $\Omega$.
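To make the two cases at the top of this comment concrete, here is a minimal sketch of the distribution over final outcomes once trade 1 is accepted. The dollar amounts are the ones from the post; the function name `outcome_distribution` and the choice p = q = 1/2 are just illustrative assumptions.

```python
from fractions import Fraction

def outcome_distribution(p, q):
    """Distribution over (final world, net dollars) after accepting trade 1.

    p: probability that trade 2 is available (and then taken) after trade 1.
    q: probability that trade 3 is available (and then taken) after trade 2.
    """
    p, q = Fraction(p), Fraction(q)
    return {
        ("World 2", Fraction(1, 100)): 1 - p,          # only trade 1 happens
        ("World 3", Fraction(-99, 100)): p * (1 - q),  # trades 1 and 2 happen
        ("World 1", Fraction(-98, 100)): p * q,        # the full money pump
    }

# Hypothetical probabilities, chosen only for illustration.
dist = outcome_distribution(Fraction(1, 2), Fraction(1, 2))
pump_probability = dist[("World 1", Fraction(-98, 100))]
```

The pump is only guaranteed when p = q = 100%; otherwise it completes with probability p·q, which is 1/4 in this example.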

MichaelStJules @ 2022-07-07T20:58 (+2)

Q. In step 2, Alice was definitely going to exist, which is why we paid $1. But then in step 3 Alice was no longer definitely going to exist. If we knew step 3 was going to happen, then we wouldn't think Alice was definitely going to exist, and so we wouldn't pay $1.

If your person-affecting view requires people to definitely exist, taking into account all decision-making, then it is almost certainly going to include only currently existing people. This does avoid the Dutch book but has problems of its own, most notably time inconsistency. For example, perhaps right before a baby is born, it takes actions that as a side effect will harm the baby; right after the baby is born, it immediately undoes those actions to prevent the side effects.

Do you mean in the case where we don't know yet for sure if we'll have the option to undo the actions after the baby is born?

If we know for sure that the option will be available and that we'll be required to undo them, and the net welfare of those who will definitely exist anyway would be worse if we take the actions and then undo them than if we never take them at all, then we wouldn't take the actions that would harm the baby in the first place, because doing so is worse for those who will definitely exist anyway.

A solution to time inconsistency could be to make commitments ahead of time, which is also a solution for some other decision-theoretic problems, like St. Petersburg lotteries for EU maximization with unbounded utility functions. Or, if we're accepting time inconsistency in some cases, then we should acknowledge that our reasons for it aren't generally decisive, and so not necessarily decisive against time inconsistent person-affecting views in particular.

Rohin Shah @ 2022-07-08T05:39 (+2)

I was imagining a local decision rule that was global in only one respect, i.e. choosing which people to consider based on who would definitely exist regardless of what decision-making happens. But in hindsight I think this is an overly complicated rule that no one is actually thinking about; I'll delete it from the post.

Devin Kalish @ 2022-07-07T15:41 (+2)

Maybe this is a little off topic, but while Dutch book arguments are pretty compelling in these cases, I think the strongest and maybe one of the most underrated arguments against intransitive axiologies is Michael Huemer's in "In Defense of Repugnance":

https://philpapers.org/archive/HUEIDO.pdf

Basically he shows that intransitivity is incompatible with the combination of:

If x1 is better than y1 and x2 is better than y2, then x1 and x2 combined is better than y1 and y2 combined

and

If a state of affairs is better than another state of affairs, then it is not also worse than that state of affairs

By giving the example of:

A>B>C>D>A

A+C > B+D > C+A

My nitpicky analytic professor pointed out that technically this form of the argument only works on axiologies in which cycles of four states exist, not all intransitive axiologies, but it's simple to tweak it to work for any number of states by lining them up in order, and comparing the same state shifted over once and then twice, leading to the even more absurd conclusion that a state of affairs can be both better and worse than itself:

A>B>C>D>E>A

A+B+C+D+E > B+C+D+E+A > C+D+E+A+B

In a way, I think this result is even more troubling than the Dutch book, because it prevents you from ranking worlds even relative to a single other world in a way that isn't sensitive to just the way the same world is described.
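The shifting trick can be sketched in a few lines. This is only an illustration of the argument as I've described it, not anything from Huemer's paper; the helper names are made up, and a combined state is modelled as a multiset of its component states.

```python
from collections import Counter

def shifted(cycle, k):
    """Rotate the list of states left by k positions."""
    k %= len(cycle)
    return cycle[k:] + cycle[:k]

def better_in_cycle(cycle, x, y):
    """True if x sits directly above y in the cyclic ranking."""
    return cycle[(cycle.index(x) + 1) % len(cycle)] == y

def componentwise_better(cycle, k):
    """Check that every state in shift k beats its partner in shift k+1."""
    return all(
        better_in_cycle(cycle, x, y)
        for x, y in zip(shifted(cycle, k), shifted(cycle, k + 1))
    )

cycle = ["A", "B", "C", "D", "E"]  # encodes A>B>C>D>E>A
# Each combined state is the multiset of its parts, so order is ignored.
first, second, third = (Counter(shifted(cycle, k)) for k in range(3))
```

Each shift is componentwise better than the next, so the combination principle ranks `first` above `second` above `third`, yet all three are the same multiset of states.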

MichaelStJules @ 2022-07-07T16:29 (+13)

Person-affecting views aren't necessarily intransitive; they might instead give up the independence of irrelevant alternatives, so that A≥B among one set of options, but A<B among another set of options. I think this is actually an intuitive way to explain the repugnant conclusion:

If your available options are S, then the rankings among them are as follows:

- S={A, A+, B}: A>B, B>A+, A>A+

- S={A, A+}: A+≥A

- S={A, B}: A>B

- S={A+, B}: B>A+

A person-affecting view would need to explain why A>A+ when all three options are available, but A+≥A when only A+ and A are available.

However, violating IIA like this is also vulnerable to a Dutch book/money pump.
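As a rough sketch, the rankings listed above can be written down as data and checked mechanically for pairs whose strict ranking depends on the option set. The dictionary layout and function names here are my own assumptions, purely for illustration.

```python
# Keys are option sets; values map an ordered pair (x, y) to the
# relation listed for it, read as "x REL y".
rankings = {
    frozenset({"A", "A+", "B"}): {("A", "B"): ">", ("B", "A+"): ">", ("A", "A+"): ">"},
    frozenset({"A", "A+"}): {("A+", "A"): ">="},
    frozenset({"A", "B"}): {("A", "B"): ">"},
    frozenset({"A+", "B"}): {("B", "A+"): ">"},
}

def strictly_prefers(option_set, x, y):
    """True if x > y is listed for this option set."""
    return rankings[frozenset(option_set)].get((x, y)) == ">"

def iia_violations():
    """Pairs ranked x > y in one option set but not in another containing both."""
    violations = set()
    for ranking in rankings.values():
        for (x, y), relation in ranking.items():
            if relation != ">":
                continue
            for other_set in rankings:
                if {x, y} <= other_set and not strictly_prefers(other_set, x, y):
                    violations.add((x, y))
    return violations
```

On these rankings the only flagged pair is (A, A+): A>A+ holds when B is also available, but not when the choice is just between A and A+.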

David Johnston @ 2022-08-24T04:19 (+3)

I think this makes more sense than initial appearances.

If A+ is the current world and B is possible, then the well-off people in A+ have an obligation to move to B (because B>A+).

If A is the current world, A+ is possible but B impossible, then the people in A incur no new obligations by moving to A+, hence indifference.

If A is the current world and both A+ and B are possible, then moving to A+ saddles the original people with an obligation to further move the world to B. But the people in A, by supposition, don't derive any benefit from the move to A+ and the obligation to move to B harms them. On the other hand, the new people in A+ don't matter because they don't exist in A. Thus A>A+ in this case.

Basically: options create obligations, and when we're assessing the goodness of a world we need to take into account welfare + obligations (somehow).

Devin Kalish @ 2022-07-07T17:07 (+1)

I'm really showing my lack of technical savvy today, but I don't really know how to embed images, so I'll have to sort of awkwardly describe this.

For the classic version of the mere addition paradox this seems like an open possibility for a person affecting view, but I think you can force pretty much any person affecting view into intransitivity if you use the version in which every step looks like some version of A+. In other words, you start with something like A+, then in the next world, you have one bar that looks like B, and in addition another, lower but equally wide bar, then in the next step, you equalize to higher than the average of those in a B-like manner, and in addition another equally wide, lower bar appears, etc. This seems to demand basically any person affecting view prefer the next step to the one before it, but the step two back to that one.

MichaelStJules @ 2022-07-07T19:08 (+2)

Views can be transitive within each option set, but have previous pairwise rankings changed as the option set changes, e.g. new options become available. I think you're just calling this intransitivity, but it's not technically intransitivity by definition, and is instead a violation of the independence of irrelevant alternatives.

Transitivity + violating IIA seems more plausible to me than intransitivity, since the former is more action-guiding.

Devin Kalish @ 2022-07-07T19:27 (+1)

I agree that there's a difference, but I don't see how that contradicts the counterexample I just gave. Imagine a person-affecting view that is presented with every possible combination of people/welfare levels as options. I am suggesting that, even if it is sensitive to irrelevant alternatives, it will have strong principled reasons to favor some of the options in this set cyclically if not doing so means ranking a world that is better on average for the pool of people the two have in common lower. Or maybe I'm misunderstanding what you're saying?

MichaelStJules @ 2022-07-07T20:04 (+4)

There are person-affecting views that will rank X<Y or otherwise not choose X over Y even if the average welfare of the individuals common to both X and Y is higher in X.

A necessitarian view might just look at all the people common to all available options at once, maximize their average welfare, and then ignore contingent people (or use them to break ties, say). Many individuals common to two options X and Y could be ignored this way, because they aren't common to all available options, and so are still contingent.

Christopher J. G. Meacham, 2012 (EA Forum discussion here) describes another transitive person-affecting view, where I think something like "the available alternatives are so relevant, that they can even overwhelm one world being better on average than another for every person the two have in common", which you mentioned in your reply, is basically true. For each option, and each individual in the option, we take the difference between their maximum welfare across options and their welfare in that option, add them up, and then minimize the sum. Crucially, it's assumed that when someone doesn't exist in an option, we don't add their welfare loss from their maximum for that option, and when someone has a negative welfare in an option but doesn't exist in another option, their maximum welfare across options will at least be 0. There are some technical details for matching individuals with different identities across worlds when there are people who aren't common to all options. So, in the repugnant conclusion, introducing B makes A>A+, because it raises the maximum welfares of the extra people in A+.
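Here is a minimal sketch of that rule as I've described it, not Meacham's full view: identities are assumed fixed across worlds (so the matching details are skipped), each group is stood in for by one person, and the welfare numbers are hypothetical.

```python
def harm(option, options):
    """Sum, over people in `option`, of their shortfall from their best available welfare."""
    total = 0
    for person, welfare in option.items():
        levels = [o[person] for o in options.values() if person in o]
        if any(person not in o for o in options.values()):
            levels.append(0)  # nonexistence puts a floor of 0 on the maximum
        total += max(levels) - welfare
    return total

def ranked(options):
    """Option names sorted from least to most total harm."""
    return sorted(options, key=lambda name: harm(options[name], options))

# Hypothetical welfare levels for the mere addition paradox.
A = {"original": 100}
A_plus = {"original": 100, "extra": 50}
B = {"original": 80, "extra": 80}

pair = {"A": A, "A+": A_plus}
triple = {"A": A, "A+": A_plus, "B": B}
```

With only A and A+ available, both have total harm 0, so they tie. Once B is added, the extra person's maximum rises to 80, A+ now carries a harm of 30 against 20 for B and 0 for A, and `ranked(triple)` gives A, then B, then A+.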

Some views may start from pairwise comparisons that would give the kinds of cycles you described, but then apply a voting method like beatpath voting to rerank or select options and avoid cycles within option sets. This is done in Teruji Thomas, 2019. I personally find this sort of approach most promising.

Devin Kalish @ 2022-07-07T20:53 (+1)

This is interesting, I'm especially interested in the idea of applying voting methods to ranking dilemmas like this, which I'm noticing is getting more common. On the other hand it sounds to me like person-affecting views mostly solve transitivity problems by functionally becoming less person-affecting in a strong, principled sense, except in toy cases. Meacham sounds like it converges to averagism on steroids from your description as you test it against a larger and more open range of possibilities (worse-off people lose a world points, but so do more people, since it sums the differences up). If you modify it to look at the average of these differences, then the theory seems like it becomes vulnerable to the repugnant conclusion again, as the quantity of added people who are better off in one step in the argument than the last can wash out the larger per-individual difference for those who existed since earlier steps. Meanwhile the necessitarian view as you describe it seems to yield either no results in practice if taken as described in a large set of worlds with no one common to every world, or if reinterpreted to only include the people common to the very most worlds, sort of gives you a utility monster situation in which a single person, or some small range of possible people, determines almost all of the value across all different worlds. All of this does avoid intransitivity though as you say.

Devin Kalish @ 2022-07-07T17:09 (+1)

Or I guess maybe it could say that the available alternatives are so relevant, that they can even overwhelm one world being better on average than another for every person the two have in common?

Devin Kalish @ 2022-07-07T15:47 (+1)

(also, does anyone know how to make a ">" sign on a new line without it doing some formatty thing? I am bad with this interface, sorry)

Gavin @ 2022-07-07T15:51 (+2)

You could turn off markdown formatting in settings

Devin Kalish @ 2022-07-07T16:12 (+1)

Seems to have worked, thanks!