MATS Winter 2023-24 Retrospective

By utilistrutil @ 2024-05-11T00:09 (+62)

Co-Authors: @Rocket, @LauraVaughan, @McKennaFitzgerald, @Christian Smith, @Juan Gil, @Henry Sleight, @Matthew Wearden, @Ryan Kidd

The ML Alignment & Theory Scholars program (MATS) is an education and research mentorship program for researchers entering the field of AI safety. This winter, we held the fifth iteration of the MATS program, in which 63 scholars received mentorship from 20 research mentors. In this post, we motivate and explain the elements of the program, evaluate our impact, and identify areas for improving future programs.

Summary

Key details about the Winter Program:

- The four main changes we made after our Summer program were:

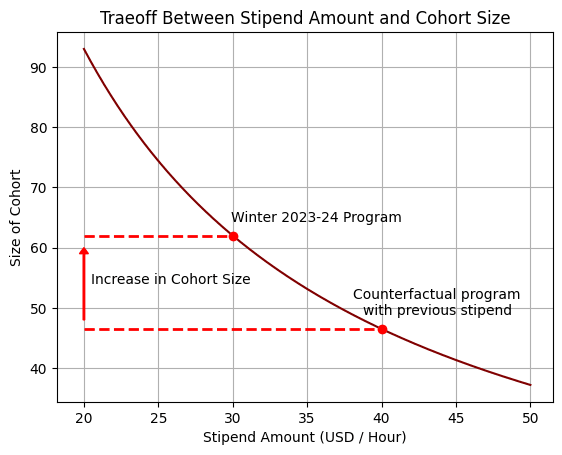

- Reducing our scholar stipend from $40/h to $30/h based on alumni feedback;

- Transitioning Scholar Support to Research Management;

- Using the full Lighthaven campus for office space as well as housing;

- Replacing Alignment 201 with AI Strategy Discussions.

- Educational attainment of MATS scholars:

- 48% of scholars were pursuing a bachelor’s degree, master’s degree, or PhD;

- 17% of scholars had a master’s degree as their highest level of education;

- 10% of scholars had a PhD.

- If not for MATS, scholars might have spent their counterfactual winters on the following pursuits (multiple responses allowed):

- Conducting independent alignment research without mentor (24%);

- Working at a non-alignment tech company (21%);

- Conducting independent alignment research with a mentor (13%);

- Taking classes (13%).

Key takeaways from scholar impact evaluation:

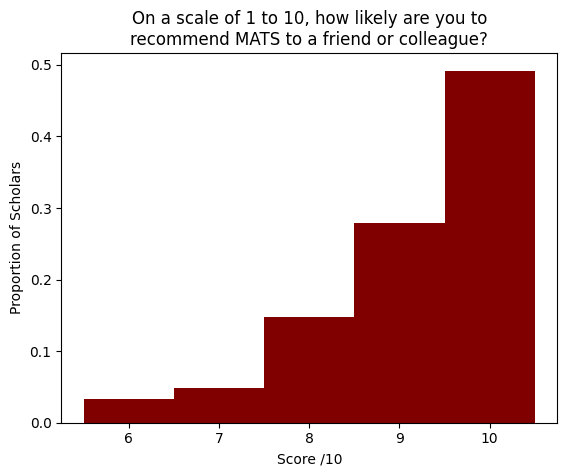

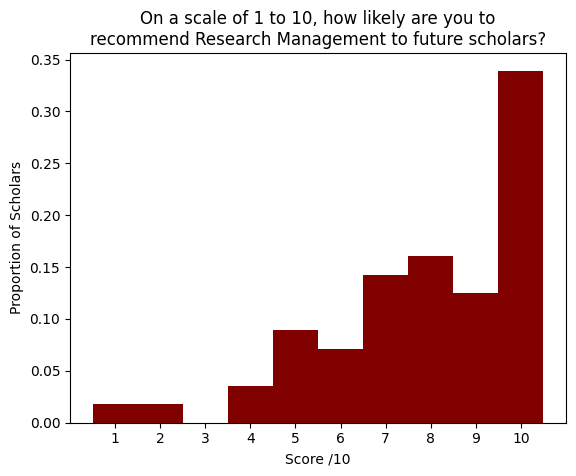

- Scholars are highly likely to recommend MATS to a friend or colleague (average likelihood is 9.2/10 and NPS is +74).

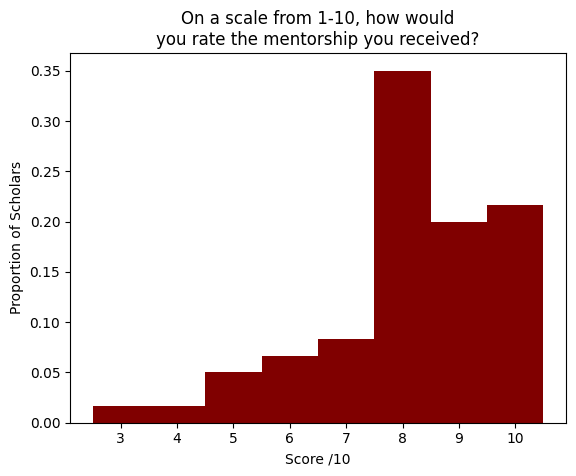

- Scholars rated the mentorship they received highly (average rating is 8.1/10).

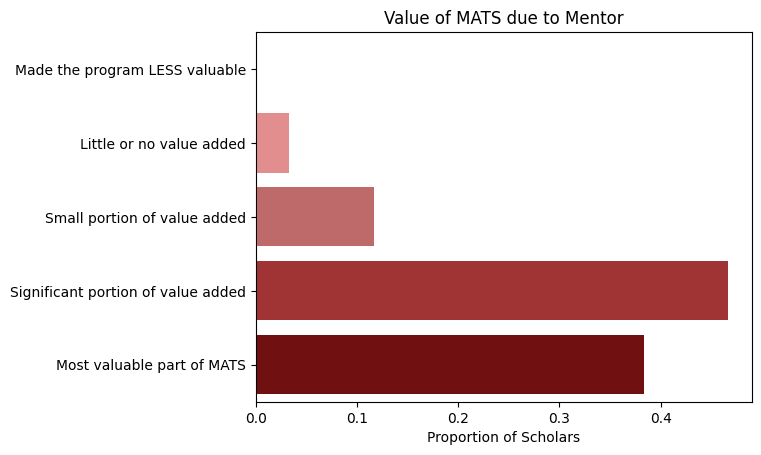

- For 38% of scholars, mentorship was the most valuable element of MATS.

- Scholars are likely to recommend Research Management to future scholars (average likelihood is 7.9/10 and NPS is +23).

- The median scholar valued Research Management at $1000.

- The median scholar reported accomplishing 10% more at MATS because of Research Management and gaining 10 productive hours.

- The median scholar made 5 professional connections and found 5 potential future collaborators during MATS.

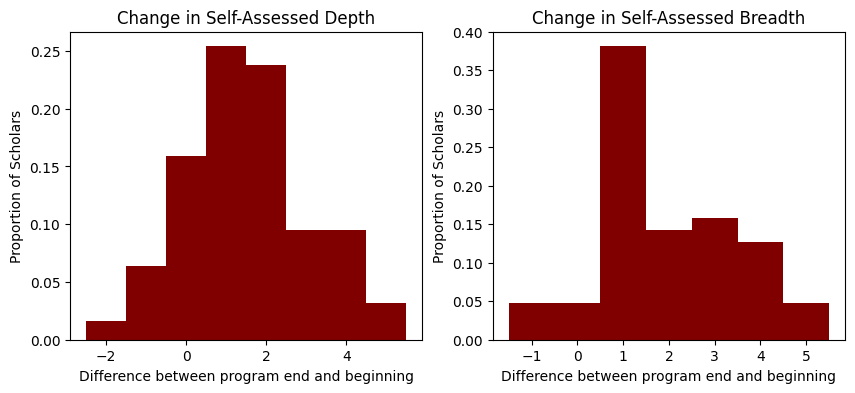

- The average scholar self-assessed their improvement as +1.53/10 in depth of technical skills, +1.93/10 in breadth of knowledge, +1.35/10 in research taste, and +1.25/10 in theory of change construction.

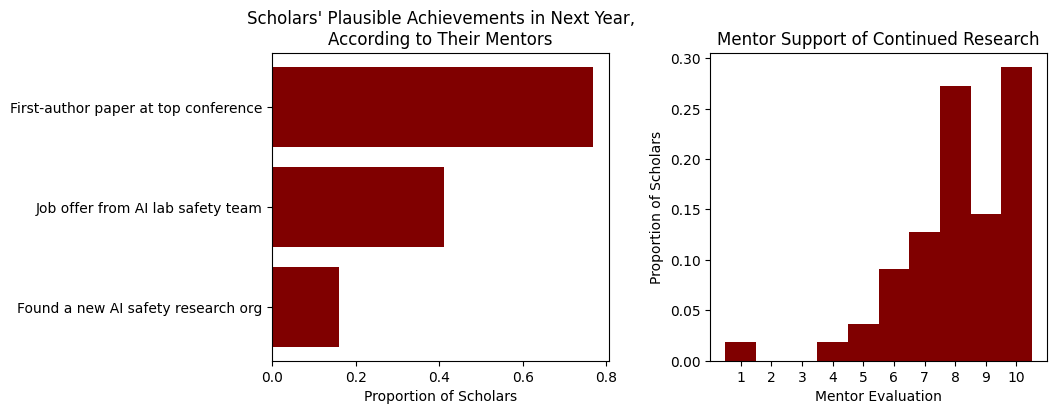

- According to mentors, of the 56 scholars evaluated, 77% could achieve a “First-author paper at top conference,” 41% could receive a “Job offer from AI lab safety team,” and 16% could “Found a new AI safety research org.”

- Mentors were enthusiastic for scholars to continue their research, rating the average scholar 8.1/10, on a scale where 10 represented “Very strongly believe scholar should receive support to continue research.”

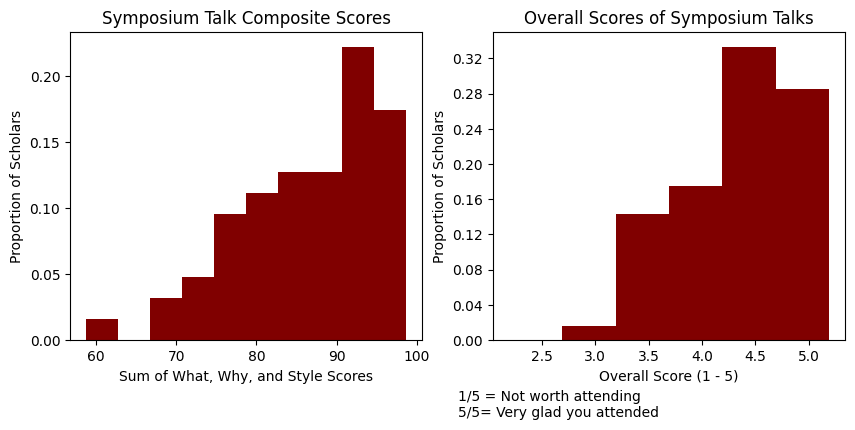

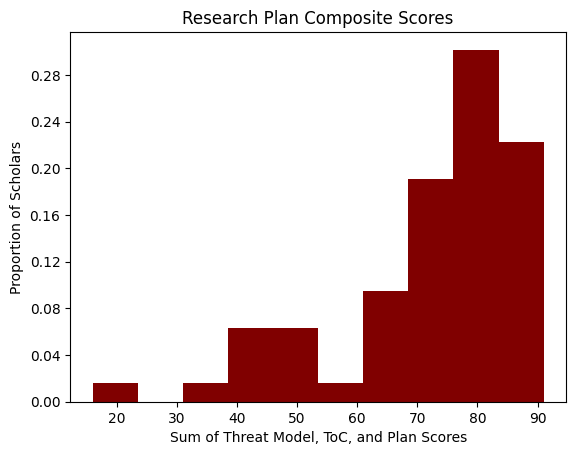

- Scholars completed two milestone assignments, a research plan and a presentation.

- Research plans were graded by MATS alumni; the median score was 76/100.

- Presentations received crowdsourced evaluations; the median score was 86/100.

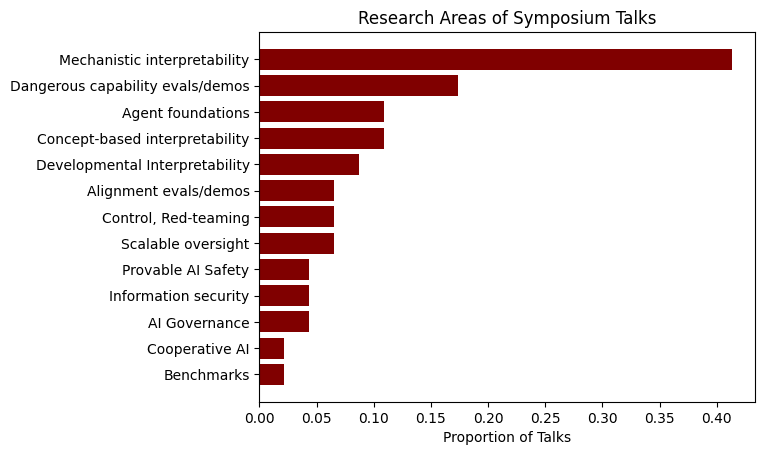

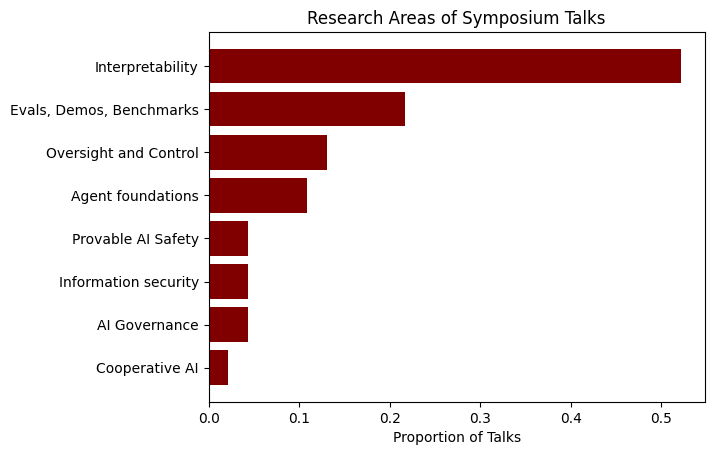

- 52% of presentations featured interpretability research, more than all other research areas combined.

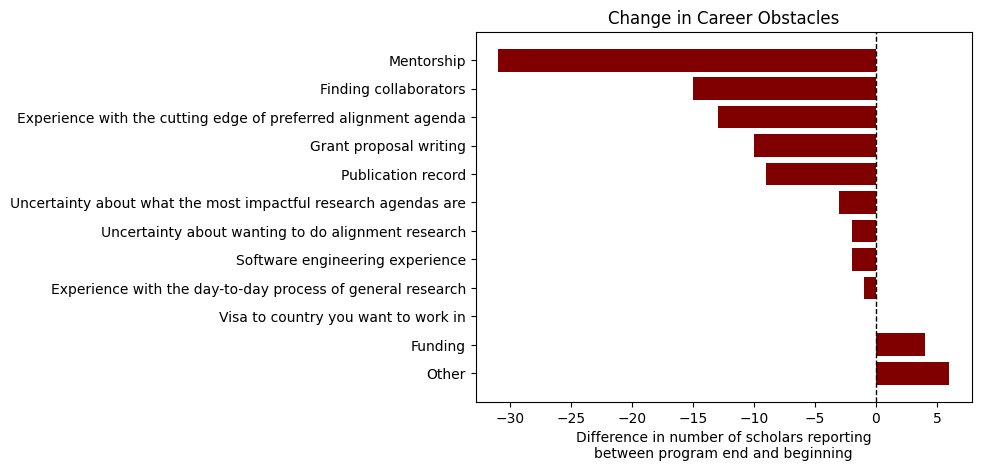

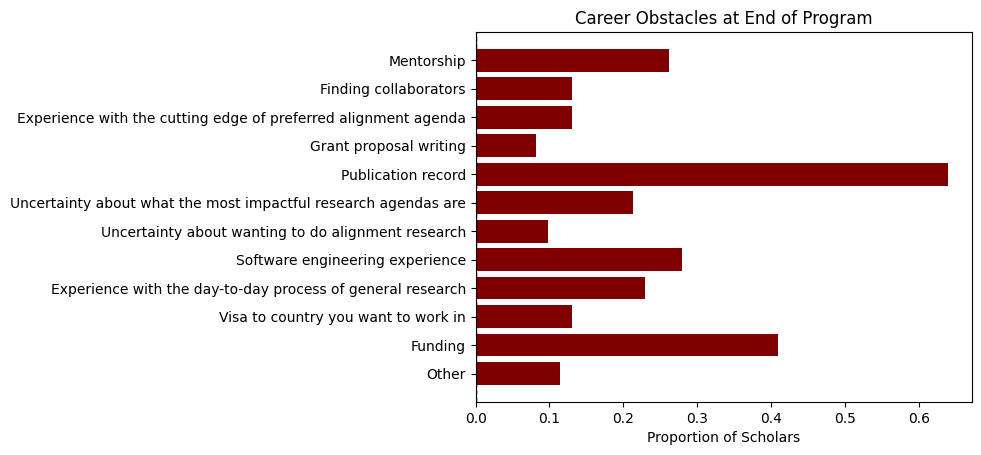

- After MATS, scholars reported facing fewer obstacles to a successful alignment career than they did at the start of the program.

- The obstacles that decreased the most were “mentorship,” “collaborators,” experience with a specific alignment agenda, and “grant proposal writing.”

- “Funding” increased as an obstacle over the course of MATS.

- At the end of the program, “publication record” was an obstacle for over 60% of scholars.
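The summary above reports both an average likelihood-to-recommend and a Net Promoter Score (NPS). These two numbers can diverge: Research Management averaged 7.9/10 yet had an NPS of only +23. Assuming MATS used the standard NPS definition (promoters rate 9-10, detractors rate 0-6, passives rate 7-8), the sketch below shows how a mean near 8 can coexist with a modest NPS when many respondents answer 7 or 8. The ratings are hypothetical, not MATS survey data.

```python
from statistics import mean
from typing import Sequence


def net_promoter_score(ratings: Sequence[int]) -> float:
    """Standard NPS on a -100..+100 scale from 0-10 "likelihood to recommend" ratings."""
    if not ratings:
        raise ValueError("need at least one rating")
    if any(not 0 <= r <= 10 for r in ratings):
        raise ValueError("ratings must be on the 0-10 scale")
    promoters = sum(1 for r in ratings if r >= 9)   # ratings of 9-10
    detractors = sum(1 for r in ratings if r <= 6)  # ratings of 0-6
    # Passives (7-8) count toward the total but neither add nor subtract.
    return 100 * (promoters - detractors) / len(ratings)


# Hypothetical ratings, not MATS survey data.
all_passive = [7, 8, 7, 8]
mixed = [10, 10, 9, 9, 8, 7, 6, 10]
print(mean(all_passive), net_promoter_score(all_passive))  # 7.5 0.0
print(mean(mixed), net_promoter_score(mixed))              # 8.625 50.0
```

A cohort of uniformly satisfied but unenthusiastic respondents (all 7s and 8s) has a respectable mean and an NPS of exactly zero, which is why we report both figures.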

Key takeaways from mentor impact evaluation:

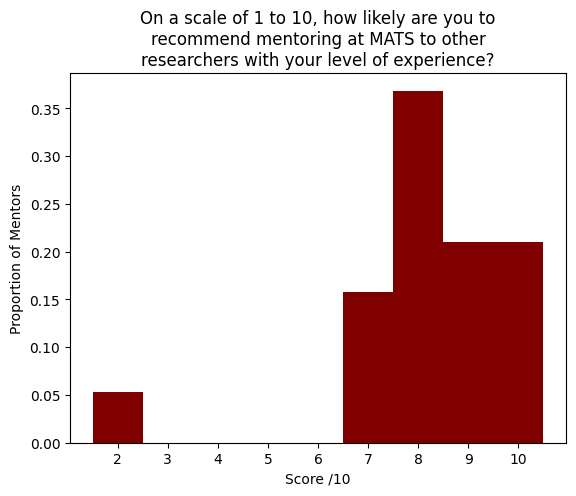

- Mentors are highly likely to recommend MATS to other researchers (average likelihood is 8.2/10 and NPS is +37).

- Mentors are likely to recommend Research Management (average likelihood is 7.7/10 and NPS is +7).

- The median mentor valued Research Management at $3000.

- The median mentor reported accomplishing 10% more because of Research Management and gaining 4 productive hours.

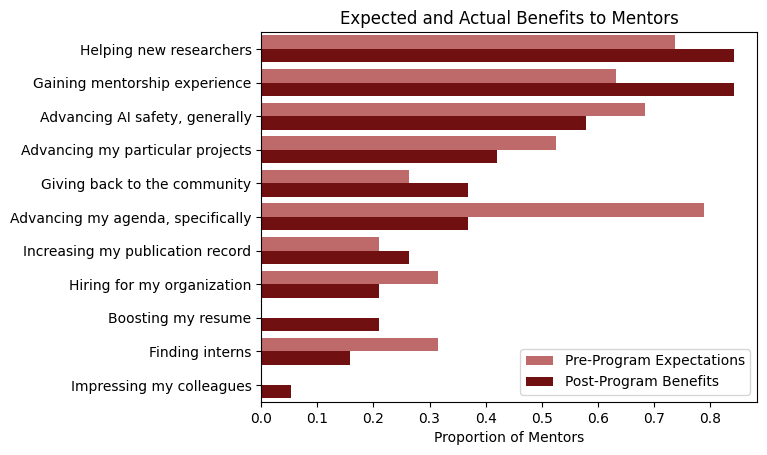

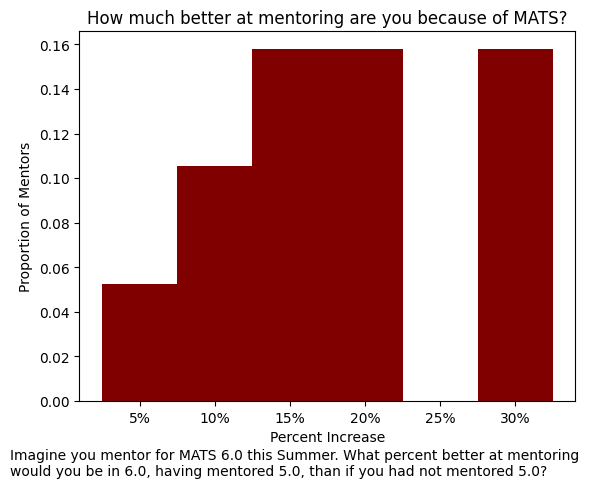

- The most common benefits of mentoring were “helping new researchers,” “gaining mentorship experience,” “advancing AI safety, generally,” and “advancing my particular projects.”

- Mentors reported improving their mentorship abilities by 18%, on average.

Key changes we plan to make for future cohorts:

- Introducing an advisory board for mentor selection;

- Reducing the dominance of interpretability in our research portfolio;

- Supporting AI governance mentors;

- Pre-screening more applicants for their software engineering abilities; and

- Modifying our format for AI safety strategy discussion groups.

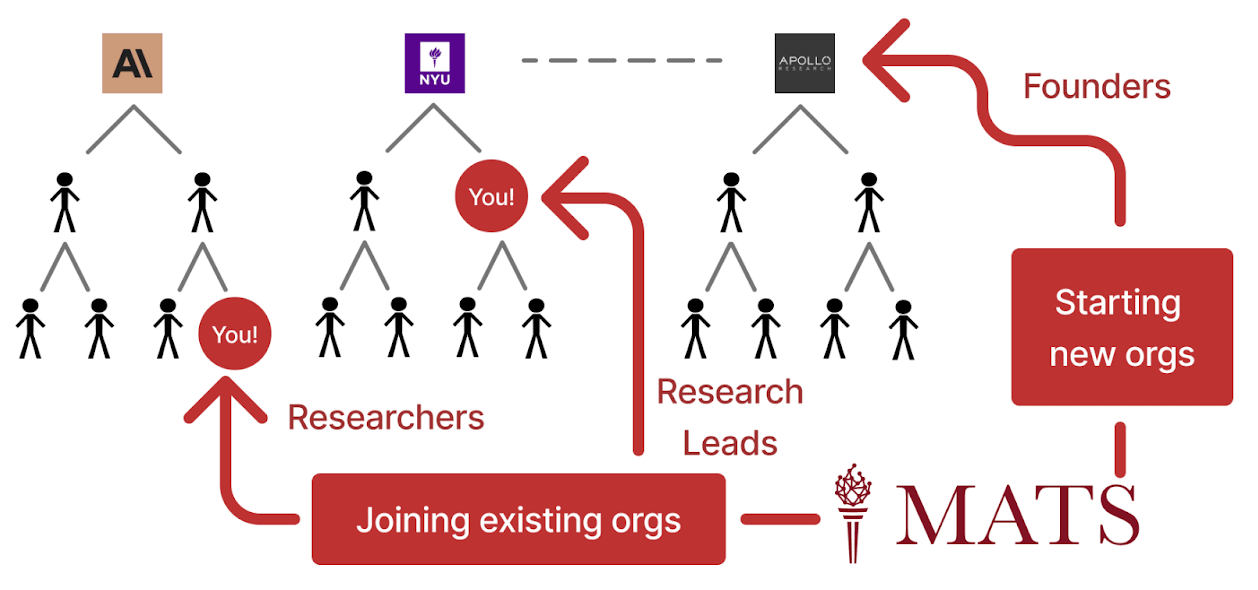

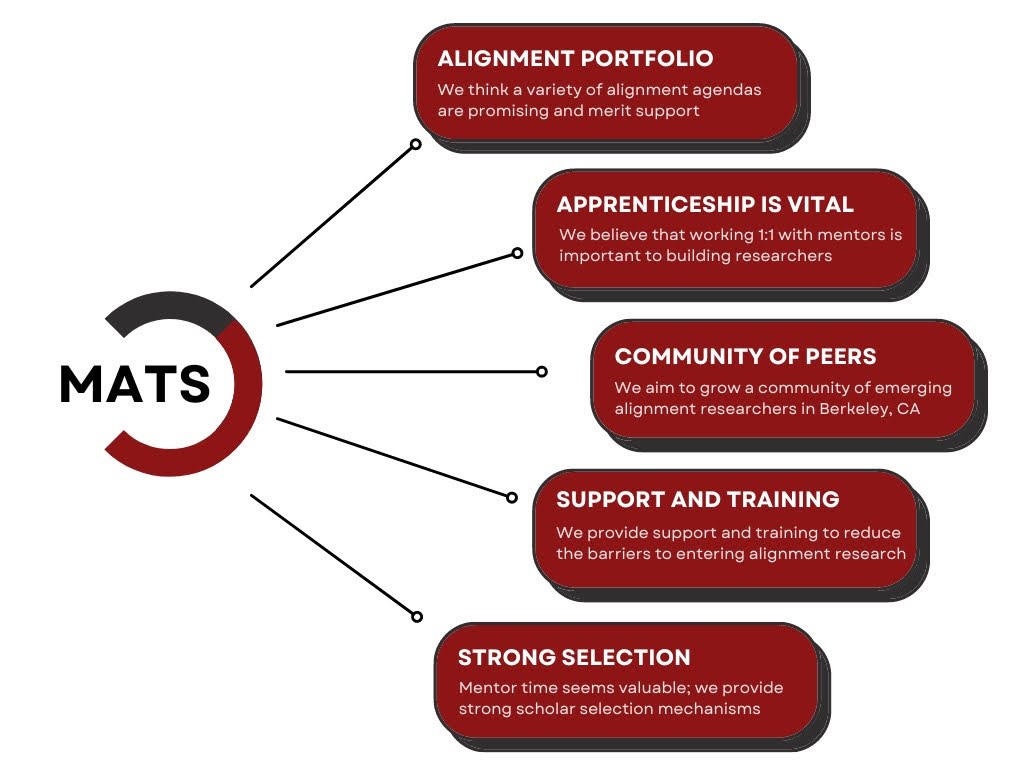

Theory of Change

MATS expands the talent pipeline for AI safety research by empowering scholars to work on AI safety at existing organizations, found new organizations, or pursue independent research.

To this end, MATS connects scholars with senior research mentors and reduces the barriers for these mentors to take on mentees by providing funding, housing, training, office spaces, research management, networking opportunities, and logistical support. By mentoring MATS scholars, these senior researchers benefit from research assistance and improve their mentorship skills, preparing them to supervise future research more effectively.

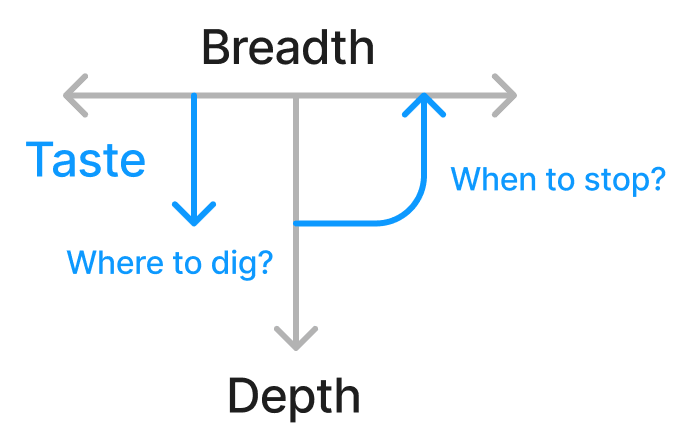

MATS aims to select and develop research scholars on three primary dimensions:

- Depth: Thorough understanding of a specialist field of AI safety research and sufficient technical ability (e.g., ML, CS, math) to pursue novel research in this field.

- Breadth: Broad familiarity with the AI safety landscape, including organizations and research agendas; a large “toolbox” of useful theorems and knowledge from diverse subfields; and the ability to conduct literature reviews.

- Taste: Good judgment about research direction and strategy, including what (sub-)questions to investigate, what hypotheses to consider, what assumptions to test, how to measure progress, how to present findings, how to balance risks such as capabilities advancements, and when to cease or pivot a line of research.

Read more about our theory of change here. An article expanding on our theory of change is forthcoming.

Winter Program Overview

Schedule

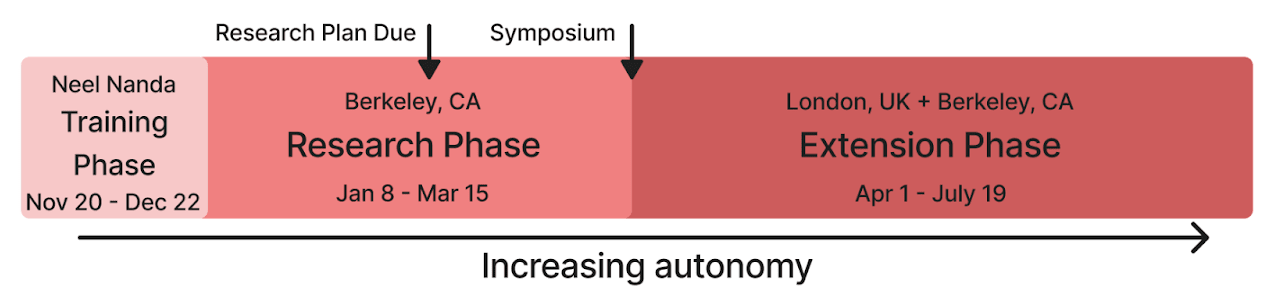

The Winter 2023-24 Program had three phases.

- Training Phase: Neel Nanda’s scholars participated in a month-long remote curriculum, culminating in a Research Sprint, which informed acceptance decisions for the Research Phase. For other scholars, a series of AI Safety Strategy Discussions replaced our traditional Training Phase (see Strategy Discussions below).

- Research Phase: In Berkeley, scholars conducted research under a mentor, submitted a written “Research Plan,” networked with the Bay Area AI safety community, connected with collaborators, and presented their findings to an audience of peers and professional researchers.

- Extension Phase: Many scholars applied to continue their projects in London and Berkeley, with ongoing mentorship and support from MATS.

Mentor Selection

Approach

At a high level of abstraction, MATS faces a complex optimization problem: how should we select mentors and, by extension, scholars that, in expectation, most reduce catastrophic risk from AI? One reason this problem is especially hard is that the value of a portfolio of mentors depends on non-additive interactions; that is, the marginal value of a mentor depends on which other mentors working on similar or complementary research agendas have already been selected. Conscious of these interactions, we aim to construct a “diverse portfolio of research bets” that might contribute to AI safety even if some research agendas prove unviable or require complementary approaches. To make this problem more tractable, we rely on a number of simplifications and heuristics.

Firstly, we take a greedy approach to program impact, focusing on improving the next program rather than committing significant efforts to future programs well in advance. For example, we are unlikely to save significant funding for a future program when we could use that funding for an additional scholar in the upcoming program, particularly as the marginal rejected scholars are generally quite talented. Secondly, we take a mentor-centric approach to scholar selection, beginning with mentor selection and then conducting scholar selection to mentor specifications. In part this is because the number of scholars we can support in a given program depends on the level of funding we receive, which is somewhat determined by funders’ enthusiasm for the mentors in that program. Primarily, however, we adopt a mentor-centric approach because we believe that mentors are the best judges of who should contribute to their research projects.

We ask potential mentors to submit an expression of interest form, which is then reviewed by the MATS Executive. When evaluating potential mentors, we chiefly consider these heuristics:

1. On the margin, how much do we expect this research agenda to reduce catastrophic risk from AI?

2. What do trusted experts think about this individual and their research agenda?

3. How much “exploration value” would come from supporting this research agenda? Could the agenda catalyze a paradigm shift, if necessary?

4. How much research and mentoring experience does this individual have?

5. How would this individual spend their time if they were not supported by MATS?

6. How would supporting this individual and their scholars shape the development of ideas and talent in the broader AI safety ecosystem?

Heuristics 5 and 6 are systemic considerations, where we assess the whole-field impact of supporting mentors suggested by heuristics 1-4. For example, if a mentor is already well-served by an academic talent pipeline or hiring infrastructure, we may not provide significant marginal value. Additionally, if we support too many mentors pursuing a particular style of research, we might unintentionally steer the composition of the AI safety research field, given the size of our program (~60 scholars) relative to the size of the field (~500 technical researchers).
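The non-additive interactions described above, and the risk of over-concentrating on one research style, can be illustrated with a toy model. This is a hypothetical sketch, not how the MATS Executive actually scores mentors: the `Mentor` fields, the standalone scores, and the `overlap_discount` parameter are all illustrative assumptions. Within each agenda, mentors are ranked by standalone value and the k-th one contributes only a discounted share, so a mentor's marginal value depends on who has already been selected.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Mentor:
    name: str
    agenda: str
    standalone_value: float  # hypothetical score, not a MATS metric


def portfolio_value(mentors, overlap_discount=0.5):
    """Toy non-additive value: within each agenda, mentors are ranked by
    standalone value and the k-th (0-indexed) contributes value * discount**k."""
    by_agenda: dict[str, list[float]] = {}
    for m in mentors:
        by_agenda.setdefault(m.agenda, []).append(m.standalone_value)
    return sum(
        v * overlap_discount**k
        for values in by_agenda.values()
        for k, v in enumerate(sorted(values, reverse=True))
    )


def marginal_value(candidate, selected, overlap_discount=0.5):
    """Value added by `candidate`, given the mentors already selected."""
    return (portfolio_value([*selected, candidate], overlap_discount)
            - portfolio_value(selected, overlap_discount))


def greedy_portfolio(candidates, n_mentors, overlap_discount=0.5):
    """Repeatedly add the candidate with the highest marginal value."""
    selected, remaining = [], list(candidates)
    while remaining and len(selected) < n_mentors:
        best = max(remaining, key=lambda cand: marginal_value(cand, selected, overlap_discount))
        selected.append(best)
        remaining.remove(best)
    return selected


# Hypothetical mentors: two on the same agenda, one on a different agenda.
a = Mentor("A", "Mechanistic Interpretability", 10)
b = Mentor("B", "Mechanistic Interpretability", 9)
c = Mentor("C", "Agent Foundations", 6)
print(marginal_value(b, []), marginal_value(b, [a]))  # 9.0 4.5
print([m.name for m in greedy_portfolio([a, b, c], 2)])  # ['A', 'C']
```

In this toy model, mentor B is worth 9 on their own but only 4.5 once A (same agenda) is in the portfolio, so the selector prefers C despite C's lower standalone score. This is one simple way to encode a preference for a diverse portfolio of research bets; the real trade-offs are judgment calls rather than a formula.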

Scholar Allocation

Because MATS was funding-constrained in Winter 2023-24, we could not admit as many scholars as our selected mentors would have preferred. Consequently, the MATS Executive allocated scholar “slots” to mentors based on the value of the marginal scholar for each mentor (up to the mentors’ self-expressed caps). For example, we might have supported an experienced mentor with four scholar slots before supporting a less experienced mentor with one scholar slot. Conversely, allocating one scholar to a new mentor might have offered higher marginal value than providing an eighth scholar to an experienced mentor.
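The slot allocation described above can be sketched as a greedy rule: hand each funded slot to whichever mentor's next scholar has the highest marginal value, stopping at each mentor's cap. This is a minimal sketch under stated assumptions. The `allocate_slots` function and the numeric marginal values are hypothetical; in practice the MATS Executive made these judgments qualitatively. Each mentor's list of values stands for their 1st, 2nd, ... scholar, and its length is the mentor's self-expressed cap.

```python
import heapq


def allocate_slots(marginal_values: dict[str, list[float]], total_slots: int) -> dict[str, int]:
    """Greedily assign funded scholar slots to mentors.

    marginal_values[mentor][k] is the assumed value of that mentor's (k+1)-th
    scholar; the list length doubles as the mentor's cap. Lists are assumed
    non-increasing (diminishing returns per mentor).
    """
    allocation = {mentor: 0 for mentor in marginal_values}
    # Max-heap via negated values; ties are broken by mentor name.
    heap = [(-values[0], mentor) for mentor, values in marginal_values.items() if values]
    heapq.heapify(heap)
    for _ in range(total_slots):
        if not heap:
            break  # every mentor has reached their cap
        _, mentor = heapq.heappop(heap)
        allocation[mentor] += 1
        next_index = allocation[mentor]
        if next_index < len(marginal_values[mentor]):
            heapq.heappush(heap, (-marginal_values[mentor][next_index], mentor))
    return allocation


# Hypothetical marginal values: an experienced mentor (cap 8) and a new mentor (cap 1).
values = {
    "experienced": [9, 8, 7, 6, 3, 2, 1, 0.5],
    "new": [5],
}
print(allocate_slots(values, 5))  # {'experienced': 4, 'new': 1}
```

With five slots, the experienced mentor receives four before the new mentor receives one, and the new mentor's single scholar outranks the experienced mentor's fifth through eighth. When each mentor's marginal values are non-increasing, this greedy rule maximizes the total of the assigned values for a fixed number of slots.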

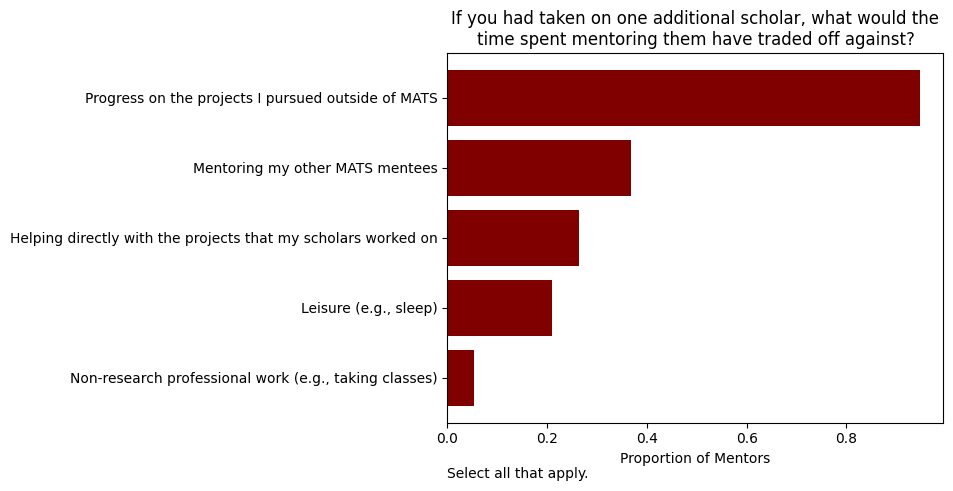

We surveyed mentors about the opportunity cost of taking on an additional scholar.

For 95% of mentors, their time spent on an additional scholar would have traded off against progress on their own projects.

Winter Mentor Portfolio

Of the 23 mentors in the Winter 2023-24 program, 7 were returning from a previous MATS program: Jesse Clifton, Evan Hubinger, Vanessa Kosoy, Jeffrey Ladish, Neel Nanda, Lee Sharkey, and Alex Turner. We welcomed 16 new mentors for the winter: Adrià Garriga Alonso, Stephen Casper, David ‘davidad’ Dalrymple, Shi Feng, Jesse Hoogland, Erik Jenner, David Lindner, Julian Michael, Daniel Murfet, Caspar Oesterheld, David Rein, Jessica Rumbelow, Jérémy Scheurer, Asa Cooper Stickland, Francis Rhys Ward, and Andy Zou.

These mentors represented 10 research agendas:

- Agent Foundations

- Concept-Based Interpretability

- Cooperative AI

- Deceptive Alignment

- Developmental Interpretability

- Evaluating Dangerous Capabilities

- Mechanistic Interpretability

- Provable AI Safety

- Scalable Oversight

- Understanding AI Hacking

Mentors’ Counterfactual Winters

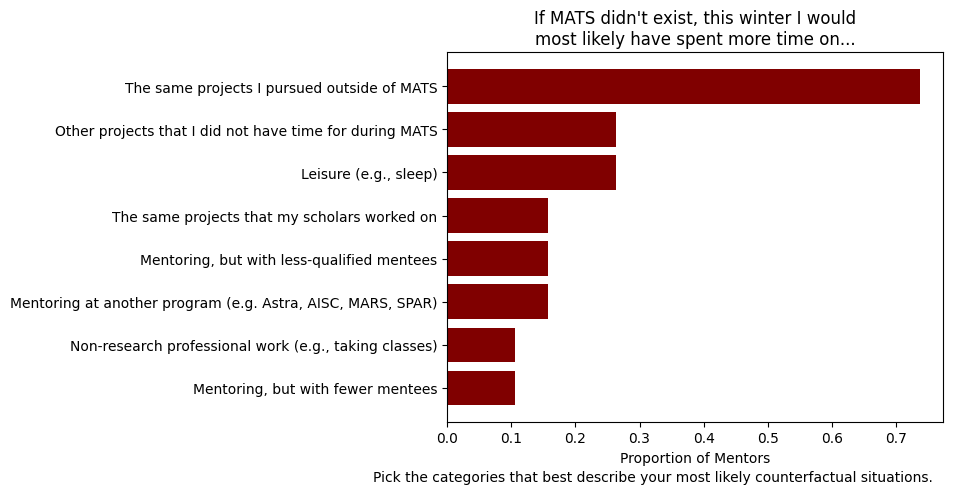

We surveyed mentors about how they would have spent their winters if they had not mentored for MATS.

For 74% of respondents, mentoring traded off against time spent on the same projects they pursued outside of MATS. 26% would have spent some counterfactual time on projects they did not have time for during MATS.

Other Mentorship Programs

Some mentors indicated that if they had not mentored for MATS, they would have spent their counterfactual winters mentoring for a different program. Concurrent with the winter MATS Program, Constellation, a Berkeley AI safety office, ran two research upskilling programs: the Astra Fellowship and the Visiting Researchers Program. Mentors who indicated they would have counterfactually mentored for a different program may have had Constellation or another program in mind.

Scholar Selection

Our application process for scholars was highly competitive. Of 429 applicants, 15% were accepted for the Research Phase.[1] Five of these 63 scholars participated entirely remotely; the rest participated in-person for at least part of the program. There was considerable variance in acceptance rates between mentors (2.6% to 33%). Mentors generally chose to screen applicants with rigorous questions and tasks.[2]

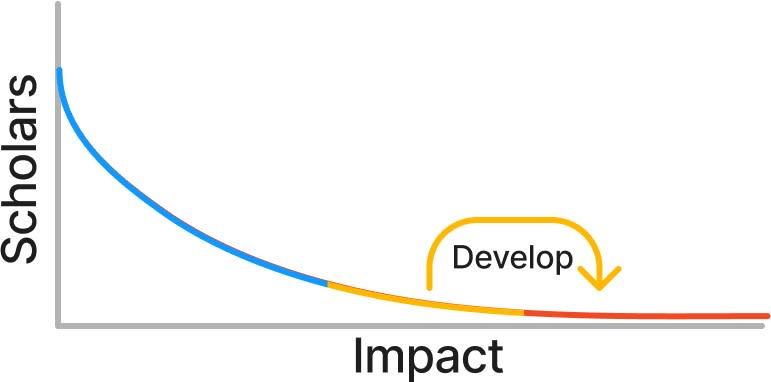

Why employ such a difficult application process? First, we believe that the distribution of expected impact from prospective scholars is long-tailed, such that most of MATS’ expected impact can be attributed to identifying and developing talent among the most promising applicants.

Second, we are bottlenecked on scholar funding and mentor time, so we cannot accept every good applicant. For these two reasons, it is imperative that our application process achieves resolution in the talent tail. From our Theory of Change:

We believe that our limiting constraint is mentor time. This means we wish to have strong filtering mechanisms (e.g. candidate selection questions) to ensure that each applicant is suitable for each mentor. We’d rather risk rejecting a strong participant than admitting a weak participant.

In particular, given that 74% of mentors would otherwise have spent their mentoring time on their own (highly valuable) research, we feel justified in our high bar for scholar acceptance. We additionally believe, based on our conversations with past applicants and scholars, that the challenging selection problems are often seen as a fun and useful skill-building exercise.

Our rigorous application process reduces noise in our assessments of applicant quality, even at the cost of discouraging some potentially promising candidates from applying. Additional filters reduced the number of scholars admitted to the Extension Phase from the Research Phase.[3]

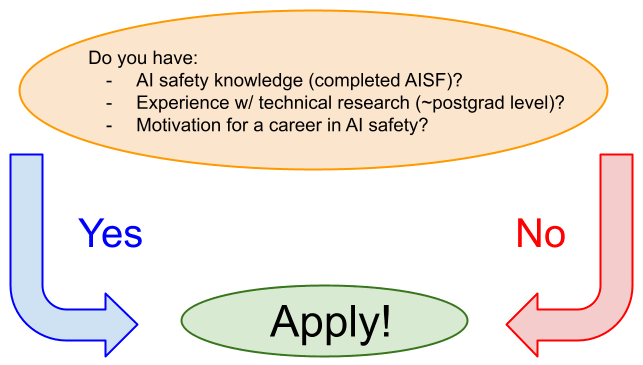

Strong applicants have demonstrated their abilities in terms of the depth, breadth, and taste dimensions described above. During pre-program recruiting, we informed prospective applicants that our ideal candidate possesses a breadth of AI safety knowledge equivalent to having completed AISF’s Alignment Course, experience with technical research at a postgraduate level (e.g., in CS, ML, math, or physics), and a motivation to pursue a career in AI safety to mitigate catastrophic risk. We clarified that, for some mentors, engineering experience can substitute for research experience. We also encouraged people who did not fit these criteria to apply, noting that many past applicants who doubted their chances had been accepted.

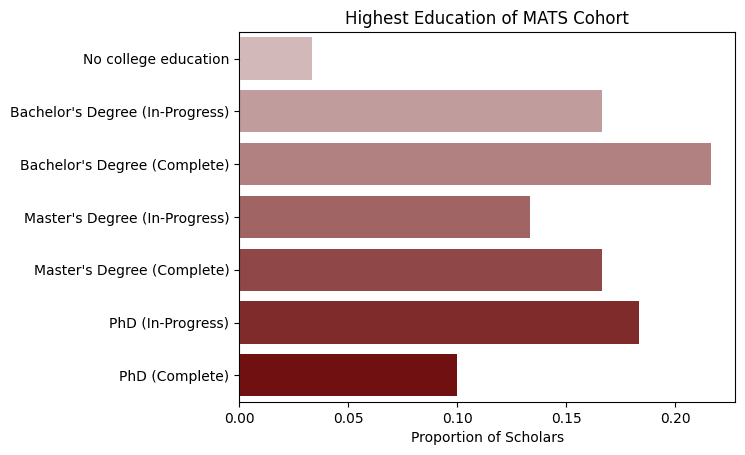

Educational Attainment of Scholars

A variety of educational and employment backgrounds were represented among the admitted cohort. 3% of scholars had no college education, 38% of scholars had at most a bachelor’s degree or were in college, 30% had at most a master’s degree or were in a master’s program, 28% had a PhD or were in a PhD program. Almost half of scholars were students.

The high level of academic and engineering talent entering AI safety continues to impress us, but we remain committed to increasing outreach to experienced researchers and engineers.

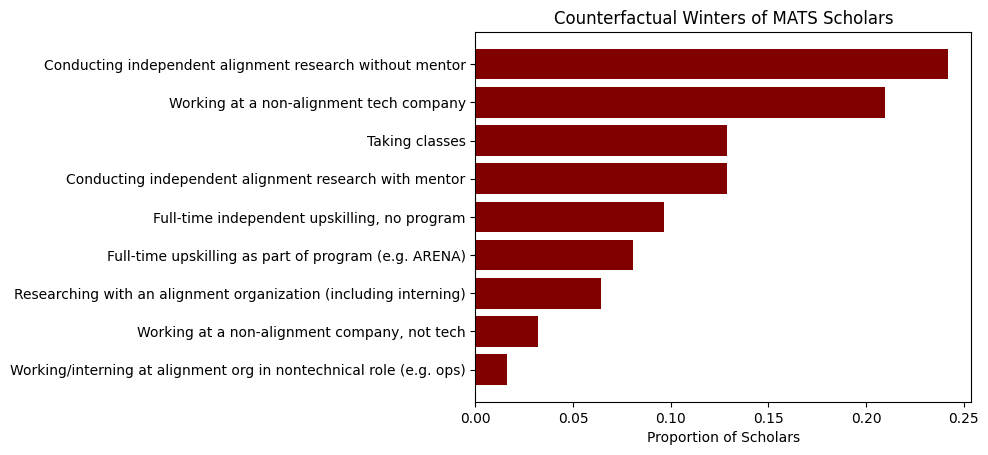

Scholars’ Counterfactual Winters

At the start of the program, we prompted scholars, “If MATS didn't exist, this winter I would most likely be…”, instructing them to “pick the category that best describes your most likely counterfactual situation.”

Of the 8 scholars who would have conducted mentored alignment research in the absence of MATS, 4 are undergraduate students, and 3 are pursuing a graduate degree.

Elaborating on their alternative winter plans, many respondents mentioned non-safety technical roles, coursework, and independent research:

- “Day job, machine learning research engineer to publish papers/develop products.”

- “Working a regular software development job”

- “I would probably have taken a job in industry as a research engineer at an AI lab (although this may not have been realistic). Alternatively I may have done an internship in an alignment organisation.”

- “I'd be doing similar research but more independently and with less mentorship.”

- “More school + possibly working under a phd student at school on a less exciting/alignment-relevant project”

- “The Astra fellowship. If that didn't exist either, I'd be doing my PhD research”

- “I'd research AI safety and alignment with my advisor and collaborators”

Engineering Tests

In our Summer 2023 Retrospective, we expressed our intention to improve our application filtering process.

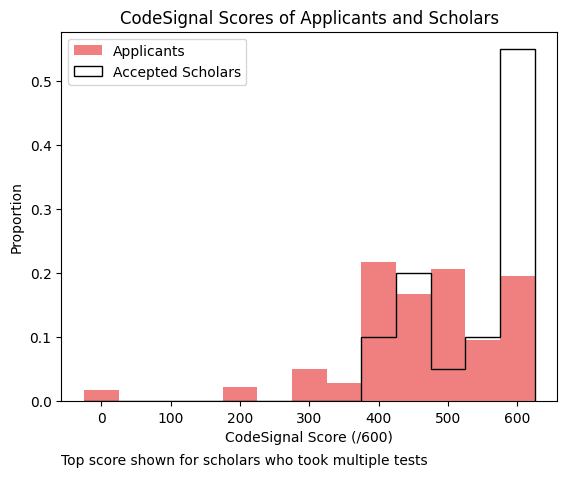

One component of a better filter for some streams is increased screening for software engineering skill. This can be a costly evaluation process for mentors to run themselves, so for the Winter 2023-24 Program we contracted a SWE evaluation organization to screen applicants for empirical ML research streams.

At the suggestion of Ethan Perez, we contracted CodeSignal to administer engineering tests, which focused on general programming ability, rather than ML experience. Ideally, every applicant to a mentor who focused on empirical research would have been pre-screened with a CodeSignal test, saving the mentor time on scholar selection. Due to budget constraints, we only had enough tests for 20-32% of each mentor’s applicants, so mentors had to manually review each application to determine who would receive a CodeSignal test. For the next program, we expect to administer CodeSignal tests more widely (see Pre-Screening with CodeSignal below).

Of the accepted scholars, 40% achieved perfect CodeSignal scores of 600/600, compared to 15% of applicants. Notably, some applicants who achieved perfect scores were not accepted into the Research Phase. Two mentors disproportionately accounted for the accepted scholars with lower scores. One of these mentors rejected an applicant with a perfect score and an applicant with a near-perfect score, but indicated in a later survey that they wished their applicants had more programming experience.

Stipends

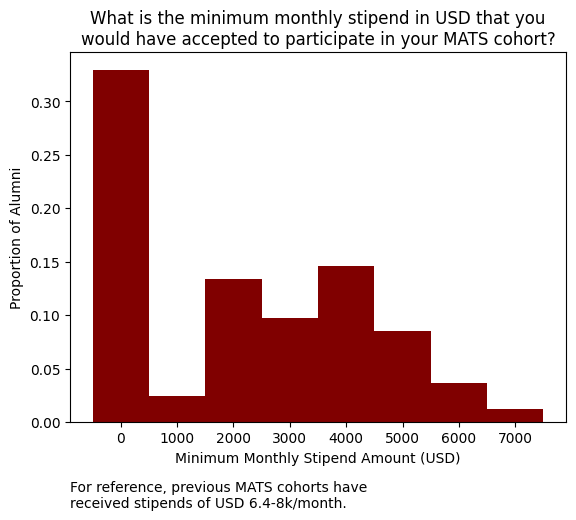

Financial barriers can prevent promising researchers from entering AI safety, so providing grants (referred to here as stipends) to scholars is an important component of programs like ours. While MATS does not provide funding directly to scholars (except via office and housing support and reimbursements for travel and computing costs), AI Safety Support has offered to provide grants to scholars who complete the program. During the 8-week Summer 2023 program, stipends were set at $40/h. Following that program, surveys of our alumni revealed that many would have done MATS for a lower stipend.

The average surveyed alumnus would have participated in MATS for a stipend of $2160/month, or $13.50/h at 40 h/week. Note that 35% of the surveyed alumni said they would have participated in MATS for free; if we exclude these alumni, the average minimum stipend was $3486/month, or $21.79/h at 40 h/week. MATS and AI Safety Support ultimately chose a stipend of $4800/month, or $30/h, for the Winter 2023-24 Program because it would have satisfied 85% of alumni, was more than double the average minimum stipend, and was still larger than that offered by comparable academic mentorship programs. Constellation subsequently chose the same rate for those participating in their Winter programs.
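The monthly and hourly figures above are related by a fixed conversion. The text's own numbers ($2160/month = $13.50/h, $4800/month = $30/h) imply 40 h/week over 4 weeks, or 160 h/month. The sketch below assumes that convention; the constant and function names are illustrative, not part of any MATS tooling.

```python
HOURS_PER_WEEK = 40
WEEKS_PER_MONTH = 4  # implied by the conversions in the text (160 h/month)
HOURS_PER_MONTH = HOURS_PER_WEEK * WEEKS_PER_MONTH


def monthly_to_hourly(monthly_usd: float) -> float:
    """Convert a monthly stipend to an hourly rate at 160 h/month."""
    return monthly_usd / HOURS_PER_MONTH


def hourly_to_monthly(hourly_usd: float) -> float:
    """Convert an hourly rate to a monthly stipend at 160 h/month."""
    return hourly_usd * HOURS_PER_MONTH


for label, monthly in [
    ("average minimum, all alumni", 2160),
    ("average minimum, excluding 'free' answers", 3486),
    ("Winter 2023-24 stipend", 4800),
]:
    print(f"{label}: ${monthly}/month = ${monthly_to_hourly(monthly):.2f}/h")

# The chosen rate is more than double the all-alumni average minimum ($13.50/h).
assert monthly_to_hourly(4800) > 2 * monthly_to_hourly(2160)
```

Under the same 160-hour assumption, `hourly_to_monthly(40)` gives $6400/month for the previous $40/h rate.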

We followed up with some of these alumni to ask for explanations of their low numbers. Some cited savings, alternative funding sources, jobs to return to, the in-kind benefits MATS provides (housing, office, food, etc), and expectations of low compensation as they pivoted into AI safety. One alumnus commented that, while they would have done MATS without a stipend, the stipend was an honest signal that “this is a serious programme that expects to get serious people . . . not just fresh grads with nothing else to do.”

We are cognizant that these alumni are reporting with the benefit of hindsight, so their answers may not reflect the positions of would-be applicants who do not yet know how valuable MATS will be for them. Moreover, these responses are subject to survivorship bias: we could never observe alumni for whom the stipend was too low, because such people would not have joined the program. But these caveats would still apply no matter how high a stipend we chose. Our alumni responses indicated that we could afford to reduce the stipend without jeopardizing the talent of our applicant pool.

For a fixed budget, setting a lower stipend allows MATS to fund more scholars.[4] At $30/h, MATS was able to accept ~18 more scholars than we could have accommodated under the previous program’s rate. Anecdotally, we have heard of one individual who was deterred from applying but would have applied if the stipend had been higher. Given the tradeoff we face, we expect that there will always be cases like this, even under the optimal stipend amount.
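The fixed-budget trade-off can be made concrete with a toy model. This is a sketch with hypothetical inputs: the budget, stipend hours per scholar, and non-stipend cost per scholar below are illustrative assumptions, not MATS figures, so the model does not reproduce the ~18 number above. It shows that lowering the hourly rate from $40 to $30 stretches a stipend-only budget by a factor of 40/30, and that the gain shrinks as other per-scholar costs (housing, office, compute) grow.

```python
def scholars_fundable(budget_usd: float, hourly_rate: float, hours_per_scholar: float,
                      other_cost_per_scholar: float = 0.0) -> int:
    """Whole scholars a fixed budget covers (toy model with hypothetical inputs)."""
    per_scholar = hourly_rate * hours_per_scholar + other_cost_per_scholar
    return int(budget_usd // per_scholar)


# Hypothetical inputs: a $1M budget and 400 stipend hours per scholar.
for other in (0, 8000):
    at_40 = scholars_fundable(1_000_000, 40, 400, other)
    at_30 = scholars_fundable(1_000_000, 30, 400, other)
    print(f"other costs ${other}/scholar: {at_40} at $40/h vs {at_30} at $30/h")
```

With no other costs, the hypothetical budget funds 62 scholars at $40/h and 83 at $30/h; adding $8000 of other costs per scholar narrows that to 41 versus 50.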

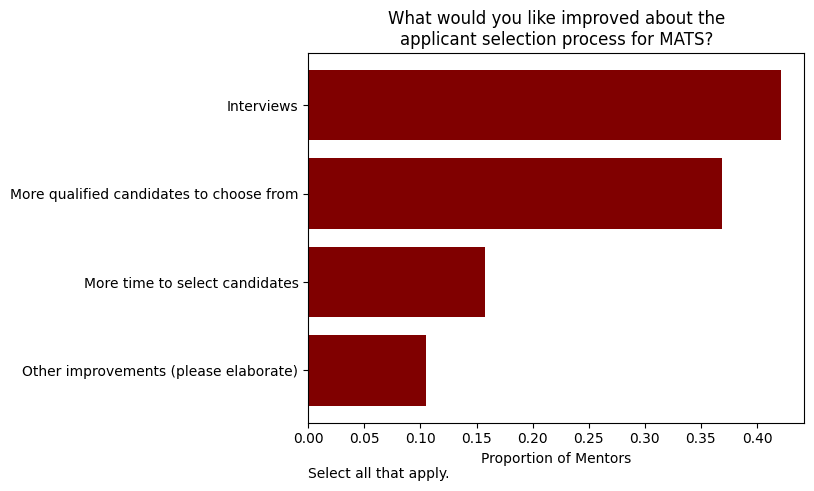

Mentor Suggestions

We surveyed mentors about their experiences with our Scholar Selection process. Mentors recommended some improvements:

Mentors elaborated on “Other improvements”:

- “I got some mixed messages about how available the coding interview option is. So I spent some time thinking about which of my applicants I'd want coding interviews for and then got a response saying sth along the lines of "yeah, actually we really only want to do this in cases where it's really necessary" and then no coding interviews happened in the end.”

- “I followed a relatively rigorous process (spreadsheet with a bunch of scores, aggregated in some way) for evaluating applicants to avoid [conflicts of interest (CoIs)] (because I knew/was friends with) at least one of the applicants. (I was asked to follow such a process when I mentioned CoIs.) This was kind of a pain. It took a lot of time, without offering huge benefits. Anyway, to some extent this was self-inflicted. I probably could have come up with a simpler system.”

- “Better coordination across streams.”

One mentor followed up on their answer to say “I selected "more qualified candidates to choose from" because that always seems better, but I want to be clear that I'm already impressed by MATS scholars (and think it's definitely worth my time mentoring them).”

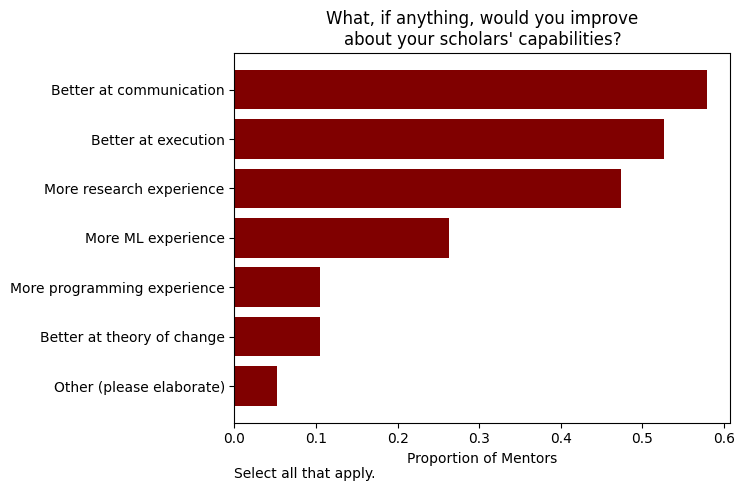

We also asked mentors how their scholars could have been better suited for MATS.

One mentor elaborated, “I mean I'd love for my scholars to be better at everything for sure! But at some point they wouldn't want to be my scholars anymore. I think as it was my scholars were very good. One or two are hidden talents where lack of e.g. communication is blocking them from being a great researcher but otherwise they would be.”

To improve scholars along these dimensions, MATS could offer targeted training, but we could also strengthen the quality of the applicant pool and improve our selection process to choose the most qualified applicants.

Neel Nanda’s Training Phase (Nov 20-Dec 22)

In past programs, MATS has used AI Safety Fundamentals’ Alignment 201 course to prepare incoming scholars for the Research Phase. Due to time constraints and scholar feedback, we replaced Alignment 201 with a custom curriculum that scholars completed throughout the Research Phase (see Strategy Discussions below).

Neel Nanda followed an expedited schedule to run his Training Phase, which plays an indispensable role in his scholar selection process and is a valuable program in its own right. Neel accepted 30 scholars into the Training Phase, of whom 10 progressed to the Research Phase.[5] The first three weeks included live sessions of Neel doing research, brainstorming open problems, and lecturing on Mechanistic Interpretability (core techniques, SAEs); reading groups for papers (Mathematical Framework, Indirect Object Identification, Toy Models of Superposition, and Towards Monosemanticity); discussion groups on topics like “Is Mech Interp Useful?”; and remote opportunities to connect with collaborators. Participants worked together on research challenges, including extensive pair programming.

The final two weeks were dedicated to a Research Sprint, which participants completed in pairs. Neel made acceptance decisions for the Research Phase primarily on the basis of performance in the Sprint. We think that participating in Neel’s Training Phase was likely worth it even for those scholars who did not progress to the next phase, as evidenced by the large number of trainees who elected to audit Neel’s Training Phase without the possibility of progressing and by testimonials from past trainees.[6] 24 of Neel’s trainees received a prorated stipend for their participation ($4.8k for this program); 15 others participated in the Training Phase without a stipend due to funding constraints. Accepted pairs of scholars continued work on their Sprint projects in the Research Phase.

Research Phase Elements (Jan 8-Mar 15)

While mentorship is the core of MATS, additional program elements supply other sources of learning and upskilling. Guest researchers host seminars to deliver technical information to scholars, workshops teach research tools that scholars can apply in their projects, and Research Management aims to improve working relationships between scholars and mentors. The next sections elaborate on each of these elements, along with six more elements that fill out our program offerings: Milestone Assignments, the Lighthaven Office, Strategy Discussions, Networking Events, Social Events, and Community Health.

Mentorship

We believe conducting research under an experienced mentor is a crucial input to the development of research leads. Mentors meet with their scholars at least once a week, and some meet more frequently. Mentors differ in their priorities, styles, and expectations. To communicate these differences to applicants, each mentor composed a personal fit statement. For example, the NYU ARG mentorship team wrote:

Each mentor will likely lead separate projects, each of which will have a small team of mentees, although mentees and mentors will help out with/provide feedback to other projects whenever it is useful to do so. Mentees will be able to choose a project/mentor at the beginning of the program (or propose their own project, assuming it aligns with mentor interest). Mentorship for scholars will likely involve:

- 1 hour weekly meetings for each project, and occasional 1:1 meetings with a mentor + "all hands" meetings with all participants in the stream

- Providing detailed feedback on write-up drafts

- Slack response times typically ≤ 48 hours

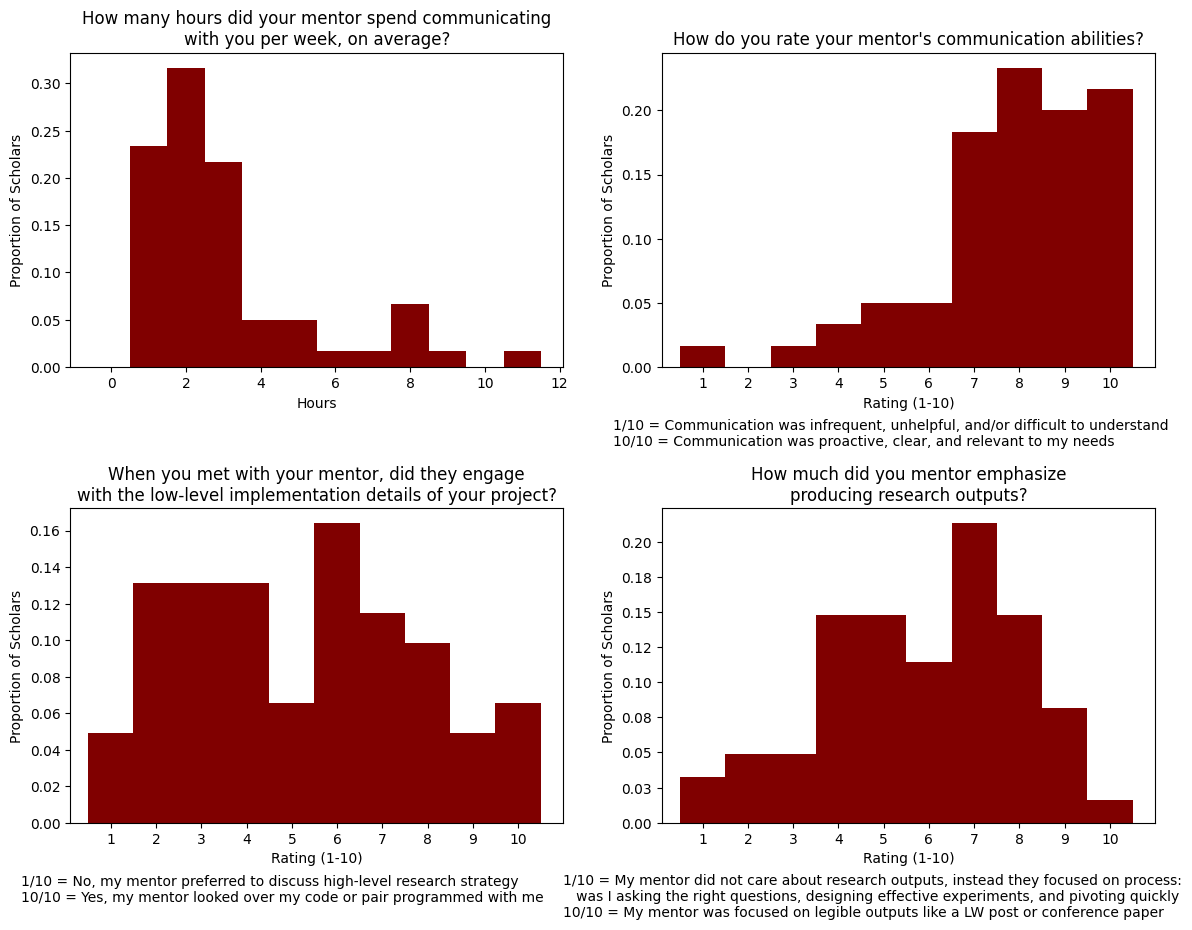

According to scholar ratings, the median scholar’s mentor spent 2.0 hours per week communicating with them; the average was 3.0 hours per week.

The average mentor was effective at communicating (8.0/10), engaged with some details of scholars’ projects while delivering high-level feedback on research directions, and balanced an emphasis on research outputs with an emphasis on process.

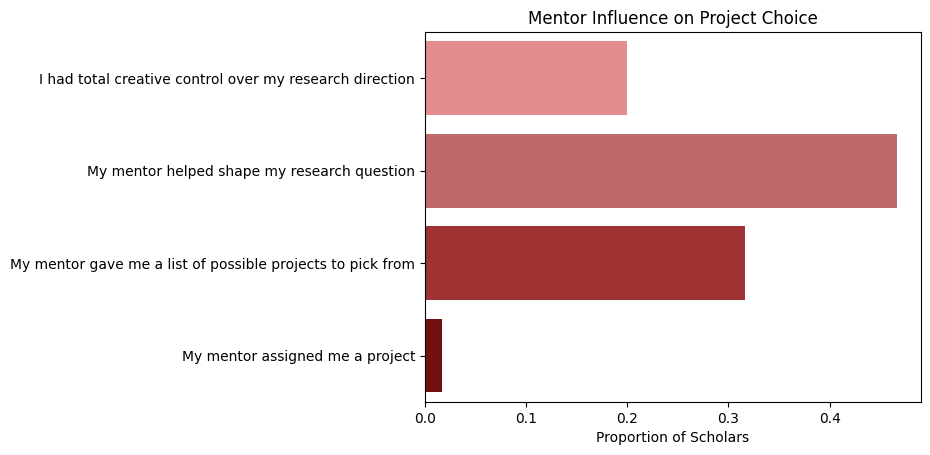

Mentors also differed in their influence on scholars’ project selections.

The most common arrangement involved mentors “shaping” their scholars’ research, but some mentors had a stronger hand in project selection, assigning topics or presenting a list of possibilities.

Research Management

In previous programs, MATS offered one-on-one “Scholar Support” sessions dedicated to research planning, career planning, productivity improvements, and communication advice. Scholar Support took on some responsibilities that mentors often bear by default, such as brainstorming, rubber-ducking, conflict resolution between scholars, and some goal-setting, which freed up mentors to focus on their comparative advantages: mentoring and providing technical expertise. Scholar Support additionally offered coaching not typically expected of mentors, such as accountability reminders, helped scholars get more value out of their mentor meetings, and assisted scholars with program milestones. Scholar Support Specialists did not typically meet with mentors, just scholars and MATS leadership.

However, the MATS team noticed gaps in the support Scholar Support was able to offer, so during the Autumn 2023 Extension Phase, the MATS Program Coordinator (London) and mentor Ethan Perez piloted an alternative model of scholar/mentor support we call Research Management. Instead of opt-in Scholar Support meetings emphasizing issues indirectly related to scholars’ research, such as time-management and accountability, MATS staff held a mandatory weekly check-in with each of Ethan’s scholars focused more directly on research questions:

- How is your project going?

- What are your main bottlenecks at the moment?

- Any updates on your compute usage and needs at the moment?

- Do you have any feedback for Ethan this week?

The Research Manager distilled scholars’ responses into weekly reports, which apprised Ethan of his scholars’ progress and challenges. In this way, the Research Manager cultivated a relationship with Ethan and gained visibility across all of Ethan’s scholars. These features marked a departure from the Scholar Support model, under which two scholars with the same mentor could meet with two different members of the Scholar Support team, making it difficult to identify common themes.
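The structural advantage described above, one Research Manager seeing every check-in for a given mentor's scholars, can be sketched as a simple aggregation. This is a hypothetical illustration: the `CheckIn` schema (modeled on the four check-in questions), the `weekly_reports` function, and the sample names are assumptions, not MATS tooling. Grouping by mentor makes it possible to flag bottlenecks that recur across scholars, which is difficult when each scholar's notes are held by a different staff member.

```python
from collections import Counter, defaultdict
from dataclasses import dataclass, field


@dataclass
class CheckIn:
    """One weekly check-in, modeled on the four questions above (hypothetical schema)."""
    scholar: str
    mentor: str
    progress: str
    bottlenecks: list[str] = field(default_factory=list)
    compute_needs: str = ""
    mentor_feedback: str = ""


def weekly_reports(check_ins: list[CheckIn]) -> dict[str, dict]:
    """Group check-ins by mentor so one Research Manager sees all of a mentor's scholars."""
    by_mentor: dict[str, list[CheckIn]] = defaultdict(list)
    for c in check_ins:
        by_mentor[c.mentor].append(c)
    reports = {}
    for mentor, items in by_mentor.items():
        # Count each bottleneck once per scholar, then keep those raised by 2+ scholars.
        counts = Counter(b for c in items for b in set(c.bottlenecks))
        reports[mentor] = {
            "progress": {c.scholar: c.progress for c in items},
            "shared_bottlenecks": sorted(b for b, n in counts.items() if n > 1),
            "compute_needs": {c.scholar: c.compute_needs for c in items if c.compute_needs},
            "feedback_for_mentor": [c.mentor_feedback for c in items if c.mentor_feedback],
        }
    return reports


# Hypothetical week of check-ins.
week = [
    CheckIn("Scholar 1", "Mentor X", "baseline done", ["GPU access", "unclear eval"]),
    CheckIn("Scholar 2", "Mentor X", "lit review", ["GPU access"], mentor_feedback="more async feedback"),
    CheckIn("Scholar 3", "Mentor Y", "writing up", ["unclear eval"]),
]
print(weekly_reports(week)["Mentor X"]["shared_bottlenecks"])  # ['GPU access']
```

Note that "unclear eval" is not flagged for either mentor: it appears twice in the data, but across two different mentors' streams, which is exactly the kind of cross-scholar pattern a per-mentor view is designed to separate.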

Our foray into Research Management exceeded our expectations. Ethan testified,

I think [Research Management check in notes are] adding like... almost all of the value of my 1:1 check-ins with [scholars]... Amazingly helpful, brought up a bunch of great considerations/flags that are great to know (and would’ve been great to know earlier, so I only wish we started this sooner).

Due to this success, we decided to primarily offer Research Management instead of Scholar Support. In our previous retrospective, we anticipated this shift:

Scholar Support is also planning to help most mentors manage scholar research for the Winter 2023-24 cohort. . .

- Mentors would benefit from the shared information about scholar status;

- Scholars would benefit from their mentors’ goals being understood by the Scholar Support team, especially as the mentors’ research directions change throughout the program.

In offering research management help to mentors, Scholar Support will take a more direct role in understanding research blockers, project directions, and other trends in scholar research, and summarizing that information to mentors with scholar consent.

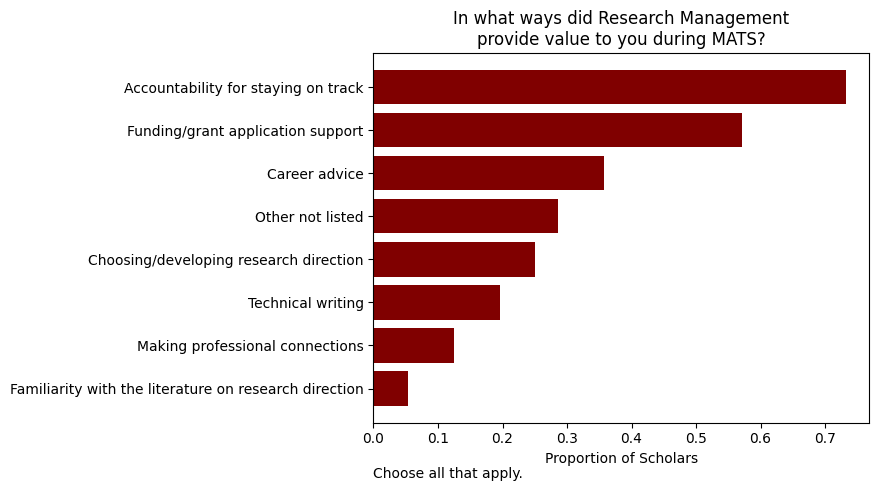

Of the 56 scholars who filled out our final survey, 92% met with a Research Manager at least once. These scholars reported benefiting from Research Management in multiple ways.

Some of the “Other” ways that Research Management helped scholars included:

- “Helping me make some decisions and encouraging me to do some things I was putting off. Following up on those things to make sure I did them. Helping me unblock myself by teaching me / having me try some introspection techniques.”

- “Encouraged me to think about theory of change and higher level motivation for our research direction. Provided feedback for grant applications and presentations. I found it useful to recap what we had achieved each week and what our next steps would be.”

- “Emotional support! That really mattered. Not in, like, a therapy way, just by being there for us, especially for one stream-mate who was struggling with emotional stability.”

- “[My RM] often had good advice on productivity and ideas for impactful high ROI actions that I could take, and generally reflecting on how things were going. It was also very useful that [my RM] knew how other scholars had experienced previous MATS programs, so that I could be more deliberate about how I wanted to spend my time here.”

- “It was useful to me in various ways, but probably the top one was having someone I can safely talk with about various dilemmas and confusions and have help gaining clarity.”

- “He constantly asked questions to get to the core of trying to figure out what I'm *actually* precisely being slowed down by. One realization I had while talking to him is I'm almost constantly sleep-deprived; this is a thing I'll need to improve in the long term.”

- “...She also kept me up to date with relevant communication from MATS and gave me a space to question what I was working on and what direction I was going in. In addition, she helped me set up my cloud computing! She was great and improved my MATS experience.”

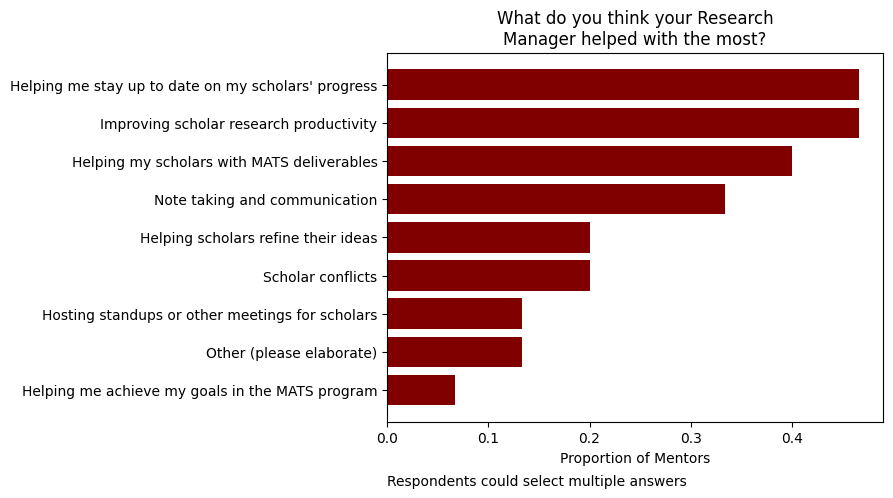

We also asked mentors how Research Management helped them:

One mentor elaborated on the “other” support, saying, “Specifically I gather the RM gave some useful structure to executing my scholars' projects, which I didn't quite have the ability or time for.”

Seminars & Workshops

As foreshadowed in our previous retrospective, we reduced the volume of seminar offerings for the Winter program. MATS hosted 12 seminars with guest speakers: Buck Shlegeris (twice), Lennart Heim, Fabien Roger, Adam Gleave, Neel Nanda, Vanessa Kosoy, Jesse Hoogland, Evan Hubinger, Marius Hobbhahn, David Krueger, and Owain Evans.

Additionally, some scholars invited their own guests to present, including Logan Riggs, Tomáš Gavenčiak, and Jake Mendel.

We held workshops on EA Global Conference Preparation, Research Idea Concretization (with Erik Jenner), Theories of Change (with Michael Aird), Preventing and Managing Burnout (with Rocket Drew), and Language Model Evals and Workflow Tips (with John Hughes).

As with seminars, scholars organized workshops of their own, including speed meetings, a Neuronpedia interpathon, and a career planning workshop.

Milestone Assignments

Research Plans

Halfway through the Research Phase, every scholar was required to submit a Research Plan (RP), outlining the AI threat model or risk factor motivating their research, a theory of change to address that threat model, and a plan (based on SMART principles) for a project to enact that theory of change. Using this rubric, 10 MATS alumni graded the RPs to offer scholars constructive feedback, provide MATS with an internal metric of success, and occasionally inform Extension Phase acceptance decisions (see Extension Phase below). To help scholars succeed on this milestone, the Research Management team held an RP workshop and an office hours session.

We required Research Plans to develop scholars’ ability to contribute to goal-oriented research strategy and to make it easier for scholars to write a subsequent grant proposal. Many scholars repurposed components of their RPs in applications to the Long-Term Future Fund (LTFF) for post-MATS grant support, primarily to fund their research during the MATS Extension Phase. Thomas Larsen, an LTFF grantmaker, held a workshop to demystify the LTFF’s decision process and funding constraints.

Symposium Presentations

The Research Phase concluded with a two-day Scholar Symposium, during which scholars delivered 10-minute talks on their research projects to their peers and members of the Bay Area AI safety community. In previous programs, we compressed the Symposium into one day by holding simultaneous presentations in two different rooms. We expanded the event to two days so that attendees, including scholars, would not have to choose between presentations, and to ease the operational burden of hosting the event. Attendees graded talks according to a rubric, providing scholars with constructive feedback and the MATS team with evaluation data (see Milestone Assignments below). We helped scholars practice their talks and held office hours to answer questions about the assignment. During the week of the Symposium, we also hosted “PowerPoint Karaoke,” at which scholars presented someone else’s slides.[7]

We also held weekly lightning talk sessions, which afforded scholars an opportunity to develop their presentation skills in a low-stakes setting.

Lighthaven Office

The Research Phase took place at the Lighthaven campus in Berkeley. While some scholars lived in one Lighthaven building during the Summer 2023 program, this was our first program using the full property and using it for office space in addition to housing. MATS shared the space with the Lightcone Infrastructure team and a few independent researchers. As a renovated inn, Lighthaven possesses unique features that distinguish it from a traditional office: the property includes six detached buildings, an outdoor event space, common spaces conducive to focused work, and an extensive library.

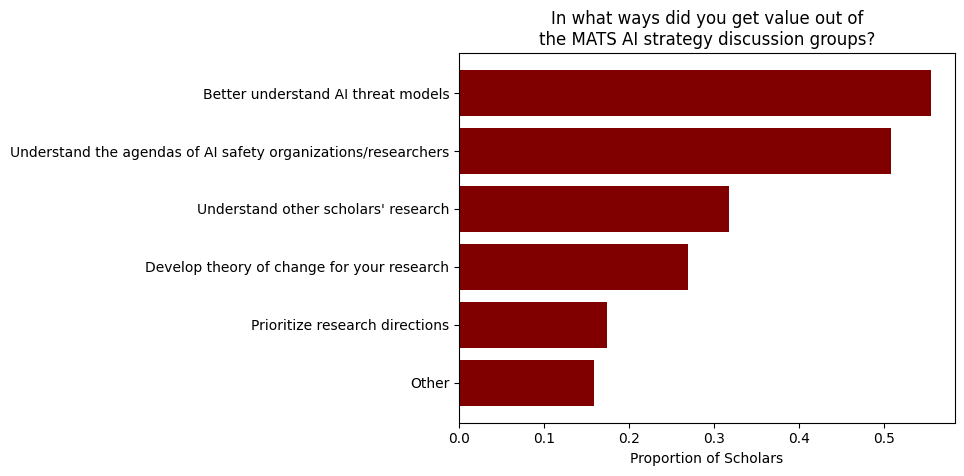

Strategy Discussions

For this program, we introduced a 7-week AI strategy discussion group series to substitute for our typical pre-program Training Phase. The Alignment 201 curriculum we used in past programs was designed to respect multiple constraints, including remote participation, moderate time commitments, limited facilitation, varied backgrounds, varied career interests, and wide diffusion. Since these constraints do not necessarily apply to MATS, it is unlikely that a curriculum designed under them is the optimal curriculum for training MATS scholars. We structured our new material around the key AI safety strategy cruxes that we observed were relevant for MATS scholars’ research.

During each of the first 7 weeks, scholars participated in a facilitated discussion group on an opt-out basis. From our curriculum post:

Each strategy discussion focused on a specific crux we deemed relevant to prioritizing AI safety interventions and was accompanied by a reading list and suggested discussion questions. The discussion groups were facilitated by several MATS alumni and other AI safety community members and generally ran for 1-1.5 h.

The topics of each week were:

- How Will AGI Arise?

- Is the World Vulnerable to AI?

- How Hard Is AI Alignment?

- How Should We Prioritize AI Safety Research?

- What Are AI Labs Doing?

- What Governance Measures Would Reduce AI Risk?

- What Do Positive Futures Look Like?

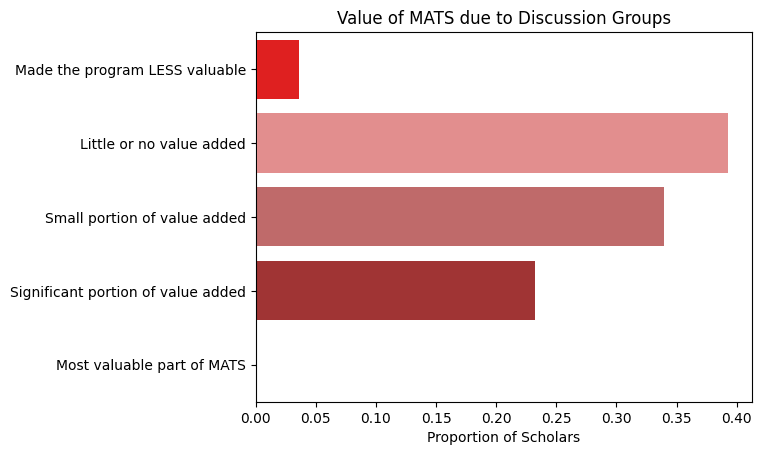

The median scholar attended 4 of the 7 strategy discussions. 51% of scholars would have preferred “fewer” discussions, and 5.7% would have preferred “many fewer.” Based on this and other feedback, we intend to make discussion groups opt-in for scholars in future cohorts (see Modified Discussion Groups below). Discussion groups benefited scholars in different ways:

One scholar elaborated on the “Other” value: “Because of the random groups, I met and talked with more MATS scholars than I otherwise would have. There are also a few scholars who are not so talkative on topics other than AI safety, so it was nice to speak to them during the AI strategy discussion groups.”

Networking Events

We believe that a large benefit of holding the Research Phase in Berkeley and the Extension Phase in London is the ease of connecting scholars to the researchers who work in these global AI safety hubs. To facilitate such connections, MATS organized a number of networking events:

- Alignment Collaborator Speed Meetings (held during the EA Global: Bay Area conference);

- Lunch at FAR Labs;

- Dinner at Constellation;

- AI Impacts Dinner (organized by AI Impacts, held at Lighthaven);

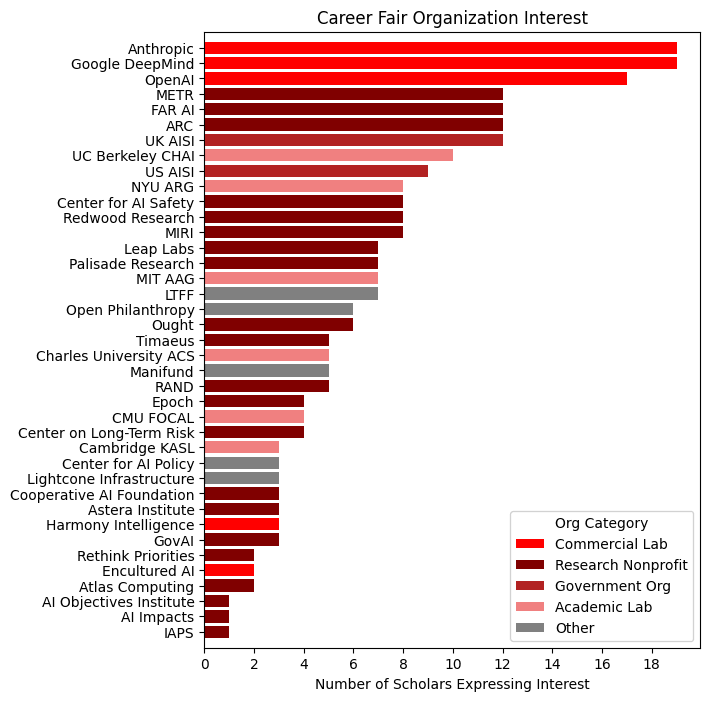

- Career Fair featuring 12 organizations;

- Three evening networking socials open to the community.

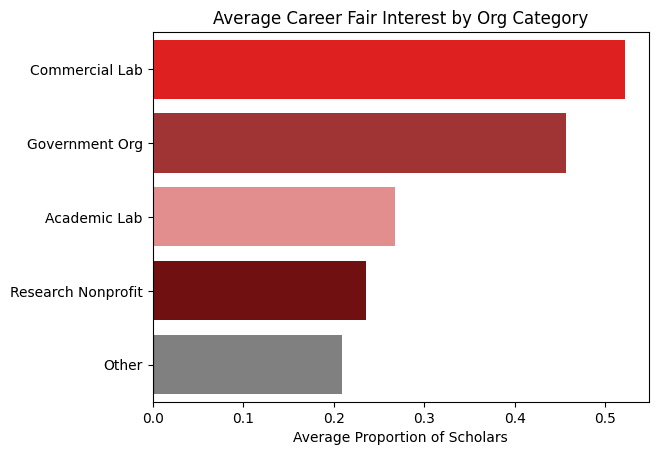

Prior to our career fair, 23 scholars submitted a survey indicating which organizations they would most like to see at the event. Their responses form a snapshot of the current career interests of aspiring AI safety researchers.

To obtain a sense of the popularity of the organization categories, we show how many scholars, out of 23, voted for the average organization in each category:

This distribution is similar to the one we saw for last summer’s career fair, with the exception of the government organizations, which did not exist at the time.

Social Events

In addition to the networking socials, we held a number of social events, including outings for scholars from underrepresented backgrounds (younger, older, religious, women, people of color, and LGBTQ+). Multiple scholars took the initiative to organize social events, including music nights, movie nights, an origami social, DnD games, exercise outings, excursions into San Francisco, and a hike.

Community Health

Scholars had access to a Community Manager to discuss community health concerns, such as conflicts with other scholars or their mentors, and to provide emotional support and referrals to health resources. We believe that community health concerns like imposter syndrome and undiagnosed conditions like ADHD can hold back promising researchers entering AI safety. Furthermore, interpersonal conflicts, mental health concerns, and a lack of connection can inhibit research productivity by contributing to a negative work environment, draining cognitive resources that scholars could otherwise allocate toward research, and precluding fruitful collaborations. It is important for programs like MATS to provide support in these areas, while air-gapping evaluation and community health when appropriate, so that scholars are incentivized to seek help when they need it without fear of adverse effects on themselves or others.

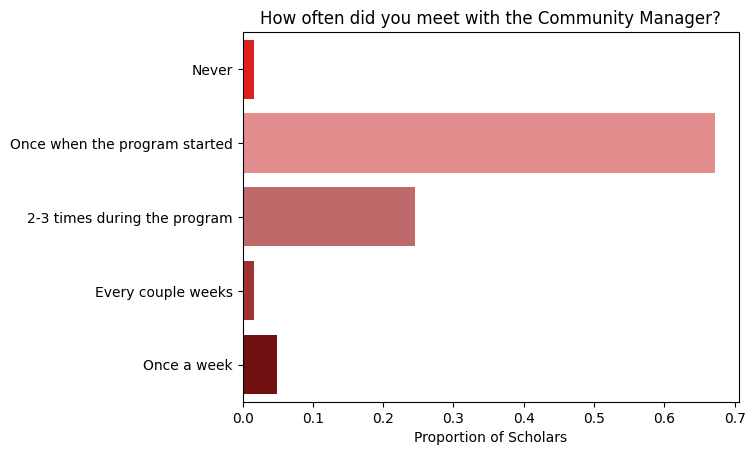

A small number of scholars met with the Community Manager frequently, while 67% of scholars met with the Community Manager just once.

Even scholars who do not seek out community health support benefit from the existence of this safety net (see Community Health below).

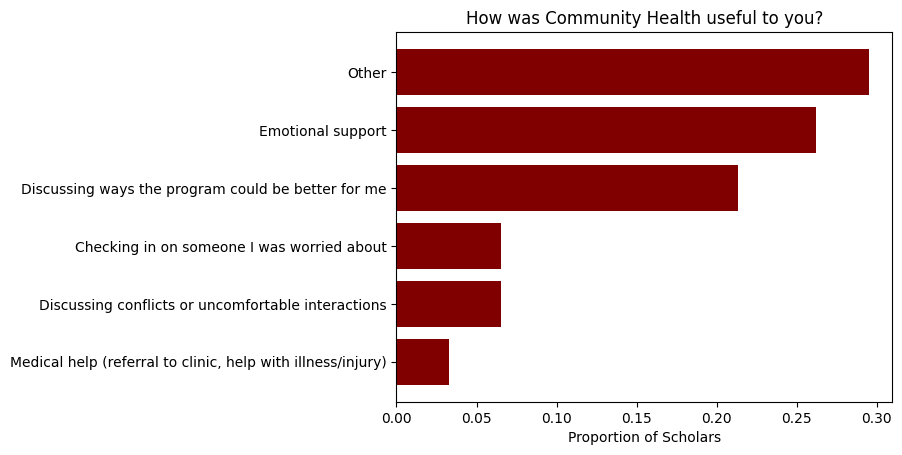

The Community Manager aims to equip scholars with the skills and resources to work productively and sustainably after they leave MATS. Scholars reported how the Community Manager helped them:

Some of the “Other” ways that Community Health helped scholars included:

- “helping to situate myself within the landscape of the AI safety community”

- “Measuring how much I was working”

- “I once asked [the Community Manager] for advice on whether to be more positive vs realistic in discussions of other scholars projects.”

- “I thought the burnout workshop was really good. . . I also liked that there were so many organized activities.”

- “I got some clarity on policies, got some tips and ideas regarding socializing in the bay area, and the burnout workshop was good.”

- “It was useful for getting broader context on how the program is going for others and how other streams are progressing. I was mostly remote throughout the program so the other aspects were less relevant for me. The burnout workshop material was helpful.”

Extension Phase (Apr 1 - Jul 19)

By the end of the main program, many scholars are looking for a structured way to continue their projects while they plan their transition into the next phase of their career. The Extension Phase allows scholars to pursue their research projects with gradually increasing autonomy from their mentors and MATS. The MATS Executive Team accepts scholars into the Extension Phase based on:

- Mentor endorsements,

- Research Plan grades, and

- Whether scholars had secured independent funding for their research.

Funding from an outside organization, typically the LTFF or Open Philanthropy, is an important input to MATS’ evaluation process because it provides external evaluation of scholars’ research. Of the 50 scholars who applied, 72% were accepted into the Extension Phase, and 4% have pending applications. Of the 36 accepted scholars, 56% are completing the Extension at the London Initiative for Safe AI (LISA) office, 11% are continuing in Berkeley at FAR Labs, and the remainder are working remotely.

During the Extension Phase, we expect scholars to formalize their research projects into publications and plan future career directions. To support these efforts, we continue to offer Research Management (see above) and programming such as seminars, workshops, and networking events, especially for the scholars in the LISA office. FAR Labs offers its own programming, including a weekly seminar series. We tailor this programming to prepare scholars for the transition out of the MATS environment by encouraging them to develop longer-term plans and make connections that advance those plans. We additionally offer scholars the support and resources to coordinate their own events and programming, giving them opportunities to take ownership of networking activities and further develop their independence. Concurrently, scholars continue to receive support from their mentors, though less frequently than during the Research Phase.

Winter Program Evaluation

In this section, we lay out how scholars rated different elements of the program and how we evaluated our impact on scholars’ depth, breadth, and taste, among other metrics.

Evaluating Program Elements

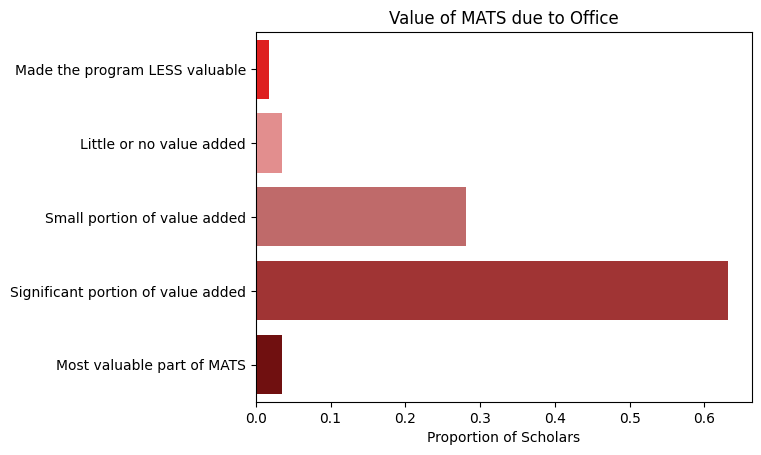

The median scholar’s ratings of each program element were as follows:

- Likelihood to recommend MATS was 9.0/10.

- Mentorship was a significant portion of value, rated 8.0/10.

- Research Management was a small portion of value, rated 8.0/10.

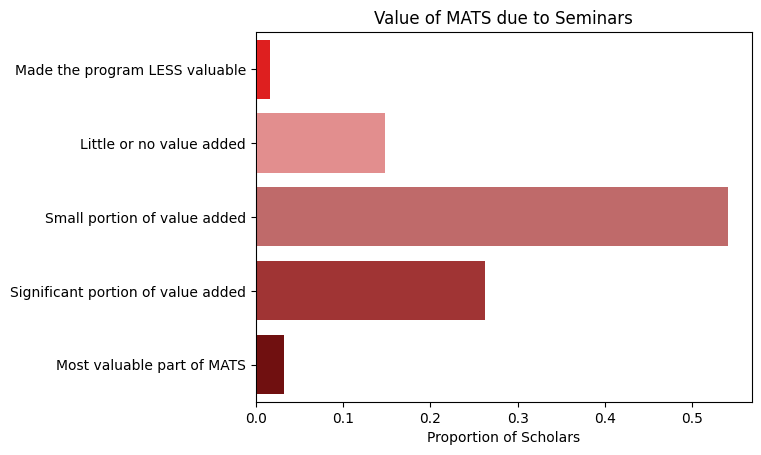

- Seminars were a small portion of value.

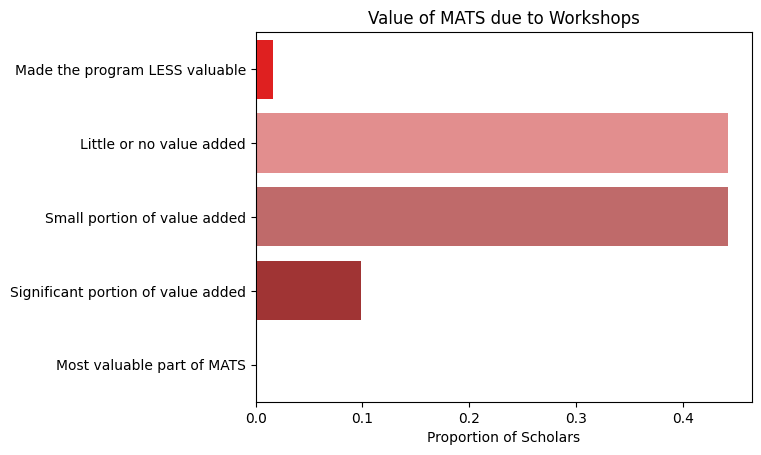

- Workshops were a small portion of value.

- The office was a significant portion of value.

- Strategy Discussions were a small portion of value.

- Other connections, including networking outside of MATS, were a small portion of value.

- Community health was a small portion of value, improving mental health by 5.0%, according to scholar self-reports.

Overall Program

Scholars rated the Research Phase highly when considering their likelihood of recommending MATS to others (mean = 9.2/10).

This type of question is commonly used across industries to calculate a “net promoter score” (NPS). Based on our respondents, the NPS for MATS is +74, indicating very high scholar satisfaction with the program.
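The post reports NPS figures without spelling out the formula. The sketch below assumes the conventional definition: on a 0 to 10 scale, promoters answer 9 or 10, detractors answer 0 through 6, and passives (7 or 8) count only toward the total. The function name and the sample ratings are hypothetical and are not MATS survey data.

```python
def net_promoter_score(ratings):
    """Return the NPS (from -100 to +100) for 0-10 likelihood-to-recommend ratings.

    Assumes the conventional thresholds: promoters rate 9-10,
    detractors rate 0-6, and passives (7-8) appear only in the denominator.
    """
    if not ratings:
        raise ValueError("need at least one rating")
    promoters = sum(1 for r in ratings if r >= 9)
    detractors = sum(1 for r in ratings if r <= 6)
    return 100 * (promoters - detractors) / len(ratings)


# Hypothetical ratings for illustration only (not MATS survey data).
example = [10, 10, 9, 9, 9, 8, 8, 7, 6, 10]
print(round(net_promoter_score(example)))  # → 50
```

Because passives dilute the score without counting against it, a group can report a high mean rating and still land well short of +100.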

We also asked mentors about their likelihood to recommend MATS.

The average mentor responded 8.2/10, and the NPS was +37. One mentor reported a likelihood to recommend of 2, which we suspect to be artificially low due to extenuating circumstances unrelated to the quality of the program.

Mentorship

Overall, scholars rated mentors highly (mean = 8.1/10).

For 38% of scholars, the mentorship they received was the most valuable component of MATS.

Note that 15 of 61 respondents did not select any program element as the “Most valuable part of MATS.” Here are some representative comments from scholars in each mentorship rating category:

- Little or no value added

- “I think my mentor was probably not involved enough with my project, [my mentor] did not get involved other than to the extent of knowing what my project was at a high level. I think this was great for intellectual freedom and exploration but I felt like having more involvement in the project could have been useful. Other than the level of involvement she was brilliant, always encouraging and supportive of my ideas. . . I still managed to get sufficient mentorship for my research project from [a specific scholar] and my research manager . . . as well as through conversations with other scholars.”

- Small portion of value added

- “Remote. In the last 2-3 weeks we met only once per week for about 20-30 min. On those meetings, I was mostly giving update on my work. [My mentor] gave me emotional support. Almost no help on [my project] in the slack. I was doing it mostly alone with the help of others. [My mentor] connected me with other people which helped me.”

- “I felt as if our styles and expectations didn't quite match up ([one of my mentors] wanted just to code and not ask or think about the high level, and I didn't realise this soon enough). [My other mentor] and I got along better but I felt as if the overall research direction was left quite open ended and I was mostly on my own.”

- Significant portion of value added

- “[My mentor] had incredible insight and research instinct. Talking through different thought processes with him in the room (virtually) was a huge value add.”

- “It was great! It would have been wonderful if she had had more time to offer, but the time she did have to offer was extremely valuable.”

- “There were no issues but we were very focused on the specific project we were working on. I do think there could have been a little more general career advice/ direction. However, I also didn't ask for it a ton or prompt it, and if I did I'm sure [my mentor] would have been happy to share his thoughts.”

- Most valuable part of MATS

- “[My mentor] was very attentive in general, reading my updates daily and answering my questions and several times per week sending ideas that occurred to him, and helping with paper writing.”

- “[My mentor’s] advice about preferred research directions was consistently good - I would have done waaaaay less valuable research on my own. . . I can't believe I learned so much ML software engineering and training skills from [my mentor’s collaborator] in such a short time.”

- “[My mentor] is an outstanding mentor. From technical design to addressing team dynamics, I feel incredibly lucky to have him as a mentor. More specifically, [my mentor] helped set an ambitious research agenda while constantly empowering the team to drive it forward and provide their own input. He was exceptionally responsive to questions and very well organized.”

Among scholars for whom mentorship was not the most valuable component of MATS, 50% said that the cohort of peers was the most valuable component.

Research Management

Overall Rating by Scholars

Scholars rated Research Management highly when considering their likelihood of recommending it to future scholars.

The average likelihood to recommend among scholars was 7.9/10, and the NPS was +23.

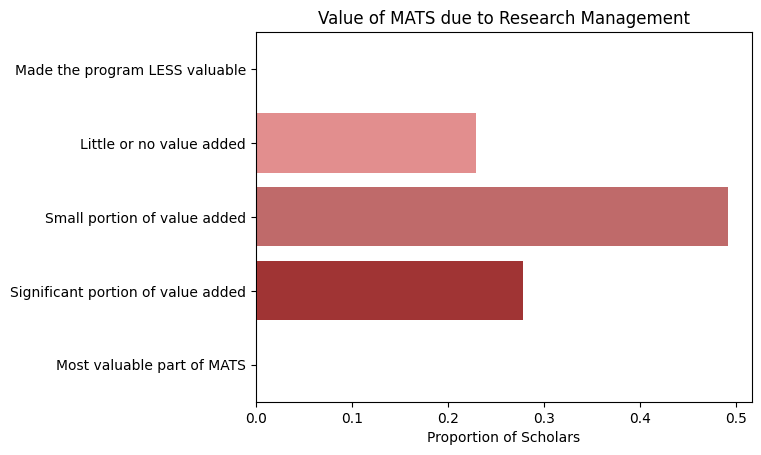

We asked scholars how RM contributed to the value of MATS:

Scholars elaborated on their experiences with Research Management:

- Little or no value added

- “As a solo experienced researcher, I didn't feel I needed my RM to stay on track; I think that help with ideas for [Research Plan Milestone] was valuable but otherwise I don't think a great use of our time.”

- Small portion of value added

- “It was surprisingly useful. I really wish I had a research manager during my PhD, lol. [My RM] was excellent for keeping me on track, helping me set goals, and helping me to re-orient my plans when needed.”

- Significant portion of value added

- “[My RM] was an exceptionally valuable resource, and without her guidance and leadership, MATS would not have generated the same value for me. Not only did [my RM] help resolve major issues with group dynamics, but she helped shape my career and get the most out of the program.”

- “Talking to [my RM] helped me avoid getting more burned-out and helped me stay productive / healthier / happier than I would have been otherwise. It makes it really clear to me that this kind of support is hugely important and it's crazy I don't have a research manager the rest of the time.”

Overall Rating by Mentors

We asked mentors about their likelihood to recommend RM, and the average mentor responded 7.7/10; the NPS was +7.0.

We also asked mentors to rate the support they received from their RM, where

- 1/10 = No value or negative value;

- 5/10 = Time spent interfacing with your RM was roughly as valuable as equivalent time spent on the counterfactual;

- 10/10 = Time spent interfacing with your RM was at least 10 times more valuable than equivalent time spent on the counterfactual.

The average response to this question was 7.3/10.

We asked mentors, “Would you want to continue working with your RM in the extension phase, and/or future cohorts?” All respondents said “Yes, same RM”, except for one mentor who said “Yes, different RM.” Their RM is no longer in this role, and this mentor followed up to say “I got the impression that different RMs had quite different working styles. Would be keen to try working with someone else to see what type of RM works best for me.” Some mentors who expressed interest in continuing with their RM from the winter explained their reasoning. One said “Experience was good overall. It's nice to have updates from someone else so I have a check if there's anything [my scholar] doesn't say directly to me, but that I should know.”

One mentor offered a testimonial about their experiences with Research Management:

Due to Research Management I did not have to spend time on important, but time-consuming things. The RM helped my mentee write various reports, since [my RM] better knew what the expected structure and output should look like. That meant I could fully focus on the content of the doc, and less the expected structure. This helped me out a lot as I could spend less time on this overall (and I was short on time). With the weekly meetings the RM has with my scholar, it gives another opportunity to check in with my mentee, see how it’s going, whether they understand the bigger picture etc. As soon as there were small question marks, problems, or dissatisfaction, I was then able to address this in the next meeting. I would probably not have caught those things, or only much later.

We also asked mentors who did not have RM if they thought they would have benefited from it. One mentor responded “My a priori guess is that RM is pretty great. I expect that RMs are better at many of the things they can help scholars with than I would be (for example, productivity debugging, discussing personal conflicts, potentially also helping scholars generate next steps).” A different mentor wrote, “I'm not sure what it entails as a mentor. It may have been useful for my scholars -- I have found a research manager useful in the past.”

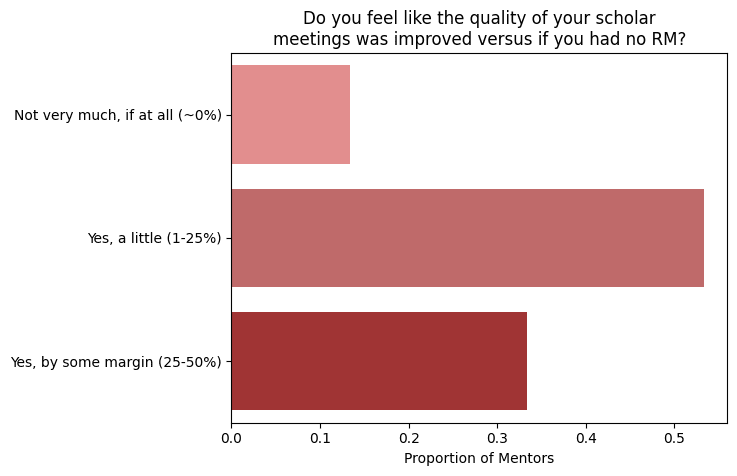

Finally, we asked mentors how much RM improved their scholar meetings. Some mentors reported a modest improvement, but others benefited significantly.

Mentors elaborated on these improvements:

- Yes, a little (1-25%)

- “Sometimes my scholars would bring a sensible project plan for the rest of the program. And they were very aware of when the MATS deadlines are.”

- “Mostly it helped me gauge how my scholar was feeling for direction-setting in the project; we probably would have settled on the same direction but just taken longer to do it or something? not totally sure.”

- Yes, by some margin (25-50%)

- “I switched to 1-1 meetings instead of group meetings, because of feedback by scholars communicating to me by [my Research Manager].”

Vs Mentor Counterfactual

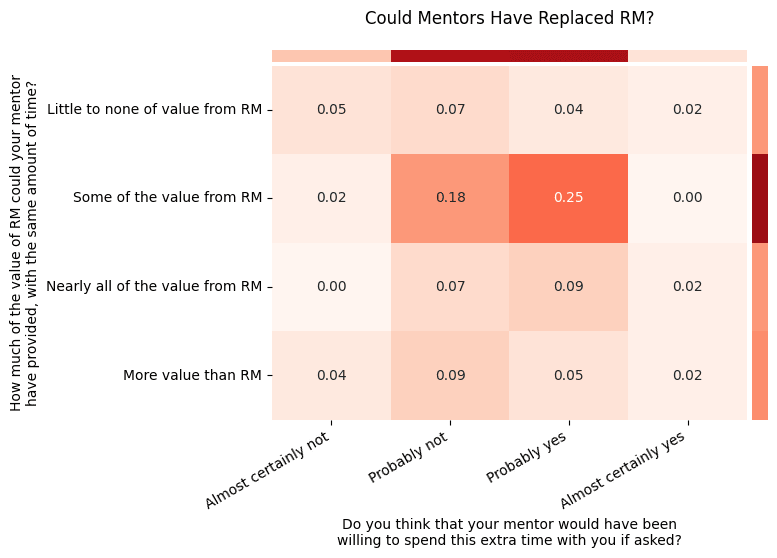

Recall that Research Management aims to take on some responsibilities that mentors often bear by default. To evaluate Research Management’s impact, we can compare it to the counterfactual in which scholars requested support directly from their mentors. We asked two questions:

- If MATS had no Research Management team, how much of the value that you got from Research Management could have been provided by your mentor? Assume that your mentor was able to spend extra time equal to the time you spent with your Research Manager.

- Do you think that your mentor would have been willing to spend this extra time with you if asked?

The first question identifies the value that could have been recovered from mentors, conditional on mentor willingness; the second question elicits mentor willingness. The following heatmap displays the joint distribution of responses.

Cells in the top rows and the left columns represent scholars for whom Research Management provided an improvement over the counterfactual: their mentors could not or would not have replaced Research Management. Cells in the bottom-right represent scholars for whom Research Management may have reduced value: their mentors would have stepped in to provide Research Management functions. 7% of scholars were in this second category: they thought their mentors probably or certainly would have provided superior support.

One scholar elaborated on a benefit unique to Research Managers: “I think it is very valuable to have an orthogonal perspective alongside the research process that is *not* your mentor. I generally think the structure that was in place was overall helpful for accountability and sharpening the research process in general.”

Even if mentors were willing and able to perform Research Management roles, there would still be a case for dedicated Research Managers:

- For scholars with remote mentors, Research Managers can provide in-person support and distill high-context information for the mentors, who would otherwise have more limited visibility on their scholars’ progress.

- These numbers assume that scholars would have asked their mentors for more attention, but we find that scholars are sometimes reluctant to communicate their needs to their mentors. Since MATS provides Research Management by default, scholars do not have to weigh the reputational consequences of requesting support, which might discourage them. As we wrote in our previous retrospective:

Scholars are sometimes disincentivized from seeking support from mentors. Because mentors evaluate their mentees for progression within MATS (and possibly to external grantmakers), scholars can feel disincentivized from revealing problems that they are experiencing.

- Research Management reduces the per-scholar time commitment for mentors. Even if mentors would have been willing to provide additional support, it might have been a worse use of their time than, for example, focusing on their own research.

- If all scholars requested additional time from their mentors, their mentors might have to reduce their number of scholars. Most mentors were not allocated the maximum number of scholar slots they requested, so they are likely not yet in a regime where per-scholar time trades off against the total number of scholars. However, some mentors did reach their cap, two mentors exceeded it, and if future cohorts are better funded, many more mentors will reach their caps. In such a cohort, Research Management would increase the number of scholars a given mentor could support.

- As shown in Mentor Selection, for 20% of mentors, taking on an additional scholar would have traded off against time spent mentoring their other scholars.

We asked mentors the same pair of questions. All respondents agreed that they could provide “some of the value from RM.” 57% said they would “probably not” have spent the time; 36% said “probably yes”, and the remaining 7% said “almost certainly yes.”

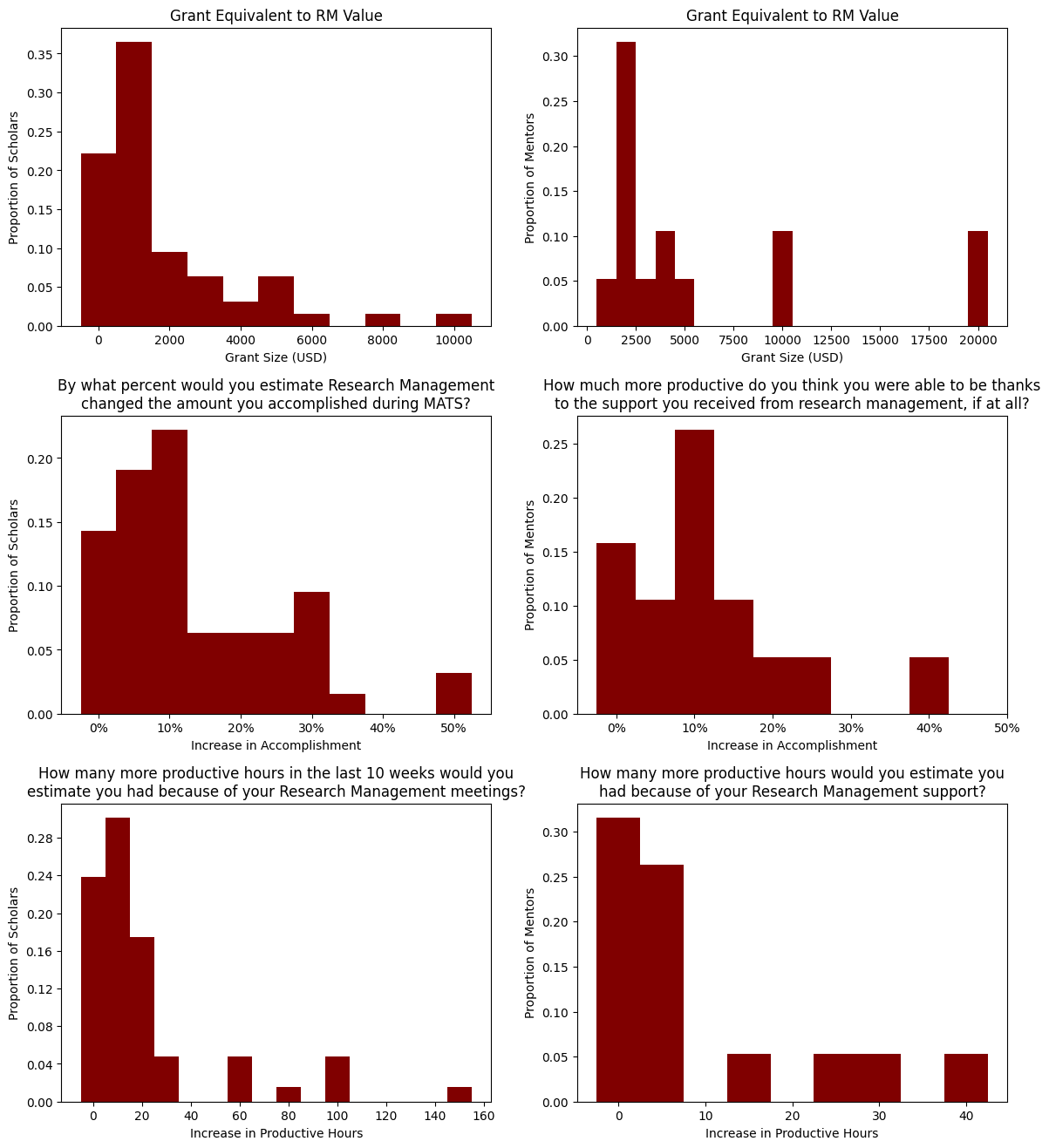

Grant Equivalent Valuation

We asked scholars, “Assuming you got a grant instead of receiving 1-1 Research Management meetings during MATS, how much would you have needed to receive in order to be indifferent between the grant and Research Management?[8] Please answer ex post, i.e. knowing what you know now. If you respond with some number X, that means you value these two the same:

- a grant of $X

- all of the 1-1 Research Management meetings you've had during MATS, collectively.”

The average response was $1711, the median response was $1000, and the sum was $95,810.

We asked mentors the same question. The average response was $5900, the median was $3000, and the sum was $88,000. Mentors expressed higher valuations than scholars for Research Management, perhaps because mentors have higher-paying jobs than scholars (many of whom are students), or because mentors value their productivity more highly than scholars value theirs. As shown below, Research Management did not create more productive hours for mentors than for scholars, on average.

Accomplishments and Productive Hours

Finally, we asked scholars and mentors two (functionally similar) questions about the value of Research Management.

The first question was: “By what percent would you estimate Research Management changed the amount you accomplished during MATS? An answer of X% means you accomplished X% more than you would have otherwise.” The average increase in accomplishments was 14%, and the median was 10%.

We asked mentors the same question. Their average increase in accomplishments was 12%, and the median was 10%.

The second question we asked scholars was: “How many more productive hours in the last 10 weeks would you estimate you had because of your Research Management meetings? For reference, MATS research phase was 10 weeks long. If you estimate you got X more productive hours per week on average, then you should respond 10*X. If you only got a 1-time productivity boost of Y hours, you should respond Y.”

The average increase in productive hours was 21 hours, the median was 10 hours, and the sum was 1200 hours.

We also asked mentors this question. The average increase was 8.9 hours, the median was 4.0 hours, and the sum was 130 hours. Productive hours were not the only benefit that Research Management provided, as evidenced by scholars and mentors who reported no increase in productive hours but a nonzero grant equivalent. But if we look at the grant equivalents for mentors and scholars who reported an increase in productive hours, the average mentor valued their time at $690/h, and the average scholar valued their time at $160/h.
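The post does not state exactly how the $/h figures were aggregated. The sketch below shows two plausible ways to derive an implied hourly valuation from paired (grant equivalent, extra productive hours) responses, excluding respondents who reported zero extra hours as the text describes. Which aggregation MATS used is an assumption, and the function names and sample data are hypothetical.

```python
from statistics import mean


def implied_hourly_values(responses):
    """Per-respondent implied $/h: grant equivalent divided by extra productive hours.

    Each response is a (grant_equivalent_usd, extra_productive_hours) pair.
    Respondents reporting zero extra hours are excluded, since their
    valuation reflects benefits other than productive time.
    """
    return [grant / hours for grant, hours in responses if hours > 0]


def summarize(responses):
    """Compare two hypothetical aggregation choices for the implied $/h."""
    kept = [(grant, hours) for grant, hours in responses if hours > 0]
    if not kept:
        raise ValueError("no respondents reported extra productive hours")
    return {
        # Mean of per-respondent ratios: every respondent counts equally.
        "mean_of_ratios": mean(implied_hourly_values(responses)),
        # Ratio of totals: respondents reporting more hours count more.
        "ratio_of_sums": sum(g for g, _ in kept) / sum(h for _, h in kept),
    }


# Hypothetical (grant $, extra hours) pairs for illustration only.
sample = [(1000, 10), (3000, 5), (500, 0), (2000, 20)]
print(summarize(sample))
```

The two aggregations can differ substantially: a single respondent with a large grant equivalent and few extra hours inflates the mean of ratios much more than the ratio of sums.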

Improvements

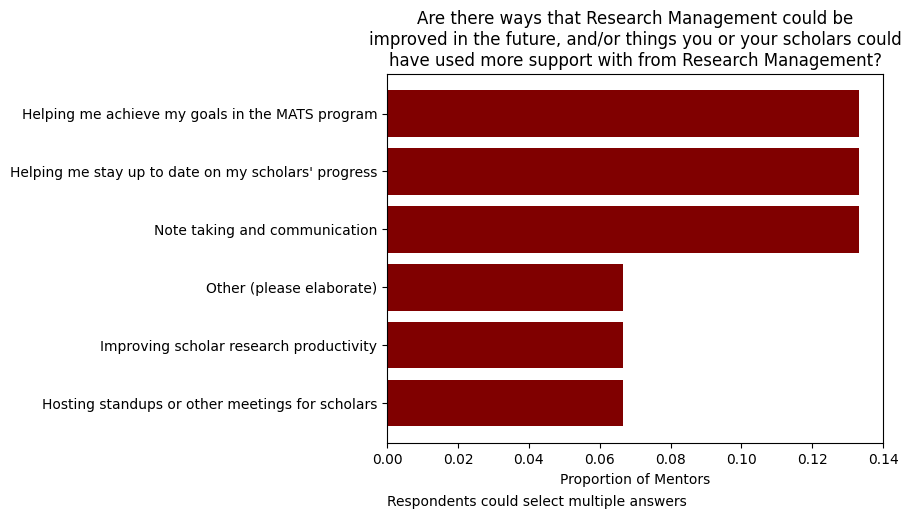

We asked mentors how we could improve Research Management for future programs.

One mentor elaborated on the “other” ways RM could improve, noting, “My scholar had various questions about the extension application that the research manager was not able to answer and I had to reach out directly to people in charge of the extension evaluation process.” Another mentor wrote, “I wish my RM was more proactive in seeking mentor feedback on scholars.”

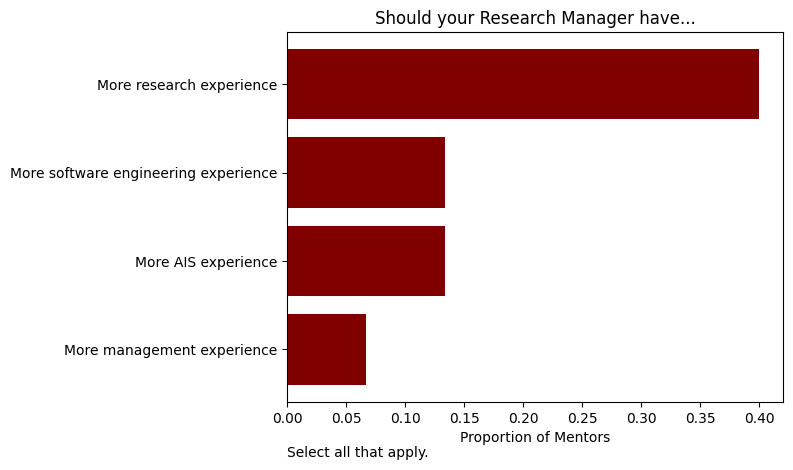

We asked mentors about the skills that RMs could develop to improve their RM abilities. 40% of mentors with RMs suggested that their RM could have more research experience.

We asked mentors, “Are you satisfied with the amount of time your RM spent on your stream? Would you have preferred more, less, or the same amount of RM time?” All respondents said “I am satisfied with the amount of time my RM spent on my stream”, except one, who said they “would have preferred my RM spent more time on my stream.”

Seminars & Workshops

While seminars and workshops were optional, MATS encouraged scholars to attend them. By exposing scholars to experienced researchers and novel agendas, seminars remain a pillar of scholar development.

In optional surveys, scholars rated seminars 6.8/10, on average, where 1/10 represented “Not worth attending,” and 10/10 represented the high bar “It significantly updated your research interests or priorities.” These surveys also solicited scholars’ expectations about the value of the events. 33% of ratings exceeded expectations, and 23% fell short of them. Seminars rated “about as valuable as expected” received an average rating of 6.8/10.

Workshops were a relatively minor program element—MATS held five workshops on topics other than Research Milestones—so it is unsurprising that they contributed less value than other program elements.

Lighthaven Office

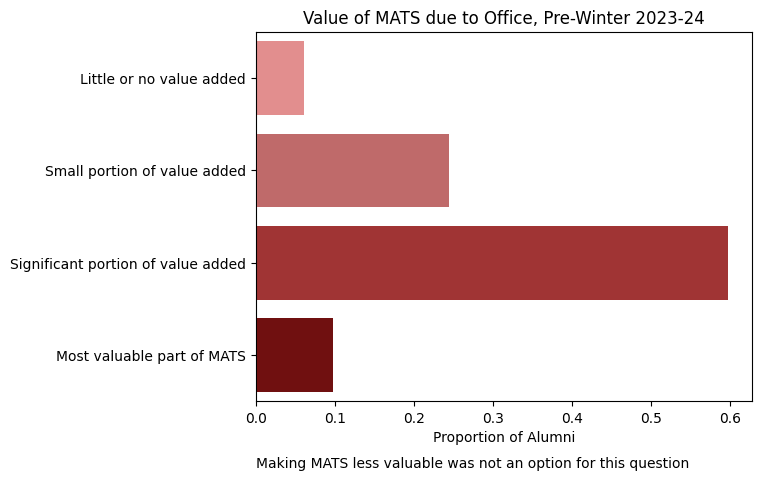

We were interested to see how scholars would rate Lighthaven because the campus differed from our previous offices, as explained above.

Comparing this result against alumni surveys, we see that Lighthaven was not a noticeably more important program element than office spaces in earlier MATS programs.

Note that this question asks about the value of the office relative to other program elements. Because we believe other program elements have improved over time, a similar relative rating may still reflect an improvement in the office's absolute value. These responses should also be interpreted cautiously because, for many scholars, this was their first experience working in any office, so they had little basis for judging its quality.

At the end of the program, scholars suggested some minor improvements to the facilities. Some also provided positive feedback:

- “Tbh I think Lighthaven is just perfect.”

- “Lighthaven was nothing but perfect. I loved it and am really sad because I already know my future workplace won't compare!”

- “I think Lighthaven was a really great space, and it was nice to have people around a lot and living and working there. I think sometimes it could be a little bit much but, on the whole, I really enjoyed it.”

Mentors gave feedback as well:

- “The lack of meeting rooms to talk to my scholars was not great. We had to meet in a public area without a table, on a couch.”

- “I liked the venue a lot overall, seems like a great place to run this type of program. Personally I wasn't as productive at Lighthaven compared to other places eg. Constellation but this was probably mostly due to working in a public area at Lighthaven and being interrupted more often.”

- “Very comfortable and good for informal interactions; not great for work.”

- “I thought there was a nice calm vibe, and I liked the style/ surroundings.”

Before the program, we tried to identify the space needs of all mentors; if the first two mentors above had brought their concerns to us during the program, we would have worked to provide private meeting areas for them.

Though we took steps to separate office and living spaces, we were concerned that the thin boundary between professional and personal spaces might lead to an unhealthy work-life balance for scholars. In the words of the third scholar quoted above, we worried the experience would “be a little bit much.” This did not prove to be a significant problem. During the first week of the program, we polled scholars about their concerns, and the most notable change from our previous program was that fewer scholars indicated a concern about work-life balance. After the program, we surveyed scholars again: 44 said the “balance felt appropriate,” 8 indicated “too much work,” and 4 said they “didn’t work enough.”

Because scholars were living at Lighthaven, and it had previously been a hotel, we were concerned that the atmosphere might become too informal for a professional program like ours. To investigate this possibility, we asked scholars about the “cozy”/professional balance. Among in-person scholars, 47 said “the balance was just right,” 8 said they “would have preferred a more professional office environment,” and 1 said they “Would have preferred more ‘cozy vibes.’” Based on this feedback, we are satisfied with the level of professionalism that Lighthaven offered.

Strategy Discussions

Scholars had mixed experiences with the Strategy Discussion groups.

Scholars elaborated on the limitations of our discussion format:

- One of the scholars who got negative value from the discussion groups commented, “Discussion seemed a bit basic for people already exposed to eg LW.”

- Another scholar may have preferred the Training Phase format that Strategy Discussions replaced: “As the program went on and deadlines loomed, it got a lot harder to take ~half a day (including reading) for the discussion groups! I personally think it would have been much better to frontload it more, both for that reason and because it would have helped people get to know each other better in the early stages.”

- Two scholars mentioned that some of the content would have been covered in informal conversations, even without dedicated Discussion Groups.

- Many scholars expressed a desire for stronger facilitation than our TAs provided.

- Another scholar wrote, “I thought it would have been much more useful--even solely for improving research strategy--to have more technical readings, the sort of things people may not invest the effort to understand without the extra motivation.”

An AI safety curriculum remains an important lever by which MATS can improve our scholars’ breadth of knowledge about the AI safety field, but these results have underscored some shortcomings of our approach during the past program. In our next program, we will hold Discussion Groups, but we will update the readings, format, and timeline in response to scholar feedback, and we will offer discussions on an opt-in basis, to ensure they do not absorb the time of scholars who are unlikely to benefit from them.

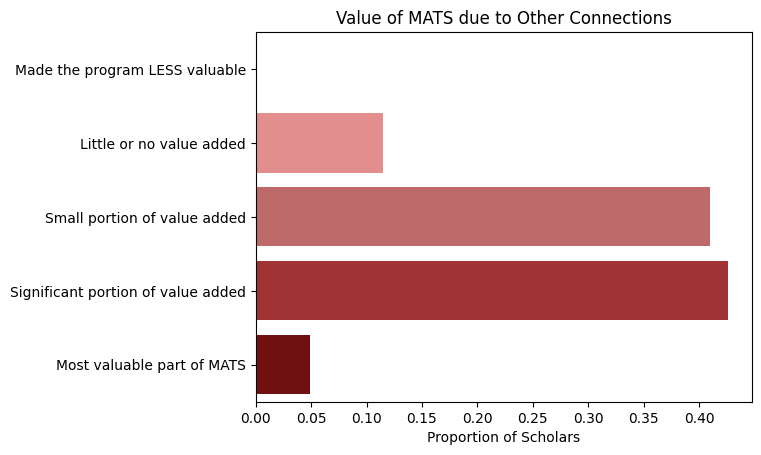

Networking Events

Facilitating connections to the wider Berkeley AI safety research community was an important way MATS provided value to scholars. We asked scholars about the value of the connections they made, clarifying, “This includes connecting with non-MATS members of the alignment community, e.g., at MATS networking events.”

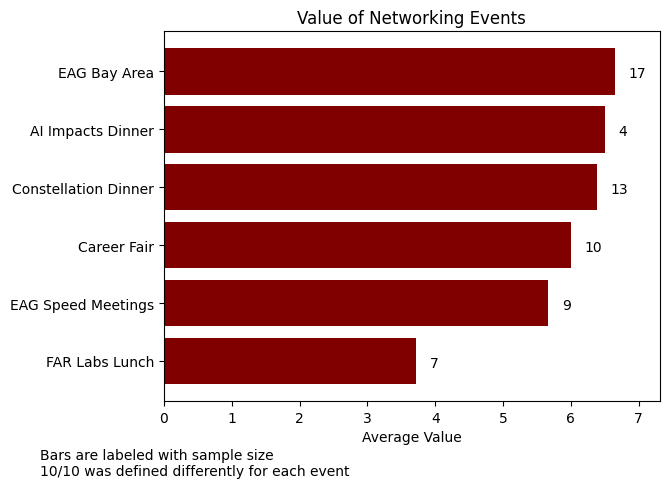

The most highly rated networking event of the program was the EA Global: Bay Area conference, which many scholars chose to attend. The cause of dissatisfaction with the FAR Labs Lunch, and to some extent the Constellation Dinner, was the high ratio of MATS scholars to office members. The Constellation Dinner was rated about 1 point higher than the same event in the previous program; we think the increase was due to hosting the event at Constellation rather than at our office, which lowered the ratio of MATS scholars to office members. We also think the presence of Astra participants and Visiting Researchers may have improved scholars’ experiences at the dinner.

This figure excludes networking socials, the Symposium, and intra-cohort networking events.

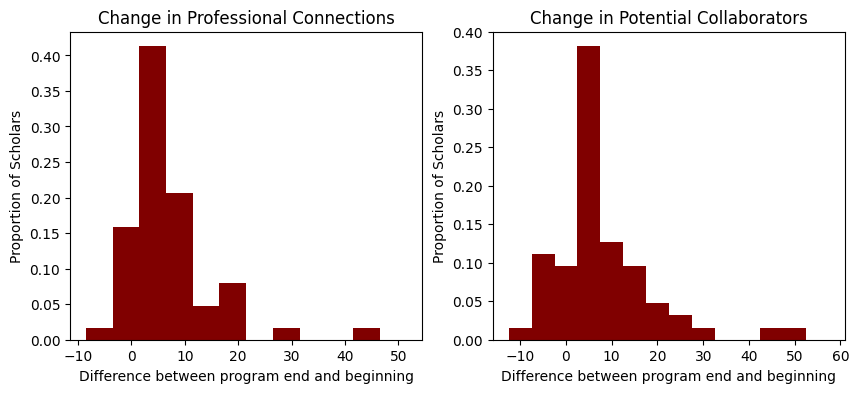

To measure the success of our networking events, we asked scholars two questions at the beginning and end of the program about their professional connections:

- How many professionals in the AI safety field do you know and feel comfortable reaching out to for professional advice or support?

  - If you want a more specific scoping: how many professionals who you know would you feel able to contact for 30 minutes of career advice? Factors that influence this include what professionals you know and whether you have sufficient connections that they'll help you out. A rough estimate is fine, and this question isn't just about people you met in MATS!

- How many people who you know do you feel you can reach out to about collaborating on an AI safety research project?

  - Imagine you had some research project idea within your AI safety field of interest. How many people that you know could plausibly be collaborators? A rough estimate is fine, and this question isn't just about people you met in MATS!

Responding to both questions, scholars reported an increase in professional connections during MATS.

The median change was +5.0 for both professional connections and potential collaborators. As in our previous program, the decreases some scholars reported are most likely explained by day-to-day variance in their judgments, since they did not have access to their previous responses. These measures also capture connections made with other scholars, not only with researchers outside MATS, but we believe networking events contributed because remote scholars reported smaller increases.

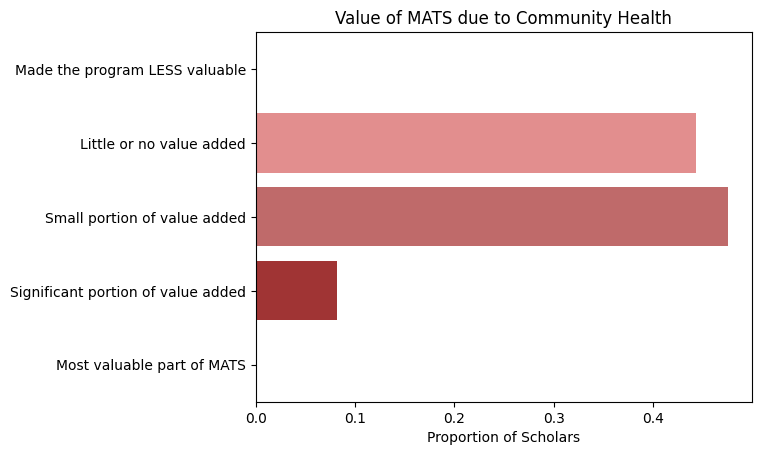
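Because each scholar answered the same questions at the start and end of the program, a natural way to compute the medians above is to take each scholar's own change and then the median of those changes. The sketch below assumes that approach; the scholar names and counts are hypothetical, not MATS survey responses. Negative changes correspond to the decreases discussed above.

```python
from statistics import median

# Hypothetical (start, end) answers to the "professional connections" question.
# Illustrative only; not MATS survey responses.
answers = {
    "scholar_a": (3, 10),
    "scholar_b": (8, 9),
    "scholar_c": (5, 4),    # a decrease, e.g. from day-to-day variance in estimates
    "scholar_d": (2, 9),
}

def paired_changes(pairs):
    """Per-scholar change: end-of-program answer minus start-of-program answer."""
    return {name: end - start for name, (start, end) in pairs.items()}

changes = paired_changes(answers)
print("median change:", median(list(changes.values())))
print("scholars reporting a decrease:", sum(1 for d in changes.values() if d < 0))
```

Note that the median of per-scholar changes is generally not the same as the end-of-program median minus the start-of-program median; with the hypothetical numbers above, the two differ.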

Community Health

As expected, many scholars did not use Community Health support, but a minority of scholars benefited substantially.

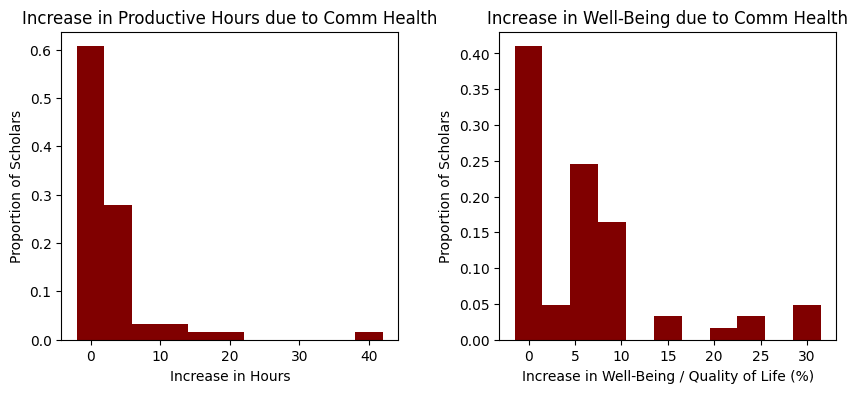

To investigate these benefits, we asked scholars the same question about productive hours we used to evaluate Research Management, along with a question about their well-being.

The median scholar gained 0 productive hours, but the total across all scholars was 170 hours.

The median scholar’s well-being increased by 5.0% due to Community Health support. Scholars elaborated on the ways that Community Health improved their quality of life:

- “[The Community Manager] was an amazing asset to the team. I really appreciated his emotional support, guidance, and friendship.”

- “[The Community Manager] was immensely helpful for when I was overwhelmed and provided a lot of both emotional and practical support”

- “Noticing I was overworked and telling me to take a break. Directing me to people I could talk to about dual use concerns of my research.”

- “[The Community Manager] was around when I was feeling burnt out and chatted to me / gave some great suggestions and was really supportive.”

- “Helpful for planning social activities, and just venting”

As in last summer’s program, many scholars who did not meet frequently with the Community Manager reported benefiting indirectly from a positive atmosphere and the knowledge of an emotional safety net.

- “And also just knowing someone had their eye out :)”

- “Mostly indirectly by helping other scholars feel better.”

- “I didn't need to make use of it, but I think it's good that it exists”

- “I also think that the Community Health was probably useful to me indirectly: I didn't need to use the Community Health services myself, but I did benefit from (as far as I could tell) a very positive community at MATS!”

- “I think they did a great job of creating a MATS environment that felt safe, social and enjoyable.”

- “I always was aware of its offering and am grateful to have had this awareness.”

- “I'm probably not qualified to comment on this since I did not need these resources, but I could imagine these being very valuable to those who are going through emotional difficulties during the program.”

- “I think it's wonderful that community health exists, and [the Community Manager] was just an awesome presence around MATS. I personally didn't feel the need to leverage the services, but I definitely appreciated that they existed and it was an option!”

Compared to other program elements, the benefits of Community Health were concentrated among fewer scholars, but this small segment of scholars benefited greatly. Based on its large benefits to these scholars and the diffuse benefits it provides to the wider MATS community, we continue to believe that Community Health is important and neglected.

Evaluating Key Scholar Outcomes

Scholar Self-Reports

To measure how scholars improved in research depth, breadth, and taste, as well as in the quality of their theories of change, we asked scholars to assess themselves on these four dimensions at the beginning and end of the program. The specific questions we asked were:

Depth: How strong are your technical skills as relevant to your chosen research direction? e.g. if the research is pure theory, how strong is your math? If the research is mechanistic interpretability, how well can you use TransformerLens and other tools?

- 10/10 = World class

- 5/10 = Average for a researcher in this domain

- 1/10 = Complete novice

Breadth: How do you rate your understanding of the agendas of major alignment organizations?

- 10/10 = For any major alignment org, you could talk to one of their researchers and pass their Ideological Turing Test (i.e., that researcher would rate you as fluent with their organization's agenda).

- 1/10 = Complete novice.

Research Taste: How well can you independently iterate on research direction? e.g. design and update research proposals, notice confusion, course-correct from unproductive directions

- 10/10 = You could be a research team lead

- 8/10 = You can typically identify and pursue fruitful research directions independently

- 5/10 = You can identify when stuck, but not necessarily identify a direction that will yield good results

- 3/10 = You're not often independently confident about when to stop pursuing a research direction and pivot to something else

- 1/10 = You need significant guidance for research direction

Theory of Change: How strong are your mental models of AI risk factors and your ability to make impactful plans based on these models? Do you have a mental model for how AI development will happen that you think captures many of the relevant dynamics? Do you have a theory of change for why your chosen research direction will make beneficial AI outcomes more likely?

- 10/10 = You have a strong understanding of many factors that contribute to the direction of AI development, and your current direction is informed by a strongly-developed theory of change.

- 5/10 = You think that working in AI safety is impactful due to a rough mental model of AI risk factors, but you have a weaker understanding of what AI safety research agendas are most impactful.

- 1/10 = No mental models of AI development are informing plans.

The average change in depth was +1.53/10, and the average change in breadth was +1.93/10.

The decreases some scholars reported could be explained by calibration variance, as with professional connections above, or by scholars realizing their earlier understanding was not as comprehensive as they had thought.

The average change in research taste was +1.35/10, and the average change in theory of change was +1.25/10.

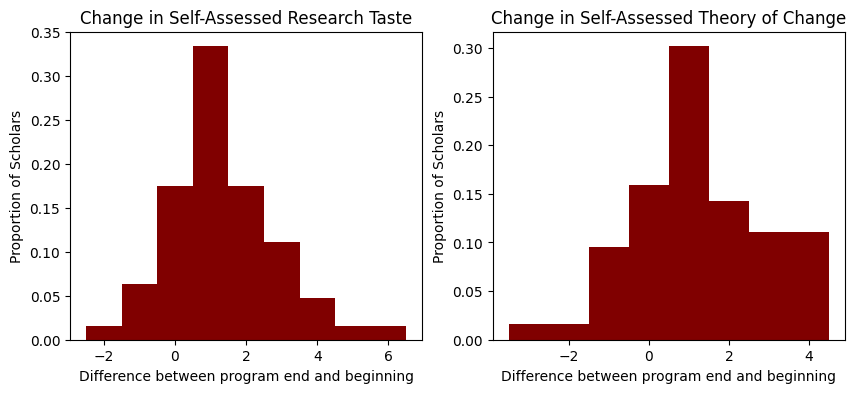
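The average changes above can be sketched as the mean of each scholar's end-of-program rating minus their start-of-program rating, computed separately for each dimension. This is a minimal illustration under that assumption; the dimension keys and the ratings are hypothetical, not MATS data.

```python
from statistics import mean

DIMENSIONS = ("depth", "breadth", "taste", "theory_of_change")

# Hypothetical self-ratings on the 1-10 scales above; not MATS data.
# start[i] and end[i] belong to the same scholar.
start = [
    {"depth": 5, "breadth": 4, "taste": 5, "theory_of_change": 5},
    {"depth": 6, "breadth": 6, "taste": 4, "theory_of_change": 6},
]
end = [
    {"depth": 7, "breadth": 6, "taste": 6, "theory_of_change": 6},
    {"depth": 7, "breadth": 5, "taste": 6, "theory_of_change": 7},
]

def average_change(before, after, dims=DIMENSIONS):
    """Mean of per-scholar (end - start) differences for each dimension."""
    return {
        d: mean([a[d] - b[d] for b, a in zip(before, after)])
        for d in dims
    }

print(average_change(start, end))
```

Unlike the median, the mean of per-scholar changes equals the end-of-program mean minus the start-of-program mean, so either calculation gives the same result as long as the same scholars answered both surveys.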

Mentor Evaluations

Halfway through the program, we asked mentors to evaluate their scholars on these same four dimensions. Average depth was 7.4/10, average breadth was 6.9/10, average taste was 7.2/10, and average theory of change was 7.0/10.

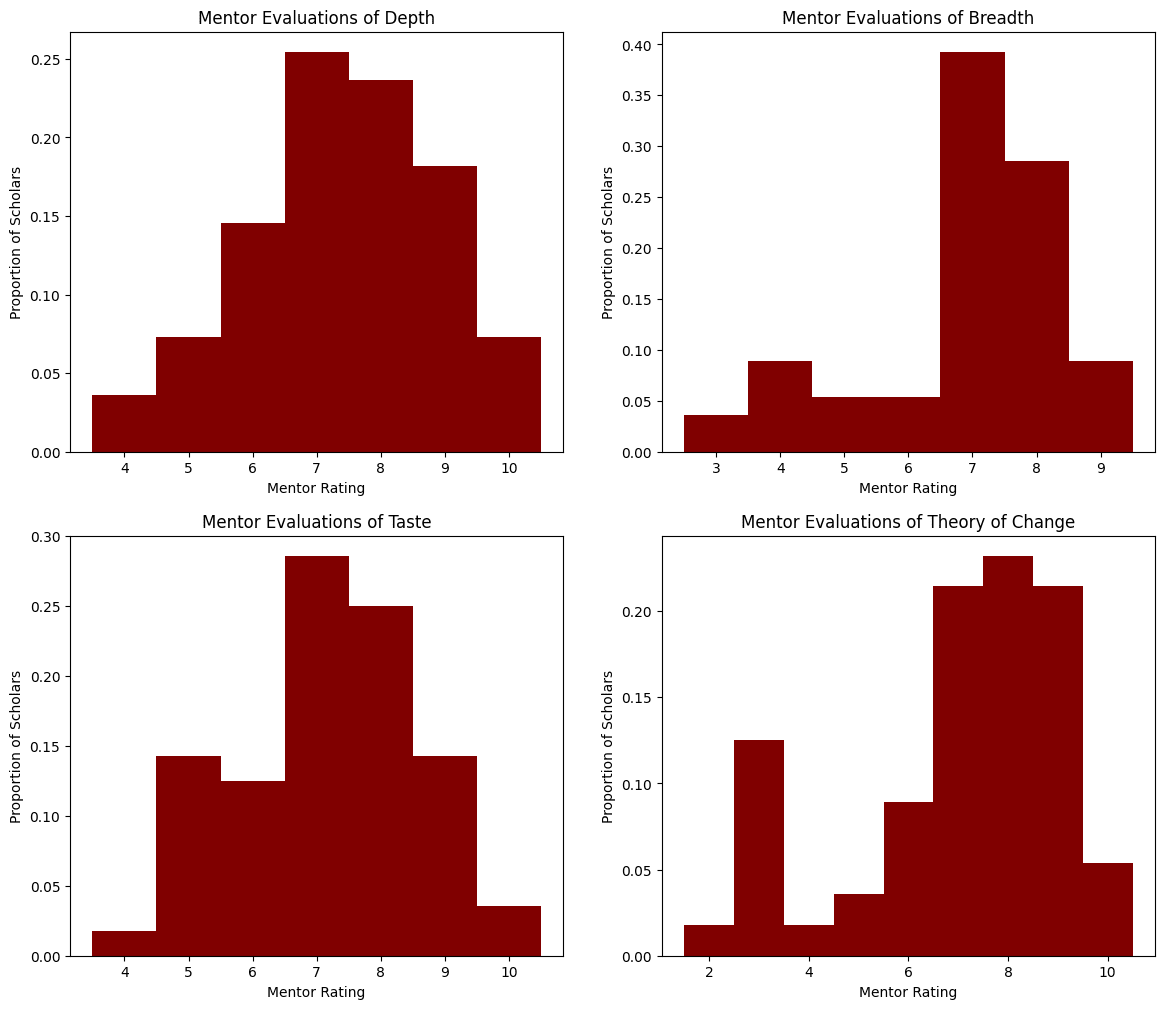

We can compare mentors’ ratings to their scholars’ self-reports. Because mentors evaluated scholars halfway through the program, we compare each mentor rating with the average of the scholar’s self-assessments from the beginning and end of the program.

If mentors and scholars agreed about scholars’ abilities, the data would lie along the grey dashed calibration line. Instead, scholars and mentors diverge in their assessments of breadth and depth. More data points fall below the calibration line than above it, indicating that mentors tend to rate scholars higher than scholars rate themselves.
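The comparison above can be sketched numerically: for each scholar, subtract the average of their two self-ratings from their mentor's midpoint rating. This assumes the plot puts mentor ratings on the x-axis and self-ratings on the y-axis, which is what the "below the line" reading implies. The ratings below are hypothetical, not MATS data.

```python
from statistics import mean

# Hypothetical ratings for one dimension (e.g. depth) on the 1-10 scale.
# Each tuple: (self-rating at start, self-rating at end, mentor rating at midpoint).
# Illustrative only; not MATS data.
ratings = [
    (5, 7, 8),
    (6, 7, 7),
    (4, 6, 5),
    (7, 8, 7),
]

def calibration_summary(rows):
    """Compare mentor ratings with the mean of each scholar's two self-ratings.

    A positive gap means the mentor rated the scholar higher than the scholar
    rated themselves; with mentor ratings on the x-axis and self-ratings on the
    y-axis, such points fall below the y = x calibration line.
    """
    gaps = [mentor - (s_start + s_end) / 2 for s_start, s_end, mentor in rows]
    return {
        "mean_gap": mean(gaps),
        "share_mentor_higher": sum(g > 0 for g in gaps) / len(gaps),
    }

print(calibration_summary(ratings))
```

A point exactly on the line (gap of zero) is counted as neither above nor below, so the share of points where the mentor rated higher can be less than half even when no point lies above the line.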