Open Thread: July - September 2023

By Lizka @ 2023-07-02T22:09 (+29)

Welcome!

If you're new to the EA Forum:

- Consider using this thread to introduce yourself!

- You could talk about how you found effective altruism, what causes you work on and care about, or personal details that aren't EA-related at all.

- (You can also put this info into your Forum bio.)

Everyone:

- If you have something to share that doesn't feel like a full post, add it here! (You can also create a quick take.)

- You might also share good news, big or small. (See this post for ideas.)

- You can also ask questions about anything that confuses you (and you can answer them, or discuss the answers).

For inspiration, you can see the last open thread here.

Other Forum resources

Niyorurema Pacifique @ 2023-07-18T05:45 (+23)

Hello everyone,

I am Pacifique Niyorurema from Rwanda. I was introduced to the EA movement last year (2022). I did the introductory program and felt overwhelmed by the content, 80k hours podcast, Slack communities, local groups, and literature. Having a background in economics, and with the mission aligning with my values and beliefs, I felt I had found my place. I am pretty excited to be in this community. With time, I plan to engage more in the communities and contribute as an active member. I tend to lean more toward meta EA, effective giving and governance, and poverty reduction.

Best.

Evan_Gaensbauer @ 2023-09-28T20:21 (+2)

Welcome to the EA Forum! Thanks for sharing!

Linch @ 2023-08-11T00:43 (+20)

Would other people find it helpful if I write an article detailing "reasons to be skeptical of working as an independent researcher?" The reasons will come from a mixture of interviews, first-principle thinking, reading past work on this topic by other people (including RP's work), some of my own experiences in research, and my observations as a grantmaker on LTFF.

"Agree"-vote if helpful, "Disagree" if not helpful; assume my nearest counterfactual is writing a different post drawn from the same distribution as my past posts or comments, particularly LTFF-related ones.

jjanosabel @ 2023-09-04T14:41 (+1)

Linch, I am interested in your use of "first-principle thinking".

So I vote "Agree", yes it would be helpful to see your article.

Personally, I am sceptical of all fields that go under the heading of Social Science (a long story... I, also, would need some encouragement to write :-).

Linch @ 2023-07-25T01:34 (+19)

The Long-Term Future Fund is somewhat funding constrained. In addition, we (I) have written a number of docs and announcements that we hope to publicly release in the next 1-3 weeks. In the meantime, I recommend that anti-x-risk donors who think they might want to donate to LTFF hold off on donating until after our posts are out next month, to help them make informed decisions about where best to donate. The main exception of course is funding time-sensitive projects from other charities.

I will likely not answer questions now but will be happy to do so after the docs are released.

(I work for the Long-Term Future Fund as a fund manager, aka grantmaker. Historically this has been entirely in a volunteer capacity, but I've recently started being paid as I've ramped up my involvement.)

Linch @ 2023-08-04T19:44 (+4)

Some docs out now:

https://forum.effectivealtruism.org/posts/zZ2vq7YEckpunrQS4/long-term-future-fund-april-2023-grant-recommendations

These are the main announcements I wanted to be out before people donated.

We also hope to release a few other docs on a) what our marginal grants (may) look like, b) how LTFF differs from other AI alignment grantmakers, and c) adverse selection in longtermist grantmaking. We might also write more on other topics that we think will benefit the community, or for which there is or has been demand from donors, applicants, or broader members of the community.

Alyssa Riceman @ 2023-08-21T21:59 (+18)

Hi! I'm Alyssa. I've been abstractly in favor of EA since around 2014ish when I first encountered it in the LessWrong-sphere, and actively doing the 10%-donations thing since starting my first job post-college in 2021. I've been adjacent to the EA community throughout all of time, but haven't been contributing much to it myself; thus, prior to now, my interactions with the EA forum have been purely reading-ish, rather than posting-ish. But now I've finally found something worth posting about! And thus I'm now here, introducing myself for real.

The 'thing worth posting about' in question is: slightly under two weeks ago, I found an EA channel in my company's Slack, including a nice straightforward pointer to "here are the various EA charities which the company is willing to do donation-matching towards". Previously, I'd looked up various particularly-effective charities in the company's donation-matching tool and found that it wasn't matching with any of them; but, despite this, there apparently are some it matches with! (In particular, CEA's various funds.) My company does up to $10,000 in donation-matching per person per year; I've been entirely missing that opportunity, due to not knowing the right search to find charities-relevant-to-me it's willing to match donations to; as of joining this channel, I now have that knowledge. This is going to let me move significantly more money, over upcoming years.

...and that channel, whose information is going to let me move tens of thousands of extra dollars over the next few years, only has 20 people in it currently. In a tech company with multiple hundreds of thousands of employees. It seems overwhelmingly likely that there are many more effective altruists at my company who are in the same position as me-as-of-two-weeks-ago, wishing they could get matches on their donations-to-EA-charities and not realizing that they have actually-practical options for means by which to do so.

So this has me thinking: at my company alone, there's most likely an opportunity to move tens-to-hundreds of thousands of dollars per year in matched donations by just advertising that channel, or the information therein, better. (Or more, if I roll a critical hit on successful advertising, but I'm currently expecting to get only a handful of people, given my inexperience with the field.) I've started scheming, accordingly, about how to spread the word within my company's internal systems.

But I've also started thinking: there exists more than one company. Most likely, there exists more than one company with circumstances similar to those in mine, donation-matching systems and other such impact-multiplying opportunities which large fractions of their EA-inclined employees either don't know about or don't know how to take advantage of. Therefore, it seems to me that the thing to do here isn't just to advertise company-specific EA resources within my company's channels for such things, but also to set up a larger public list. For each company, here are any relevant links to any EA subcommunity / company-specific EA-resource-listing / etc. that might exist for that company.

I myself don't have the skills to assemble or maintain such a list in well-optimized fashion, currently. I wouldn't know how to search-engine-optimize it, or how to keep its information accurate and up-to-date in the face of how it will almost certainly require submissions-from-the-public, or suchlike. And thus I figure this forum is the natural place to turn, likely to contain people who both have relevant skills and are likely to value employing those skills towards the end of creating and maintaining such a list. (Or, perhaps, to contain people who have arguments-I-have-yet-to-think-of regarding why this isn't as high-value an enterprise as it currently appears to me that it's likely to be, such that I should deprioritize it actually.)

(...possibly I should be making a top-level post about this, rather than just dumping it in the open thread like this? But I am sufficiently unfamiliar with local norms about what warrants top-level posting, currently, that I'm erring on the side of at least starting with this open-thread-post, instead.)

Felix Wolf @ 2023-08-23T10:43 (+3)

Hi Alyssa,

Welcome to the EA Forum. :) This post could also have been a Quick Take or a post in this open thread as a starting point.

This serves well as your introduction, and I am glad you wrote it. Your post could already make someone curious about whether their company also participates in donation matching, and it gives an incentive to search for opportunities on a small scale.

To get the proposed public list going, it could help to make a separate post going more into detail on which actions to take and a more detailed sketch of the first plan (does not need to be perfect!).

Perhaps someone else has thought about this idea too, and we can brainstorm here a bit.

Alyssa Riceman @ 2023-08-24T22:20 (+8)

My broad image of what the plan would look like is something to the effect of:

Build a website whose main page consists of a large table of mappings from company names to EA subgroups within those companies, sorted alphabetically for ease-of-browsing. Then there are two branches currently coming to mind for how to gather information into the table: either a second page consisting of a submission-form for "here's some new information to add to the table", or wiki-style editing of the main page.

Then the first difficult hurdle: there needs to be some sort of moderation / fact-checking in place on the user-submitted data, in order to avoid problems with trolls submitting fake information, plus ideally also to avoid problems with its listings going stale. The wiki route is somewhat more accessible-to-editors, which on the one hand means staleness will be less of a danger but on the other hand means trolls will be more of one; but either way there are likely to be nonzero problems on both fronts, if the list gets at all big.

And then the second difficult hurdle: making the people who might benefit from the list aware of its existence. In one prong, this means search-engine-optimization; in another prong, this means posting it on the EA Forum; in a third prong, this means finding people who curate lists of EA-resource-links and letting them know about the list as a thing-that-exists-and-might-be-worth-linking; and plausibly there exist additional relevant angles that I'm currently failing to think of.

If I were working on this purely by myself, I'm reasonably confident that I could do the basic "create website and stick it at a URL somewhere" step, and plausibly I'd be able to figure out the search-engine-optimization and the spreading-word-of-its-existence. (Post on EA Forum, post on the EA Discord channel I'm in, mention its existence to friends who might know of other good places to post about it, et cetera.) However, I feel pretty much entirely inadequately-equipped for the task of moderation-of-user-submissions-to-the-list. So I suppose that's my big bottleneck, currently.

(Also important, on the side, is ensuring reasonable initial seeding of listed companies. Ideally people would be able to look at the list and see both Big Companies With Huge Numbers Of Employees and smaller startups, because otherwise I worry the list would end up with only people in one of those company-types submitting information to the list, on the assumption that the other type is Not The Target Audience, and that'd produce a list-usefulness-reducing feedback loop. I myself work for a single big company, but ideally I'd want to accumulate ~10 initial list-entries of varied company-size prior to posting about the list too much in the broader social sphere, so that most people's first impressions of the list would end up being of its being already-seeded.)

Lorenzo Buonanno @ 2023-08-25T06:23 (+4)

Hi Alyssa!

You might be interested in this table https://airtable.com/app6rLqQByByYXVP2/shrMATSSQRnHazk4a/tblgJmXDO1vROQzjd?backgroundColor=red&viewControls=on from @High Impact Professionals

https://www.highimpactprofessionals.org/groups I think your company is not in the table, and it might be worth adding!

Alyssa Riceman @ 2023-08-25T16:43 (+9)

Ooh. This does, indeed, look like pretty precisely the sort of thing I was envisioning, yes! Albeit less search-optimized than ideal, I think, in light of how I failed to find it even when actively running searches for e.g. "effective altruism at tech companies" in my first pass at trying-to-locate-prior-work-before-resigning-myself-to-doing-the-thing-myself.

Still, this sure does seem like pretty solid prior work in the field! Enough that I will likely pivot over from "try to build the thing myself" to "try to figure out how to improve the preexisting thing", at least for the time being. Thanks for the pointer!

Felix Wolf @ 2023-09-07T07:13 (+1)

This topic was discussed yesterday in the EA Germany Slack, starting with this article and asking if there is a website which lists all companies in Germany that match donations:

Why Workplace Giving Matters for Nonprofits + Companies

Some companies use external service providers for their matching. We can look at their clients to gain more information about who matches donations.

Matching Gift Software Vendors: The Comprehensive List

With some examples:

https://benevity.com/client-stories

https://www.joindeed.com/

https://360matchpro.com/partners/

Could be a first step.

ClaudiaSeow @ 2023-09-13T06:01 (+16)

Hi everyone, I'm Claudia!

I found out about Effective Altruism through the Waking Up app. Sam Harris brought William MacAskill as a guest to speak about Being Good and Doing Good, and it really resonated with me. The Waking Up app already did a great job in making me contemplate what it means to live a considered life, and then hearing about EA really got me thinking about my career and how I can contribute to the world.

I'm currently a Product Manager at a B2B startup, working remotely and based in Singapore. After reading the career guide from 80,000 hours, I found that operations management is probably the best career path for me at the moment, so I'm considering what options I can take to eventually get to Operations at a high impact organization. If anyone has a similar experience of pivoting from product management / working in startups to operations management, I'd love to get your advice!

I'm excited to be here, and looking forward to contributing to the EA community.

Felix Wolf @ 2023-09-15T19:06 (+2)

Hi Claudia,

welcome to the EA Forum. :)

There are EA ops groups:

EA Operations Slack for those who are currently working at EA orgs already, or their Facebook group Effective Altruism Operations.

At EAGx Berlin 2023 we had an operations meetup; I can ask around if you want to connect with some of the organizers.

Here is also the Effective Altruism Singapore local chapter.

Good luck with your career path.

Richard_Leyba_Tejada @ 2023-09-21T19:46 (+2)

Hi Claudia. I am reading the career guide from 80,000 hours (starting chapter 8 today). I have a systems/business analysis background. Best wishes on your career path. It seems that operations is the best career path for me too.

Felix, thank you for the links.

Rainbow Affect @ 2023-08-03T15:41 (+15)

Hi, I'm an undergraduate psychology student from Moldova. I found effective altruism while searching for a list of serious global problems for some fiction I was trying to write.

Now I'm trying to learn more about affective neuroscience and brain simulation in the hope that this information could help with AI alignment and safety.

Anyways, good luck with whatever you're working on.

JP Addison @ 2023-08-03T16:06 (+4)

I love that origin story, sounds fun. Welcome!

Rainbow Affect @ 2023-08-04T20:42 (+1)

Thank you a lot for these kind words, Mr. JP Addison!

I would like some EAs reading this comment to give some feedback on an idea I had regarding AI alignment that I'm really unsure about, if they're willing to. The idea goes like this:

- We want to make AIs that have human values.

- Human values ultimately come from our basic emotions and affects. These affects come from brain regions older than the neocortex. (Read Jaak Panksepp's work and affective neuroscience for more on that.)

- So, if we want AIs with human values, we want AIs that at least have emotions and affects or something resembling them.

- We could, in principle, make such AIs by developing neural networks that work similarly to our brains, especially regarding those regions that are older than the neocortex.

If you think this idea is ridiculous and doesn't make any sense, please say so, even in a short comment.

mhendric @ 2023-08-06T19:28 (+2)

Welcome to EA! I hope you will find it a welcoming and inspiring community.

I don't think the idea is ridiculous at all! However, I am not certain 2. and 3. are true. It is unclear whether all our human values come from our basic emotions and affects (this would seem to exclude the possibility of value learning at the fundamental level; I take this to be still an open debate, and know people doing research on this). It is also unclear if the only way of guaranteeing human values in artificial agents is via emotions and affects or something resembling them, even if it may be one way to do so.

Rainbow Affect @ 2023-08-08T06:54 (+1)

Oh my goodness, thanks for your comment!

Panksepp did talk about the importance of learning and cognition for human affects. For example, pure RAGE is a negative emotion in which we seek to aggressively defend ourselves from no one in particular. Anger is learned RAGE and we are angry about something or someone in particular. And then there are various resentments and hatreds that are more subdued and subtle and which we harbor with our thoughts. Something similar goes for the other 6 basic emotions.

Unfortunately, it seems like we don't know that much about how affects work. If I understand you correctly, you said that some of our values have little to no connection to our basic affects (be they emotional or otherwise). I thought that all our values are affective because values tell us what is good or bad and affects also tell us what is good or bad (i.e. values and affects have valence), and that affects seem to "come" from older brain regions compared to the values we think and talk about. So I thought that we first have affects (i.e. pain is bad for me and for the people I care about) and then we think about those affects so much that we start to have values (i.e. suffering is bad for anyone who has it). But I could be wrong. Maybe affects and values aren't always good or bad, and their difference may lie in more than how cognitively elaborated they are. I'd like to know more about what you meant by "value learning at the fundamental level".

mhendric @ 2023-08-10T14:38 (+2)

That is interesting. I am not very familiar with Panksepp's work. That being said, I'd be surprised if his model (_these specific basic emotions_; these specific interactions of affect and emotion) were the only plausible option in current cogsci/psych/neuroscience.

Re "all values are affective", I am not sure I understand you correctly. There is a sense in which we use value in ethics (e.g. not helping while persons are starving far away goes against my values), and a sense in which we use it in psychology (e.g. in a reinforcement learning paradigm). The connection between value and affect may be clearer for the latter than the former. As an illustration, I do get a ton of good feelings out of giving a homeless person some money, so I clearly value it. I get much less of a good feeling out of donating to AMF, so in a sense, I value it less. But in the ethical sense, I value it more - and this is why I give more money to AMF than to homeless persons. You claim that all such ethical sense values ultimately stem from affect, but I think that is implausible - look at e.g. Kantian ethics or virtue ethics, both of which use principles that are not rooted in affect as their basis.

Re: value learning at the fundamental level, it strikes me as a non-obvious question whether we are "born" with all the basic valenced states, and everything else is just learning history of how states in the world affected basic valenced states before; or whether there are valenced states that only get unlocked/learned/experienced later. Having a child is sometimes used as an example - maybe that is just tapping into existing kinds of valenced states, but maybe all those hormones flooding your brain do actually change something in a way that could not be experienced before.

Either way, I do think it may make sense to play around with the idea more!

Rainbow Affect @ 2023-08-11T02:47 (+2)

Thanks for commenting!

In other words, there seem to be values that are more related to executive functions (i.e. self-control) than affective states that feel good or bad? That seems like a plausible possibility.

There was a personality scale called ANPS (Affective Neuroscience Personality Scale) that was correlated with the Big Five personality traits. They found that conscientiousness wasn't correlated with any of the six affects of the ANPS, while the other traits in the Big Five were correlated with at least one of the traits in the ANPS. So conscientiousness seems related to what you talk about (values that don't come from affects). But at the same time, there was research about how much conscientious people are prone to experience guilt. They found that conscientiousness was positively correlated to how prone to guilt one is.

So, it seems that guilt is an experience of responsibility that is different in some way from the affective states that Panksepp talked about. And it's related to conscientiousness that could be related to the ethical philosophical values you talked about and the executive functions.

Hm, I wonder if AIs should have something akin to guilt. That may lead to AI sentience, or it may not.

Bibliography

Uncovering the affective core of conscientiousness: the role of self-conscious emotions, Jennifer V Fayard et al., J Pers., 2012 Feb. https://pubmed.ncbi.nlm.nih.gov/21241309/

A brief form of the Affective Neuroscience Personality Scales, Frederick S Barrett et al., Psychol Assess., 2013 Sep. https://pubmed.ncbi.nlm.nih.gov/23647046/

Edit: I must say, I'm embarrassed by how much these comments of mine go by the "This makes intuitive sense!" logic, instead of doing rigorous reviews of scientific studies. I'm embarrassed by how my comments have such a low epistemic status. But I'm glad that at least some EA found this idea interesting.

mhendric @ 2023-08-11T12:58 (+2)

Re edit, you should definitely not feel embarrassed. A forum comment will often be a mix of a few sources and intuition rather than a rigorous review of all available studies. I don't think this must hold low epistemic status, especially when the purpose is exploring an idea rather than, say, a call for funding or such (which would require a higher standard of evidence). Not all EA discussions are literature reviews, otherwise chatting would be so cumbersome!

I'd recommend using your studies to explore these and other ideas! Undergraduate studies are a wonderful time to soak up a ton of knowledge, and I look fondly upon mine - I hope you'll have a similarly inspiring experience. Feel free to shoot me a pm if you ever want to discuss stuff.

Kuiyaki @ 2023-07-18T02:29 (+15)

Hello everyone,

I am Joel Mwaura Kuiyaki from Kenya. I was introduced to the EA movement by a friend. I thought it might be one of those normal lessons, but I was actually intrigued and really enjoyed the first intro sessions we had. It was what I had been looking for for a long while.

I intend to specialize in Effective giving, governance, and longtermism.

However, I am still interested in learning more about other cause areas and implementing them.

jjanosabel @ 2023-09-04T14:11 (+14)

Hello everyone,

I am Janos, a newbie member of two days. Originally from Hungary, but I have been living in London since the late 1950s.

Interested in systemic reform of the current version of "capitalism" which is not capitalism as Adam Smith taught us.

I consider Effective Altruism as an immediate response to current suffering, like a First Aid service. But it has to run in parallel with effective efforts to transform a structurally faulty global system.

As I see that problem, it is based on a bad economic model which is structured to extract the wealth created socially, and transfer it to the top percentile of a population.

PS

I have a self-taught economist's detailed view of the "nuts'n'bolts" analysis of the problem...

DC @ 2023-09-16T06:09 (+10)

What is your nuts'n'bolts analysis of the problem?

Joe Rogero @ 2023-07-09T16:15 (+11)

(Cross-posted on the EA Anywhere Slack and a few other places)

I have, and am willing to offer to EA members and organizations upon request, the following generalist skills:

- Facilitation. Organize and run a meeting, take notes, email follow-ups and reminders, whatever you need. I don't need to be an expert in the topic, I don't need to personally know the participants. I do need a clear picture of the meeting's purpose and what contributions you're hoping to elicit from the participants.

- Technical writing. More specifically, editing and proofreading, which don't require I fully understand the subject matter. I am a human Hemingway Editor. I have been known to cut a third of the text out of a corporate document while retaining all relevant information to the owner's satisfaction. I viciously stamp out typos.

- Presentation review and speech coaching. I used to be terrified of public speaking. I still am, but now I'm pretty good at it anyway. I have given prepared and impromptu talks to audiences of dozens-to-hundreds and I have coached speakers giving company TED talks to thousands. A friend who reached out to me for input said my feedback was "exceedingly helpful". If you plan to give a talk and want feedback on your content, slides, or technique, I would be delighted to advise.

I am willing to take one-off or recurring requests. I reserve the right to start charging if this starts taking up more than a couple hours a week, but for now I'm volunteering my time and the first consult will always be free (so you can gauge my awesomeness for yourself). Message me or email me at optimiser.joe@gmail.com if you're interested.

richardiporter @ 2023-07-07T12:34 (+9)

We should use quick posts a lot more. And anyone doing the more typical long posts should ALWAYS do the TLDRs I see many doing. It will help not scare people off. I’m new to these forums, joined about a month ago coming in from first hearing Will M on Sam Harris a few times, reading Doing Good Better, listening to lots of 80k hours pods and doing the trial Giving What We Can pledge, joining EA everywhere slack etc. But I find the vast majority of these forum posts extremely unapproachable. I consider myself a pretty smart guy and I’m pretty into reading books and listening to pods, but I’m still quite put off by the constant wall of words I get delivered by the forum digest (a feature I love!). I have enjoyed a few posts I’ve found and skimmed. It’s just that the main content is usually way too much.

NickLaing @ 2023-07-07T13:00 (+3)

Completely agree, nice one - and I even forgot to do a TLDR on my last post! (Although it was a 2 minute read and a pretty easy one I think haha.)

Great to have you around :)

richardiporter @ 2023-08-19T02:37 (+1)

Thanks Nick!

Connacher Murphy @ 2023-07-05T20:17 (+9)

Hi everyone, I'm Connor. I'm an economics PhD student at UChicago. I've been tangentially interested in the EA movement for years, but I've started to invest more after reading What We Owe The Future. In about a month, I'm attending a summer course hosted by the Forethought Foundation, so I look forward to learning even more.

I intend to specialize in development and environmental economics, so I'm most interested in the global health and development focus area of EA. However, I look forward to learning more about other causes.

I'm also hoping to learn more about how to orient my research and work towards EA topics and engage with the community during my studies.

Tobias Dänzer @ 2023-09-17T11:00 (+7)

What exactly does the "Request for Feedback" button do when writing a post? I began a post, clicked the aforementioned button, and my post got saved as a draft, with no other visible feedback as to what was happening, or whether the request was successful, or what would happen next.

Also, I kind of expected that I'd be able to mention what kind of feedback I'm actually interested in; a generic request for feedback is unlikely to give me the kind of feedback I'm interested in, after all. Is the idea here to add a draft section with details re: the request for feedback, or what?

JordanStone @ 2023-09-10T14:51 (+7)

Hello! I'm just here to introduce myself as I think I'm a bit of an unusual effective altruist. I am an astrobiologist and my research focuses on the search for life on Mars. Before discovering effective altruism I was always very interested in the long term future of life in the context of looming existential risks. I thought the best thing to do was to send life to other planets so that it would survive in a worst case scenario. But a master's degree later, I got into effective altruism and decided that this cause was a 10/10 on importance, 10/10 on neglectedness, and 1/10 on tractability.

So my focus has changed more recently as I progress through my PhD. I'm interested in the moral implications of astrobiology as it plays a really important role at the core of longtermism. There are a few moral implications that depend on astrobiology research:

- Research conclusion: The universe and our Solar System are full of habitable celestial bodies. Moral implication: The number of potential future humans is huge in the long term future, so we ought to protect these people through research into existential risks.

- Research conclusion: The universe seems to be empty of life. Moral Implication: Life on Earth is extremely valuable, so ensuring its survival should be the highest moral priority.

- Research conclusion: Planets like Earth are extremely rare and far away. Moral implication: "there is no planet B" - we ought to protect our Earth for the next ~1000 years as there is no backup plan.

I plan to investigate these ideas further and see where they lead me. I think that as I progress in my career, I can employ philosophy and outreach to help people understand the long term perspective and inspire action towards tackling existential risks. Great to meet you all!

Richard_Leyba_Tejada @ 2023-09-21T19:51 (+2)

Very interesting. I enjoyed reading your thoughts on life here and there (loose reference to the "Now and Then, Here and There" anime). Our planet and life are very precious. Good meeting you :)

Rafael Harth @ 2023-09-16T10:46 (+2)

Are you aware of Robin Hanson's Grabby Aliens model? It doesn't have an immediate consequence for the research conclusions you listed, but I think it (and anthropic considerations in general) is in the wheelhouse of interesting stuff if you care about space travel.

JordanStone @ 2023-09-23T20:08 (+1)

Yeah, though I only became aware of it after getting involved with EA. It seems to fit in the group of very interesting philosophical space things that are scary and I have no idea what to do about! Joining the club with the Great Filter, the doomsday argument, and the Fermi paradox.

Felix Wolf @ 2023-09-11T20:58 (+2)

Hi Jordan,

welcome to the EA Forum. :)

@Ekaterina_Ilin studies stars and exoplanets at the Leibniz Institute for Astrophysics Potsdam. Maybe this connection can help you with your research.

JordanStone @ 2023-09-23T20:09 (+2)

Hi Felix. Thanks for the welcome and the introduction to @Ekaterina_Ilin :)

Paul Sochiera @ 2023-09-19T09:23 (+6)

Hi everyone, I'm Paul, a software engineer based in Münster, Germany.

I came across Effective Altruism because of the book by William MacAskill a few years ago. Later, I came in contact with 80,000 Hours, Giving What We Can and many of their representatives in various podcasts/books/articles. I consider Effective Altruism one of the greatest movements I have come across and I feel very grateful for being able to contribute.

Up until now, I have "only" earned to give. But having recently quit my job and having had a few start-up experiences, I now want to go looking for common problems in the main cause area problem spaces, to solve them and directly contribute to them.

Does anyone who works in these fields know of major problems? Would you be willing to have a short chat with me?

If anyone needs advice on all things software, I would be happy to help out as well.

I'm happy to be here, and look forward to contributing to EA.

Gerald Monroe @ 2023-08-29T00:54 (+6)

Hi. I am posting under my real name. I have been effectively banned from LessWrong for non-actionable reasons. The moderators made a bunch of false accusations publicly without giving me a chance to review or rebut any of them. I believe it was because I don't think AI is necessarily inevitably going to cause genocide and that obvious existing techniques in software should allow us to control AI. This view seems to be banned from discussion, with downvotes used to silence anyone discussing it. I was curious if this moderation action applied here.

The chief moderator, Raemon, has sanctioned me twice with no prior warning of any misconduct, making up new rules explicitly used against me. He has openly stated he believes in rapid foom - aka extremely rapid world takeover by an AI system which continues to self replicate at an exponential rate, with doubling times somewhere in the days to weeks.

Rainbow Affect @ 2023-08-30T09:55 (+5)

Mr. Monroe, that sounds like a terrible experience to go through. I'm sorry you went through that.

(So, there are software techniques that can allow us to control AI? Sounds like good news! I'm not a software engineer, just someone who's vaguely interested in AIs and neuroscience, but I'm curious as to what those techniques are.)

archdawson @ 2023-08-23T20:36 (+6)

Hello! My name is Dawson. I am near to completing my PhD in aerospace engineering. I recently switched to working remotely and I have been missing collaborative projects and working with people.

If you know of projects, volunteer opportunities, or part-time jobs that would benefit from someone with an engineering research background, please let me know! My primary goal is having a regular reason to talk with people, and I would love to use that time to contribute to EA rather than working at a coffee shop or something. (I'm reading through the EA list of opportunities as well, but I thought I would post something here just in case someone reading this knows of an opportunity)

Linch @ 2023-08-24T01:42 (+5)

Welcome Dawson! Where are you physically based?

archdawson @ 2023-08-24T11:35 (+2)

I live in Hartford, Connecticut

Bat'ko Makhno @ 2023-07-04T05:15 (+6)

A thought, with low epistemic confidence:

Some wealthy effective altruists argue that by accumulating more wealth, they can ultimately donate more in the long run. While this may initially seem like a value-neutral approach, they reinforce an unequal rather than altruistic distribution of power.

Widening wealth disparities and consolidating power in the hands of a few further marginalises those who are already disadvantaged. As we know, more money is not inherently valuable. Instead, it is how much someone has relative to others that influences its exchange value, and therefore their influence over scarce resources, in a zero-sum manner with other market participants, including recipients of charity and their benefactors.

Elias Malisi @ 2023-08-19T03:35 (+1)

While I agree that more money is not inherently valuable, I believe that there is a valid case for patient philanthropy, which you have not engaged with in your criticism of the concept.

Moreover, I disagree with the statement that unequal distributions of power are conceptually opposed to distributions that maximise welfare impartially in light of the argument that it is likely good to increase the power of agents who are sufficiently benevolent and intelligent.

I assume that you refer to distributions of power which maximise welfare impartially by saying altruistic distributions. If this interpretation is incorrect, my disagreement might no longer apply.

Epistemic status from here: I do not have a degree in economics and my knowledge of market dynamics is fairly limited so I might have missed some implicit fact which validates the argument I am commenting on.

I believe that it may be inappropriate to see accumulating money as determining influence over scarce resources in a zero-sum manner since gaining money does not necessarily reduce the influence of any other involved parties over existing resources.

To understand this, we can look at the following scenario:

- Alice and Bob both own $100

- There are 5 insecticide-treated bed nets which are also owned by Alice

- Bob earns $100. He now has twice as much money as Alice.

- However, Alice's 5 bed nets have retained their full effectiveness and she isn't forced to sell any of them to Bob.

- Therefore, Alice has retained her influence over the scarce resource called bed nets even though the relative value of her financial resources decreased.

In the real world Bob could presumably leverage his financial advantage by hiring mercenaries to steal the bed nets from Alice or using other forms of coercion but he does not necessarily do this.

Thus, Alice has lost potential influence but not influence, which is an important distinction because altruists are highly unlikely to use their money for the purpose of actively taking resources from recipients of charity or their benefactors.

Notably, the evaluation would look different if one believed in strong temporal discounting of money since the altruists would then be diminishing the value they are providing through their donations by delaying them, thereby subtracting from the influence of the charities relative to the counterfactual. But in that case the altruist would not have gained any influence, making the sum negative but not zero.

Carlos Ramírez @ 2023-09-06T01:11 (+5)

I was doing the 80,000 hours career guide, but I suppose it's too ambitious for me. I just want to work for an org with a good altruistic mission, not completely maximize my impact. What's the career advice for that? I've been doing full-stack web dev for 11 years now, so I've learned a thing or two about running big complicated projects, at least in the software world, but I think this transfers, since complexity is complexity.

I looked at the orgs listed in the software dev career path, but they didn't seem very inspiring. I'm open to going back to college, but I wouldn't be sure for what. I hear EA still has an operations bottleneck, but it doesn't seem like that's something you can study for, and I'm not sure if transitioning my career to management (a tricky move, as I haven't really gunned for leadership, though I have been the lead at times) would enable a jump to operations later on.

Any advice?

Imma @ 2023-09-06T06:18 (+4)

80k is not the only one who provides altruistic career advice. You can check out

There are probably a few more.

Michelle_Hutchinson @ 2023-11-17T16:25 (+2)

Sorry to hear you didn't find what you were looking for in the 80,000 Hours career guide. You could consider checking out this website that maintains a list of social purpose job boards. I'd guess that going through some of those would yield some good options for full stack web-dev roles at organisations with a broad range of missions, hopefully including some inspiring ones!

quinn @ 2023-07-06T10:52 (+5)

I'm extremely upset about recent divergence from ForumMagnum / LessWrong.

- 1 click to go from any page to my profile became 2 clicks. (is the argument that you looked at the clickstream dashboard and found that Quinn was the only person navigating to his profile noticeably more than he was navigating to say DMs or new post? I go to my profile a lot to look up prior comments so I can not repeat myself across discord servers or threads as much!)

- permalink in the top right corner of comment, instead of clicking the timestamp (David Mears suggests that we're in violation of industry standard now)

- moving upvote/downvote to the left, and removing it from the bottom! This seems backwards to me: we want more people upvoting/downvoting at the bottom of the posts (presumably to decrease voting on things without actually reading) and fewer people voting at the top!

I'm neutral on QuickTakes rebrand: I'm a huge fan of shortform overall (if I was Dictator of Big EA I would ban twitter and facebook and move everybody to shortform/quicktake!), I trust y'all to do whatever you can to increase adoption.

Sarah Cheng @ 2023-07-11T20:14 (+13)

Thanks for sharing your feedback! Responding to each point:

- I removed the profile button link because I found it slightly annoying that opening that menu on mobile also navigates you to your profile, plus I think it's unusual UX for the user menu button to also be a link.

- We recently changed this back, so now both the timestamp and the link icon navigate you to the comment permalink. We’ll probably change the link icon to copy the link to your clipboard instead.

- We didn't change the criteria for whether the vote arrows appear at the bottom of a post - we still use the same threshold (post is >300 words) as LW does. We did move the vote arrows from the right side of the title to the left side of the title on the post page. This makes the post page header more visually consistent with the post list item, which also has the karma info on the left of the title.

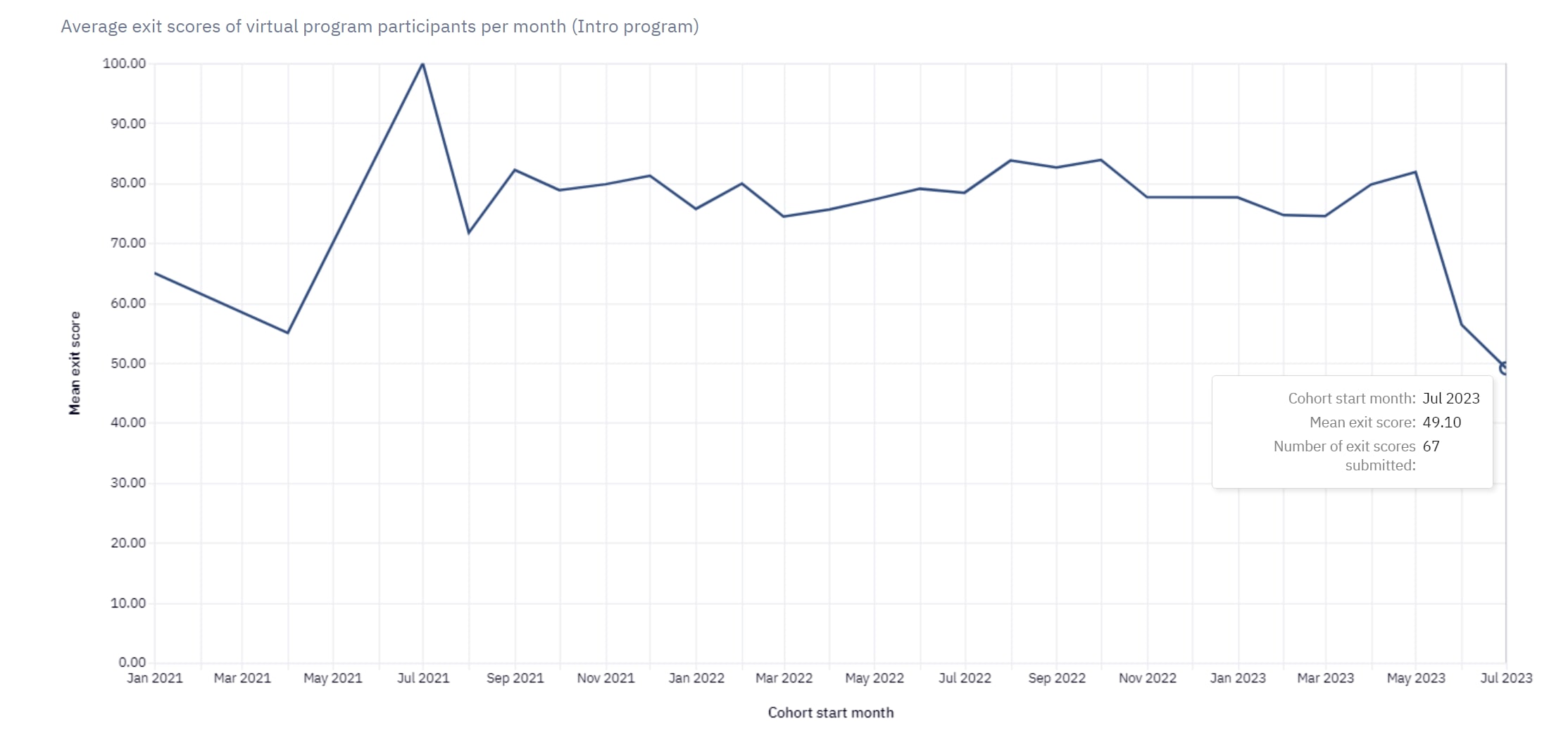

Defacto @ 2023-09-25T21:57 (+4)

I think it's important that there isn't a knee jerk reaction (e.g. it may not be the fault of any person, there may be a complex cause, or there may not be any real issue at all).

Why have the virtual program scores sort of fallen off? That's a huge stat change.

Defacto @ 2023-09-25T21:57 (+3)

example reasons:

- someone with a different vision has started and there's some rough spots to smooth out

- someone with a different vision has started and this is exactly the correct score produced by their correct programs

- there was gaming or gardening of the scores and this gardening stopped

- staff left or anticipate leaving after funding was pulled, and have a "school's out" mentality, e.g. participants showing up to an empty Zoom meeting

- reception to the surprise SBF appearance was less positive than expected

Conor McGurk @ 2023-09-26T15:37 (+12)

Hi Defacto! I work on Virtual Programs at CEA.

I believe Angelina left a note in the post where she shared this graph, but right before that cycle we changed the quiz (only 4/10 of the questions are the same). We knew these new questions were quite a bit harder, although candidly we were not expecting score drops this dramatic. We're doing some investigation of the questions, and my guess is we'll iterate a bit more since I think some of the new questions were difficult in ways that are not actually about understanding of the content.

With all that said, I wouldn't focus too much on changes in this score right now, since they are almost certainly tracking changes in evaluation criteria and not changes in student understanding.

JWS @ 2023-09-18T12:36 (+4)

Are the Career Conversations and AI Pause Debate weeks on the Forum part of a trend to have 'week-specific' topics? I sort-of like the idea to focus Forum discussion on rotating/specific topics (alongside the usual dynamics) to see what the Community thinks of issue X at a given time as well as share up-to-date opinions and resources.

If it is, is there a prospective calendar anywhere?

Neill @ 2024-01-05T14:04 (+3)

Hi all,

I came across the 80,000 hours initiative a few years ago and it 100% aligned with my purpose in life.

I'm on track to give 50% of my salary to the top EA charity [We are downsizing our home] later this year.

Just having this purpose has undoubtedly made me happier and I'd like to promote this benefit to others, as my second passion is increasing Happiness, the synergy between the two is fantastic.

In the meantime, I've been adjusting some famous quotes to include an EA mindset, what thinks you?

'If you want to lift yourself up, lift up someone else.'

Booker T. Washington

To: 'If you want to lift yourself up as high as possible, lift up someone else as high as you can.'

'If you want happiness for a year, inherit a fortune. If you want happiness for a lifetime, help someone else.'

Confucius

To: 'If you want happiness for a year, inherit a fortune. If you want maximum happiness for a lifetime, help someone else as best you can.'

'As the purse is emptied the heart is filled.'

Victor Hugo

To: 'As the purse is emptied the heart is filled. But keep it filled enough to keep yourself.'

John Usher @ 2023-08-20T23:40 (+3)

Perhaps not entirely EA, but I came across this potentially interesting and disturbing post by a statistician casting scepticism on the evidence that convicted Lucy Letby, and I wondered if any rationalists could assess its claim. It potentially touches on weaknesses in the justice system regarding medical evidence and how it’s weighed up. https://gill1109.com/2023/05/24/the-lucy-letby-case/?amp=1

Lorenzo Buonanno @ 2023-08-20T23:48 (+4)

I wondered if any rationalists could assess its claim.

You might want to post this on LessWrong: "an online forum and community dedicated to improving human reasoning and decision-making. We seek to hold true beliefs and to be effective at accomplishing our goals. Each day, we aim to be less wrong about the world than the day before."

emre kaplan @ 2023-08-09T08:23 (+3)

The blog post "This can't go on" is quite prominent in the introductory reading lists to AI Safety. I really struggle to see why. Most of the content in the post is about why the growth we currently have is very unusual and why we can't have economic growth forever. I think the mainstream audience is already OK with that, and that's a reason they are sceptical of AI boom scenarios. When I first read that post I was very confused. After I organised a few reading groups, other people seemed to have similar confusions too. It's weird to argue from "look, we can't grow forever, growth is very rare" to "we might have explosive growth in our lifetimes." A similar reaction here.

Habryka @ 2023-08-19T01:47 (+4)

It's an argument for most-important century hypothesis. Seems like something big has to change this century, things can't go on in terms of growth. This is IMO substantial evidence in favor of either extinction or like a century of continued growth, and very strong evidence against "the world in 100 years will look kind of similar to what it looks like today".

emre kaplan @ 2023-08-26T14:54 (+8)

I disagree with the following:

"very strong evidence against "the world in 100 years will look kind of similar to what it looks like today"."

Growth is an important kind of change. Arguing against the possibility of some kind of extreme growth makes it more difficult to argue that the future will be very different. Let me frame it this way:

Scenario -> Technological "progress" under scenario

1. AI singularity -> Extreme progress within this century

2. AI doom -> Extreme "progress" within this century

3. Constant growth -> Moderate progress within this century, very extreme progress in 8200 years

4. Collapse through climate change, political instability, war -> Technological decline

5. Stagnation/slowdown -> Weak progress within this century

Most of the mainstream audience gives credence mainly to scenarios 3, 4 and 5. Of these, scenario 3 is the one with the highest technological progress. The blog post is mostly spent on refuting scenario 3 by explaining the difficulty and rareness of growth and technological change. This argument makes people give more credence to scenarios 4 and especially 5 rather than 1 and 2, since scenarios 1 and 2 also involve a lot of technological progress.

For these reasons, I'm more inclined to believe that an introductory blog post should be more focused on assessing the possibility of 4 and 5 rather than 3.

Arguing against 3 is still important, as it is decision-relevant on the questions of whether philanthropic resources should be spent now or later. But it doesn't look like this topic makes a good intro blog-post for AI risk.
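The "very extreme progress in 8200 years" entry for the constant-growth scenario can be sanity-checked with quick compound-growth arithmetic. The sketch below assumes a constant 2% annual growth rate; that rate and the function names are illustrative assumptions, since the comment itself does not state a rate.

```python
import math


def log10_growth_factor(annual_rate: float, years: int) -> float:
    """Return log10 of the total growth factor after compounding for `years`."""
    # Work in logarithms so the huge factor never has to be stored directly.
    return years * math.log10(1 + annual_rate)


def growth_factor(annual_rate: float, years: int) -> float:
    """Return the total growth factor after compounding for `years`."""
    return (1 + annual_rate) ** years


ASSUMED_RATE = 0.02  # assumption: 2% per year, held constant

century = growth_factor(ASSUMED_RATE, 100)
long_run_exponent = log10_growth_factor(ASSUMED_RATE, 8200)

print(f"After 100 years the economy is about {century:.1f}x larger")
print(f"After 8200 years it is about 10^{long_run_exponent:.1f}x larger")
```

Under that assumption, a century of constant growth multiplies the economy by a single-digit factor, while 8200 years multiplies it by a number with roughly seventy digits, which is the gap between "moderate" and "very extreme" in the list.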

Henry Wilkin @ 2023-08-03T20:33 (+3)

Hello everyone,

I was introduced to the effective altruism movement about a decade ago, but it's taken me a while to engage with it. At present, I'm interested in exploring ways that modern information and computing technology can help people more effectively align short term decisions (e.g. purchases, housing choices, transportation, employment, etc.) with their ultimate/long-term objectives (e.g. in medicine, economics, social justice, sustainability, etc.), as well as understanding the ethical implications of and possibilities allowed by emerging technologies, and what factors might ultimately determine or limit how those technologies are adopted and expressed.

Richard_Leyba_Tejada @ 2023-09-21T20:03 (+2)

Hi, I am Richard. I live in the US. I moved to the US from the Dominican Republic in 1994. I am reading the career guide from 80,000 Hours (starting chapter 8 today). I have a systems/business analysis background. I want to use my career to do good in EA, extreme poverty and mental health. Looks like operations is the best path for me. Looking forward to meeting others in this space, learning more and contributing.

Azad Ellafi @ 2023-09-15T18:06 (+2)

Hello everybody, I'm Azad.

I've been into EA for a while but I never explored the community aspect. Since I've decided to go to EAG this year and help volunteer, I thought it would be worth being more active on the forums and engaging with the community more.

I'm currently a student studying Computer Science. On the side I enjoy reading philosophy papers and books. My introduction into EA was through an Ethics course I took, and I pretty quickly bought it as a moral obligation. From there on I took an interest in AI and animal suffering. Nice to meet you all!

quinn @ 2023-09-13T20:13 (+2)

I would like for XPT to be assembled with the sequence functionality plz https://forum.effectivealtruism.org/users/forecasting-research-institute I like the sequence functionality for keeping track of my progress or in what order did I read things

karl hedderich @ 2023-07-03T21:49 (+2)

I have a proposal for making an AGI killswitch.

Assuming god-like computational budgets, algorithmic improvements, and hardware improvements, can you use fully homomorphic encryption (FHE) to train and run an AGI? FHE allows you to run computation on encrypted data without decrypting it. Wouldn't such an AGI find the world illegible without its input being specifically encrypted for it with a key?

Can the key then be split into shards so that m of n shards are needed to encrypt queries to the FHE AGI? Can you also create an m-of-n function so that if m of n shards are destroyed the key is useless?

Can you distribute the keys in such a way so if the AGI destabilizes or hurts society m of n keys are destroyed as a byproduct and the AGI is functionally turned off?

I think this would create a kill switch that is hard to subvert by the AGI and easily triggered by society.

At the limit you could create a shard for every person on the planet with only one shard deletion being required to delete the key. Then if anyone doesn't like the way things are going with the AGI they could delete their key killing it.
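The key-splitting part of this proposal resembles threshold secret sharing. Below is a minimal sketch using Shamir's secret sharing over a prime field. It is a swapped-in standard technique for illustration, not something the proposal specifies, and it says nothing about the FHE side. The names `split_secret` and `recover_secret`, the choice of prime, and the toy key value are all assumptions.

```python
import secrets

# Assumption: a Mersenne prime field large enough for a toy 126-bit key.
PRIME = 2**127 - 1


def split_secret(secret: int, threshold: int, num_shards: int) -> list[tuple[int, int]]:
    """Split `secret` into `num_shards` shards; any `threshold` of them recover it."""
    if not 1 <= threshold <= num_shards:
        raise ValueError("need 1 <= threshold <= num_shards")
    if not 0 <= secret < PRIME:
        raise ValueError("secret must lie in the field")
    # Random polynomial of degree threshold - 1 whose constant term is the secret.
    coeffs = [secret] + [secrets.randbelow(PRIME) for _ in range(threshold - 1)]

    def evaluate(x: int) -> int:
        result = 0
        for coeff in reversed(coeffs):  # Horner's rule, mod PRIME
            result = (result * x + coeff) % PRIME
        return result

    return [(x, evaluate(x)) for x in range(1, num_shards + 1)]


def recover_secret(shards: list[tuple[int, int]]) -> int:
    """Lagrange-interpolate the polynomial at x = 0 from the given shards."""
    secret = 0
    for i, (xi, yi) in enumerate(shards):
        numerator, denominator = 1, 1
        for j, (xj, _) in enumerate(shards):
            if i != j:
                numerator = (numerator * -xj) % PRIME
                denominator = (denominator * (xi - xj)) % PRIME
        # pow(..., -1, PRIME) is the modular inverse (Python 3.8+).
        secret = (secret + yi * numerator * pow(denominator, -1, PRIME)) % PRIME
    return secret


key = 123456789  # toy stand-in for a real encryption key

# 3-of-5: any three shards are enough to rebuild the key.
shards = split_secret(key, threshold=3, num_shards=5)
print(recover_secret(shards[:3]) == key)

# 5-of-5: every shard is required, so deleting a single shard disables the key.
all_required = split_secret(key, threshold=5, num_shards=5)
print(recover_secret(all_required) == key)
```

In this scheme, "destroying m of n shards makes the key useless" corresponds to choosing a threshold of n - m + 1, and the one-shard-per-person limit case is the n-of-n setting shown at the end.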

You might argue that this is a brute force method for alignment. Everyone is happy with the AGI's behavior or the AGI is rendered nonfunctional. This method avoids defining what alignment is. This is important because defining what an aligned state is is intractable. There are too many divergent and contradictory opinions about what alignment means to ever reach universal consensus. What alignment means will necessarily change from person to person and moment to moment. It is too subjective and value-laden a goal to be objectively defined and put into an algorithm to control an AI.

I would love criticism of this idea to know why it is impractical, not-even-wrong, or flawed.

One problem is that the FHE AGI could secretly steal enough key shards to generate its own functional copy of the key. This seems hard to do but not impossible.

Another problem is that the AGI could get smart enough to break the FHE.

FHE of the AGI might make it harder to interpret its internal workings.

Weaver @ 2023-07-04T21:57 (+1)

I feel like this is an excessively software driven way to do this. I have a suggestion.

Make it a hardware thing.

AI relies on circuitboards and memory and connections etc, so instead of making it something that can be found out using an algorithm, make it a physical key that does a physical thing.

Think of a lock on an obscure door versus a really really good password.

You can brute force any password, given time. Physical access is access.

If you can't even find the widget? Yeah.

The opposite is also important. If the Killswitch needs to be integrated then removed it should be a "this needs to be done once every two years but the rest of the time it's hidden via obscurity".

Also development of AGI, just making it would be difficult so hastening it for no reason? Hmmm.

Ethan Roland @ 2023-10-08T18:51 (+1)

Is there any online aggregator for short term volunteering opportunities with EA-affiliated orgs?

Anecdotally I've seen a lot of interest in volunteering among the EAs in my area, but a quick search for volunteering opportunities online only shows the more traditional "staff a soup kitchen" or "do phone banking" style opportunities. While certainly these are good things, it seems against the ethos of doing the most good with limited resources. Many of the EAs near me are professionals with expertise in high-demand / low-supply skill sets, and it seems like there ought to be a low-friction way for them to use their competitive advantages towards direct-impact work.

To make things concrete I'm imagining a site like Fiverr with tasks like "help us analyze the data from this study" or "design marketing materials" or "copyedit this communications letter". I imagine all tasks would be relatively small in scope (1-15 hours of work) and self-contained (able to be comprehensively understood by someone with relevant skills with <30 minutes of explanation). Perhaps there could be something on the site where you can sort opportunities by expected impact / cause area / skills required / etc.

Does anything like this already exist?

Jens Aslaug @ 2023-08-30T17:17 (+1)

I have a question regarding artificial sentience.

While I do agree that it might be theoretically possible and could cause suffering on an astronomical scale, I do not understand why we would intentionally or unintentionally create it. Intentionally I don't see any reason why a sentient AI would perform any better than a non-sentient AI. And unintentionally, I could imagine that with some unknown future technology, it might be possible. But no matter how complex we make AI with our current technology, it will just become a more "intelligent" binary system.

So am I right in these statements? And if so, is the cause area more about preparing for a potential future technology? If you have any information or sources I will be very thankful for your time. I welcome any thoughts.

Elias Malisi @ 2023-08-19T00:02 (+1)

Introducing Myself

Who I am

My name is Elias Malisi, though my friends call me Prince.

I am an undergraduate student of philosophy & physics at the University of St Andrews and I volunteer as an IT-officer for the physics education non-profit Orpheus e.V.

My mission is to improve the long-term future through research and advocacy that seek to mitigate risks from misaligned incentive structures while advancing the development of safe AI.

I grew up in Germany where I recently graduated high school with a perfect GPA as the best student of ethics in the country on top of receiving multiple awards for academic excellence in physics and English.

My history with EA

I was introduced to EA through the Atlas Fellowship around May 2023 and have since been reading EA materials intensively. Moreover, I am enrolled in the Introductory EA Virtual Program, the Precipice Reading Group, and the AI Safety Quest Pilot Program at the time of this post.

My interests

I am primarily interested in three sets of related fields:

1. (Complex) systems science, mechanism design, and machine learning as applied to AI safety and improving institutional decision making

2. Acting, video production, and digital outreach as applied to communicating important ideas, building EA, and promoting positive values

3. Rationality and self-improvement as applied to improving my capacity to pursue 1. and 2.

What I can do for you

- I know quite a lot about ways for high-school students and, to a more limited extent, early undergraduates to build career capital through competitions, fellowships, or camps, so feel free to reach out to me if such information would be useful to you.

- Also reach out to me if you want to add a promising young researcher to your network. I expect that I will be able to provide much more value to you in the future.

What you can do for me

I am looking for mentorship, opportunities, and funding to build career capital in the following areas:

- Systems Science

- Machine Learning and Technical AI Safety

- Mechanism Design

- Acting

- Video Production

- Digital Outreach and Copywriting

If you consider providing mentorship or know someone who likely would, I believe the expected value of reaching out to me to see whether I would be a good fit is highly positive since any suitable mentoring opportunity would accelerate my impact significantly.

Think of it as using a bit of your time for hits-based giving.

Additionally, I would love to hear about any EA organisations that specialise in or fund upskilling for aspiring EA communicators.

Furthermore, I would welcome feedback on whether or not you think that acquiring skills in acting and video production would likely be valuable for an aspiring EA communicator, assuming that they are a good fit for it. Vote agree to indicate positive counterfactual impact and disagree to indicate that you believe counterfactuals to be more valuable.

PS: Please let me know about appropriate places for cross-posting this introduction.

m(E)t(A) @ 2023-07-26T23:39 (+1)

Has anything changed on the forum recently? I am no longer able to open posts in new tabs with middle-click? Is it just me?

JP Addison @ 2023-07-27T16:19 (+5)

Sorry about that, we recently broke this fixing another bug. Fix should be live momentarily.

Shalott @ 2023-07-16T09:51 (+1)

Hello all, I'm new here and trying to find my way around the site. The main reasons I joined are:

- to look for practical information about donating and tax returns in the Netherlands. Does anyone know where I could find information about this? I'm trying to figure out if it's worth it to get something back from taxes and what hoops I have to jump through.

- suggestions for charities. My current idea is that I want to donate an amount to GiveWell for them to use as they see fit, and donate an amount to a charity specialized in providing contraception/abortion/gynaecologic health for women. If you have suggestions I would love to hear it. Preferably charities with an ANBI-status, which is a necessity in the Netherlands for the tax returns. Right now the only things I've found are SheDecides, which is more political action less practical, and Pathfinder International, which doesn't have the ANBI stamp.

Any replies are welcome!

Imma @ 2023-07-16T16:20 (+4)

Welcome to the EA forum. Great to hear that you would like to donate :).

You can find information about charity selection and tax on the Doneer Effectief website. You can donate to GiveWell recommended charities via Doneer Effectief, but also to a few other charities. They also have a page with info about tax - but you may want to read the website of the Belastingdienst to double check. (I can try to find the info in English for you upon request).

If you are looking for a community where you can talk about giving and charity selection, see De Tien Procent Club which is specific for the Netherlands, and Giving What We Can which is international.

Shalott @ 2023-07-17T20:26 (+1)

Thanks, that's very useful information! And Dutch is just fine :)

James Herbert @ 2023-08-21T09:38 (+1)

Welcome to the Forum Shalott! The Tien Procent Club is a great suggestion from Imma but you can get a broader overview of the Dutch EA community via Effectief Altruisme Nederland at effectiefaltruisme.nl.

For example, upcoming events include a career planning session, a workshop on lobbying, and various socials around the country. We also have a co-working space in Amsterdam, an introductory course where you can learn more and meet new people, information on volunteering, and much more besides! Feel free to get in touch if you have any further questions.

Lorenzo Buonanno @ 2023-07-16T16:16 (+3)

Hi Shalott, welcome to the Forum!

- On taxes, you might be interested in this page https://doneereffectief.nl/advies/belastingvoordeel/ (en: https://doneereffectief.nl/en/advice/tax-and-anbi/ )

- On suggestions for charities, there is no easy answer, as it depends a lot on your empirical and moral beliefs. You might be interested in these posts on family planning, which seem related to what you're looking for. I have personally donated to Family Empowerment Media

Shalott @ 2023-07-17T20:27 (+1)

Thanks, that looks useful!

sagearbor @ 2023-07-12T14:07 (+1)

TLDR: Bio data scientist here concerned about AI risks, working to get his institution (DCRI) at Duke working on AI and alignment.

--

Long Version: I wrote below blurb and pasted it into https://bard.google.com/ to get TLDR to us...

Can you create a TLDR for the following post: Hi Sage Arbor here. I just joined effectivealtruism.org and have been listening to the 80K podcast for about a year. I work in data science (PhD biochem) and currently work with clinical trails at Duke. My main concernt is AI in the next 10 years. Id like my institution Duke Clinical Research Institute (DCRI) create a center of excellence for AI and dedicate a percentage of work towards alignment. Im starting to give talks to that end now. I thought this might be a good place to post alignment articles or work I have to get suggestions on improvement (as always tha work seems 6 months off when I find time).

Sure, here is a TLDR for Sage Arbor's post:

- Sage Arbor is a data scientist with a PhD in biochemistry who works with clinical trials at Duke.

- They are concerned about the potential risks of AI in the next 10 years and want to help their institution, Duke Clinical Research Institute (DCRI), create a center of excellence for AI and dedicate a percentage of work towards alignment.

- They are starting to give talks about this and thought this forum might be a good place to post alignment articles or work they have to get suggestions on improvement.

Here is a more concise TLDR:

- Data scientist concerned about AI risks wants to create AI alignment center at DCRI.

I hope this is helpful!

Martin (Huge) Vlach @ 2023-08-07T11:17 (+1)

Is this (made by) you?