AISN #54: OpenAI Updates Restructure Plan

By Center for AI Safety, Corin Katzke, Dan H @ 2025-05-13T16:48

This is a linkpost to https://newsletter.safe.ai/p/ai-safety-newsletter-54-openai-updates

Welcome to the AI Safety Newsletter by the Center for AI Safety. We discuss developments in AI and AI safety. No technical background required.

In this edition: OpenAI claims an updated restructure plan would preserve nonprofit control; A global coalition meets in Singapore to propose a research agenda for AI safety.

Listen to the AI Safety Newsletter for free on Spotify or Apple Podcasts.

Subscribe to receive future versions.

OpenAI Updates Restructure Plan

On May 5th, OpenAI announced a new restructure plan. The announcement walks back a December 2024 proposal that would have had OpenAI’s nonprofit—which oversees the company’s for-profit operations—sell its controlling shares to the for-profit side of the company. That plan drew sharp criticism from former employees and civil‑society groups and prompted a lawsuit from co‑founder Elon Musk, who argued OpenAI was abandoning its charitable mission.

OpenAI claims the new plan preserves nonprofit control, but is light on specifics. Like the original plan, OpenAI’s new plan would have OpenAI Global LLC become a public‑benefit corporation (PBC). However, instead of the nonprofit selling its control over the LLC, OpenAI claims the nonprofit would retain control of the PBC.

It’s unclear what form that control would take. The announcement claims that the nonprofit would be a large (but not necessarily majority) shareholder of the PBC, and, in a press call, an OpenAI spokesperson told reporters that the nonprofit would be able to appoint and remove PBC directors.

The new plan may still remove governance safeguards. Arguably, it would not preserve any of the governance safeguards that critics said would be lost under the original reorganization plan.

First, unlike OpenAI’s original “capped‑profit” structure, the new PBC will issue ordinary stock with no ceiling on investor returns. The capped-profit model was intended to ensure that OpenAI equitably distributed the wealth generated by developing AGI. CEO Sam Altman framed the move as a simplification that would allow OpenAI to raise capital. However, if OpenAI developed AGI, the nonprofit would only partially control the wealth OpenAI would accumulate.

Second, owning shares of and appointing directors to the PBC does not ensure that the nonprofit could adequately control the PBC’s behavior. The PBC would be legally required to balance its charitable purpose with investor returns, and the nonprofit would only have indirect influence over the PBC’s behavior. This differs from the current model, in which the nonprofit has direct oversight of development and deployment decisions. (For more discussion of corporate governance, see our textbook.)

The new plan isn’t a done deal. Up to $30 billion in funding led by SoftBank is contingent on the new plan going through. Microsoft, OpenAI’s largest backer, holds veto power over structural changes and is negotiating what percentage of the PBC it will own. Delaware Attorney General Kathy Jennings said she will review the new plan; her California counterpart signaled a similar review.

AI Safety Collaboration in Singapore

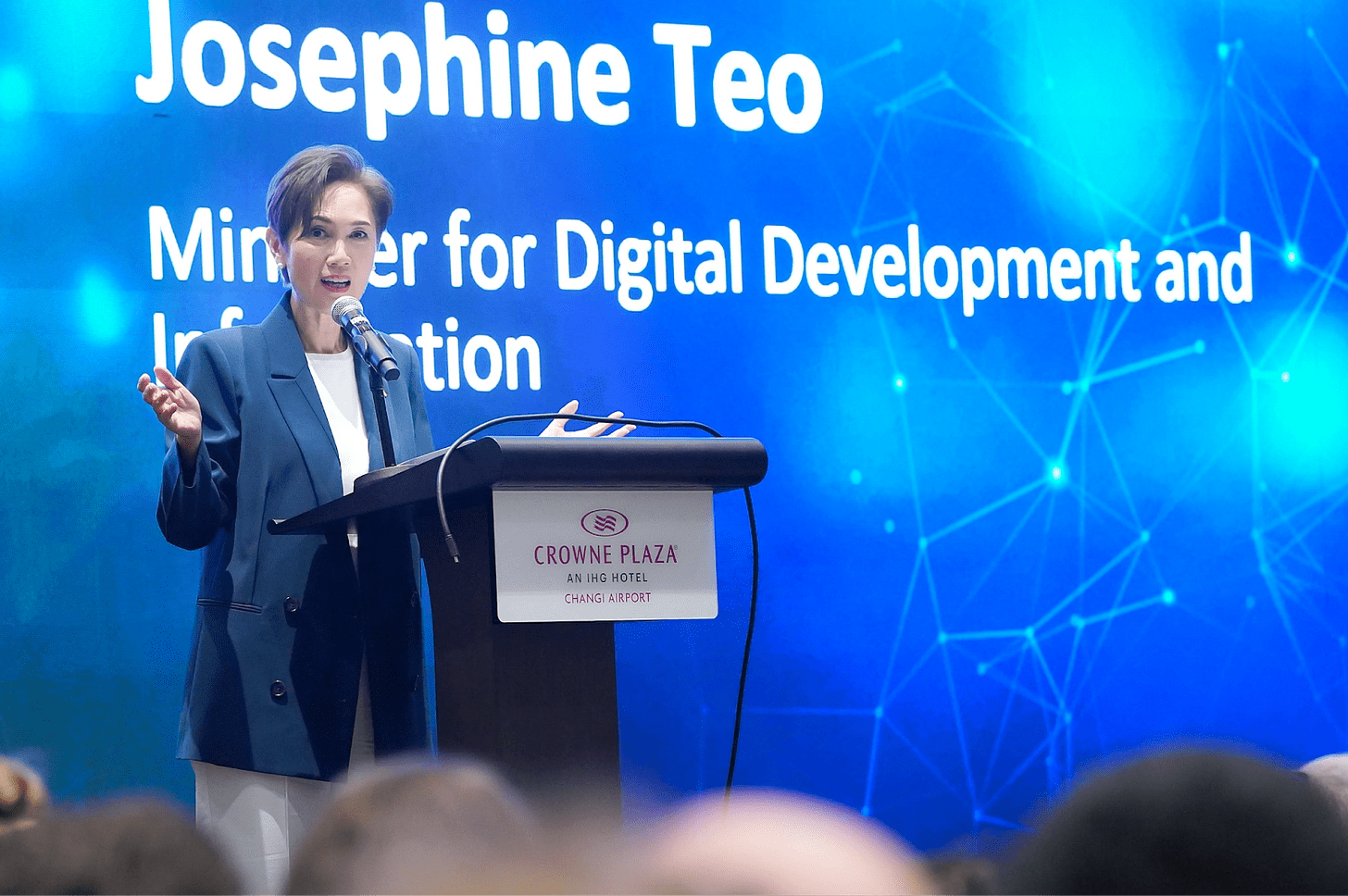

Last week, the 2025 Singapore Conference on AI brought together more than 100 participants from 11 countries to identify AI safety research priorities, resulting in a 40-page consensus document.

The conference included every AI safety institute (AISI), including those of the US and China. It is notable for being sponsored by the government of Singapore and for drawing participants from every government AI safety institute, including the US AISI and its Chinese counterpart.

Singapore has close ties with both the US and China, and its government has previously shown support for AI safety—meaning it has the potential to play a key role in negotiating international agreements on AI between the US and China.

The document recommends defense in depth for AI safety. The consensus document takes a defense-in-depth approach (proposed in Unsolved Problems in ML Safety) to technical AI safety research, organized into three pillars: risk assessment, development, and control.

- Risk assessment. The first pillar proposes research tracks to make risk measurement rigorous, repeatable, and hard to game. These tools would let regulators set clear red lines for scaling.

- Development. The second pillar focuses on baking safety into the development process by turning broad goals into concrete design requirements, adding safeguards during training, and stress‑testing models before release.

- Monitoring and Control. The third pillar aims to control deployed systems through continuous monitoring, dependable shutdown options, and tracking how systems are used. These measures are meant to spot trouble early and give authorities clear levers to intervene when needed.

Each area contains concrete research programmes designed to give policymakers shared “areas of mutual interest” where cooperation is rational even among competitors.

While the document doesn’t break new technical ground—or discuss non-technical issues like geopolitical conflict—these three pillars show how even rival companies and states can benefit from cooperation on technical AI safety research.

In Other News

Government

- The NSF issued an RFI seeking public input for the 2025 National AI R&D Strategic Plan (comments due May 29).

- President Xi urged China to achieve AI self‑reliance in chips and software to narrow the gap with the United States, while ensuring safety.

- China is set to merge more than 200 firms into about 10 giants to strengthen semiconductor self‑sufficiency.

- At a Senate hearing, Sam Altman and other tech leaders urged strategic investment to keep U.S. AI ahead of China.

- The European Commission launched a public consultation to clarify how the EU AI Act will regulate general‑purpose models, with draft guidance due before August 2025.

- Pope Leo XIV identified AI as one of the most important challenges facing humanity.

- President Trump fired the director of the U.S. Copyright Office after a report raised concerns about the use of copyrighted material in AI training.

Industry

- FutureHouse unveiled a platform of four scientific agents for literature search and experiment design.

- Visa announced a pilot program to let autonomous AI agents make purchases directly on its network.

- Alibaba released Qwen3, claiming state‑of‑the‑art scores for an open-weight model.

- Anthropic set up an Economic Advisory Council of leading economists to study AI’s impact on labor and growth.

- Gemini 2.5 Pro beat Pokémon Blue (with some help).

Civil Society

- Common Sense Media warns that social‑AI companion apps pose “unacceptable risks” for anyone under 18 and calls for age bans and more research.

- A new paper argues that AI agents should be designed to be law-following.

- 404 Media reported that researchers secretly conducted a large, unauthorized AI persuasion experiment on Reddit users.

AI Frontiers

- Helen Toner argues that ‘dynamism’ vs. ‘stasis’ is a clearer lens for criticizing controversial AI safety prescriptions.

- Philip Tschirhart and Nick Stockton write that securing AI weights from foreign adversaries would require a level of security never seen before.

See also: CAIS website, X account for CAIS, our paper on superintelligence strategy, our AI safety course, and AI Frontiers, a new platform for expert commentary and analysis.
