What specific changes should we as a community make to the effective altruism community? [Stage 1]

By Nathan Young @ 2022-12-05T09:04 (+58)

I thought I'd run the listening exercise I'd like to see.

- Get popular suggestions

- Run a polis poll

- Make a Google Doc where we research consensus suggestions / near-consensus / consensus for specific groups

- Poll again

Stage 1

Give concrete suggestions for community changes. 1 - 2 sentences only.

Upvote if you think they are worth putting in the polis poll and agreevote if you think the comment is true.

Agreevote if you think they are well-framed.

Aim for them to be upvoted. Please add suggestions you'd like to see.

I'll take the top 20 - 30

I will delete/move to comments top-level answers that are longer than 2 sentences.

Stage 2

Polis poll here: https://pol.is/5kfknjc9mj

Jason @ 2022-12-05T13:10 (+93)

There should be more recognition that the core EA community is not a good fit for everyone, and more support and affirmation for ways to be involved in effectively doing good that don't require being heavily invested "in the community" per se.

Gideon Futerman @ 2022-12-05T12:52 (+85)

EAGs should have critics and non-EAs invited to speak

OllieBase @ 2022-12-08T13:58 (+12)

Broadly agree. One recent EAGx team did invite a vocal critic, but they declined. The EAG team also does lots of outreach to non-EAs whose work is relevant.

NickLaing @ 2022-12-06T11:42 (+1)

Wow I love this. Feels really in the spirit of EA as well!

Dancer @ 2022-12-05T14:29 (+1)

I really like this. Definitely my favourite suggestion so far.

Gideon Futerman @ 2022-12-05T12:54 (+84)

Will MacAskill should make an effort to reduce his visibility as the face of the movement and should elevate others instead

Jack Lewars @ 2022-12-06T14:04 (+65)

EA organizations should adhere closely to governance norms, as expressed e.g. here.

[Edited to move the long blurb into the comments]

Jack Lewars @ 2022-12-06T14:14 (+25)

This includes things like:

- appointing people to Boards who have specific expertise relevant to the Board's functions, following a Board skills audit / scope to identify needs and gaps (typically this involves accountants, lawyers, governance experts; it typically doesn't include five moral philosophers and no one else)

- conflicts of interest should be managed seriously. This would normally mean avoiding the appointment of people with significant conflicts of interest, rather than appointing them anyway and just acknowledging the conflict. For example, it would not be typical for a foundation to have a Board member who also sits on the Board of the 2-3 organizations receiving the largest grants from that foundation. It would also involve EA organizations appointing a diverse range of Board members, rather than a few people serving on multiple Boards

- Boards that govern organizations and Boards that advise on where to make grants should not necessarily be made up of the same people

- Boards should be adequately-sized to encourage a diversity of viewpoints and expertise; they should also seek a diversity of backgrounds to encourage this range of views (8 people with diverse backgrounds & expertise > 3 people with similar backgrounds & expertise)

- Boards should actively manage risk and take appropriate steps to understand, monitor and mitigate risks. This includes things like reviewing financial reports at every meeting; and maintaining a risk register and reviewing it annually

- Boards should actively assess and manage the CEO and other senior leadership, rather than simply deferring to their judgement

I could go on, but you get the point.

david_reinstein @ 2022-12-06T15:16 (+2)

I agree. I'd aim towards a focus on a small set of clearly communicated, well-defined norms and practice. Done in a way that is manageable and not overwhelming.

Obviously we want this to be real and verifiable, not an exercise of 'cover your rear/plausible deniability' box ticking and lots of flowery prose. That is my fear, based on my time in academia and exposure to non-EA nonprofit orgs. But I think we can do this better in EA.

Nathan Young @ 2022-12-05T09:16 (+58)

There should be robust whistleblower protections - an anonymous whistleblowing line, after which if nothing happens people are encouraged to go public with concerns.

Nathan Young @ 2022-12-05T12:07 (+2)

I think the second line is important here. Robust whistleblowing can start with internal anonymous reporting, but if someone doesn't feel comfortable doing that (or nothing happens) it needs to move to external processes.

Jonny Spicer @ 2022-12-05T12:23 (+55)

"Recruitment" initiatives targeted at those under 18 should not receive further funding and should be discontinued.

nevakanezzar @ 2022-12-06T07:15 (+9)

Why?

david_reinstein @ 2022-12-06T15:11 (+7)

I'd also like to know the thinking and justification here. It's hard to know exactly what is suggested and why. E.g., does this pertain to the 'Charity Elections' program associated with GWWC? What about education-focused things?

Nathan Young @ 2022-12-05T12:34 (+1)

I upvoted but didn't agree or disagree. I'm not saying you're right but I think this is under-discussed.

Nathan Young @ 2022-12-05T09:43 (+34)

Giving What We Can should be framed as the way for people to be engaged in the community without necessarily needing to change their jobs/make it their identity.

Nathan Young @ 2022-12-05T12:46 (+10)

Would be cool to see a Giving What We Can conference with business and religious groups.

NickLaing @ 2022-12-06T11:45 (+4)

That's a great call. As a Christian the giving what we can pledge was one of the initial attractions to the movement for me. Consistent generosity with accountability is a great (if perhaps unorthodox) possible connection point with religious groups.

Jack Lewars @ 2022-12-06T13:40 (+6)

I agree that this is a high potential market. OFTW has tried running outreach with EA for Christians and found it pretty hard going; but I still think it's good in expectation, we just haven't cracked it yet

NickLaing @ 2022-12-06T14:09 (+4)

Nice one and sorry to hear it has been hard! I just checked out your website and it's fantastic, I love your work - had never heard of OFTW before. Make sure if possible you get Christians evangelising to Christians as well. We're used to evangelism ;).

Jack Lewars @ 2022-12-07T10:08 (+4)

Thanks Nick! Yes the idea was to set up the opportunities and then for Christian staff or volunteers to give the pitch. It's been much harder than we expected to convince people to host us though. Whisper it, but the disorganisation of a lot of pastors has been genuinely bewildering (only reply to 1 in 4 emails type thing).

DM me if you'd be up for helping with this - I've not given up yet!

NickLaing @ 2022-12-07T12:44 (+2)

Thanks Jack! There is often a tight connection between churches and traditional charity models which can be hard to crack. Some of the big NGOs they are often closely connected to have a Christian heritage, like World Vision, Tear Fund and Feed the Hungry. Unfortunately, in my opinion these are some of the lowest-value, most inefficient NGOs around.

Often churches also have their own giving systems through their church which might make them hesitant to invite others in, especially when the others aren't religious. Conferences and meetings would be the forum for it, not services I would think. Still I think it could be doable. No point in whispering about organisation of pastors. Often they are actually very stretched and get a LOT of e-mails though.

I'm afraid I'm not in a great position to help you. I've lived in Uganda for 10 years managing healthcare and running a social enterprise, so am completely useless for helping with Western churches.

Nice one.

Gideon Futerman @ 2022-12-05T13:06 (+33)

The 80,000 Hours podcast should platform more critics of EA/people adjacent to EA with very different approaches or conclusions with regard to what we do. Some suggestions: Luke Kemp, Zoe Cremer, Timnit Gebru, Emily Bender, Tim Lenton. Thus we can try and get their perspective and understand why we differ

Nathan Young @ 2022-12-05T13:13 (+27)

I agree with the first sentence. I disagree with the suggested people. But then maybe I always would.

Gideon Futerman @ 2022-12-05T14:30 (+4)

Maybe I have pretty high tolerance for people I want to hear from, but generally I want to hear from people who are pretty different. I have a more 'tame' list of suggestions who are less critical of EA but take different approaches to us, but thought a little bit of spiciness would be fun and useful (but maybe I always would, I'm a bit heretical by disposition 😂)

Rina @ 2022-12-05T18:12 (+16)

FWIW I think there could be 'harsher' critics invited than folks like Luke Kemp and Zoe Cremer, who are in my view still fairly engaged with EA (adding this just to give a sense of variance in opinions)

John_Maxwell @ 2022-12-08T07:34 (+2)

One consideration is for some of those names, their 'conversation' with EA is already sorta happening on Twitter. The right frame for this might be whether Twitter or a podcast is a better medium for that conversation.

You could argue podcasts don't funge against tweets. I think they might -- I think people are often frustrated and want to say something, and a spoken conversation can be more effective at making them feel heard. See The muted signal hypothesis of online outrage. So I'd be more concerned about e.g. giving legitimacy to inaccurate criticisms, rewarding a low signal/noise ratio, or having extemporaneous speech taken out of context. These are all less of a concern if we substitute the 80K podcast for one that's lower-profile -- some of the people mentioned could be topical for Garrison's podcast?

Edit: I suppose listening to this podcast might be good for value of information?

Gideon Futerman @ 2022-12-05T12:49 (+33)

The identity of those who attend the coordination forum and are on leaders slack channels should be made public

Devon Fritz @ 2022-12-06T15:13 (+4)

I'm curious to hear the perceived downsides about this. All I can think of is logistical overhead, which doesn't seem like that much.

If something is called the "Coordination Forum" and previously called the "Leaders Forum" and there is a "leaders" slack where further coordination that affects the community takes place, it seems fair that people should at least know who is attending them. The community prides itself on transparency and knowing the leaders of your movement seems like one of the obvious first steps of transparency.

Nathan Young @ 2022-12-06T15:37 (+2)

I think that if you have an intense focus on leaders you end up with leaders who can bear that intense focus. I guess I sense that there is something good in this area, but that this specific suggestion would be -EV

Guy Raveh @ 2022-12-10T21:58 (+2)

I strongly doubt we can get anything positive long-term from a leader working in the shadows without public scrutiny. This is a recipe for mismanagement and corruption.

Nathan Young @ 2022-12-05T13:12 (+3)

I think I don't want this, but I think there is something interesting in this space.

sphor @ 2022-12-05T15:51 (+2)

I agree with the first but not the second

Nathan Young @ 2022-12-05T09:23 (+31)

The wiki and intro groups should shift slightly towards displaying uncomfortable truths about EA. Less "we are excellent", more "we are complex and perhaps not for you"

Nathan Young @ 2022-12-05T10:24 (+28)

While parties are fun, we should do what we can to ensure you don't need to attend them to work at EA organisations.

Dancer @ 2022-12-05T10:35 (+10)

Do you need to currently?

Quadratic Reciprocity @ 2022-12-05T16:06 (+2)

It felt like attending parties in the past was somewhat good for me (though not as much as I expected before I started going to more parties) for keeping up to date on what is going on and what various people (including people who are otherwise too busy/intimidating for me to schedule one-on-ones with) thought about topics of my interest. If I went to zero EA parties, I guess I would be at a disadvantage compared to people who are similarly competent but go harder on networking.

Peter Rautenbach @ 2022-12-05T15:42 (+3)

I like this much more than the suggestion to have no parties.

I'm not advocating for ragers, but parties (a fairly loosely defined term) are great for getting to know people in a personal and non-work related way. Plus, they’re fun.

I don’t think one should need to attend them to get a job.

However, it’s hard to move away from the “it’s who you know” concept. Parties give you a chance to see who people are and if you would like to work with them as a person rather than who they are as a professional. I would much rather work with someone who knows their stuff AND is a joy to be around.

The end result being that hiring practice should ensure that the same group of friends aren’t the only ones being hired, but cancelling parties and the like isn’t the answer.

(I should note that official organizations probably shouldn’t be throwing crazy parties with tons of binge drinking (there is still individual responsibility here of course) for so many other reasons than who gets hired. More moderate events, such as having drinks at an EAG, should be continued. And private individuals throwing parties that involve EA people are their own topic.)

Nathan Young @ 2022-12-05T10:39 (+27)

We should stop talking about "EAs" as if we are a big homogeneous group. We aren't. We work on a set of causes, but have many varied beliefs and that's okay.

Jeroen_W @ 2022-12-05T11:20 (+26)

All of CEA/EV's spending above $500k should be publicly explained and defended

David Mears @ 2022-12-06T10:08 (+23)

A much increased commitment to reasoning transparency around decisions people have questions about. For example, the purchase of Wytham Abbey, Twitter DMs with Musk and MacAskill, and claims of 2018-era warnings to EA leaders about SBF's trustworthiness. Overall, I'm much more interested in a culture change towards transparency, rather than in answers about any of those few examples.

Arepo @ 2022-12-06T16:24 (+5)

This is my top vote. A lot of stuff orgs like CEA do goes unexplained, and they can get away with it because they have a small number of major donors.

There's a market-logic that I don't particularly disagree with that if they can get donors happy to fund what they're doing then they should feel entitled to do it - but I don't think that can square with the idea of them being in any way representative of the broader community.

If they want to engage in EA black ops, we should have at least one separate white ops org (preferably more), whose funding is crowdsourced as much as possible, and who consider it a core part of their jobs to engage with and serve - rather than manage - the community.

Nathan Young @ 2022-12-05T09:25 (+23)

The community should elect member(s) to CEA's board.

Nathan Young @ 2023-01-05T17:26 (+2)

I don't endorse this btw, I wrote it to allow the option to discuss it. Unsure how I should signal that.

Gideon Futerman @ 2022-12-05T12:49 (+21)

EA should have something akin to an AGM where community members can discuss large scale strategy

Daedalus @ 2022-12-05T18:05 (+20)

EA orgs should take financial stewardship and controls more seriously. More organizations should have financial professionals or there should be shared financial resources. Boards should have more financial experts even if they serve ex officio on finance/audit committees.

Stephen Clare @ 2022-12-06T10:36 (+19)

Most of these suggestions are too vague to be useful. Before people post, they need to read "Taboo EA should". And then when making suggestions, you need to be very specific about which actors they apply to and what specifically you want them to do.

e.g. Less "EA should democratize" and more "EA Funds should allow guest grantmakers with different perspectives to make 20% of their grants" (I don't endorse that suggestion, it's just an example)

Though I do want to say I appreciate this effort, Nathan!

Stefan_Schubert @ 2022-12-06T11:06 (+3)

I think more general claims or questions can be useful as well. Someone might agree with the broader claim that "EA should democratise" but not with the more specific claim that "EA Funds should allow guest grantmakers with different perspectives to make 20% of their grants". It seems to me that more general and more specific claims can both be useful. Surveys and opinion polls often include general questions.

I'm also not sure I agree that "EA should" is that bad of a phrasing. It can help to be more specific in some ways, but it can also be useful to express more general preferences, especially as a preliminary step.

Stephen Clare @ 2022-12-06T11:44 (+15)

But for the purposes of this question, which is asking about "specific changes", I think the person who thinks "EA should democratise" needs to be clear about what is their preferred operationalization of the general claim.

Stefan_Schubert @ 2022-12-06T11:55 (+2)

Nathan, who created the thread, had some fairly general suggestions as well, though, so I think it's natural that people interpreted the question in this way (in spite of the title including the word "specific").

Gideon Futerman @ 2022-12-05T12:51 (+19)

EA Funds funding should be allocated more democratically

David Mathers @ 2022-12-05T18:05 (+6)

Why are people down-voting this as well as disagree-voting? It's a controversial but non-crazy opinion honestly expressed without manipulative rhetoric. (I don't have a strong opinion on it one way or the other myself.)

Larks @ 2023-01-05T17:49 (+12)

I think calling for something to be 'more democratic', without further details, is a textbook example of an Applause Light.

Jason @ 2023-01-05T18:59 (+3)

This was a solicitation for items for a polis poll, so 140 characters max

Larks @ 2023-01-05T19:35 (+10)

The post literally asks for 'specific' and 'concrete' suggestions. You can be succinct and concrete, like "annual vote by GWWC members to determine board" or "all grants >$100k decided by public vote on EA forum".

Gideon Futerman @ 2023-01-05T17:58 (+1)

Or a suggestion to open up discussion. There are many more structures that would be more democratic (regranting, assembly groups of EAs, large scale voting, hell even random allocation), but the principle here is essentially saying at the moment EA Funds is far too centralised and thus we need a discussion as to how we should do things more democratically. I don’t profess to have all the answers! Moreover, I am not sure the idea that it is an Applause Light makes sense in the context of it being massively disagree voted. That is pretty much the opposite of what you would expect!

Nathan Young @ 2023-01-05T17:23 (+2)

I downvoted because I think it's less important than comparable ideas in this comment section

Devon Fritz @ 2022-12-06T15:16 (+5)

I think what we really need are more funding pillars in addition to EA Funds and Open Phil. And continue to let EA Funds deploy as they see fit, but have other streams that do the same and maybe take a different position on risk appetite, methodology, etc.

John_Maxwell @ 2022-12-08T07:50 (+4)

Variant: "EA funds should do small-scale experiments with mechanisms like quadratic voting and prediction markets, that have some story for capturing crowd wisdom while avoiding both low-info voting and single points of failure. Then do blinded evaluation of grants to see which procedure looks best after X years."

Guy Raveh @ 2022-12-10T22:01 (+1)

I support experimenting with voting mechanisms, and strongly oppose putting prediction markets in there.

RyanCarey @ 2022-12-05T16:05 (+18)

Upvote for agreement with the general tone of the suggestion or if you think there is a good suggestion nearby.

Agreevote if you think they are well-framed.

Upvote for agreement, and agreevote for good framing? That's roughly the opposite of normal, which may confuse interpretation of the results.

Larks @ 2022-12-05T16:49 (+4)

Oh yeah all my votes are backwards then.

Stefan_Schubert @ 2022-12-05T16:56 (+2)

No, I think yours and Ryan's interpretation is the correct one.

the new axis on the right lets you show how much you agree or disagree with the content of a comment

Linked from here.

ludwigbald @ 2022-12-05T20:56 (+17)

EA should become much less reliant on key institutions like CEA, 80k, Will MacAskill or (previously) SBF

Nathan Young @ 2022-12-05T09:15 (+17)

There should be an organisation that is responsible for auditing EA funders (who aren't audited by, say the US government) and publishing this information.

Nathan Young @ 2022-12-05T09:20 (+16)

There should be a poll to understand the opinions of people in EA around feeling uncomfortable at events. Then there can be a listening exercise similar to this one.

Dancer @ 2022-12-05T10:48 (+3)

What sort of thing do you have in mind? Another poll post on the Forum about this specifically? EA Global feedback forms or the EA survey asking questions specifically about feeling uncomfortable (if they don't already)? A Forum post sharing a questionnaire for group organisers to use and strongly encouraging them to use it? Making it a requirement of receiving group funding from Open Phil or CEA that you poll people about feeling uncomfortable at events?

I'm just expecting this will be upvoted because what could be wrong with getting more information? But I suspect a lot of people have a lot of this information already, there aren't easy ways to collect, analyse and share such a poll very widely, and there's always an opportunity cost in terms of people's time and attention. There may well, however, be a concrete version of this that seems worth doing to me (and indeed I've pushed for something like this before on a small scale).

Nathan Young @ 2022-12-05T11:05 (+1)

How about you write some answers and then people can upvote them?

Dancer @ 2022-12-05T12:05 (+10)

But that would imply I want them and I don't. In fact I think the act of listing an answer here is a stronger signal that this is something the community wants than a mere vote is.

Also I cba to find out what's already in place. Part of my point here is that I think there should be a higher bar for saying how things should change than "EA should do more of this thing that sounds nice even though I don't really know how much of it they already do and haven't thought about how that might work in practice and what should be deprioritised accordingly." So I think people like me who can't be bothered to put a bit more effort in shouldn't be making suggestions.

James Herbert @ 2022-12-05T14:07 (+2)

I suggest encouraging CEA to support/train CBGs in conducting these kinds of exercises.

Jason @ 2022-12-05T14:10 (+15)

Rank-and-file members of the EA community, and/or their representatives, should have more of a say in the allocation of funding than they currently do.

Nathan Young @ 2022-12-05T10:40 (+15)

There should be a community listening exercise around longtermism and impact now to avoid the otherwise inevitable community breakdown

Chris Leong @ 2022-12-06T03:33 (+4)

I found this description unclear

ludwigbald @ 2022-12-05T21:22 (+13)

The EA community should celebrate and amplify non-utilitarian serious do-gooding.

Nathan Young @ 2022-12-05T09:26 (+13)

The community should be able to remove members from CEA's board/leadership

Dušan D. Nešić (Dushan) @ 2022-12-07T10:03 (+12)

More outside expertise should be welcomed. All roles in EA organizations should be advertised on open platforms like LinkedIn, and while selecting based on "culture fit" is allowed, people without previous EA exposure should not be disqualified outright.

ludwigbald @ 2022-12-05T21:12 (+12)

There should be a social space for people who leave EA, and it should be easy to leave EA.

Nathan Young @ 2022-12-05T15:18 (+12)

The 80,000 Hours podcast should debate more critics of EA processes/behavior with very different approaches or conclusions with regard to what we do.

Some suggestions:

- Jess Whittlestone

- One of the more left-wing EAs (Habiba Islam, Garrison Lovely)

- Zoe Cremer

- Someone from more traditional charity space

ludwigbald @ 2022-12-05T21:28 (+11)

Cause-area communities should be stronger and more self-reliant.

Jonny Spicer @ 2022-12-05T12:29 (+11)

A project should be funded that aims to understand why 71% of EAs who responded to the 2020 survey self-identify as male, what problems could arise from the gender balance skewing this way, and what interventions could redress said balance.

Nathan Young @ 2022-12-05T12:30 (+6)

Though I suggest only redress by getting new non-males rather than making many males want to leave.

Jonny Spicer @ 2022-12-05T12:34 (+1)

I endorse this suggestion, and had it in mind in my original comment but should've made it explicit - thanks for pointing out the ambiguity

D0TheMath @ 2022-12-07T21:43 (+10)

Most suggestions I see for alternative community norms to the ones we currently have seem to throw out many of the upsides of the community norms they're trying to replace.

Guy Raveh @ 2022-12-06T15:45 (+10)

CEA should appoint a community ombudsman (whose job is explicitly to serve the community, rather than 'have positive impact')

Ben_West @ 2023-01-30T02:56 (+2)

FYI I think this was experimented with.

Guy Raveh @ 2023-02-01T05:27 (+2)

This looks only superficially similar. It reads to me like "we're afraid appointing a community panel will lead to problems associated with few people making decisions for many people, so we decided to keep going with even fewer people making decisions."

And the actual appointed advisory board sounds (at least from the post) toothless, unclear in its responsibilities, and like the board can circumvent it by just deciding not to ask it about any particular topic.

A community ombudsman would have a clear responsibility of representing the community and being available to listen to complaints (as opposed to "maximising impact", which is what the rest of CEA declares to be its goal), and should probably have voting power in some capacity.

David Mears @ 2022-12-06T10:09 (+10)

Attempts to reduce our dependency on a few single points of failure in community leaders (Will MacAskill, Toby Ord, Rob Wiblin, Holden Karnofsky...). I think it's a moderately bad sign if our community leaders don't circulate naturally every half-decade or so, since that means it's more about the personalities than the ideas, and is un-meritocratic, assuming that good leadership skills and ideas are not vanishingly rare.

Nathan Young @ 2022-12-05T09:57 (+10)

CEA should stop implicitly discouraging community members from telling journalists they trust information.

James Herbert @ 2022-12-05T14:52 (+12)

Small note, I'd say CEA currently explicitly discourages community members from telling journalists they trust information.

Nathan Young @ 2022-12-05T14:52 (+5)

Can you find a quote?

James Herbert @ 2022-12-08T10:44 (+11)

I don't think it's been publicly written down anywhere, I've only been discouraged via private comms. E.g., I've been approached by journalists, I've then mentioned it to CEA, and then CEA will have explicitly discouraged me from engaging. To their credit, when I've explained my reasoning they've said ok and have even provided media training. But there's definitely been explicit discouragement nonetheless.

Nathan Young @ 2022-12-05T09:21 (+9)

There should be scandal markets about top community figures and their likelihood of committing crimes/being involved in sexual scandals

Nathan Young @ 2022-12-05T13:56 (+2)

I know you lot generally don't like this idea. I'm curious if anyone can suggest a version that's less objectionable.

Nathan Young @ 2022-12-05T09:17 (+9)

We should seek for ourselves to no longer be a community, but instead a professional network around a set of orgs with similar missions.

Jason @ 2022-12-05T13:16 (+8)

There should be more focus on sub communities applying EA principles to various cause areas vs. such an extensive focus on a singular EA community. Different areas have different needs, recruitment strategies, PR interests, etc.

D0TheMath @ 2022-12-07T21:43 (+7)

When trying to replace community norms, we should try to preserve the upsides of having the previous community norms.

Dušan D. Nešić (Dushan) @ 2022-12-07T09:59 (+7)

Many EA organizations currently make decisions based on a western-centric (for lack of a better word) mindset, accidentally silencing voices coming from outside. More EAGs/job opportunities should be held outside of USA/UK/EU since third-world countries have a hard time getting a Visa for developed countries.

D0TheMath @ 2022-12-06T22:46 (+7)

The EA community is on the margin too excited to hear about criticism.

Elika @ 2022-12-06T05:24 (+7)

Get everyone to stop using EA as a homogeneous group - ex. by making sure in the Intro fellowship the fact that there is no one-EA is a key point, getting people to not use that language on the Forum, etc

ludwigbald @ 2022-12-05T21:15 (+7)

By the way, I think it's really cool of you, Nathan, to nourish this conversation.

I think it's a conversation we should never stop having, and we should create online and offline spaces where it can happen.

ludwigbald @ 2022-12-05T21:17 (+6)

Most suggestions here are disagreed with, but that's hardly enough justification not to push forward on them. Only hardcore EAs vote on the forum, disgruntled EAs would not come across them.

Larks @ 2022-12-05T22:16 (+9)

This seems unlikely to be the explanation to me. Voters on the forum frequently give very high karma to highly critical and disgruntled top level posts (indeed, I think it is actually easier to get karma writing such posts than more positive ones). I think the true explanation is probably that going from vague complaints to concrete suggestions makes them much easier to critique and see the problems.

Ramiro @ 2022-12-14T16:00 (+6)

Effective Cassandra

A forecasting contest (in addition to the EA criticism contest) to answer "what is the worst hazard that will happen to the EA community in the next couple of years?" (or whatever period people think is more adequate). The best responses, selected by a jury on the basis of their usefulness and justification, receive the first prize; the second prize will be given two years later to the forecaster who predicts the actual answer.

D0TheMath @ 2022-12-07T21:39 (+6)

Most of the focus going into reevaluating community norms feels misplaced.

D0TheMath @ 2022-12-06T22:45 (+6)

All the focus going into reevaluating community norms feels misplaced.

Dušan D. Nešić (Dushan) @ 2022-12-07T09:48 (+2)

"All" feels like too strong of a claim, but I could agree with a weaker version of this.

Arepo @ 2022-12-06T16:29 (+6)

CEA - and perhaps other core orgs - should split into separate entities with smaller focuses, and encourage the founding of organisations that compete on those focuses, so there's no 'too big to fail' logic in the movement.

Guy Raveh @ 2022-12-06T15:41 (+6)

We should encourage EAs to find ways to start EA projects inside existing, non-EA organisations.

MHR @ 2022-12-05T14:01 (+6)

If the CEA has banned an individual from CEA events for misconduct, also ban them from the EA forum and Twitter community.

Pat Myron @ 2022-12-06T00:12 (+3)

What does it mean to be blocked from a Twitter community? Are there shared blocklists being used?

MHR @ 2022-12-06T00:34 (+3)

I mean specifically Twitter's community feature https://twitter.com/i/communities/1492420299450724353. Moderators of the EA community can remove members.

Linch @ 2022-12-06T01:16 (+2)

Note that the Twitter community is public for reading, just not for posting.

Nathan Young @ 2022-12-05T09:12 (+6)

There should be a community-written EA handbook on the wiki alongside the CEA-written one.

Mathieu Putz @ 2022-12-05T11:41 (+5)

Why?

Nathan Young @ 2022-12-05T12:36 (+2)

So you can see how the community understands itself as well as what CEA wants the community to be, and then there can be a discussion between those two.

Luca Rossi @ 2022-12-07T13:11 (+5)

We should change the longtermism pitch and make it more realistic for people who would otherwise be deterred. Avoid (at first) mentioning trillions of potential future people or even existential risks, and put more emphasis on how these risks (e.g. pandemics, nuclear wars, etc.) can affect actual people in our lifetime.

Lin BL @ 2022-12-07T20:59 (+1)

Or perhaps a 'shorter' version of longtermism (which would also be easier to model):

Your lifetime, and that of your children, grandchildren and x generations into the future, which is a given, rather than requiring assumptions to reach the higher numbers (e.g. humanity spreading to the stars) that are therefore more open to dispute.

Guy Raveh @ 2022-12-06T15:43 (+5)

CEA should detail how and to what extent they manage EA groups that receive funding from them.

Elika @ 2022-12-06T04:21 (+5)

There should be alternatives to EAGs/EAGxs - ones that are cause area specific and/or for people who are interested in EA ideas but don't necessarily need to call themselves EAs.

RedStateBlueState @ 2022-12-06T01:15 (+5)

Effective Altruism should disassociate itself from its major funders - mainly Dustin Moskovitz at this point. Take the money, but don't talk about them.

ludwigbald @ 2022-12-05T21:04 (+5)

The EA community should enter coalitions with other social movements to further our shared goals.

Bob Jacobs @ 2022-12-05T18:13 (+5)

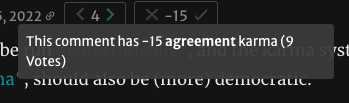

EA organizations should be run democratically, and the karma system, or at least the agreement karma, should also be (more) democratic.

EDIT: I would like to point out that having a lot of disagreement karma doesn't necessarily mean most people disagree. When this suggestion had three votes it had positive agreement karma, but when I returned at five votes it had negative:

I stuck around for a bit to see how the karma would evolve:

What we see is that the downvotes contain a lot more voting weight than the upvotes. This seems logical: people with more voting power have an incentive to use that power to make sure they don't lose it. We really cannot conclude what the EA community believes/wants based on the results of the karma system, and if we want to know what the community really wants I suggest we use polls/surveys instead.

Nathan Young @ 2022-12-05T10:18 (+5)

All cause areas should have a wiki article and people should be able to up and downvote them to provide a community priority ranking.

AnonymousEAForumAccount @ 2022-12-06T22:58 (+4)

EA should focus more on community-wide governance

ludwigbald @ 2022-12-05T20:58 (+4)

EA community building should be coordinated and funded primarily at the national level

Nathan Young @ 2022-12-05T09:24 (+4)

At least one key EA figure should step back as a result of the FTX crisis (not including anyone who worked at FTX/Alameda).

David Mathers @ 2022-12-05T17:44 (+11)

Seems bad for intellectual honesty to me to make this decision on the general reasoning of 'something really bad happened and we need a scalp'. Rather, we ought to have some general, even if vague, idea of what's a resigning matter, and then actually look and see what people did, without pre-committing to a number who should resign.

Stephen Clare @ 2022-12-05T10:13 (+7)

"Step back" = stop publishing their work? take a different role in their org/community? leave EA entirely?

Nathan Young @ 2022-12-05T10:17 (+2)

I guess stop doing the leadership part of their roles. Sort of doesn't matter though, since this suggestion is already well underwater.

Can you suggest a form people don't hate so much?

Dancer @ 2022-12-05T10:33 (+6)

What do you mean "doing the leadership part of their roles"?

Nathan Young @ 2022-12-05T09:06 (+4)

Write all the information we know about different topics on the wiki so that new members can get up to speed quickly about potentially damaging topics.

D0TheMath @ 2022-12-07T21:42 (+3)

Almost all of our current community norms have many upsides we should try to maintain.

D0TheMath @ 2022-12-07T21:40 (+3)

A significant fraction of the focus going into reevaluating community norms feels misplaced.

Babel @ 2022-12-07T13:54 (+3)

I think building epistemic health infrastructure is currently the most effective way to improve EA epistemic health, and is the biggest gap in EA epistemics.

I elaborated on this in my shortform. If the suggestion above seems too vague, there're also examples in the shortform. (I plan to coordinate a discussion/brainstorming on this topic among people with relevant interests; please do PM me if you're interested)

(I was late to the party, but since Nathan encourages late comments, I'm posting my suggestion anyways.)

Guy Raveh @ 2022-12-06T15:43 (+3)

CEA should publish their financial sources.

David Mears @ 2022-12-06T10:10 (+3)

For future earners to give: de-correlate the fate of EA from the fate of crypto.

harfe @ 2022-12-07T14:38 (+1)

With the FTX collapse the correlation has shrunk dramatically, so this seems much less of a concern now.

David Mears @ 2022-12-06T10:10 (+3)

Eventually, a renegotiated style of relationship between EA and billionaire funders: donating large amounts of money is worth some amount of social reward, but not a 'hero worship' amount. We should in future be more ready to criticise the way in which billionaire donors make their fortunes (e.g. the critique that making a fortune in crypto facilitates people getting scammed), rather than overlooking ways in which people might do harm with EtG (I know I had cognitive dissonance about this re FTX).

Dancer @ 2022-12-05T14:36 (+3)

The 80,000 Hours podcast should debate more critics of EA/people adjacent to EA with very different approaches or conclusions with regard to what we do. Some suggestions: Luke Kemp, Zoe Cremer, Timnit Gebru, Emily Bender, Tim Lenton.

Gideon Futerman @ 2022-12-05T14:38 (+4)

That list looks awfully familiar 😂😂

Devon Fritz @ 2022-12-06T15:18 (+1)

I am for more debate, but don't like this suggestion due to specific names, like Timnit, who just seems so hostile I can't imagine a fruitful conversation.

Dušan D. Nešić (Dushan) @ 2022-12-07T10:13 (+2)

A stronger, more deliberate push (backed by funding) should be made to enter professional places (by opening professional groups) and attract mid-to-late-stage professionals from diverse industries, as well as people in countries where EA has no presence. A lesser imperative should be placed on university groups.

Dušan D. Nešić (Dushan) @ 2022-12-07T10:02 (+2)

There should be a separate body to represent communities which is a combination of elected and randomly assigned members. Its purpose is to have a check on the un-elected experts in other organizations while being kept in check by them in turn.

supesanon @ 2022-12-05T19:47 (+2)

There should be a clear separation between funding of cause areas and people working on specific cause areas, to avoid conflicts of interest that inevitably affect people's judgements. For contentious cause areas it may be better to commission a broad group of expert stakeholders with a diversity of knowledge, background and opinions to assess the validity of a cause area based on EA criteria. There are currently some cause areas that seem very ideologically driven by some highly regarded folks in EA. These folks seem both to generate the main source material that supports the cause area and to be the ones steering funding. This asymmetry in power and funding undoubtedly influences the cause areas that end up becoming and staying prominent in EA.

Nathan Young @ 2022-12-05T10:59 (+2)

As always I'm surprised that a post that's getting lots of interaction in the answers isn't getting upvotes.

Agreevote if that's because you don't think more people should see it. Disagreevote if you have some other reason for not upvoting the main post.

Jasper Meyer @ 2022-12-05T23:35 (+1)

EA should not be an identity. There is too much disagreement within the movement (this is good!) to consider yourself "an EA".

Nathan Young @ 2022-12-05T09:11 (+1)

Funding from EA funders should be democratically allocated.

Stephen Clare @ 2022-12-05T10:11 (+14)

I think something in this direction is useful to test, but "democratically allocated" seems too vague to me. If I can think of more specific versions of this, would you like me to add them as new responses?

Nathan Young @ 2022-12-05T10:17 (+2)

Absolutely!