Silly idea to enhance list representation accuracy

By Phib @ 2023-04-24T00:30 (+7)

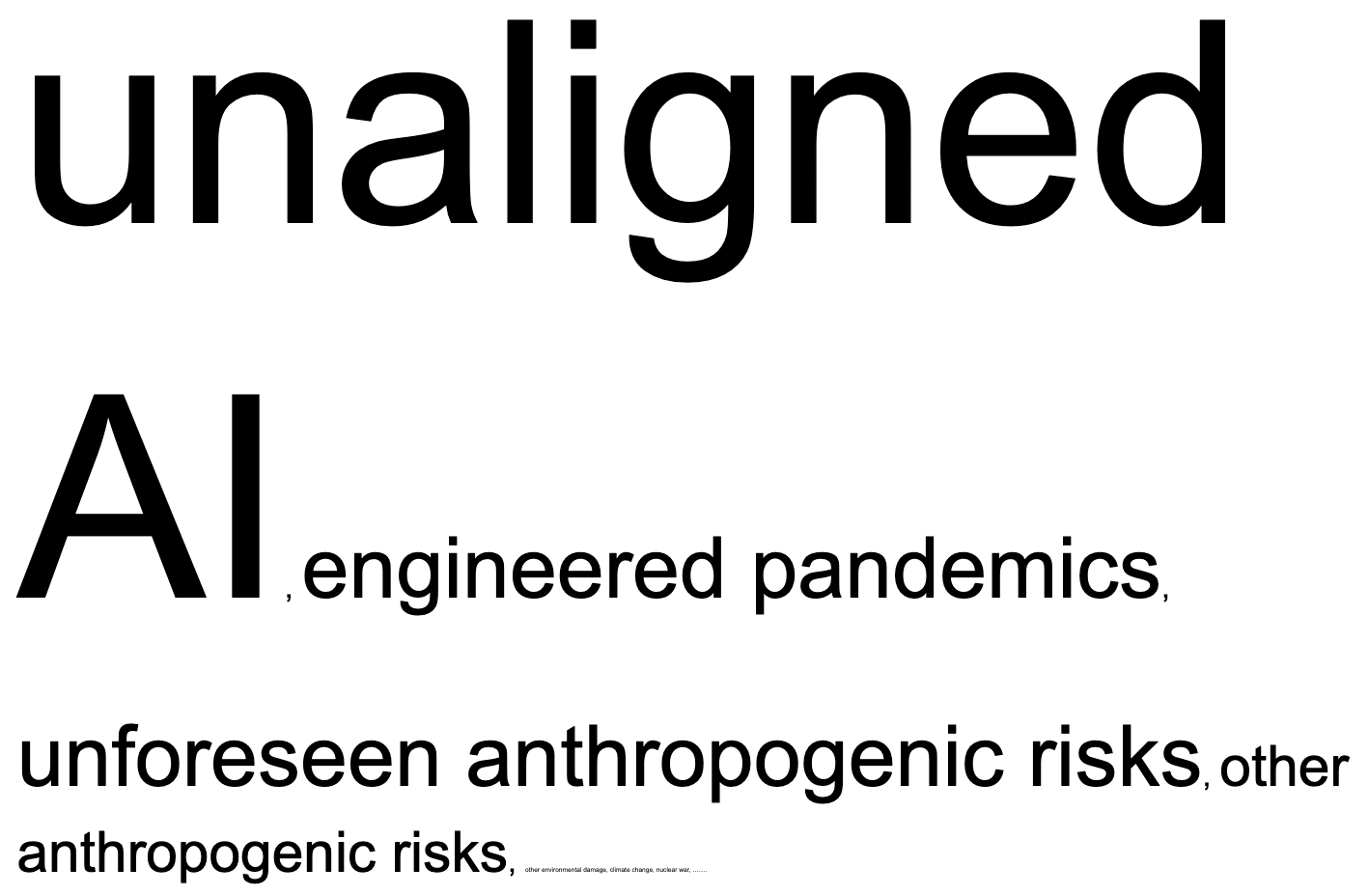

In an (intentionally somewhat silly) effort to increase reasoning transparency, when listing items that have different weights it may be valuable to illustrate those weights accordingly: by changing font size, by listing items multiple times, or by presenting percentage weights in parentheses next to each item (though that last one doesn't escape scope insensitivity).

Or, if you are listing items aloud to someone, be sure (mostly joking) to modulate the volume of your voice according to the weight of each item, say each item a number of times, or present them in tiers (though again, that is a heuristic, not completely representative).
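For the written variants above (font size, repetition, percentages), here is a minimal sketch of how the mapping could work. Everything in it is an assumption for illustration: the function name `render_weighted_list`, the linear scaling of point size with weight, the `min_pt`/`max_pt`/`max_repeats` defaults, and the placeholder weights, which are made up and are not anyone's actual estimates.

```python
def render_weighted_list(weights, min_pt=6.0, max_pt=48.0, max_repeats=10):
    """Turn raw weights into three display hints per item.

    weights: dict mapping item name -> non-negative weight (hypothetical input).
    Returns a list of dicts sorted from heaviest to lightest item.
    """
    total = sum(weights.values())
    if total <= 0:
        raise ValueError("weights must sum to a positive number")
    top = max(weights.values())

    rows = []
    for name, w in sorted(weights.items(), key=lambda kv: kv[1], reverse=True):
        rel = w / top  # weight relative to the heaviest item, in (0, 1]
        rows.append({
            "item": name,
            # Share of the total, as a percentage to print in parentheses.
            "percent": 100 * w / total,
            # Font size scales linearly with weight, but is clamped at min_pt
            # so tiny items stay legible (which distorts the scale again).
            "font_pt": max(min_pt, max_pt * rel),
            # Number of times to repeat the item; every item appears at least once.
            "repeats": max(1, round(max_repeats * rel)),
        })
    return rows


# Made-up placeholder weights, purely to show the call.
example_rows = render_weighted_list({"risk A": 6, "risk B": 3, "risk C": 1})
```

Note that the `min_pt` floor and the "at least once" rule are exactly where the representation stops being to scale: any item much smaller than the largest one gets overstated.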

You could also just not be as silly and present some sort of infographic that does the weights justice, like [this one inspired by The Precipice](https://forum.effectivealtruism.org/posts/GGoFCzMM4kigEZike/new-infographic-based-on-the-precipice-any-feedback).

Anyway, here's an example (noting that I don't necessarily subscribe to these probabilities):

First version:

"Some existential risks include AI, nuclear warfare, climate change, supervolcanoes, cosmic events..." (see my bias even in how I remember and present these!)

Incredible, upgraded versions:

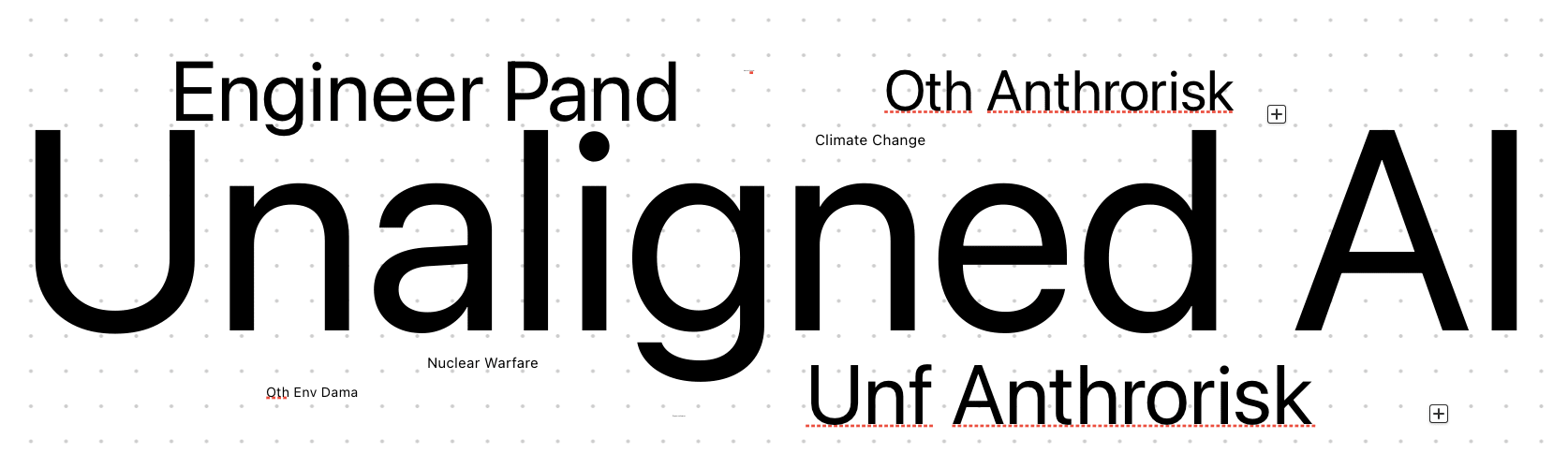

I kinda cheated: I couldn't type in a font size below 1, so a bunch were cut off, and I tried again.

Version 2, using Mac's Freeform. I tried to make all the entries similarly sized, hence the unreasoning untransparency.

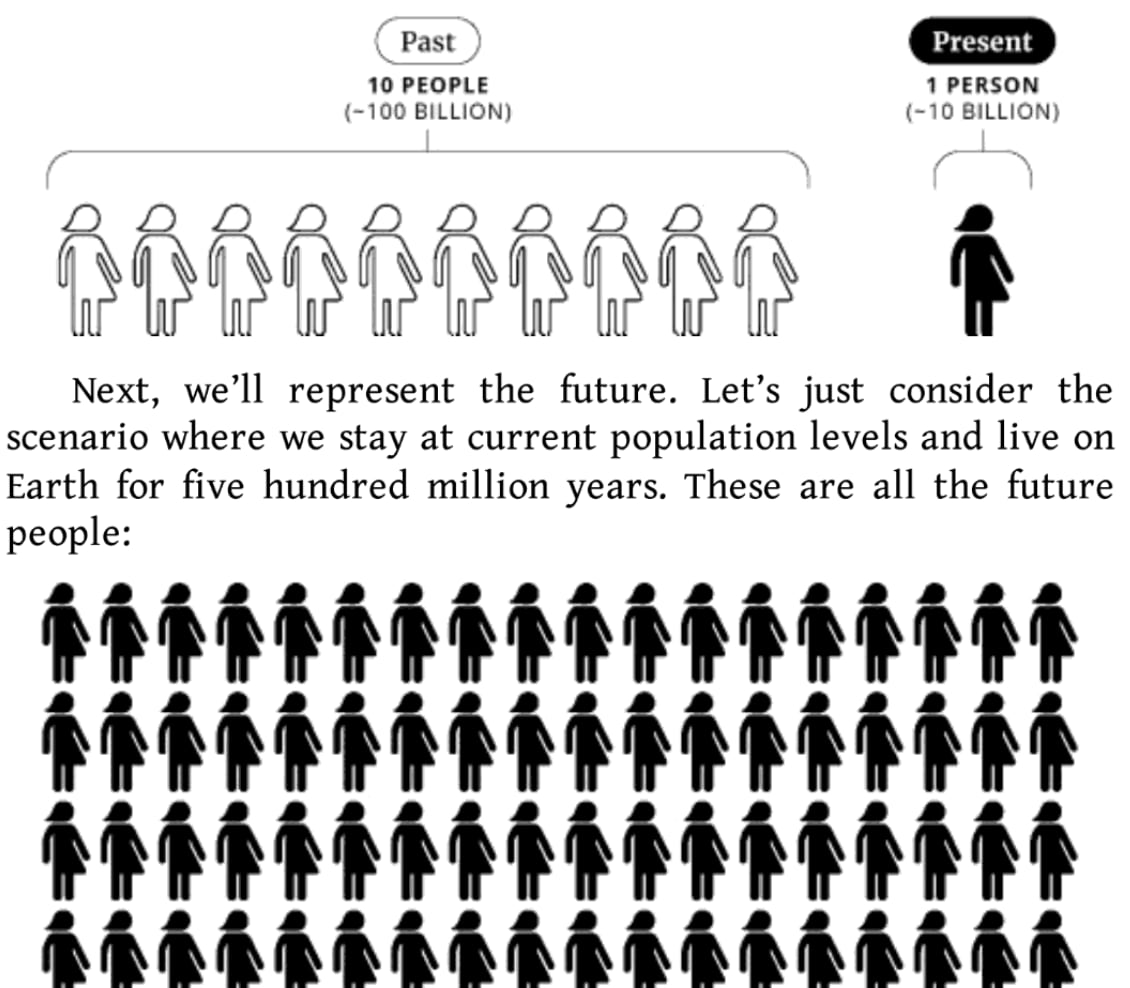

Version 3, with Humanity Survives. This is not necessarily to scale (hahaha)

(though this doesn't take into account neglectedness/tractability)

This has rather similar infographic vibes to any of the to-scale cosmic portrayals, or to this illustration from What We Owe the Future. I cut off the individual's legs at the bottom as an effective 'ellipsis', since I didn't want to actually portray this (how hypocritical of me).

But anyway, it's great because this model of elaborating lists, applied to this example of x-risks, actually encourages you, in certain vocal situations, to yell at people about AI risk (not necessarily encouraging this) and whisper to them about climate change. Meanwhile, stellar explosions, accurately represented, should hardly receive a flow of air (then why do I keep bringing them up!).

It seems another result of this model is that we should have 100,000x as many representations of AI risk in media (e.g. movies, frequency of discussion, papers, etc.) as we do of asteroid impacts. My impression is that there are quite a lot more asteroid risk movies than AI risk ones, though two additional thoughts: AI risk is much more discussed now, and there's Don't Look Up's 'success'. In all seriousness, the 100,000x figure (accurate to Ord's estimates) is pretty shocking to me when compared with how conscious (per popular culture representations) we are of each. Of course, I think this is a pretty cold take, but the number is rather new to me and the scale is... well, I'm insensitive to the scope anyway, I guess.
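For what it's worth, the 100,000x arithmetic can be checked in a few lines. This is only a sketch: it assumes the figures usually quoted from The Precipice of roughly 1 in 10 for unaligned AI and roughly 1 in 1,000,000 for an asteroid or comet impact over the next century, and the names `p_ai`, `p_asteroid`, and `proportional_count` are made up here for illustration.

```python
from fractions import Fraction

# Assumed estimates (roughly as quoted from The Precipice); not my own numbers.
p_ai = Fraction(1, 10)               # unaligned AI
p_asteroid = Fraction(1, 1_000_000)  # asteroid or comet impact

# How many times larger the AI estimate is than the asteroid estimate.
ratio = p_ai / p_asteroid


def proportional_count(reference_count, weight_ratio=ratio):
    """Representations the heavier item would need if coverage tracked the weights.

    reference_count: how many representations the lighter item has (hypothetical).
    """
    return reference_count * weight_ratio
```

So under those assumptions, every single asteroid movie would call for `proportional_count(1)` AI risk movies, which is the ratio itself.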

Ward A @ 2023-04-24T07:09 (+3)

I like the intent and I love the silliness, but the reasons we bring up alternatives include...

1. So we seem less biased and less like we've only considered a single option.

2. To cooperate with people who prioritise differently than us.

   - It seems better if e.g. when someone asks what the best religion is, Christians, Muslims, Buddhists, all agree on providing a list of options while also making clear that they themselves consider their own religion best for XYZ reasons.

And accurately representing our beliefs about the relative merits of focusing on each risk will to some extent negate 1 and 2, especially 1.

Phib @ 2023-05-15T03:33 (+2)

Casual comment on my own post here, but even the x-risk tag symbol is a supervolcano. Imagine if we just presented, over and over again, such symbols that… aren’t actually very representative of the field? I guess that’s my main argument here.

Zach Stein-Perlman @ 2023-05-15T03:57 (+3)

(I think it's a mushroom cloud, not a volcano.)

Phib @ 2023-05-15T04:53 (+1)

This is very fair hahaha