My current thoughts on the risks from SETI

By Matthew_Barnett @ 2022-03-15T17:17 (+47)

SETI stands for the search for extraterrestrial intelligence. A few projects, such as Breakthrough Listen, have secured substantial funding to observe the sky and crawl through the data to look for extraterrestrial signals.

A few effective altruists have proposed that passive SETI may pose an existential risk to humanity (for some examples, see here). The primary theory is that alien civilizations could continuously broadcast a highly optimized message intended to hijack or destroy any other civilizations unlucky enough to tune in. Many alien strategies can be imagined, such as sending the code for an AI that takes over the civilization that runs it, or sending the instructions on how to build an extremely powerful device that causes total destruction.

Note that this theory is different from the idea that active SETI (i.e., messaging aliens on purpose) is harmful. I think active SETI is substantially less likely to be harmful, and yet it has received far more attention in the literature.

Here, I collect my current thoughts about the topic, including arguments for and against the plausibility of the idea, and potential strategies to mitigate existential risk in light of the argument.

In the spirit of writing fast, but maintaining epistemic rigor, I do not come to any conclusions in this post. Rather, I simply summarize what I see as the state-of-the-debate up to this point, in the expectation that people can build on the idea more productively in the future, or point out flaws in my current assumptions or inferences.

Some starting assumptions

Last year, Robin Hanson et al. published their paper If Loud Aliens Explain Human Earliness, Quiet Aliens Are Also Rare. I consider their paper to provide the best available model to date on the topic of extraterrestrial intelligence and the Fermi Paradox (along with a very similar series of papers written by S. Jay Olson previously). You can find a summary of the model from Robin Hanson here, and a video summary here.

The primary result of their model is that we can explain the relatively early appearance of human civilization—compared to the total lifetime of the universe—by positing the existence of so-called grabby aliens that expand at a large fraction of the speed of light from their origin. Since grabby aliens quickly colonize the universe after evolving, their existence sets a deadline for the evolution of other civilizations. Our relative earliness may therefore be an observation-selection effect arising from the fact that civilizations like ours can't evolve after grabby aliens have already colonized the universe.

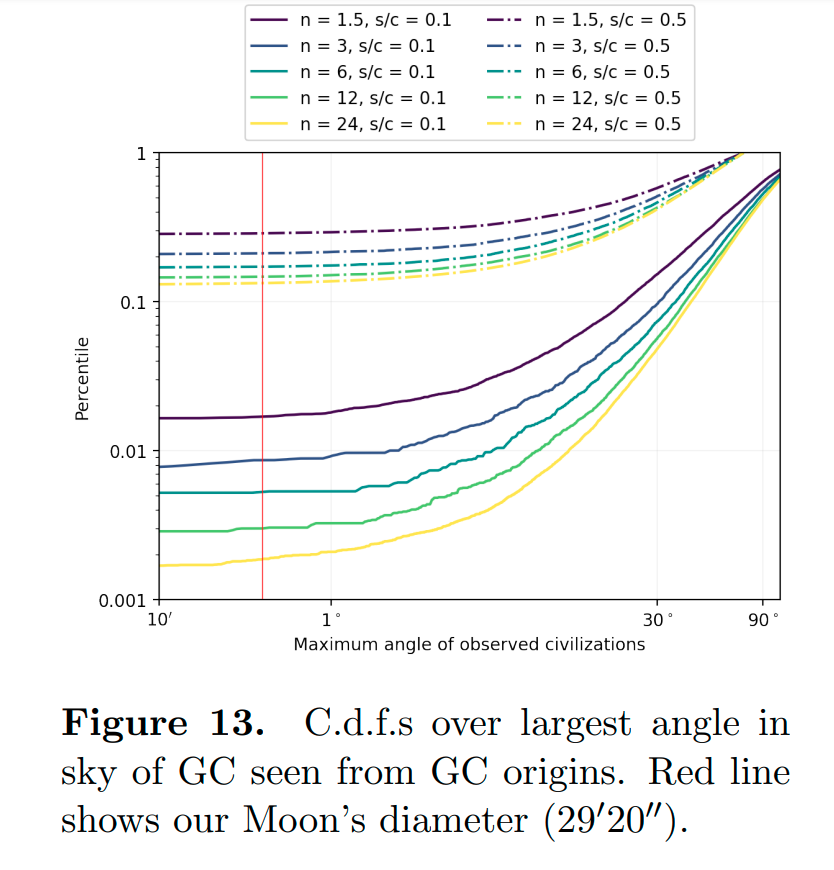

Assuming this explanation of human earliness is correct, and we are not an atypical civilization among all the civilizations that will ever exist, we should expect much of the universe to already be colonized by grabby aliens by now. In fact, as indicated by figure 13 in the paper, such alien volumes should appear to us to be larger than the full moon.

Given the fact that we do not currently see any grabby aliens in our sky, Robin Hanson concludes that they must expand quickly—at more than half the speed of light. He reaches this conclusion by applying a similar selection-effect argument as before: if grabby alien civilizations expanded slowly, then we would be more likely to see them in the night sky, but we do not see them.

However, the assumption that grabby aliens, if they existed, would be readily visible to observers, is arguable, as Hanson et al. acknowledge,

This analysis, like most in our paper, assumes we would have by now noticed differences between volumes controlled or not by GCs. Another possibility, however, is that GCs make their volumes look only subtly different, a difference that we have not yet noticed.

As I will argue, the theory that SETI is dangerous hinges crucially on the rejection of this assumption, along with the rejection of the claim that grabby aliens must expand at velocities approaching the speed of light. Together, these claims are the best reasons for believing that SETI is harmless. However, if we abandon these epistemic commitments, then SETI indeed may pose a substantial risk to humanity, making it worthwhile to examine them in greater detail.

Alien expansion and contact

Grabby aliens are not the only type of aliens that could exist. There could be "quiet" aliens that do not seek expansionist ends. However, in section 15 of their paper, Hanson et al. argue that in order for quiet aliens to be common, it must be that there is an exceptionally low likelihood that a given quiet alien civilization will transition to becoming grabby, which seems unjustified.

Given this inference, we should assume that the first aliens we come in contact with will be grabby. Coming into physical contact with grabby aliens within the next, say, 1000 years is very unlikely. The reason for this is that grabby aliens have existed, on average, for many millions of years, and thus, the only way we will encounter them physically any time soon is if we happened to right now be on the exact outer edge of their current sphere of colonization, which seems implausible (see figure 12 in Hanson et al. for a more quantified version of this claim).

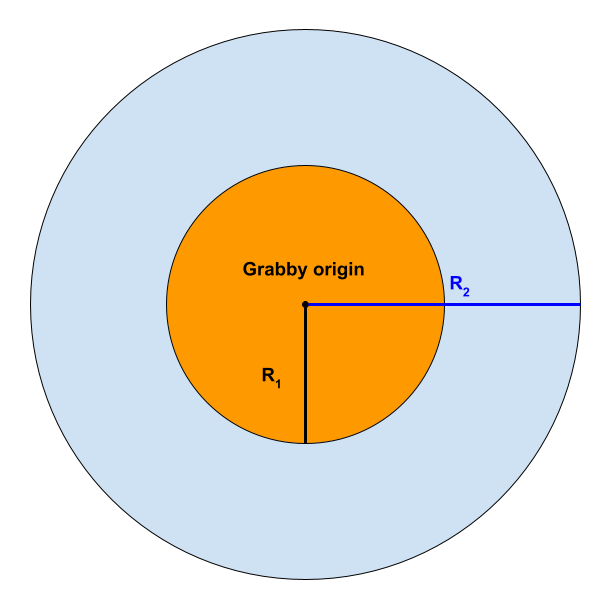

It is far more likely that we will soon come into contact with grabby aliens by picking up signals that they sent in the distant past. Since grabby alien expansion is constrained by a number of factors (such as interstellar dust, acceleration, and deceleration), they will likely expand at a velocity significantly below the speed of light. This implies that there will be a significant lag between when the first messages from grabby aliens could have been received by Earth-based observers, and the time at which their colonization wave arrives. The following image illustrates this effect, in two dimensions:

The orange region represents the volume of space that has already been colonized and transformed by a grabby alien civilization, and it has a radius of $vt$, where $v$ is the expansion speed and $t$ is the time since expansion began. By contrast, the light-blue region represents the volume of space that could have been receiving light-speed messages from the grabby aliens by now.

In general, the smaller the ratio $v/c$ of expansion speed to the speed of light is across all grabby aliens, the more likely it is that any given point in space will be in the light-blue region of some grabby alien civilization as opposed to the orange region. If we happen to be in the light-blue region of another grabby alien civilization, it would imply that we could theoretically tune in and receive any messages they decided to send out long ago.
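To make the lag concrete, here is a minimal sketch of how long a point in space would sit in the light-blue region before the colonization wave reaches it. It assumes a constant expansion speed and that messages and the wave leave the origin at the same moment; both are simplifications of mine, and the function name and example distance are purely illustrative.

```python
def signal_lead_time_years(distance_ly: float, v_over_c: float) -> float:
    """Years between the arrival of a light-speed message and the arrival of
    the colonization wave at a point `distance_ly` light-years from the origin.

    Illustrative assumptions: constant expansion speed, and the message and
    the wave depart from the origin simultaneously.
    """
    if not 0 < v_over_c <= 1:
        raise ValueError("v_over_c must be in (0, 1]")
    message_arrival = distance_ly           # light covers one light-year per year
    wave_arrival = distance_ly / v_over_c   # the slower wave takes longer
    return wave_arrival - message_arrival


if __name__ == "__main__":
    # Hypothetical distance of one million light-years from the origin.
    for ratio in (0.5, 0.9, 0.99):
        lead = signal_lead_time_years(1_000_000, ratio)
        print(f"v/c = {ratio}: messages lead the wave by about {lead:,.0f} years")
```

Even at speeds close to that of light, the lead time at intergalactic distances is long compared to the age of human civilization, which is why the listening window matters.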

Since the formula for the volume of a sphere is $V = \frac{4}{3}\pi r^3$, volume scales with the cube of the radius. With a ratio of even 0.9 (equivalently, if grabby aliens expand at 90% of the speed of light), only 72.9% of the total volume would be part of the orange region, with 27.1% belonging to the light-blue region. This presents a large opportunity for even very rapidly expanding grabby alien civilizations to continuously broadcast messages, in order to expand their supremacy by hijacking civilizations that happen to evolve in the light-blue region. I think grabby aliens would perform a simple expected-value calculation and conclude that continuous broadcasting is worth the cost in resources. Correspondingly, this opportunity provides the main reason to worry that we might be hijacked by a grabby alien civilization at some point ourselves.

Generally, the larger the ratio $v/c$ of expansion speed to the speed of light, the less credence we should have that we currently are in danger of being hijacked by incoming messages. At a ratio of 0.99, only about 3.0% of the total volume is in the light-blue region.
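The volume split can be checked with a few lines of code. This is only a sketch under the simple spherical picture used here: because volume scales with the cube of the radius, the colonized share of the message sphere is the cube of the speed ratio, and the light-blue share is whatever remains. The name `region_fractions` is my own, not something from the papers cited.

```python
def region_fractions(v_over_c: float) -> tuple[float, float]:
    """Return (colonized_fraction, message_only_fraction) of the sphere that
    light-speed messages have reached, for a given expansion-speed ratio.

    Assumes concentric spheres with radii in the ratio v/c, so volumes are in
    the ratio (v/c) ** 3.
    """
    if not 0 <= v_over_c <= 1:
        raise ValueError("v_over_c must be in [0, 1]")
    colonized = v_over_c ** 3
    return colonized, 1.0 - colonized


if __name__ == "__main__":
    for ratio in (0.5, 0.8, 0.9, 0.99):
        colonized, message_only = region_fractions(ratio)
        print(f"v/c = {ratio}: orange {colonized:.1%}, light-blue {message_only:.1%}")
```

The cubic dependence is what makes the light-blue share shrink so quickly as the expansion speed approaches the speed of light.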

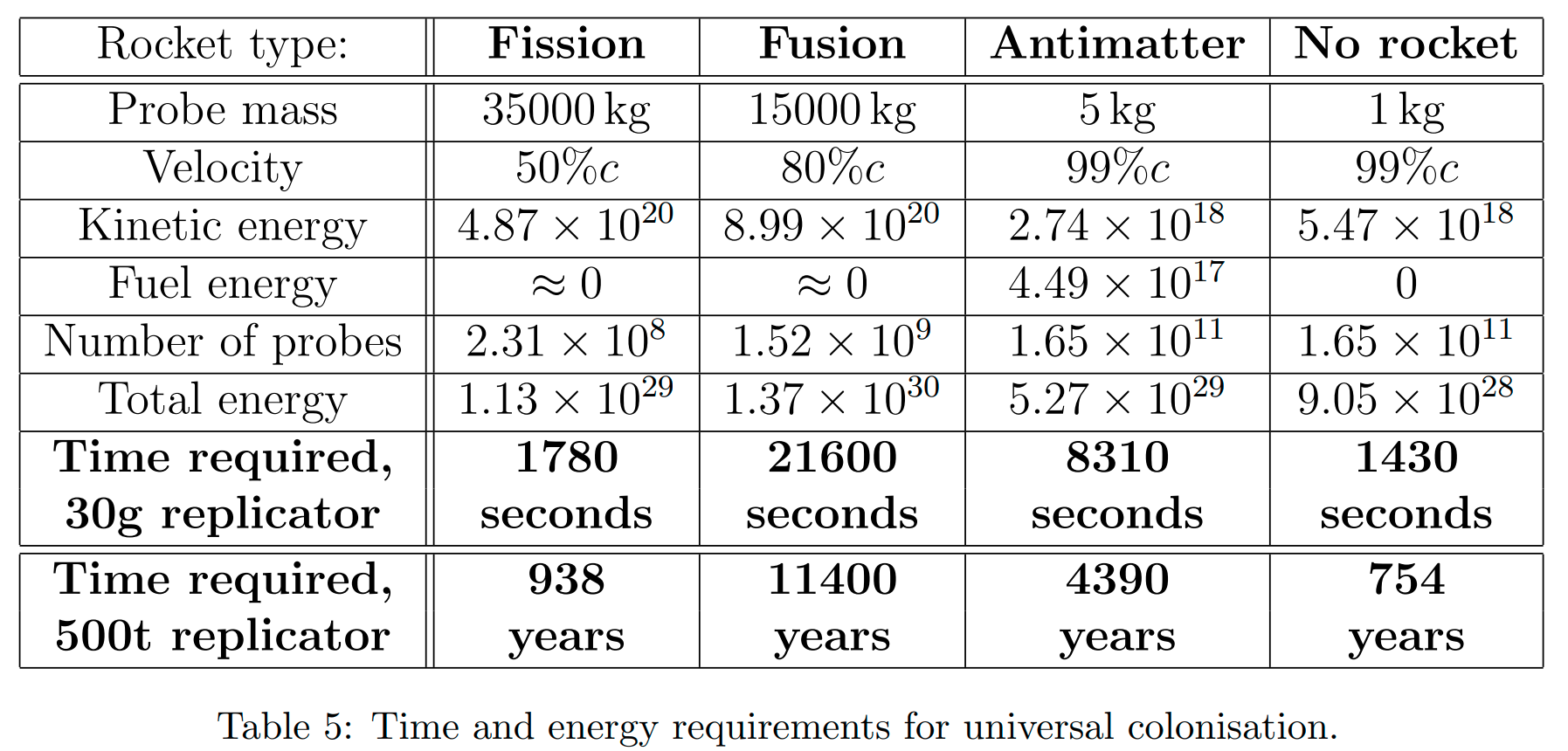

In their paper, Stuart Armstrong and Anders Sandberg attempt to show, using relatively modest assumptions, that grabby aliens could expand at speeds very close to the speed of light. This is generally recognized to be the strongest argument against the idea that SETI is dangerous.

According to table 5 in their paper, a fusion-based rocket could let an expansionist civilization expand at 80% of the speed of light. However, if we're able to use coilguns instead, then we get to 99%, which is perhaps more realistic.

Still, not everyone is convinced. For example, in a thread from 2018 in response to this argument, Paul Christiano wrote,

Overall I still think that you can't get to >90% confidence of >0.9c colonization speed (our understanding of physics/cosmology just doesn't seem high enough to get to those confidences).

I am not qualified to evaluate the plausibility of this assessment. That said, I think given that at least a few smart people seem to think that there is a non-negligible chance that near-light speed space colonization is unattainable, it is sensible to consider the risk of SETI seriously.

Alien strategy

Previously, I noted that another potential defeater to the idea that SETI is dangerous is that, if we were close enough to a grabby alien civilization to receive messages from them, they should already be clearly visible in the night sky, perhaps even with the naked eye. I agree their absence is suspicious, and it's a strong reason to doubt that there are any grabby aliens nearby currently.

However, given that we currently have very little knowledge about what form grabby alien structures might take, it would be premature to rule out the possibility that grabby alien civilizations may simply be transparent to our current astronomical instruments. I currently think that making progress on answering whether this idea is plausible is one of the most promising ways of advancing this debate further.

One possibility that we can probably rule out is the idea that grabby aliens would be invisible if they were actively trying to contact us. Wei Dai points out,

If matter-energy conversion is allowed, then an alien beacon should have been found easily through astronomical surveys (which photograph large fractions of the sky and then search for interesting objects) like the SDSS, since quasars can be found that way from across the universe (see following quote from Wikipedia), and quasars are only about 100x the luminosity of a galaxy. However this probability isn't 100% due to extinction and the fact that surveys may not cover the whole sky.

My understanding is that, given a modest amount of energy relative to the energy output of a single large galaxy, grabby aliens could continuously broadcast a signal that would be easily detectable across the observable universe. Thus, if we were in the sphere of influence of a grabby alien civilization, they should have been able to contact us by now.

In other words, the fact that we haven't yet been contacted by grabby aliens implies that they either don't exist near us, or they haven't been trying very hard to reach us.

Case closed, then, right? SETI might be hopeless, but at least it's safe? Not exactly.

While some readers may object that we are straining credulity at this point—and for the most part, I agree—there remains a credible possibility that grabby aliens would benefit by sending a message that was carefully designed to only be detectable by civilizations at a certain level of technological development. If true, this would be consistent with our lack of alien contact so far, while still suggesting that SETI poses considerable risk to humanity. This assumption may at first appear to be baseless—a mere attempt to avoid falsification—but there may be some merit behind it.

Consider a very powerful message detectable by any civilization with radio telescopes. The first radio signals from space that humans ever decoded were received in 1932 and analyzed by Karl Guthe Jansky. Let's also assume that the best strategy for an alien hijacker is to send the machine-code for an AI capable of taking over the civilization that receives it.

In 1932, computers were extremely primitive. Therefore, if humans had received such a message back then, there would have been ample time for us to learn a lot more about the nature of the message before we had the capability of running the code on a modern computer. During that time, it is plausible that we would uncover the true intentions behind it, and coordinate to prevent the code from being run.

By contrast, if humans today uncovered an alien message, there is a high likelihood that it would end up on the internet within days after the discovery. In fact, the SETI Institute even recommends this as part of their current protocol,

Confirmed detections: If the verification process confirms – by the consensus of the other investigators involved and to a degree of certainty judged by the discoverers to be credible – that a signal or other evidence is due to extraterrestrial intelligence, the discoverer shall report this conclusion in a full and complete open manner to the public, the scientific community, and the Secretary General of the United Nations. The confirmation report will include the basic data, the process and results of the verification efforts, any conclusions and interpretations, and any detected information content of the signal itself.

As Paul Christiano notes, aliens will likely spend a very large amount of resources simulating potential contact events, and optimizing their messages to ensure the maximum likelihood of successful hijacking. While we can't be sure what strategy that implies, it would be unwise to assume that alien messages will necessarily take any particular character, such as being easily detectable, or clearly manipulative.

Would alien contact be good?

Alien motivations are extremely difficult to predict. As a first-pass model, we could model them as akin to paperclip maximizers. If they hijacked our civilization to produce more paperclips, that would be bad from our perspective.

At the same time, Paul Christiano believes that there's a substantial chance that alien contact would be good on complicated decision-theoretic grounds,

If we are likely to build misaligned AI, then an alien message could also be a “hail Mary:” if the aliens built a misaligned AI then the outcome is bad, but if they built a friendly AI then I think we should be happy with that AI taking over Earth (since from behind the veil of ignorance we might have been in their place). So if our situation looks worse than average with respect to AI alignment, SETI might have positive effects beyond effectively reducing extinction risk.

The preceding analysis takes a cooperative stance towards aliens. Whether that’s correct or not is a complicated question. It might be justified by either moral arguments (from behind the veil of ignorance we’re as likely to be them as us) or some weird thing with acausal trade (which I think is actually relatively likely).

Wei Dai, on the other hand, remains skeptical about this argument. As for myself, I'm inclined to expect relatively successful AI alignment by default, making this point somewhat moot. But I can see why others might disagree and would prefer to take their chances running an alien program.

My estimate of risk

Interestingly, the literature on SETI risk is extremely sparse, even by the standards of ordinary existential risk work. Yet, while not rising anywhere near the level of probable, I think SETI risk is one of the more credible existential risks to humanity, other than AI. This makes it a somewhat promising target for future research.

To be more specific, I currently think there is roughly a 99% chance that one or more of the arguments I gave above imply that the risk from SETI is minimal. Absent these defeaters, I think there's perhaps a 10-20% chance that SETI will directly cause human extinction in the next 1000 years. This means I currently put the risk of human extinction due to SETI at around 0.1-0.2%. This estimate is highly non-robust.
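The arithmetic behind that figure is a simple product, sketched below. The inputs are just the rough credences stated above, and the function and variable names are illustrative; the output inherits all of the non-robustness of the inputs.

```python
def overall_risk(p_some_defeater_holds: float,
                 conditional_risk_range: tuple[float, float]) -> tuple[float, float]:
    """Combine the chance that no defeater holds with the conditional risk.

    overall = P(no defeater holds) * P(extinction | no defeater holds)
    """
    p_no_defeater = 1.0 - p_some_defeater_holds
    low, high = conditional_risk_range
    return p_no_defeater * low, p_no_defeater * high


if __name__ == "__main__":
    # Credences taken from the paragraph above: 99% that some defeater holds,
    # and a 10-20% extinction risk over 1000 years if none does.
    low, high = overall_risk(0.99, (0.10, 0.20))
    print(f"Overall extinction risk from SETI: {low:.1%} to {high:.1%}")
```

Because the result is linear in the first input, moving the 99% figure to 95% would multiply the overall estimate by five, which is one way to see how sensitive the estimate is.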

Strategies for mitigating SETI risk

My basic understanding is that SETI has experienced very rapid growth in recent years. For a long time, potential alien messages, such as the Wow! signal, were collected very slowly, and processed by hand.

We now appear to be going through a renaissance in SETI. The Breakthrough Listen project, which began in 2016,

is the most comprehensive search for alien communications to date. It is estimated that the project will generate as much data in one day as previous SETI projects generated in one year. Compared to previous programs, the radio surveys cover 10 times more of the sky, at least 5 times more of the radio spectrum, and work 100 times faster.

If we are indeed going through a renaissance, now would be a good time to advance policy ideas about how we ought to handle SETI.

As with other existential risks, often the first solutions we think of aren't very good. For example, while it might be tempting to push for a ban on SETI, in reality few people are likely to be receptive to such a proposal.

That said, there do appear to be genuinely tractable and robustly positive interventions on the table.

As I indicated above, the SETI Institute's protocol on how to handle confirmed alien signals seems particularly fraught. If a respectable academic wrote a paper carefully analyzing how to deal with alien signals, informed by the study of information hazards, I think there is a decent chance that the kind people at the SETI Institute would take note, and consider improving their policy (which, for what it's worth, was last modified in 2010).

If grabby aliens are close enough to us, and they really wanted to hijack our civilization, there's probably nothing we could do to stop them. Still, I think the least we can do is have a review process for candidate alien-signals. Transparency and openness are usually good for these types of affairs, but when there's a non-negligible chance of human extinction resulting from our negligence, I think it makes sense to consider creating a safeguard to prevent malicious signals from instantly going public after they're detected.

Pablo @ 2022-03-15T21:57 (+8)

Another relevant consideration is that SETI may help us better locate the Great Filter, which would have information value for cause prioritization and existential risk reduction. As Hanson writes, "Research into SETI and the evolution of life does much more than satisfy intellectual curiosity - it offers us uniquely long-term information about humanity's future."

Hauke Hillebrandt @ 2022-03-15T18:00 (+5)

What do you think of the Dark Forest theory?

Matthew_Barnett @ 2022-03-15T18:04 (+4)

I don't find the scenario plausible. I think the grabby aliens model (cited in the post) provides a strong reason to doubt that there will be many so-called "quiet" aliens that hide their existence. Moreover, I think malicious grabby (or loud) aliens would not wait for messages before striking, which the Dark Forest theory relies critically on. See also section 15 in the grabby aliens paper, under the heading "SETI Implications".

In general, I don't think there are significant risks associated with messaging aliens (a thesis that other EAs have argued for, along these lines).

Harlan @ 2022-03-15T20:01 (+2)

Thanks for this great writeup, this seems like a topic that deserves more discussion.

"Coming into physical contact with grabby aliens within the next, say, 1000 years is very unlikely. The reason for this is that grabby aliens have existed, on average, for many millions of years, and thus, the only way we will encounter them physically any time soon is if we happened to right now be on the exact outer edge of their current sphere of colonization, which seems implausible."

Or encounters with grabby aliens are so dangerous that they don't leave any conscious survivors, in which case we would need to be careful about using the lack of encounters in our past as evidence about the likelihood or frequency in the future.

Jon P @ 2022-03-16T16:48 (+1)

Nice writeup.

"The primary theory is that alien civilizations could continuously broadcast a highly optimized message intended to hijack or destroy any other civilizations unlucky enough to tune in."

One question I have is whether this is possible and how difficult it is?

I mean if I took a professional programmer and told them to write a message which would hijack another computer when sent to it isn't that extremely hard to do? I mean even if you already know about human programming you have no idea what system that machine is running or how it is structured.

Isn't then that problem like 10,000x harder when dealing with a totally different species and their computer equipment? The sender of the message would have no idea what computational structures they were using and absolutely no idea of the syntax or coding conventions? I mean even using binary rather than trinary or decimal is a choice?

Matthew_Barnett @ 2022-03-17T19:08 (+2)

"One question I have is whether this is possible and how difficult it is?"

I think it would be very difficult without human assistance. I don't, for example, think that aliens could hijack the computer hardware we use to process potential signals (though, it would perhaps be wise not to underestimate billion-year-old aliens).

We can imagine the following alternative strategy of attack. Suppose the aliens sent us the code to an AI with the note "This AI will solve all your problems: poverty, disease, world hunger etc.". We can't verify that the AI will actually do any of those things, but enough people think that the aliens aren't lying that we decide to try it.

After running the AI, it immediately begins its plans for world domination. Soon afterwards, humanity is extinct; and in our place, an alien AI begins constructing a world more favorable to alien values than our own.

Jon P @ 2022-03-18T20:19 (+1)

Yeah I think that's a good point. I mean I could see how you could send a civilisation the blueprints for atomic weapons and hope that they wipe themselves out or something, that would be very feasible.

I guess I'm a bit more skeptical when it comes to AI. I mean it's hard to get code to run and it has to be tailored to the hardware. And if you were going to teach them enough information to build advanced AIs I think there'd be a lot of uncertainty about what they'd end up making, I mean there'd be bugs in the code for sure.

It's an interesting argument though and I can really see your perspective on it.

MikeJohnson @ 2022-03-16T02:24 (+1)

I posted this as a comment to Robin Hanson’s “Seeing ANYTHING Other Than Huge-Civ Is Bad News” —

————

I feel these debates are too agnostic about the likely telos of aliens (whether grabby or not). Being able to make reasonable conjectures here will greatly improve our a priori expectations and our interpretation of available cosmological evidence.

Premise 1: Eventually, civilizations progress until they can engage in megascale engineering: Dyson spheres, etc.

Premise 2: Consciousness is the home of value: Disneyland with no children is valueless.

Premise 2.1: Over the long term we should expect at least some civilizations to fall into the attractor of treating consciousness as their intrinsic optimization target.

Premise 3: There will be convergence that some qualia are intrinsically valuable, and what sorts of qualia are such.

Conjecture: A key piece of evidence for discerning the presence of advanced alien civilizations will be megascale objects which optimize for the production of intrinsically valuable qualia.

Speculatively, I suspect black holes and pulsars might fit this description.

More:

https://opentheory.net/2019/09/whats-out-there/

https://opentheory.net/2019/02/simulation-argument/

————

Reasonable people can definitely disagree here, and these premises may not work for various reasons. But I’d circle back to the first line: I feel these debates are too agnostic about the likely telos of aliens (whether grabby or not). In this sense I think we’re leaving value on the table.

turchin @ 2022-06-02T10:10 (+1)

If aliens need only powerful computers to produce interesting qualia, this will be no different from other large scale projects, and boils down to some Dyson spheres-like objects. But we don't know how qualia appear.

Also, a whole human industry of tourism is only producing pleasant qualia. Extrapolating, aliens will have mega-tourism: almost pristine universe, where some beings interact with nature in very intimate ways. Now it becomes similar to some observations of UFOs.