Aaron Gertler's Quick takes

By Aaron Gertler 🔸 @ 2019-11-15T12:40 (+7)

Aaron Gertler @ 2022-04-20T21:57 (+27)

My ratings and reviews of rationalist fiction

I've dedicated far too much time to reading rationalist fiction. This is a list of stories I think are good enough to recommend.

Here's my entire rationalist fiction bookshelf: a mix of works written explicitly within the genre and other works that still seem to belong. (I've written reviews for some, but not all.)

Here are subcategories, with stories ranked in rough order from "incredible" to "good". The stories vary widely in scale, tone, etc., and you should probably just read whatever seems most interesting to you.

If you know of a good rational or rational-adjacent story I'm missing, let me know!

Long stories (rational fiction)

- Worm

- Worth the Candle

- Harry Potter and the Methods of Rationality

- Pale

- The Steerswoman (series)

- The Erogamer (warning: X-rated)

- My Little Pony: Friendship is Optimal

- The Gods Are Bastards

- Dr. Stone

- Significant Digits (HPMOR sequel)

- A Practical Guide to Evil

- Pokemon: The Origin of Species

- The Last Ringbearer

- Unsong

- Fine Structure

- Luminosity

- Ra

Long stories (not rational fiction, but close)

- The Dark Forest (second book of a trilogy, other books are good but not as close to rational fiction)

- The Diamond Age

- Red Plenty

- Strong Female Protagonist

- Spinning Silver

- Ender's Shadow

- Blindsight

- The Great Brain (series, quality is consistent)

- The Traitor Baru Cormorant

- The Martian

- Anathem

Short stories and novellas (rational or close)

- Most of Alicorn's work (generally not on Goodreads, so not rated there). Currently the best working rationalist fiction author, IMO.

- Friendship is Optimal (spinoff stories)

- The Metropolitan Man

- The Rules of Wishing

- The Dark Wizard of Donkerk

- It Looks Like You're Trying To Take Over The World

- The Thrilling Adventures of Lovelace and Babbage

- A Wizard's Guide to Defensive Baking

- The Sword of Good

- The Dark Lord's Answer

- A Girl Corrupted by the Internet is the Summoned Hero?

alexrjl @ 2022-04-21T05:26 (+4)

I'd be keen to hear how you're defining the genre, especially when the author isn't obviously a member of the community. I loved Worm and read it a couple of years ago, at least a year before I was aware rational fiction was a thing, and don't recall thinking "wow this seems really rationalist" so much as just "this is fun words go brrrrrrrr"

Aaron Gertler @ 2022-04-21T17:41 (+7)

I think that "intense, fanatical dedication to worldbuilding" + "tons of good problem-solving from our characters, which we can see from the inside" adds up to ratfic for me, or at least "close to ratfic". Worm delivers both.

alexrjl @ 2022-04-21T20:04 (+2)

Sounds right to me! I'm reading Worth the Candle at the moment :)

JasperGeh @ 2022-05-05T07:09 (+1)

Ah, that makes sense. I absolutely adore Fine Structure and Ra but never considered them ratfic (though I don’t know whether Sam Hughes hangs out in rat circles)

EdoArad @ 2022-04-21T10:22 (+2)

I also love Alexander Wales' ongoing This Used To Be About Dungeons

Charles He @ 2022-04-21T04:26 (+2)

Can you explain why you see The Dark Forest as an example of near-rationalist work?

I guess it shows societal dysfunction, the (extreme) alienness or hostility of reality, and some intense applications of game theory.

I think I want to understand “rationality” as much as the book.

Aaron Gertler @ 2022-04-21T17:43 (+9)

The book poses an interesting and difficult problem that characters try to solve in a variety of ways. The solution that actually works involves a bunch of plausible game theory and feels like it establishes a realistic theory of how a populous universe might work. The solutions that don't work are clever, but fail for realistic reasons.

Aside from the puzzle element of the book, it's not all that close to ratfic, but the puzzle is what compelled me. Certainly arguable whether it belongs in this category.

Rubi @ 2022-05-02T07:46 (+1)

I like many books on the list, but I think you're doing a disservice by trying to recommend too many books at once. If you can cut it down to 2-3 in each category, that gives people a better starting point.

Aaron Gertler @ 2022-05-02T10:36 (+5)

If you want recommendations, just take the first couple of items in each category. They are rated in order of how good I think they are. (That's if you trust my taste — I think most people are better off just skimming the story summaries and picking up whatever sounds interesting to them.)

Rubi @ 2022-05-03T20:02 (+1)

Cool, thanks!

Aaron Gertler @ 2021-03-09T10:32 (+24)

On worrying too much about the impact of a certain EA Forum post:

I am the Forum's lead moderator, but I don't mean to write this with my "mod" hat on — these are personal concerns, from someone who cares a lot about this space.

Michael Aird recently published a list of EA-related books he'd read.

Some people thought this could have bad side effects. The phrase "echo chamber" came up multiple times:

These titles should not become even more canonical in the EA community than they already are (I fear this might lead to an echo chamber)

And:

I agree with Hauke that this risks increasing the extent to which EA is an echo chamber.

I could appreciate some commenters' object-level concerns about the list (e.g. the authors weren't very diverse). But the "echo chamber" concern felt... way off.

Reasons I don't think this concern made much sense:

- The post wasn't especially popular. I don't know how much karma it had when the "echo chamber" comments were made, but it finished with 70 (as I write this), outside the top 10 posts for February.

- The author wasn't famous. If Will MacAskill published a list of book ratings, I could understand this kind of concern (though I still think we should generally trust people not to immediately adopt Will's opinions). Michael Aird is a fantastic Forum contributor, but he doesn't have the same kind of influence.

- Michael included an explicit caveat that he was not making recommendations, and that he didn't mean to make claims about how useful other people would find the books. If anything, this pushes against the idea of EA as an echo chamber.

If this post has any impact on EA as an entire movement, I'd guess that impact will be... minimal, so minimal as to be nigh-untraceable.

Meanwhile, it seems like it could introduce a few people to books they'll find useful — a positive development, and a good reason to share a book list!

*****

More broadly:

There is a sense in which every Forum post plays a role in shaping the culture of EA. But I think that almost every post plays a very small role.

I often hear from people who are anxious about sharing their views because they're afraid that they'll somehow harm EA culture in a way they can't anticipate.

I hear this more frequently from authors in groups that are already underrepresented in EA, which makes me especially nervous about the message spreading further.

While concerns about cultural shifts are sometimes warranted, I think they are sometimes brought up in cases where they don't apply. They seem especially misplaced when an author isn't making a claim about EA culture and is instead sharing a personal experience.

I'd like EA's culture to be open and resilient — capable of considering and incorporating new ideas without suffering permanent damage. The EA Forum should, with rare exceptions, be a place where people can share their thoughts, and discussion can help us make progress.

Downvoting and critical comments have a useful role in this discussion. But this specific type of criticism — "you shouldn't have shared this at all, because it might have some tiny negative impact on EA culture" — often feels like it cuts against openness and resilience.

MichaelA @ 2021-03-10T09:17 (+5)

I think these are good points, and the points made in the second half of your shortform are things I hadn't considered.

The rest of this comment says relatively unimportant things which relate only to the first half of your shortform.

---

If people read only this shortform, without reading my post or the comments there, there are two things I think they should know to help explain the perspective of the critical commenter(s):

- It wasn't just "a list of EA-related books [I'd] read" but a numbered, ranked list.

- That seems more able to produce echo-chamber-like effects than merely a list, or a list with my reviews/commentary but without a numbered ranking

- See also 80,000 Hours' discussion of their observation that people have sometimes overly focused on the handful of priority paths 80,000 Hours explicitly highlights, relative to figuring out additional paths using the principles and methodologies 80,000 Hours

- I don't immediately recall the best link for this, but can find one if someone is interested

- "the authors weren't very [demographically] diverse" seems like an understatement; in fact, all 50+ of them (some books had coauthors) were male, and I think all were white and from WEIRD societies.

- And I hadn't explicitly noticed that before the commenters pointed it out

- I add "demographically" because I think there's a substantial amount of diversity among the authors in terms of things like worldviews, but that's not the focus for this specific conversation

I think it's also worth highlighting that a numbered list has a certain attention-grabbing, clickbait-y quality, which I think slightly increases the "risk" of it having undue influence.

All that said, I do agree with you that the post (a) seems less likely to be remembered and have a large influence than a couple of critical commenters seemed to expect, and (b) seems less likely to cause a net increase in ideological homogeneity (or things like that) than those commenters seemed to expect.

MichaelA @ 2021-03-10T09:24 (+4)

[Some additional, even less important remarks:]

I don't know how much karma it had when the "echo chamber" comments were made, but it finished with 70 (as I write this), outside the top 10 posts for February.

Interestingly, the strong downvote was one of the first handful of votes on the post (so it was at relatively low karma then), and the comment came around then. Though I guess what was more relevant is how much karma/attention it'd ultimately get. But even then, I think the best guess at that point would've been something like 30-90 karma (based in part on only 1 of my previous posts exceeding 90 karma).

If Will MacAskill published a list of book ratings, I could understand this kind of concern (though I still think we should generally trust people not to immediately adopt Will's opinions). Michael Aird is a fantastic Forum contributor, but he doesn't have the same kind of influence.

Fingers crossed I'll someday reach Will's heights of community-destruction powers! (Using them only for good-as-defined-unilaterally-by-me, of course.)

Aaron Gertler @ 2021-06-21T04:37 (+22)

New EA music

José Gonzalez (GWWC member, EA Global performer, winner of a Swedish Grammy award) just released a new song inspired by EA and (maybe?) The Precipice.

Lyrics include:

Speak up

Stand down

Pick your battles

Look around

Reflect

Update

Pause your intuitions and deal with it

It's not as direct as the songs in the Rationalist Solstice, but it's more explicitly EA-vibey than anything I can remember from his (apparently) Peter Singer-inspired 2007 album, In Our Nature.

KarolinaSarek @ 2021-06-21T15:32 (+8)

"Visions", another song he released in 2021, gives me very strong EA vibes. Lyrics include:

Visions

Imagining the worlds that could be

Shaping a mosaic of fates

For all sentient beings

[...]

Visions

Avoidable suffering and pain

We are patiently inching our way

Toward unreachable utopias

Visions

Enslaved by the forces of nature

Elevated by mindless replicators

Challenged to steer our collective destiny

Visions

Look at the magic of reality

While accepting with all honesty

That we can't know for sure what's next

No, we can't know for sure what's next

But that we're in this together

We are here together

Aaron Gertler @ 2022-05-20T09:04 (+14)

Memories from starting a college group in 2014

In August 2014, I co-founded Yale EA (alongside Tammy Pham). Things have changed a lot in community-building since then, and I figured it would be good to record my memories of that time before they drift away completely.

If you read this and have questions, please ask!

Timeline

I was a senior in 2014, and I'd been talking to friends about EA for years by then. Enough of them were interested (or just nice) that I got a good group together for an initial meeting, and a few agreed to stick around and help me recruit at our activities fair. One or two of them read LessWrong, and aside from those, no one had heard of effective altruism.

The group wound up composed largely of a few seniors and a bigger group of freshmen (who then had to take over the next year — not easy!). We had 8-10 people at an average meeting.

Events we ran that first year included:

- A dinner with Shelly Kagan, one of the best-known academics on campus (among the undergrad population). He's apparently gotten more interested in EA since then, but during the dinner, he seemed a bit bemused and was doing his best to poke holes in utilitarianism (and his best was very good, because he's Shelly Kagan).

- A virtual talk from Rob Mather, head of AMF. Kelsey Piper was visiting from Stanford and came to the event; she was the first EA celebrity I'd met and I felt a bit star-struck.

- A live talk from Julia Wise and Jeff Kaufman (my second and third EA celebrities). They brought Lily, who was a young toddler at the time. I think that saying "there will be a baby!" drew nearly as many people as trying to explain who Jeff and Julia were. This was our biggest event, maybe 40 people.

- A lunch with Mercy for Animals — only three other people showed up.

- A dinner with Leah Libresco, an atheist blogger and CFAR instructor who converted to Catholicism before it was cool. This was a weird mix of EA folks and arch-conservatives, and she did a great job of conveying EA's ideas in a way the conservatives found convincing.

- A mixer open to any member of a nonprofit group on campus. (I was hoping to recruit their altruistic members to do more effective things — this sounds more sinister in retrospect than it did at the time.)

- We gained zero recruits that day, but — wonder of wonders — someone's roommate showed up for the free alcohol and then went on to lead the group for multiple years before working full-time on a bunch of meta jobs. This was probably the most impactful thing I did all year, and I didn't know until years later.

- A bunch of giving games, at activities fairs and in random dining halls. Lots of mailing-list signups, reasonably effective, and sponsored by The Life You Can Save — this was the only non-Yale funding we got all year, and I was ecstatic to receive their $300.

- One student walked up, took the proffered dollar, and then walked away. I was shook.

We also ran some projects, most of which failed entirely:

- Trying to write an intro EA website for high school students (never finished)

- Calling important CSR staff at major corporations to see if they'd consider working with EA charities. It's easy to get on the phone when you're a Yale student, but it turns out that "you should start funding a strange charity no one's ever heard of" is not a compelling pitch to people whose jobs are fundamentally about marketing.

- Asking Dean Karlan, development econ legend, if he had ideas for impactful student projects.

- "I do!"

- Awesome! What is it?

- "Can you help me figure out how to sell 200,000 handmade bags from Ghana?"

- Um... thanks?

- We had those bags all year and never even tried to sell them, but I think Dean was just happy to have them gone. No idea where they wound up.

- Paraphrased ideas that we never tried:

- See if Off! insect repellent (or other mosquito-fighting companies) would be interested in partnering with the Against Malaria Foundation?

- Come up with a Christian-y framing of EA, go to the Knights of Columbus headquarters [in New Haven], and see if they'll support top charities?

- Benefit concert with the steel drum band? [Co-president Pham was a member.]

- Live Below the Line event? [Dodged a bullet.]

- Write EA memes! [Would have been fun, oh well.]

- The full idea document is a fun EA time capsule.

- The only projects that achieved anything concrete were two fundraisers — one for the holidays, and one in memory of Luchang Wang, an active member (and fantastic person) whose death cast a shadow over the second half of the year. We raised $10-15k for development charities, of which maybe $5k was counterfactual (lots came from our members).

- Our last meeting of the year was focused on criticism — what the group (and especially me) didn't do well, and how to improve things. I don't remember anything beyond that.

- The main thing we accomplished was becoming friends. My happiest YEA-related journal entries all involve weird conversations at dinner or dorm-room movie nights. By the end of that year, I'd become very confident that social bonding was a better group strategy than direct action.

What it was like to run a group in 2014: Random notes

- I prepared to launch by talking to 3-4 leaders at other college groups, including Ben Kuhn, Peter Wildeford, and the head of a Princeton group that (I think) went defunct almost immediately. Ben and Peter were great, but we were all flying by the seats of our pants to some degree.

- While I kind of sucked at leading, EA itself was ridiculously compelling. Just walking through the basic ideas drove tons of people to attend a meeting/event (though few returned).

- Aside from the TLYCS grant and some Yale activity funding, I paid for everything out of pocket — but this was just occasional food and maybe a couple of train tickets. I never even considered running a retreat (way too expensive).

- Google Docs was still new and exciting back then. We didn't have Airtable, Notion, or Slack.

- I never mention CEA in my journal. I don't think I'd really heard of them while I was running the group, and I'm not sure they had group resources back then anyway.

- Our first academic advisor was Thomas Pogge, an early EA-adjacent philosopher who faded from public view after a major sexual harassment case. I don't think he ever responded to our very awkward "we won't be keeping you as an advisor" email.

But mostly, it was really hard

The current intro fellowships aren't perfect, and the funding debate is real/important, but oh god things are so much better for group organizers than they were in 2014.

I had no idea what I was doing.

There were no reading lists, no fellowship curricula, no facilitator guides, no nothing. I had a Google doc full of links to favorite articles and sometimes I asked people to read them.

I remember being deeply anxious before every meeting, event, and email send, because I was improvising everything and barely knew what we were supposed to be doing (direct impact? Securing pledges? Talking about cool blogs?).

Lots of people came to one or two meetings, saw how chaotic things were, and never came back. (I smile a bit when I see people complaining that modern groups come off as too polished and professional — that's not great, but it beats the alternative.)

I looked at my journal to see if the anxious memories were exaggerated. They were not. Just reading them makes me anxious all over again.

But that only makes it sweeter that Yale's group is now thriving, and that EA has outgrown the "students flailing around at random" model of community growth.

Aaron Gertler @ 2020-06-25T22:53 (+13)

Excerpt from a Twitter thread about the Scott Alexander doxxing situation, but also about the power of online intellectual communities in general:

I found SlateStarCodex in 2015. immediately afterwards, I got involved in some of the little splinter communities online, that had developed after LessWrong started to disperse. I don't think it's exaggerating to say it saved my life.

I may have found my way on my own eventually, but the path was eased immensely by LW/SSC. In 2015 I was coming out of my only serious suicidal episode; I was in an unhappy marriage, in a town where I knew hardly anyone; I had failed out of my engineering program six months prior.

I had been peripherally aware of LW through a few fanfic pieces, and was directed to SSC via the LessWrong comments section.

It was the most intimidating community of people I had ever encountered -- I didn't think I could keep up.

But eventually, I realized that not only was this the first group of people who made me feel like I had come *home,* but that it was also one of the most welcoming places I'd ever been (IRL or virtual).

I joined a Slack, joined "rationalist" tumblr, and made a few comments on LW and SSC. Within a few months, I had *friends*, some of whom I would eventually count among those I love the most.

This is a community that takes ideas seriously (even when it would be better for their sanity to disengage).

This is a community that thinks everyone who can engage with them in sincere good faith might have something useful to say.

This is a community that saw someone writing long, in-depth critiques on the material produced on or adjacent to LW/SSC...and decided that meant he was a friend.

I have no prestigious credentials to speak of. I had no connections, was a college dropout, no high-paying job. I had no particular expertise, a lower-class background than many of the people I met, a Red-Tribe-Evangelical upbringing and all I had to do, to make these new friends, was show up and join the conversation.

[...]

The "weakness" of the LessWrong/SSC community is also its strength: putting up with people they disagree with far longer than they have to. Of course terrible people slip through. They do in every group -- ours are just significantly more verbose.

But this is a community full of people who mostly just want to get things *right,* become *better people,* and turn over every single rock they see in the process of finding ways to be more correct -- not every person and not all the time, but more than I've seen everywhere else.

The transhumanist background that runs through the history of LW/SSC also means that trans people are more accepted here than anywhere else I've seen, because part of that ideological influence is the belief that everyone should be able to have the body they want.

It is not by accident that this loosely-associated cluster of bloggers, weird nerds, and twitter shitposters were ahead of the game on coronavirus. It's because they were watching, and thinking, and paying attention and listening to things that sound crazy... just in case.

There is a 2-part lesson this community held to, even while the rest of the world is forgetting it:

- You can't prohibit dissent

- It's sometimes worth it to engage someone when they have icky-sounding ideas

It was unpopular six months ago to think COVID might be a big deal; the SSC/LW diaspora paid attention anyways.

You can refuse to hang out with someone at a party. You can tell your friends they suck. But you can't prohibit them from speaking *merely because their ideas make you uncomfortable* and there is value in engaging with dissent, with ideas that are taboo in Current Year.

(I'm not leaving a link or username, as this person's Tweets are protected.)

aarongertler @ 2020-02-06T02:58 (+13)

Another brief note on usernames:

Epistemic status: Moderately confident that this is mildly valuable

It's totally fine to use a pseudonym on the Forum.

However, if you chose a pseudonym for a reason other than "I actively want to not be identifiable" (e.g. "I copied over my Reddit username without giving it too much thought"), I recommend using your real name on the Forum.

If you want to change your name, just PM or email me (aaron.gertler@centreforeffectivealtruism.org) with your current username and the one you'd like to use.

Reasons to do this:

- Real names make it easier for someone to track your writing/ideas across multiple platforms ("where have I seen this name before? Oh, yeah! I had a good Facebook exchange with them last year.")

- There's a higher chance that people will recognize you at meetups, conferences, etc. This leads to more good conversations!

- Aesthetically, I think it's nice if the Forum feels like an extension of the real world where people discuss ways to improve that world. Real names help with that.

- "Joe, Sarah, and Vijay are discussing how to run a good conference" has a different feel than "fluttershy_forever, UtilityMonster, and AnonymousEA64 are discussing how to run a good conference".

Some of these reasons won't apply if you have a well-known pseudonym you've used for a while, but I still think using a real name is worth considering.

Aaron Gertler @ 2022-04-24T07:31 (+12)

Memories from running a corporate EA group

From August 2015 to October 2016, I ran an effective altruism group at Epic, a large medical software corporation in Wisconsin. Things have changed a lot in community-building since then, but I figured it would be good to record my memories of that time, and what I learned.

If you read this and have questions, please ask!

Launching the group

- I launched with two co-organizers, both of whom stayed involved with the group while they were at Epic (but who left the company after ~6 months and ~1 year, respectively, leaving me alone).

- We found members by sending emails to a few company mailing lists for employees interested in topics like philosophy or psychology. I'm not sure how common it is for big companies to have non-work-related mailing lists, but they made our job much easier. We were one of many "extracurricular" groups at Epic (the others were mostly sports clubs and other outdoorsy things).

- We had 40-50 people at our initial interest meeting, and 10-20 at most meetings after that.

Running the group

- Meeting topics I remember:

- A discussion of the basic principles of EA and things our group might be able to do (our initial meeting)

- Two Giving Games, with all charities proposed by individual members who prepared presentations (of widely varying quality — I wish I'd asked people to present to me first)

- A movie night for Life in a Day

- Talks from:

- Rob Mather, head of the Against Malaria Foundation

- A member's friend who'd worked on public health projects in the Peace Corps and had a lot of experience with malaria

- A member of the group who'd donated a kidney to a stranger (I think this was our best-attended event)

- Unfortunately, Gleb Tsipursky

- Someone from Animal Charity Evaluators (don't know who, organized after I had left the company)

- We held all meetings at Epic's headquarters; most members lived in the nearby city of Madison, but two organizers lived within walking distance of Epic and didn't own cars, which restricted our ability to organize things easily. (I could have set up dinners and carpooled or something, but I wasn't a very ambitious organizer.)

- Other group activities:

- One of our other organizers met with Epic's head of corporate social responsibility to discuss EA. It didn't really go anywhere, as their current giving policy was really far from EA and the organizer came in with a fairly standard message that didn't account for the situation.

- That said, they were very open to at least asking for our input. We were invited to leave suggestions on their list of "questions to ask charities soliciting Epic's support", and the aforementioned meeting came together very quickly. (The organizer kicked things off by handing Epic's CEO a copy of a Peter Singer book — don't remember which — at an intro talk for new employees. She had mentioned in her talk that she loved getting book recommendations, and he had the book in his bag ready to go. Not sure whether "always carry a book" is a reliable strategy, but it worked well in that case.)

- Successfully lobbying Epic to add a global-health charity (Seva, I think) to the list of charities for which it matched employee donations (formerly ~100% US-based charities)

- This was mostly just me filling out a suggestion form, but it might have helped to be able to point to a group of people who also wanted to support that charity.

- Most of the charities on the list were specifically based in Wisconsin so employees could give as locally as possible. On my form, I pointed out that Epic had a ton of foreign-born employees, including many from developing countries, who might want to support a charity close to their own "homes". Not sure whether this mattered in Epic's decision to add the charity.

- I suggested several charities, including AMF, but Epic chose Seva as the suggestion they implemented (possibly because they had more employees from India than from Africa? Their reasoning was opaque to me.)

- An Epic spokesperson contacted the group when some random guy was found trying to pass out flyers for his personal anti-poverty project on a road near Epic. Once I clarified that he had no connection to us, I don't remember any other followup.

- Effects of the group:

- I think ~3 members wound up joining Giving What We Can, and a few more made at least one donation to AMF or another charity the group discussed.

- I found a new person to run the group before leaving Epic in November 2016, but as far as I know, it petered out after a couple more meetings.

Reflections // lessons learned

- This was the only time I've tried to share EA stuff with an audience of working professionals. They were actually really into it! Meetings weren't always well-attended, but I got a bunch of emails related to effective giving, and lunch invitations from people who wanted to talk about it. In some ways, it felt like being on the same corporate "team" engendered a bit more natural camaraderie than being students at the same school — people thought it was neat that a few people at Epic were "charity experts", and they wanted to hear from us.

- Corporate values... actually matter? Epic's official motto is "work hard, do good, have fun, make money" (the idea being that this is the correct ordering of priorities). A bunch of more senior employees I talked to about Epic EA took the motto quite seriously, and they were excited to see me running an explicit "do good" project. (This fact came up in a couple of my performance reviews as evidence that I was upholding corporate values.)

- Getting people to stick around at the office after a day of work is not easy. I think we'd have had better turnout and more enthusiasm for the group if we'd added some meetups at Madison restaurants, or a weekend hike, or something else I'd have been able to do if I owned a car (or was willing to pay for an Uber, something which felt like a wasteful expense in that more frugal era of EA).

akrolsmir @ 2022-04-24T08:19 (+3)

Thank you for writing this up! This is a kind of experience I haven't seen described much on the Forum, so I found it especially valuable to read about.

Curious: any reason it's not a top-level post?

Aaron Gertler @ 2022-04-25T01:09 (+3)

The group was small and didn't accomplish much, and this was a long time ago. I don't think the post would be interesting to many people, but I'm glad you enjoyed reading it!

Aaron Gertler @ 2020-06-23T07:33 (+10)

I recommend placing questions for readers in comments after your posts.

If you want people to discuss/provide feedback on something you've written, it helps to let them know what types of discussion/feedback you are looking for.

If you do this through a bunch of scattered questions/notes in your post, any would-be respondent has to either remember what you wanted or read through the post again after they've finished.

If you do this with a list of questions at the end of the post, respondents will remember them better, but will still have to quote the right question in each response. They might also respond to a bunch of questions with a single comment, creating an awkward multi-threaded conversation.

If you do this with a set of comments on your own post -- one for each question/request -- you let respondents easily see what you want and discuss each point separately. No awkward multi-threading for you! This seems like the best method to me in most cases.

Aaron Gertler @ 2022-04-08T02:21 (+9)

Advice on looking for a writing coach

I shared this with someone who asked for my advice on finding someone to help them improve their writing. It's brief, but I may add to it later.

I think you'll want someone who:

* Writes in a way that you want to imitate. Some very skilled editors/teachers can work in a variety of styles, but I expect that most will make your writing sound somewhat more like theirs when they provide feedback.

* Catches the kinds of things you wish you could catch in your writing. For example, if you want to try someone out, you could ask them to read over something you wrote a while back, then read over it yourself at the same time and see how their fixes compare to your own regrets.

* Has happy clients — ask for references. It's easy for someone to be a good writer but a lousy editor in any number of ways (too harsh, too easygoing, bad at explaining their feedback, disorganized and bad at follow-up...).

aarongertler @ 2019-11-15T12:40 (+8)

Quick PSA: If you have an ad-blocking extension turned on while you browse the Forum, it very likely means that your views aren't showing up in our Google Analytics data.

That's not something we care too much about, but it does make our ideas about how many users the Forum has, and what they like to read, slightly less accurate. Consider turning off your adblocker for our domain if you'd like to do us a tiny favor.

Larks @ 2019-11-15T14:50 (+5)

Done.

Aaron Gertler @ 2021-07-18T21:27 (+4)

I enjoyed learning about the Henry Spira award. It is given by the Johns Hopkins School of Public Health to "honor animal activists in the animal welfare, protection, or rights movements who work to achieve progress through dialogue and collaboration."

The criteria for the award are based on Peter Singer's summary of the methods Spira used in his own advocacy. Many of them seem like strong guiding principles for EA work in general:

- Understands public opinion, and what people outside of the animal rights/welfare movement are thinking.

- Selects a course of action based upon public opinion, intensity of animal suffering, and the opportunities for change.

- Sets goals that are achievable and that go beyond raising public awareness. Is willing to bring about meaningful change one step at a time.

- Is absolutely credible. Doesn't rely on exaggeration or hype to persuade.

- Is willing to work with anyone to make progress. Doesn't "divide the world into saints and sinners."

- Seeks dialogue and offers realistic solutions to problems.

- Is courageous enough to be confrontational if attempts at dialogue and collaboration fail.

- Avoids bureaucracy and empire-building.

- Tries to solve problems without going through the legal system, except as a last resort.

- Asks "Will it work?" whenever planning a course of action. Stays focused on the practical realities involved in change, as well as ethical and moral imperatives.

See the bottom of this page for a list of winners (most recently in 2011; I don't know whether the award is still "active").

Aaron Gertler @ 2021-07-05T09:07 (+4)

Tiny new feature notice: We now list all the comments marked as "moderator comments" on this page.

If you see a comment that you think we should include, but it isn't highlighted as a moderator comment yet, please let me know.

aarongertler @ 2020-02-06T02:54 (+3)

Brief note on usernames:

Epistemic status: Kidding around, but also serious

If you want to create an account without using your name, I recommend choosing a distinctive username that people can easily refer to, rather than some variant on "anonymous_user".

Among usernames with 50+ karma on the Forum, we have:

- AnonymousEAForumAccount

- anonymous_ea

- anonymousthrowaway

- anonymoose

I'm pretty sure I've seen at least one comment back-and-forth between two accounts with this kind of name. It's a bit much :-P

Linch @ 2020-02-06T02:58 (+2)

One possibility is to do what Google Docs does, and pick an animal at near-random (ideally a memorable one), and be AnonymousMouse, AnonymousDog, AnonymousNakedMoleRat, etc.

aarongertler @ 2020-02-06T02:59 (+2)

This feels like the next-worst option to me. I think I'd find it easier to remember whether "fluttershy_forever" or "UtilityMonster" said something than to remember whether "AnonymousDog" or "AnonymousMouse" said it.

Linch @ 2020-02-06T03:13 (+8)

I think this makes it clear that people are deliberately being anonymous rather than carrying over old internet habits.

Also, I think there's a possibility of information leakage if someone tries to be too cutesy with their pseudonyms. E.g., fluttershy_forever might lead someone to look for similar names on My Little Pony forums, where the user might be more willing to "out" themselves than if they were writing a critical piece on the EA Forum.

This is even more true for narrower interests. My Dominion username is a Kafka+Murakami reference, for example.

There's also a possibility of doxing in the other direction, where, e.g., someone may not want their EA Forum opinions to be associated with bad fanfiction they wrote when they were 16.

aarongertler @ 2020-02-06T12:43 (+2)

The deliberate anonymity point is a good one. The ideal would be a distinct anonymous username the person doesn't use elsewhere, but this particular issue isn't very important in any case.

jpaddison @ 2020-02-06T18:29 (+1)

You could write that the username is deliberately anonymous in your Forum bio.

Aaron Gertler @ 2021-05-20T02:27 (+2)

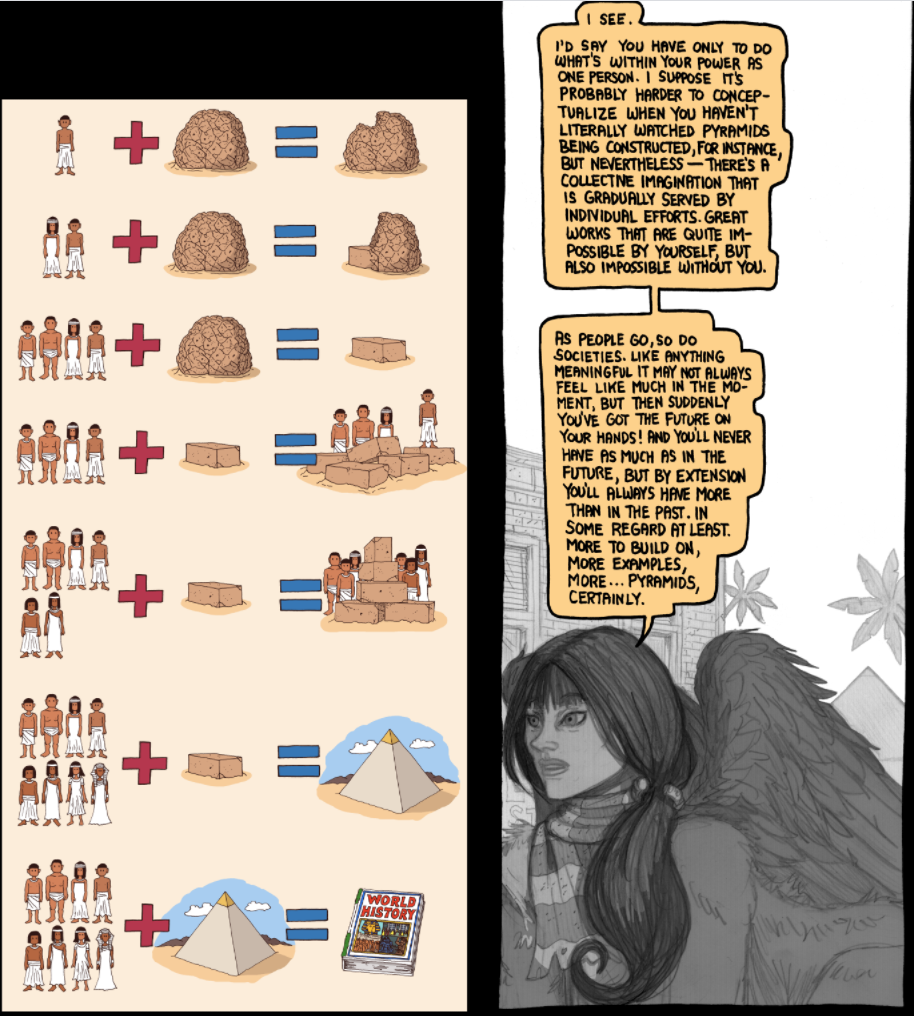

Subnormality, my favorite webcomic, on collective effort:

Spoken by the immortal Sphinx, a voice of wisdom in the series.

"I'd say you have only to do what's within your power as one person. I suppose it's probably harder to conceptualize when you haven't literally watched pyramids being constructed, for instance, but nevertheless — there's a collective imagination that is gradually served by individual efforts. Great works that are quite impossible by yourself, but also impossible without you.

"As people go, so do societies. Like anything meaningful, it may not always feel like much in the moment, but then suddenly you've got the future on your hands! And you'll never have as much as in the future, but by extension you'll always have more than in the past, in some regard at least. More to build on, more examples, more... pyramids, certainly."

aarongertler @ 2019-12-27T02:24 (+2)

I do a lot of cross-posting because of my role at CEA. I've noticed that this racks up a lot of karma that feels "undeserved" because of the automatic strong upvotes that get applied to posts. From now on (if I remember; feel free to remind me!), I'll be downgrading the automatic strong upvotes to weak upvotes. I'm not canceling the votes entirely because I'm guessing that at least a few people skip over posts that have zero karma.

This could be a bad idea for reasons I haven't thought of yet, and I'd welcome any feedback.

Stefan_Schubert @ 2019-12-27T10:32 (+12)

Could the option to strongly upvote one's own comments (and posts, in case you remove the automatic strong upvotes on posts) be disabled, as discussed here? Thanks.

jpaddison @ 2019-12-27T05:01 (+8)

Just to clarify, you know your self-votes don't get you karma?

aarongertler @ 2019-12-27T20:58 (+2)

...nope. That's good to know, thanks! Given that, I don't think I'll bother to un-strong-upvote myself.