[Cause Exploration Prizes] Research Communication

By Coefficient Giving @ 2022-08-30T03:13 (+6)

This anonymous essay was submitted to Open Philanthropy's Cause Exploration Prizes contest and published with the author's permission.

If you're seeing this in summer 2022, we'll be posting many submissions in a short period. If you want to stop seeing them so often, apply a filter for the appropriate tag!

In a nutshell

What is the problem?

Research communication is done almost exclusively by publishing in academic journals. Academic journals were designed centuries ago for an era that had at most hundreds of researchers and in which the printing press was the most advanced communication technology. Publishing in academic journals has many defects that curtail efficient research communication. Articles published in academic journals are static, have a very rigid structure, take a very long time to be published, and are very costly. Additionally, there is no good metric for evaluating the quality of each research finding in a paper. A more effective platform for research communication would directly affect the work of more than 10 million full-time researchers [1] and, by speeding up the progress of research, would have a clear positive impact on all inhabitants of the planet.

What are possible interventions?

Possible interventions will have to provide an alternative platform for publishing results and will have to eliminate the current dependence on citations for the evaluation of a researcher’s career. I suggest that the platform be modeled on the successful approach of open-source development with distributed version control, with the addition of prediction markets for evaluating the quality of the findings. In order to eliminate citations as a metric for academic career success, funding for research should be linked to a requirement of publishing on the new platform.

Who else is working on this?

There is an effort by some journals to improve the current process for publishing. However, they will still leave the same static and very rigid structure of the articles in place. There are also ideas on how to revolutionize the current system for research communication, but they have never been deployed simultaneously with measures to allow researchers to step out of the “publish or perish” culture.

The problem

Research communication is the process by which research findings are:

- described in writing (Goal A),

- evaluated for quality (Goal B),

- disseminated to the interested public (Goal C),

- and archived for future reference (Goal D).

By far the most common form of research communication is publishing in academic journals. The first academic journal was launched in 1665, and since the introduction of the peer-review process in the 1700s [2], the process for publishing through academic journals has changed very little. Researchers are supposed to follow five basic steps:

- obtain results (Step 1)

- prepare a manuscript (Step 2)

- submit the manuscript to a journal (Step 3)

- get the manuscript peer-reviewed (Step 4)

- obtain a decision from the journal (Step 5)

Notice that publishing in an academic journal achieves the four goals of research communication: the findings are described in a written article (Goal A), which is evaluated for quality (Goal B) by the peer-review process; if the manuscript is accepted for publication, the journal disseminates the findings (Goal C) and archives the article for the future (Goal D). The invention of academic journals was a substantial improvement in research communication, which used to be done exclusively by direct communication between two researchers, either in person or by letter. Computers and the Internet have improved the process of publishing in and accessing an academic journal, but the five basic steps remain the same. It is as if the invention of the internal combustion engine had resulted in our current transportation being carriages pulled by mechanical horses. In this section, I will discuss some of the multiple flaws of the current approach to research communication, and in the next section, I will outline possible interventions that would create an alternative to publishing in academic journals without those flaws.

The most important flaw in articles published in academic journals is that they are static. The scientific method works with hypotheses that are not static, because they have to be refined or rejected. Yet the results of an already published paper are rarely refined or rejected. The obstacle is that the venue for refining or rejecting published results is another article[1]. If the refinement is not large enough to justify a whole new paper, it will not occur. For example, if after publishing an article the researchers find a different method that obtains the same results faster, this new method will not be communicated. Rejecting a hypothesis has the same problem, since it rarely provides enough material for a whole new article, but it also links to another flaw in academic journals: the lack of negative results. To understand this flaw, we first have to discuss the use of citations as a metric in research communication.

The evaluation of a finding (Goal B) occurs in the publishing process only during peer-review (Step 4), as a yes-or-no decision on the publication of the whole article. Therefore, at the moment of publication, the only available indicator of the quality of a finding is the prestige of the journal that published it. The prestige of a journal is nowadays almost a synonym for its impact factor, that is, the mean number of citations received in a given year by the articles the journal published in the previous two years. After publication, a paper can be considered of high quality if it is referenced by the work of other researchers, i.e. if it is cited a lot. So citations have become the most important metric for quality in academia, and by Goodhart's law, they are no longer a good metric. The evaluation of the work of a researcher, which determines where they get hired and when they get promoted, is reduced to a weighted sum over their published articles. The sum is weighted mainly according to where each paper was published, how many citations it has, how recent it is, and the position of the researcher in the paper's list of authors[2]. As expected, research communication has shifted from its original objective of communicating findings to maximizing this weighted sum of papers. Accordingly, researchers try to publish as much as possible in the best journals possible, with obvious consequences: they split findings so that they can be published as separate articles, they try to please their reviewers as much as possible, they submit articles first to journals where they have friends among the editors (who make the final decision at Step 5), they suggest their own friends as reviewers, etc. We can now explain the lack of negative results in academic journals: a negative result will not lead to many citations, therefore it is not published by high-impact-factor journals, and thus it is not attractive for a researcher to publish.

There are journals that specialize in negative results. However, they tend to have, as expected, a very low impact factor, and because they impose the same structure on a published article, they carry a very high opportunity cost for researchers.
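To make the incentive concrete, here is a minimal Python sketch of the two quantities described above: the journal impact factor and a weighted sum over a researcher's papers. The impact factor follows the definition given in the text. The weighting formula is purely hypothetical: the essay names the four factors (venue, citations, recency, author position) but not how they are combined, so every constant and the first/last-author convention below are assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import List


def impact_factor(citations_this_year: int, items_prev_two_years: int) -> float:
    """Mean citations received this year per article that the journal
    published in the previous two years."""
    if items_prev_two_years <= 0:
        raise ValueError("journal published no articles in the two-year window")
    return citations_this_year / items_prev_two_years


@dataclass
class Paper:
    journal_impact_factor: float
    citations: int
    years_since_publication: int
    author_position: int  # 1 = first author
    n_authors: int


def paper_weight(p: Paper) -> float:
    """Hypothetical weight of one paper; not a formula used by any real institution."""
    venue = p.journal_impact_factor
    cited = 1.0 + p.citations / 10.0                          # assumed citation bonus
    recency = 1.0 / (1.0 + 0.1 * p.years_since_publication)   # assumed decay with age
    # Assumed convention: first and last authors count fully, others half.
    position = 1.0 if p.author_position in (1, p.n_authors) else 0.5
    return venue * cited * recency * position


def researcher_score(papers: List[Paper]) -> float:
    """Weighted sum over all of a researcher's papers."""
    return sum(paper_weight(p) for p in papers)
```

Under these assumed weights, one first-author paper with 20 citations in a journal with impact factor 5 scores 15.0, while the same work split into two papers with 10 citations each scores 20.0. Any score that rewards paper count in this way makes splitting findings the rational choice.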

A long time for publication is another major flaw in the process of academic journal publishing. The median time from submission of an article to publication is over 4 months, and the time increases substantially in the case of high-impact journals [4]. A rejection of a paper by a journal starts the clock again with the added delay of formatting the paper according to new guidelines (see below). Clearly, the current system is not adequate when time is of the essence for a result to be communicated, for example in emergency situations. A recent example was the need for communication of findings related to COVID-19. Publication in medRxiv—an Internet site for distributing articles related to health sciences without a peer-review process—saw an 18-fold increase in 2020 [5]. Currently, there are more than 50 pre-print repositories [10], such as medRxiv. However, the lack of a peer-review process makes it difficult to ascertain the veracity of the results presented in pre-prints. Additionally, pre-prints do not weigh strongly when a scientist’s work is judged for hiring, funding, or career advancement [11].

The structure that is imposed on a manuscript to be published in an academic journal is very rigid. The basic structure is a title, a list of authors, an abstract, and separate sections for introduction, methods, results, and discussion [6]. This rigidity creates the need to write new introduction and discussion sections even when a researcher only wants to communicate a new method and result that enhance previous research. Besides the basic structure, the researcher also has to follow formatting guidelines that are different for each journal: limits on the number of characters in the title, limits on the number of words in the abstract, the main text, or the figure legends, formatting of the abstract (for example as a paragraph or with delineated subsections), the citation style, a limit on the number of figures, the size and quality of the figures, the table formatting, additional sections such as highlights or research in context, or even spelling, such as the use of American vs British English. A rejection of a paper by a journal restarts the process of formatting the manuscript, this time according to the guidelines of another journal. The cost of the effort to comply with submission requirements alone is estimated to be over $1 billion in researchers' time [7].

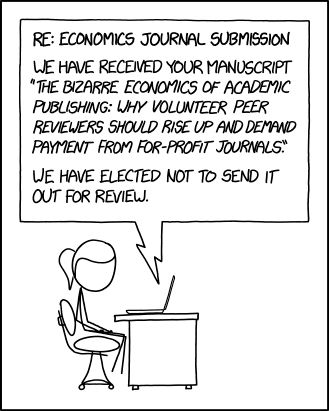

Academic journals are a middle-man between the researcher and whoever could be interested in reading their findings. It comes as a surprise to most non-academic people that authors have to pay up to thousands of dollars to a journal for publishing an article. The advent of the Internet drove some journals to stop the actual printing of their issues, but costs for publishing have not reflected that significant saving. In fact, costs have been going up when journals have been pushed to provide an option for open access[3]. For example, publishing an open-access paper in Nature costs over $11,000 [8]. Costs for publishing impose an additional barrier for early career researchers and could lead to a Catch-22: they need funding via grants so that they can pay for the high costs of publishing in top journals but they cannot obtain funding because their work has not been published in top journals. The journal as a middle-man is the price to pay for the evaluation of the quality of research (Goal B). However, that work is done by other researchers acting as reviewers without any remuneration for their time.[4] Two xkcd comics illustrate the flaw of academic journals as the middle-man [9,10]:

Possible Interventions

The best way to improve research communication is to create an alternative to academic journal articles. A successful intervention will have to tackle the problem by investing on two fronts:

- The development of the platform

- Incentivizing researchers to use it

The development of the platform presents interesting challenges. I believe that it should start by mimicking the model of open-source code development with a distributed version control system. The end product could be something like what GitHub is for open-source code development. The open-source model has the advantage of fostering collaboration and minimizing duplication of effort, unlike the current publishing in academic journals, where the fear of “being scooped”, that is, of someone else publishing your idea before you, is always latent. Additionally, the open-source model will allow pieces of research to be published separately and attributed to different authors. “Pushing commits” to an already published finding will speed up the publishing of results substantially and bring research communication much closer to the ideal of the scientific method, as opposed to the slow, static nature of publishing in academic journals.
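As a rough illustration of what “pushing commits” to a published finding could mean, the following Python sketch stores a finding as a chain of revisions, each attributed to its own author and linked to its parent by a content hash. The class names (`Revision`, `Finding`) and the hashing scheme are hypothetical and only loosely inspired by how distributed version control systems identify commits; a real platform would more likely build on an existing system.

```python
import hashlib
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass(frozen=True)
class Revision:
    author: str
    content: str
    parent_id: Optional[str]  # None for the first revision of a finding

    @property
    def revision_id(self) -> str:
        # Content-addressed ID: changing the author, the content, or the
        # parent changes the ID, so history cannot be silently rewritten.
        payload = f"{self.parent_id}\n{self.author}\n{self.content}"
        return hashlib.sha256(payload.encode("utf-8")).hexdigest()


@dataclass
class Finding:
    title: str
    revisions: List[Revision] = field(default_factory=list)

    def push(self, author: str, content: str) -> Revision:
        """Append a revision on top of the latest one and return it."""
        parent = self.revisions[-1].revision_id if self.revisions else None
        rev = Revision(author=author, content=content, parent_id=parent)
        self.revisions.append(rev)
        return rev

    def contributors(self) -> List[str]:
        """Authors in order of first contribution, without duplicates."""
        return list(dict.fromkeys(r.author for r in self.revisions))


# Hypothetical usage: a second researcher contributes a faster method
# without having to write a whole new article.
finding = Finding("X supports the hypothesis that A causes B")
finding.push("alice", "Original method and result")
finding.push("bob", "Faster method that reproduces the same result")
```

In this sketch each refinement stays attached to the finding it improves, and credit is recorded per revision rather than per article.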

The evaluation of research findings has a lot of room for improvement. Even in the current system, there should be an effort to finance peer-reviewing. Grants could be allocated for the sole purpose of financing the time that researchers spend peer-reviewing. For example, a grant could cover 10% of a researcher's salary so that they can dedicate one half-day a week to peer-reviewing. As an experiment, such grants could be randomly assigned among interested researchers, with very few requirements. However, we can do better in rating quality. The current system has a binary outcome, where all the results contained in a paper are simultaneously deemed worthy of publication or not. The open-source model would permit evaluating each finding for quality separately, but we should go further and provide a rating for each finding. In order to do so, I believe that we should incorporate prediction markets. Each published finding will generally be of the form “we did X to support the hypothesis that A causes B”. For each finding, a prediction market of the form “X supports the hypothesis that A causes B” could be created. The interested public (that is, the audience of Goal C of research communication) can get involved in evaluating the veracity of the hypothesis according to the evidence presented, or even by trying to replicate the results. Markets that pass a certain threshold of bidders and of probability of being true (say higher than 95%) can be closed automatically, and the finding can skip further peer-review. Likewise, if the probability is very low (say lower than 5%) after a lot of bidding, the finding can be deemed not true. Intermediate cases can be resolved by assigned peer-reviewing.
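The resolution rule described above can be sketched as a small Python function. The 95% and 5% cut-offs come from the text; the minimum number of bidders (`min_bidders=50`) is an assumed placeholder, since the essay only speaks of “a certain threshold”, and the function name and return labels are likewise hypothetical.

```python
def resolve_finding(probability: float,
                    n_bidders: int,
                    min_bidders: int = 50,        # assumed; the text gives no number
                    accept_above: float = 0.95,   # "say higher than 95%"
                    reject_below: float = 0.05) -> str:  # "say lower than 5%"
    """Decide what happens to a finding's prediction market.

    Returns one of:
      "open"        - not enough bidders yet, keep the market running
      "accepted"    - close the market, the finding skips further peer-review
      "rejected"    - close the market, the finding is deemed not true
      "peer_review" - intermediate probability, assign reviewers
    """
    if not 0.0 <= probability <= 1.0:
        raise ValueError("probability must be between 0 and 1")
    if n_bidders < min_bidders:
        return "open"
    if probability > accept_above:
        return "accepted"
    if probability < reject_below:
        return "rejected"
    return "peer_review"


# Hypothetical usage: a well-traded market with an intermediate price
# is routed to assigned reviewers.
decision = resolve_finding(probability=0.60, n_bidders=120)
```

Keeping the thresholds as parameters leaves room for different fields to require stronger or weaker consensus before a market is closed automatically.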

However, no matter how good the new platform is, researchers will not use it unless they can be assured that their careers will not suffer from not publishing in academic journals. The key will be to break the dependence on citations as a metric for quality of work, especially at academic institutions where citations are used to evaluate the hiring and promotion of faculty. In order to succeed, philanthropic investment will have to be directed to funding experimental new positions for researchers, where institutions commit to evaluating the researcher for promotion according to the quality of their results on the new platform. Philanthropic funding of particular research can also be tied to a requirement to publish results on the new platform. More than half of all scientists report that competition for funding is the biggest challenge in career progression [11], so the new platform could also help with scientific funding, a problem which on its own is worthy of a Cause Exploration Prize. A first possible intervention could be the funding of research positions to work on the development of the platform.

Who else is working on this?

Journals have tried to implement changes to improve the current system. Letters to the editor try to circumvent the problem of improving a hypothesis by reporting possible modifications. However, although a letter does not require writing a whole article, the improvement it presents has to be a major result, it cannot be written by an author of the original paper, and writing a letter carries the opportunity cost of not working on one’s own paper. Some journals have implemented a double-blind review process [12,13] to try to eliminate the bias of judging findings according to how much the reviewers like the authors of the paper. However, most journals, including the most prestigious ones, do not have a double-blind review process. Additionally, among peers, it is easy to guess the authorship of a paper based on previous articles by the authors. Other journals are making the peer-review process more transparent by publishing the reviews of a paper along with the paper itself. However, this is not a common practice, and researchers rarely read the reviews after reading a paper. There is a push for journals to accept unformatted papers at first submission, which would decrease the amount of time dedicated to formatting the paper to the guidelines of the journal, but the practice has yet to become the norm. Moreover, all efforts by journals have the disadvantage of staying with the same rigid model of the five steps outlined above, with all the major flaws unresolved.

Ideas for alternatives to journal publishing have been presented, for example, the altmetrics manifesto [14]. Tools have been developed to improve the evaluation of research outside of the classical peer-review process, for example, the plaudit browser extension [15] or Fermat’s Library [16]. However, no matter how good the ideas or the tools are, academic journal publishing will not cease to be the main medium for research communication until there is a concerted effort to eliminate how researchers are judged solely according to their published articles.

Sources

1. The race against time for smarter development. [cited 10 Aug 2022]. Available: https://www.unesco.org/reports/science/2021/en

2. Scholarly Publishing: a Brief History. [cited 11 Aug 2022]. Available: https://www.aje.com/arc/scholarly-publishing-brief-history/

4. Powell K. Does it take too long to publish research? In: Nature Publishing Group UK [Internet]. 10 Feb 2016 [cited 10 Aug 2022]. doi:10.1038/530148a

6. Hofmann AH. Scientific Writing and Communication. Oxford University Press, USA; 2019.

7. Jiang Y, Lerrigo R, Ullah A, Alagappan M, Asch SM, Goodman SN, et al. The high resource impact of reformatting requirements for scientific papers. PLoS One. 2019;14. doi:10.1371/journal.pone.0223976

8. Else H. Nature journals reveal terms of landmark open-access option. In: Nature Publishing Group UK [Internet]. 24 Nov 2020 [cited 11 Aug 2022]. doi:10.1038/d41586-020-03324-y

9. arXiv. In: xkcd [Internet]. [cited 11 Aug 2022]. Available: https://xkcd.com/2085/

10. Peer Review. In: xkcd [Internet]. [cited 11 Aug 2022]. Available: https://xkcd.com/2025/

11. Woolston C. Satisfaction in science. In: Nature Publishing Group UK [Internet]. 24 Oct 2018 [cited 10 Aug 2022]. doi:10.1038/d41586-018-07111-8

12. Trends. Trends in Pediatrics. [cited 11 Aug 2022]. Available: https://trendspediatrics.com/double-blind

13. Publishing Ethics Resource Kit. Double-Blind Peer Review Guidelines. [cited 11 Aug 2022]. Available: https://www.journals.elsevier.com/social-science-and-medicine/policies/double-blind-peer-review-guidelines

14. altmetrics.org. [cited 11 Aug 2022]. Available: http://altmetrics.org/manifesto/

15. Holcombe A. Plaudit · Open endorsements from the academic community. [cited 11 Aug 2022]. Available: https://plaudit.pub/

16. Fermat’s Library. In: Fermat’s Library [Internet]. [cited 11 Aug 2022]. Available: https://fermatslibrary.com/

- ^

Technically, corrections can be sent to a journal so that they are published in a future issue. However, corrections to articles diminish the perceived quality of the research, and thus are not very common.

- ^

In developing countries without a strong research tradition, researchers can be evaluated solely on the number of articles that they publish, leading to consequences such as predatory journals [3].

- ^

Most journals restrict access to their articles unless you or your institution pays for a very costly subscription. There has been a push to allow open access to research to all citizens, especially when the research and even the costs of publication come from grants from the government, i.e. from citizens’ taxes.

- ^

Some journals have specialized reviewers who are paid to review certain aspects of the paper, for example the statistics, but it is not a common practice, and all other reviewers are unpaid.

eisoj @ 2022-09-14T20:29 (+1)

I think this issue is very important and agree with the author that an open-source approach would make for an interesting and effective solution.