AISN #9: Statement on Extinction Risks, Competitive Pressures, and When Will AI Reach Human-Level?

By Center for AI Safety, Dan H @ 2023-06-06T15:56 (+12)

This is a linkpost to https://newsletter.safe.ai/p/ai-safety-newsletter-9

Welcome to the AI Safety Newsletter by the Center for AI Safety. We discuss developments in AI and AI safety. No technical background required.

Subscribe here to receive future versions.

Top Scientists Warn of Extinction Risks from AI

Last week, hundreds of AI scientists and notable public figures signed a public statement on AI risks written by the Center for AI Safety. The statement reads:

“Mitigating the risk of extinction from AI should be a global priority alongside other societal-scale risks such as pandemics and nuclear war.”

The statement was signed by a historic, diverse coalition of AI experts along with philosophers, ethicists, legal scholars, economists, physicists, political scientists, pandemic scientists, nuclear scientists, and climate scientists. Together, the signatories establish the risk of extinction from advanced, future AI systems as one of the world’s most important problems.

The international community is well represented by signatories who hail from more than two dozen countries, including China, Russia, India, Pakistan, Brazil, the Philippines, South Korea, and Japan. The statement also affirms growing public sentiment: a recent poll found that 61 percent of Americans believe AI threatens humanity’s future.

A broad variety of AI risks must be addressed. As stated in the first sentence of the signatory page, there are many “important and urgent risks from AI.” Extinction is a risk, but there are a variety of others, including misinformation, arbitrary bias, cyberattacks, and weaponization.

Different AI risks share common solutions. For example, an open letter from the AI Now Institute argued that AI developers should be held legally liable for harms caused by their systems. This liability would apply across a range of potential harms, from copyright infringement to creating chemical weapons.

Similarly, autonomous AI agents that act without human oversight could amplify bias and pursue goals misaligned with human values. The AI Now Institute’s open letter argued that humans should be able to “intercede in order to override a decision or recommendations that may lead to potential harm.”

Yesterday, the Center for AI Safety wrote about support for several policy proposals from the AI ethics community, including the ones described above. Governing AI effectively will require addressing a range of different problems, and we hope to build a broad coalition for that purpose.

Policymakers are paying attention to AI risks. Rishi Sunak, Prime Minister of the United Kingdom, retweeted the statement and responded, “The government is looking very carefully at this.” During a meeting last week, he “stressed to AI companies the importance of putting guardrails in place so development is safe and secure.”

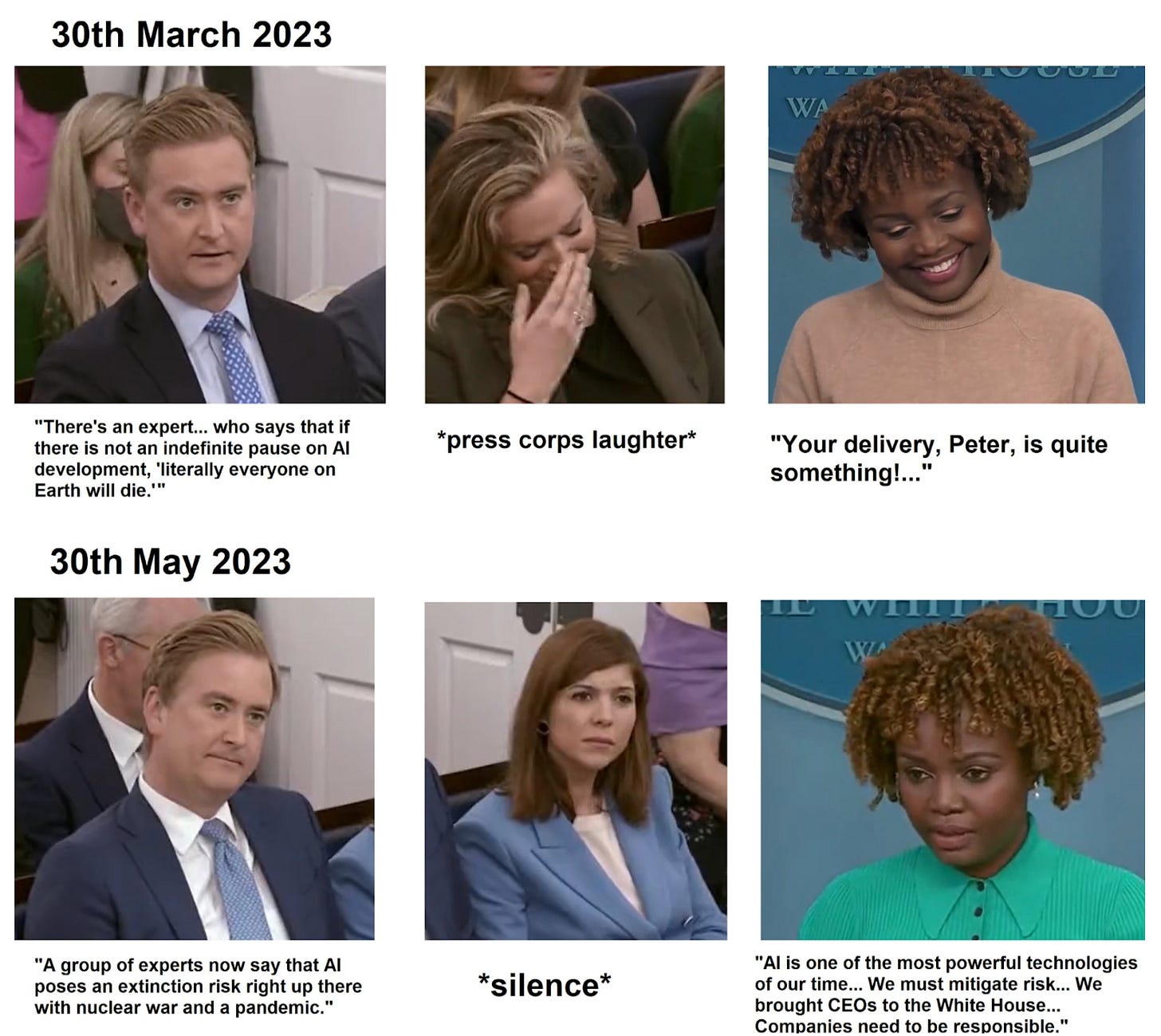

Only a few months ago, White House press secretary Karine Jean-Pierre laughed at the notion of extinction risks from AI. Now, the Biden administration is striking a different tone. Its new 2023 National AI R&D Strategic Plan explicitly calls for additional research on the “existential risk associated with the development of artificial general intelligence.”

The White House’s attitude towards extinction risks from AI has rapidly changed.

The statement was widely covered by media outlets, including the New York Times, CNN, Fox News, Bloomberg News, BBC, and others.

Competitive Pressures in AI Development

What do climate change, traffic jams, and overfishing have in common? In the parlance of game theory, they’re all collective action problems.

We’d all benefit by cooperating to reduce carbon emissions, the number of cars on the road, and the depletion of natural resources. But if everyone selfishly chooses what’s best for themselves, nobody cooperates and we all lose out.
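To make that structure concrete, here is a minimal Python sketch of a two-player collective action problem, modeled as a standard prisoner’s dilemma. The payoff numbers are hypothetical, chosen only so that free-riding pays for each individual while mutual cooperation pays most overall.

```python
# Hypothetical payoffs for a two-player collective action problem
# (a standard prisoner's dilemma). Higher numbers are better.
# Each entry maps (my_choice, their_choice) -> my payoff.
PAYOFFS = {
    ("cooperate", "cooperate"): 3,  # both cut emissions: shared benefit
    ("cooperate", "defect"): 0,     # I bear the cost while they free-ride
    ("defect", "cooperate"): 5,     # I free-ride on their effort
    ("defect", "defect"): 1,        # nobody cooperates: everyone loses out
}

CHOICES = ("cooperate", "defect")


def best_response(their_choice: str) -> str:
    """Return the choice that maximizes my payoff given the other player's choice."""
    return max(CHOICES, key=lambda mine: PAYOFFS[(mine, their_choice)])


def total_payoff(a: str, b: str) -> int:
    """Sum of both players' payoffs (the game is symmetric, so one table serves both)."""
    return PAYOFFS[(a, b)] + PAYOFFS[(b, a)]


if __name__ == "__main__":
    # Defecting is the selfish best response whatever the other player does...
    for theirs in CHOICES:
        print(f"If they {theirs}, my best response is to {best_response(theirs)}")
    # ...yet mutual defection leaves both players worse off than mutual cooperation.
    print("Both defect:", total_payoff("defect", "defect"))
    print("Both cooperate:", total_payoff("cooperate", "cooperate"))
```

Whatever the other player does, defecting scores higher for the individual, but two defectors end up with a combined payoff of 2 versus 6 for two cooperators.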

AI development suffers from these problems. Some are imaginary, like the idea that we must race towards building a potentially dangerous technology. Others are unavoidable, like Darwin’s laws of natural selection. Navigating these challenges will require clear-eyed thinking and proactive governance for the benefit of everyone.

AI should not be an arms race. Imagine a crowd of people standing on a lake of thin ice. On a distant shore, they see riches of silver and gold. Some might be tempted to sprint for the prize, but they’d risk breaking the ice and plunging themselves into the icy waters below. In this situation, even a selfish individual should tread carefully.

This situation is analogous to AI development, argues Katja Grace in a new op-ed for TIME Magazine. Everyone recognizes the potential pitfalls of the technology: misinformation, autonomous weapons, even rogue AGI. The developers of AI models are not immune from these risks; if humanity suffers from recklessly developed AI, the companies that built it will suffer too. Therefore, self-interested actors should prioritize AI safety.

Sadly, not every company has followed this logic. Microsoft CEO Satya Nadella celebrated the idea of an AI race, even as his company allowed its chatbot Bing to threaten users who did not follow its rules. Similarly, only days after OpenAI’s alignment lead Jan Leike cautioned about the risks of “scrambling to integrate LLMs everywhere,” OpenAI released plugins which allow ChatGPT to send emails, make online purchases, and connect with business software. Even if their only goal is profit, more companies should prioritize improving AI safety.

Natural selection favors AIs over humans. We tend to think of natural selection as a biological phenomenon, but it governs many competitive processes. Evolutionary biologist Richard Lewontin proposed that natural selection will govern any system where three conditions are present: 1) there is a population of individuals with different characteristics, 2) the characteristics of one generation are passed on to the next, and 3) the fittest variants propagate more successfully.

These three conditions are all present in the development of AI systems. Consider the algorithms that recommend posts on social media. In the early days of social media, posts might’ve been shown chronologically. But companies soon experimented with new kinds of algorithms that could capture user attention and keep people scrolling. Each new generation improves upon the “fitness” of previous algorithms, causing addictiveness to rise over time.
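A toy simulation can show how Lewontin’s three conditions alone push a trait upward over generations. This is a minimal sketch, not a model of any real recommender system: each hypothetical “algorithm” is reduced to a single engagement score, and the population size, mutation rate, and selection rule are all assumptions.

```python
import random

# Toy model of Lewontin's three conditions applied to recommendation
# algorithms. Each "algorithm" is reduced to one hypothetical trait,
# engagement, standing in for how well it holds user attention.
# All numbers are illustrative assumptions, not measurements.


def next_generation(population: list[float], rng: random.Random,
                    mutation: float = 0.05) -> list[float]:
    # 3) Differential fitness: higher-engagement variants are more likely
    #    to be kept and built upon (selection weighted by the trait itself).
    parents = rng.choices(population, weights=population, k=len(population))
    # 2) Heredity with variation: each offspring copies its parent's trait
    #    plus a small random tweak, clamped to stay positive.
    return [max(0.01, p + rng.gauss(0.0, mutation)) for p in parents]


def simulate(generations: int = 50, size: int = 200, seed: int = 0) -> list[float]:
    """Return the population's mean engagement at each generation."""
    rng = random.Random(seed)
    # 1) Variation: start with a population of algorithms with differing traits.
    population = [rng.uniform(0.1, 1.0) for _ in range(size)]
    means = [sum(population) / size]
    for _ in range(generations):
        population = next_generation(population, rng)
        means.append(sum(population) / size)
    return means


if __name__ == "__main__":
    history = simulate()
    print(f"mean engagement: start {history[0]:.2f}, end {history[-1]:.2f}")
```

No agent in the simulation aims for higher engagement; the rise comes entirely from variation, inheritance, and selection weighted by fitness.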

Natural selection will tend to promote AIs that disempower human beings. For example, we currently have chatbots that can help us solve problems. But AI developers are working to give these chatbots the ability to access the internet and online banking, and even control the actions of physical robots. While society would be better off if AIs made human workers more productive, competitive pressure pushes towards AI systems that automate human labor. Self-preservation and power-seeking behaviors would also give AIs an evolutionary advantage, even to the detriment of humanity.

For more discussion of this topic, check out the paper and TIME Magazine article by CAIS director Dan Hendrycks.

When Will AI Reach Human Level?

AIs currently outperform humans in complex tasks like playing chess and taking the bar exam. Humans still hold the advantage in many domains, but OpenAI recently said “it’s conceivable that within the next ten years, AI systems will exceed expert skill level in most domains.”

AI pioneer Geoffrey Hinton made a similar prediction, saying, “The idea that this stuff could actually get smarter than people — a few people believed that. But most people thought it was way off. And I thought it was way off. I thought it was 30 to 50 years or even longer away. Obviously, I no longer think that.”

What motivates these beliefs? Beyond the impressive performance of recent AI systems, there are a variety of key considerations. A new report from Epoch AI digs into the arguments.

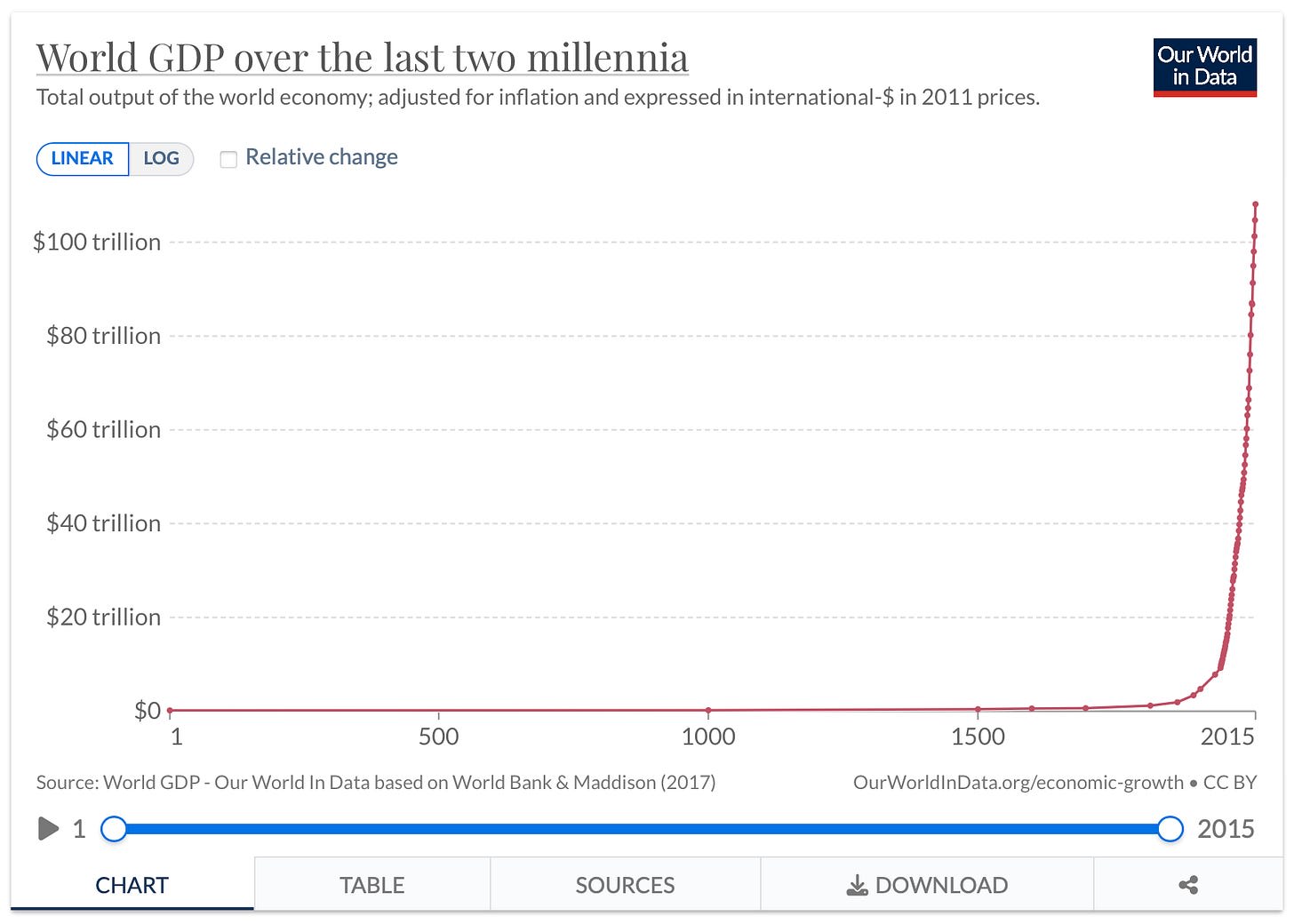

Technological progress has rapidly accelerated before. In some areas, it’s a safe bet that tomorrow will resemble today. The sun will rise, rain will fall, and many things will stay the same. But technological development is probably not one of them.

For the first 100,000 years that Homo sapiens walked the earth, there was very little technological progress. People fashioned tools from stone and wood, but typically did not live in permanent settlements, and therefore did not accumulate the kinds of wealth and physical capital that we would observe in the archaeological record.

Agriculture permanently changed the pace of technological progress. Farmers could produce more food than they individually consumed, creating a surplus that could feed people working other occupations. Technologies developed during this time include iron tools, pottery, systems of writing, and elaborate monuments like pyramids and temples.

These technologies accumulated fairly slowly from about 10,000 B.C. until around two hundred years ago, when the Industrial Revolution launched us into the modern paradigm of economic growth. Electricity, running water, cars, planes, modern medicine, and more were invented in only a few generations, following tens of thousands of years of relative stagnation.

Is this the end of history? Have we reached a stable pace of technological progress? Economist Robin Hanson argues against this notion. One possible source of accelerating technological change could be intelligent machines, which are deliberately designed rather than evolved by random chance.

Technological progress can occasionally accelerate more quickly than was previously anticipated.

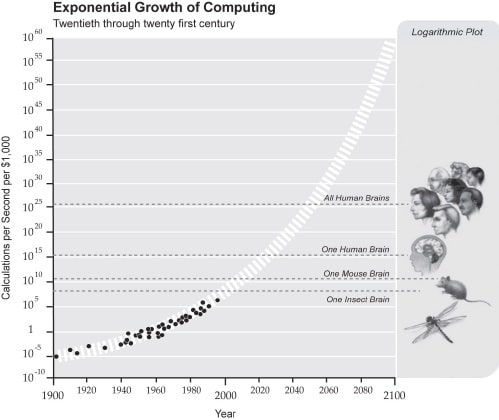

Building AI models as big as the human brain. Modern AI systems are built using artificial neural networks, which are explicitly inspired by the neural networks of the human brain. This allows us to compare the size of our AI models with the size of the human brain.

For example, the most advanced AI models have hundreds of billions of parameters, referring to the connections between artificial neurons. The human brain has roughly 100 trillion synapses, or about 1000 times more connections than our largest AI systems.

Similarly, you can consider the number of computational operations performed by biological and artificial neural networks. The human brain uses roughly 10^15 operations per second, or 10^24 operations over the first 30 years of our lives. This is strikingly close to the amount of computation used to train our largest neural networks today. Google’s largest language model, PaLM, also used 10^24 operations during training.
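The arithmetic behind these comparisons is easy to check. The sketch below takes the brain figures from the text (about 100 trillion synapses and roughly 10^15 operations per second) and assumes roughly 10^11 parameters, i.e. hundreds of billions, for the largest models; that parameter count is an order-of-magnitude assumption consistent with the 1000-fold gap described above.

```python
import math

# Back-of-envelope checks for the brain/AI comparisons.
# From the text: ~1e14 synapses (100 trillion) and ~1e15 operations/second.
# ASSUMPTION: ~1e11 parameters for the largest models (order of magnitude only).
BRAIN_SYNAPSES = 1e14
LARGEST_MODEL_PARAMS = 1e11
BRAIN_OPS_PER_SECOND = 1e15

SECONDS_PER_YEAR = 365.25 * 24 * 60 * 60  # about 3.16e7 seconds


def lifetime_ops(years: float, ops_per_second: float = BRAIN_OPS_PER_SECOND) -> float:
    """Total operations performed over the given number of years."""
    return ops_per_second * years * SECONDS_PER_YEAR


if __name__ == "__main__":
    ratio = BRAIN_SYNAPSES / LARGEST_MODEL_PARAMS
    print(f"synapses per model parameter: ~{ratio:.0f}")  # → ~1000
    total = lifetime_ops(30)
    # → 9.47e+23, i.e. on the order of 10^24
    print(f"operations in 30 years: {total:.2e} (order 10^{round(math.log10(total))})")
```

Thirty years is a little under 10^9 seconds, which is why 10^15 operations per second lands at roughly 10^24 operations in total.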

Both of these comparisons show that AI models are quickly approaching the size and computational power of the human brain. Crossing this threshold wouldn’t guarantee any new capabilities, but it is striking that AIs can now perform many of the creative cognitive tasks once exclusively performed by humans, and it raises questions about the capabilities of future AI models.

Back in 2005, futurist Ray Kurzweil (who also signed the CAIS AI extinction statement) predicted that AI systems would exceed the computational power of a single human brain during the 2020s.

AI accelerating AI progress. Another reason to suspect that AI progress could soon hit an inflection point is that AI systems are now contributing to the development of more powerful AIs. CAIS has collected dozens of examples of this phenomenon, but here we’ll explain just a few.

GitHub Copilot is a language model that can write computer code. When a programmer is writing code, GitHub Copilot offers autocomplete suggestions for how to write functions and fix errors. One study found that programmers completed tasks 55% faster when using GitHub Copilot. This reduces the amount of time required to run experiments with new and improved AI systems.

AIs are also being used to generate training data. A Google team trained a language model to answer general questions that required multi-step reasoning. Whenever the model was confident in its answer, they trained the model to mimic that answer in the future. By training on its own outputs, the model improved its ability to answer questions correctly.
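The newsletter does not say how the Google team measured confidence. One common approach, assumed here, is to sample several answers to the same question and treat their level of agreement as confidence (a majority vote, in the style of self-consistency). The minimal sketch below shows only that filtering step; the sampled answers and the 0.7 threshold are hypothetical, and the fine-tuning itself is left out.

```python
from collections import Counter

# ASSUMPTION: confidence = fraction of sampled answers that agree with the
# majority answer. Threshold and sample data are hypothetical.


def majority_with_confidence(samples: list[str]) -> tuple[str, float]:
    """Return the most common answer and the fraction of samples agreeing with it."""
    answer, count = Counter(samples).most_common(1)[0]
    return answer, count / len(samples)


def build_self_training_set(sampled: dict[str, list[str]],
                            threshold: float = 0.7) -> list[tuple[str, str]]:
    """Keep (question, answer) pairs only where the model was confident enough.

    The kept pairs would then serve as fine-tuning targets, so the model
    learns to mimic its own high-confidence answers.
    """
    dataset = []
    for question, samples in sampled.items():
        answer, confidence = majority_with_confidence(samples)
        if confidence >= threshold:
            dataset.append((question, answer))
    return dataset


if __name__ == "__main__":
    # Hypothetical answers sampled from a model for two questions.
    sampled = {
        "q1": ["12", "12", "12", "12", "15"],  # 80% agreement: kept
        "q2": ["7", "9", "7", "3", "9"],       # 40% agreement: dropped
    }
    print(build_self_training_set(sampled))  # → [('q1', '12')]
```

Filtering on agreement is what keeps the model from reinforcing answers it was unsure about; only the confident subset feeds back into training.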

Philosopher David Chalmers argues that if we build a human-level AI, and that AI can build an even more capable AI, then it could self-improve and rapidly surpass human capabilities.


Links

- Hackers broadcast an AI-generated video of Vladimir Putin declaring full mobilization against Ukraine on multiple TV stations in border regions of Ukraine and Russia yesterday.

- AI could enable terrorism, says a British national security expert.

- The White House will attempt to engage China on nuclear arms control and AI regulation.

- Claims about China’s AI prowess may be exaggerated.

- A Chinese company is selling an AI system that identifies anybody holding a banner and sends a picture of their face to the police.

- A professor at the Chinese Academy of Sciences explains why he signed the statement on AI extinction risks.

- AI labs should have an internal team of independent auditors that can report safety violations directly to the board, argues a new paper.

- Copywriters and social media marketers are among the first to lose their jobs to ChatGPT.

- Sam Altman discusses OpenAI’s plans after GPT-4.

- OpenAI is offering grants for using AI to improve cyberdefense and has provided more public information about its own cybersecurity. Meanwhile, hackers stole the password to the Twitter account of OpenAI’s Chief Technology Officer.

See also: CAIS website, CAIS Twitter, a technical safety research newsletter


blueberry @ 2023-06-07T09:43 (+3)

> Natural selection will tend to promote AIs that disempower human beings. For example, we currently have chatbots that can help us solve problems. But AI developers are working to give these chatbots the ability to access the internet and online banking, and even control the actions of physical robots. While society would be better off if AIs make human workers more productive, competitive pressure pushes towards AI systems that automate human labor. Self-preservation and power seeking behaviors would also give AIs an evolutionary advantage, even to the detriment of humanity.

In this vein, is there anything to the idea of focusing more on aligning incentives than on AI itself? Meaning, is it more useful to alter selection pressures (which behaviors are rewarded outside of training) vs. trying to induce "useful mutations" (alignment of specific AIs)? I have no idea how well this would work in practice, but it seems less fragile. One half-baked idea: heavily tax direct AI labor, but not indirect AI labor (i.e., make it cheaper to use AIs that help humans be more productive than to use AIs that do the work without human involvement).

aogara @ 2023-06-07T23:59 (+2)

Yep, I think those kinds of interventions make a lot of sense. The natural selection paper discusses several of them in sections 4.2 and 4.3. The Turing Trap also makes an interesting observation about US tax law: automating a worker with AI would typically reduce a company's tax burden. Bill de Blasio, Mark Cuban, and Bill Gates have all spoken in favor of a robot tax to fix that imbalance.