20 concrete projects for reducing existential risk

By Buhl @ 2023-06-21T15:54 (+132)

This is a linkpost to https://rethinkpriorities.org/longtermism-research-notes/concrete-projects-for-reducing-existential-risk

This is a blog post, not a research report, meaning it was produced quickly and is not to Rethink Priorities’ typical standards of substantiveness and careful checking for accuracy.

Super quick summary

This is a list of twenty projects that we (Rethink Priorities’ Existential Security Team) think might be especially promising projects for reducing existential risk, based on our very preliminary and high-level research to identify and compare projects.

You can see an overview of the full list here. Here are five ideas we (tentatively) think seem especially promising:

- Improving info/cybersec at top AI labs

- AI lab coordination

- Field building for AI policy

- Facilitating people’s transition from AI capabilities research to AI safety research

- Finding market opportunities for biodefence-relevant technologies

Introduction

What is this list?

This is a list of projects that we (the Existential Security Team at Rethink Priorities) think are plausible candidates for being top projects for substantially reducing existential risk.

The list was generated based on a wide search (resulting in an initial list of around 300 ideas, most of which we did not come up with ourselves[1]) and a shallow, high-level prioritization process (spending between a few minutes and an hour per idea). The process took about 100 total hours of work, spread across three researchers. More details on our research process can be found in the appendix. Note that some of the ideas we considered most promising were excluded from this list due to being confidential, sensitive or particularly high-risk.

We’re planning to prioritize projects on this list (as well as other non-public ideas) for further research, as candidates for projects we might eventually incubate. We’re planning to focus exclusively on projects aiming to reduce AI existential risk in 2023 but have included project ideas in other cause areas on this list as we still think those ideas are promising and would be excited about others working on them. More on our team’s incubation program here[2].

We’d be potentially excited about others researching, pursuing and supporting the projects on this list, although we don't think this is a be-all-end-all list of promising existential-risk-reducing projects and there are important limitations to this list (see “Key limitations of this list”).

Why are we sharing this list and who is it for?

By sharing this list, we’re hoping to:

- Give a sense of what kinds of projects we’re considering incubating and be transparent about our research process and results.

- Provide inspiration for projects others could consider working on.

- Contribute to community discussion about existential security entrepreneurship – we’re excited to receive feedback on the list, additional project suggestions, and information about the project areas we highlight (for example, existing projects we may have missed, top ideas not on this list, or reasons that some of our ideas may be worse than we think).

You might be interested in looking at this list if you’re:

- Considering being a founder or early employee of a new project. This list can give you some inspiration for potential project areas to look into. If you’re interested in being a (co-)founder or early employee for one of the projects on this list, feel free to reach out to Marie Buhl at marie@rethinkpriorities.org so we can potentially provide you with additional resources or contacts when we have them.

- Note that our plan for 2023 is to zoom in on just a few particularly promising projects targeting AI existential risk. This means that we’ll have limited bandwidth to provide ad hoc feedback and support for projects that aren’t our main focus, and that we might not be able to respond to everyone.

- Considering funding us. We’re currently seeking funding to maintain and expand our team. This list gives some sense of the kinds of projects we would use our resources to research, support or bring about. However, note that we’re not making a commitment to working on these specific projects, as (a) we might work on promising but confidential projects that are not included in this list, and (b) we expect our priorities to change over time as we learn more. If you’re interested in funding us, please reach out to Ben Snodin at ben@rethinkpriorities.org.

- Considering funding projects that aim to reduce existential risk in general. This list can give you some inspiration for project types you could consider funding, although we’d like to stress that the value of the projects on this list is for the most part heavily dependent on their execution and hence their founding teams.

- Interested in longtermist prioritization research, entrepreneurship and/or our team. Although we don’t provide extensive reasoning for why these projects made it into our list rather than others, this list (and the appendix) can still give you some insight into our research process and how we think about longtermist entrepreneurship. We’re tentatively planning to open a hiring round later in the year; if you’re excited about doing this kind of research or working on getting projects like this off the ground, we’d love for you to express interest in joining our team or apply in a potential hiring round.

Key limitations of this list

- The list is not comprehensive: While we considered a broad range of ideas (~300 in total), we expect that there are still promising project ideas that weren’t on our initial list. Note though that we also excluded some ideas from this list due to them being confidential, sensitive or particularly high-risk.

- Our prioritization process was shallow: We spent a maximum of ~one hour per project and relied primarily on our researchers’ quick judgments. We expect that we could easily change our mind about a given project with further investigation – very roughly, we estimate a ~40% chance that we would drop a given idea after 5-10 hours of further investigation (which approximately corresponds to the rate in our last prioritization round in Q3/Q4 of 2022). We also expect that some of the projects we left out of this list were in fact much more promising than we gave them credit for.

- Relatedly, our understanding of each project area is still shallow. We provide brief descriptions for each project, including pointers to some of the existing work that we’re aware of. However, we haven’t spent much time on each idea and we’re not subject-area experts. Based on our previous experiences with this kind of work, we expect that there’ll frequently be ways in which our descriptions miss important details, contain misleading framings, or overlook existing relevant work. It’s plausible that these mistakes could make an idea actually not worth pursuing or only worth pursuing in a different way than we suggest.

- Project ideas were evaluated in the abstract, but high-quality execution and coordination are extremely important. Our excitement about any project on this list is highly contingent on how well the project is executed. We think that a great founding team with relevant domain knowledge, connections, and expertise will likely be crucial and that said founding team should spend considerable time ironing out the details of how to implement a given project (e.g., via further research and pilots, learning about other efforts, and coordinating).

- We evaluated projects mainly by focusing on x-risk, with a significant focus on AI in particular: Our team primarily operates under a worldview that prioritizes reducing existential risk; and in practice, given the average views of our researchers, we furthermore operate on an assumption that AI risk is a large majority of total existential risk. As a result, we’ve ended up with a list that’s heavily skewed towards AI-related projects. If you have a different worldview and/or think differently about the relative risk of AI, you might prioritize very different projects.

Given these limitations, we recommend using this list only as a potential starting point for identifying projects to consider, and doing further research on any project before deciding whether to jump into potentially founding something.

The project list at a glance

Five ideas we (tentatively) think seem especially promising

- Improving info/cybersec at top AI labs

- AI lab coordination

- Field building for AI policy

- Facilitating people’s transition from AI capabilities research to AI safety research

- Finding market opportunities for biodefence-relevant technologies

Other ideas we’re excited about

- Developing better technology for monitoring and evaluation of AI systems

- Ambitious AI incidents database

- Technical support teams for alignment researchers

- AI alignment prizes

- Alignment researcher talent search

- Alignment researcher training programs

- Field building for AI governance research

- Crisis planning and response unit

- Monitoring GCBR-relevant capabilities

- Public advocacy for indoor air quality

- Biolab safety watchdog group

- Advocacy to stop viral discovery projects

- Retrospective grant evaluations of longtermist projects

- EA red teaming project

- Independent researcher infrastructure

Five ideas we (tentatively) think seem especially promising

Improving info/cybersec at top AI labs

Info- and cybersecurity at AI labs could play a key role in reducing x-risk by preventing bad actors from accessing powerful models via model theft, preventing the spread of infohazardous information, and reducing the chance of future rogue agentic AI systems potentially independently replicating themselves outside of labs. However, the existing market may not be able to meet demand because there’s a short supply of top talent, and better security may require professionals with good internal models of x-risk.

Based on the conversations we’ve had so far, we tentatively think that the best approach is via activities that aim to direct existing top info- and cybersecurity talent to top AI labs. We’re aware of some existing efforts in this space (and naturally labs themselves have an interest in attracting top talent in these fields), but we think there’s room for more. There are also various existing upskilling programs, for example this book club.

We’re also somewhat excited about info- and cybersecurity projects that target other x-risk-relevant organizations (e.g., DNA synthesis companies, major funders, advocacy orgs).

AI lab coordination

AI labs face perverse incentives in many ways, with competitive pressures making it difficult to slow down development to ensure that models are safe before they’re deployed. Coordination among AI labs – for example about scaling speed or sharing safety techniques – could reduce this competitive pressure. We imagine that progress could be made by specifying concrete standards and/or coordination mechanisms that might help reduce existential risk from AI, and convening labs to discuss and voluntarily adopt those. There are many examples of similar self-governance in other industries, including some cases where self-governance has been an effective precursor to regulation.

Several research organizations (for example the Centre for Governance of AI and Rethink Priorities’ AI Governance and Strategy department) are already thinking about what kinds of standards and policies labs might want to adopt. For this reason, we tentatively think the most likely gap is in the area of finalizing, convening, and getting buy-in for those proposals.

We think this is a relatively sensitive project that needs to be executed carefully, and so would likely only encourage a small number of well-placed people to pursue it, especially as additional actors could easily make coordination harder rather than easier. If you’re interested in this project, we suggest coordinating with other actors rather than acting unilaterally.

While this is more of a broad area than a concrete project, we think that well-scoped efforts to develop specific technologies could be highly impactful, if the project founders take care to identify the most relevant technologies in collaboration with AI governance experts.

Field building for AI policy

We think government roles could be highly impactful for implementing key AI policy asks, especially as windows of opportunity for such policy asks are increasingly opening. We also have the impression that the AI safety policy community and talent pipeline is less well-developed than AI safety research (technical and governance), and so we think field building in this area could be especially valuable. We’re especially (but not exclusively) excited about projects targeting AI policy in the US.

Most of the existing programs we’re aware of target early-career people who are relatively late in the “engagement funnel”, including the Horizon Fellowship, Training for Good’s EU tech policy fellowship and others. We think it seems likely that there’s substantial room for more projects. Some areas we’re tentatively excited about include programs that are (a) aimed at people earlier in the funnel (e.g., undergraduate mentorship), (b) aimed at Republicans/conservatives (at various career stages), or (c) separate from the effective altruism and longtermism brands and communities.

We think there are risks that need to be thoughtfully managed with this project. For example, poor execution could result in the spread of harmful ideas or unduly damaging the reputation of the AI safety community. We think having the right founding team is unusually important and would likely only encourage a small number of well-placed people to pursue this project.

Facilitating people’s transition from AI capabilities research to AI safety research

Increasing the ratio of AI safety to AI capabilities researchers (which is currently plausibly as skewed as 1:300[3]) seems like a core way to reduce existential risk from AI. Helping capabilities researchers switch to AI safety could be especially effective since their experience working on frontier models would allow uniquely useful insights in alignment research, and their existing skills would likely transfer well and shorten any training time needed.

While there are many existing projects providing career advice and upskilling programs for people who want to enter AI safety, we’re not currently aware of transition programs that specifically target people who already have a strong frontier ML background. We think this could be a valuable gap to fill since this group likely needs to be approached differently (e.g., ML expertise should be assumed) and will have different barriers to switching into AI safety compared to, say, recent graduates. The project would need to be better scoped, but some directions we imagine it taking include identifying and setting up shovel-ready projects that can absorb additional talent, offering high salaries to top ML talent transitioning to safety research, and providing information, support and career advice for people with existing expertise.

We think this is a relatively challenging and potentially sensitive project. In order to prevent inadvertently causing harm, we think the founding team would likely need to have a deep understanding of alignment research, including the blurry distinction between alignment and capabilities, as well as the relationship between the capabilities and safety communities.

Finding market opportunities for biodefence-relevant technologies

There are biodefence-relevant technologies that would benefit from additional investment and/or steady demand. This would allow for costs to fall over time, or at least for firms to stay in business and ensure production capacity during a pandemic. However, often no current market segment has been identified or developed. A project could help identify market segments (e.g. immuno-suppressed people) and create a market for technologies such as far-UVC systems or personal protective equipment. This could lead to additional investment that would allow them to become more technologically mature, low-cost, or better positioned to be used to reduce existential risk, in addition to benefiting people in the relevant market segment.

One thing to note is that finding market opportunities only makes sense when a technology is relatively mature, i.e., basically ready for some kind of deployment. It would probably be premature to look for potential buyers for many biodefence-relevant technologies that have been identified.

One direction we’re excited about is finding market opportunities for far-UVC systems, which effectively disrupt indoor airborne pathogen transmission. Most firms in this space are small, with few customers and volatile demand. However, there could be useful applications in factory farm settings and other market segments. Note that we think the next step is unlikely to be something like setting up a far-UVC startup, but rather market research and identifying industry pilots that could be run.

Other ideas we’re potentially excited about

Developing better technology for monitoring and evaluation of AI systems

We’re tentatively excited about a set of AI governance proposals centered around monitoring who is training and deploying large AI models, as well as evaluating AI models before (and during) deployment. But many proposals in this vein either cannot be implemented effectively with current technology or could benefit from much better technology, both hardware and software.

For example, monitoring who is training large AI systems might require the development of chips with certain tracking features or ways of identifying when large numbers of chips are being used in concert. Another example is that effective third-party auditing of AI models might require the development of new “structured access” tools that enable researchers to access the information necessary for evaluation while minimizing the risk of model proliferation[4].

We think that developing products along these lines could help ensure better monitoring and evaluation of AI systems, as well as potentially help bring about government regulation or corporate self-governance that relies on such products. Even if market forces would eventually fill the gap, speeding up the development of these products could be important.

Ambitious AI incidents database

Increasing awareness about – and understanding of – the risks posed by advanced AI systems seems important for ensuring a strong response to those risks. One way to do so is to document “warning shots” of AI systems causing damage (in similar ways that existential-risk-posing systems might cause damage). To this end, it would be useful to have a comprehensive incidents database that tracked all AI accidents with catastrophic potential and presented them just as compellingly as projects like Our World in Data. While there are probably relatively few highly x-risk-relevant incidents today, it would be good to start developing the database and awareness about it as soon as possible.

The project might also yield useful analytical insights, such as companies or sectors that have an unusually high prevalence of incidents, and it could be tied to other initiatives to expose flaws in AI models, like red teaming and auditing.

We’re aware of at least one existing AI Incident Database, but we would be interested in a version that’s more comprehensive, x-risk-focused, and compelling to users, and that actively reaches out to people for whom its findings could be relevant.

However, we know of several other organisations that have incident reporting projects in the works (for example the OECD’s AI Incidents Monitor), so it would be important to coordinate with those actors and make sure there’s a relevant gap to be filled before launching a project.

Technical support teams for alignment researchers

In order to develop techniques for aligning advanced AI systems, we need not only theoretical high-level thinking to identify promising approaches, but also technical ML expertise to implement and run experiments to test said approaches. One way to accelerate alignment research could therefore be to start a team of ML engineers that can be “leased out” to support the work of more theoretical researchers.

We’re unsure to what extent engineering is a bottleneck for theoretical alignment researchers, but we’ve had some conversations that suggest it might be. We would tentatively guess that it tends to be a more substantial bottleneck for smaller, newer teams and independent researchers. If true, this project could have the additional effect of boosting new, independent alignment agendas and thereby diversifying the overall field.

This project could potentially pair well with the idea of facilitating people’s transition from capabilities to alignment research, although we haven’t thought about that in much detail.

FAR AI is doing work in this area via their FAR Labs program. In addition to supporting this project, we think it could be valuable to start new projects with different approaches (for example, a more consultancy-style approach or just similar programs run by people with different research tastes).

It will be important to select research partners carefully to avoid contributing to work that unnecessarily speeds up AI capabilities (which may be challenging, given the blurry distinction between alignment and capabilities research). For this reason, the founding team of this project will probably need a thorough theoretical understanding of alignment in addition to a high level of technical expertise.

AI alignment prizes

There are many people who could be well-placed to contribute to solving some of the hardest technical problems in AI alignment but aren’t currently focusing on those problems; for example a subset of computer science academics. One way to attract interest from these groups could be to make a highly-advertised AI alignment prize contest, which would award hundreds of thousands or even millions of dollars to entrants who solve difficult, important open (sub-)questions in AI alignment. See some of our previous work on this idea here (although aspects of that work are now outdated).

For some types of questions (e.g., in mechanistic interpretability), it might be necessary to first develop new tools to offer sufficient model access to prize participants to do useful research, without contributing to model proliferation (see “Developing better technology for monitoring and evaluation of AI systems”).

There are already various past or present AI alignment prizes, a collection of which can be found here. The prizes we’re aware of are on the smaller end (tens or hundreds of thousands) and haven’t been widely advertised outside of the AI safety community, so we think there’s room for a prize contest to fill this gap. However, this also comes with higher downside risk, and it will be important to be aware of and try to mitigate the risk of unduly damaging the reputation of the AI safety research community and “poisoning the well” for future projects in this space.[5][6]

Alignment researcher talent search

Technical alignment research will likely be an essential component of preventing an existential catastrophe from AI, and we think there’s plausibly a lot of untapped talent that could contribute to the field. Programs like fellowships, hackathons and retreats have previously helped increase the size of the field, and we’d be excited for more such programs, in addition to trying out new approaches like headhunting. Projects in this area could take the form of improving or scaling up existing talent search programs, most of which are (as far as we’re aware) in the US or the UK, or starting new programs in places where there are currently few or no existing programs.

One difficulty is that it’s not clear that existing “end-stage” alignment projects can grow quickly enough to absorb an additional inflow of people. But we think this project could plausibly still be high-impact because (a) alignment research might grow significantly as a field in the coming years as a result of increased public attention, (b) it might be possible to identify people who can relatively quickly do research independently, and (c) it could still be valuable to improve the talent pool that alignment research organizations recruit from, even if the total number of researchers stays the same.

Note that this is a broad area that we think is particularly hard to execute well (and, with poor execution, there’s a risk of “poisoning the well”, especially if a project is one of the first in a given area).[7]

Alignment researcher training programs

Related to the area of alignment researcher talent search, an important step for tapping untapped potential could be to provide a high-quality training program that enables people to contribute to alignment research more quickly.

This is a relatively crowded space, with several existing upskilling programs from the top to the bottom of the “funnel”, including MLAB, SERI MATS, Arena, AI safety hub labs, AI safety fundamentals and others. However, we think there could be value in both improving existing programs and starting new programs to increase the number of different approaches being tried. Very tentatively, we think some areas where additional programs might be especially valuable include (a) programs that are more engineering-focused, and (b) programs that aim to make more efficient use of the existing resources in the alignment community (for example, setting up ways for mid-funnel people to mentor early-funnel people). However, we strongly recommend coordinating with existing actors to prevent duplication of effort.[8]

Field building for AI governance research

Governance of AI development and deployment seems key to avoiding existential risk from AI, and there is increasing demand for such governance. But the field is still relatively nascent and small. While there are a number of existing field building efforts, the talent pipeline seems to be less well-developed than for technical AI safety research, and the (increasing) number of qualified applicants that existing programs receive suggests that there’s room for more projects.

As a preliminary hypothesis, we think it might be especially valuable to run earlier-funnel programs that bridge the gap between introductory programs like AI safety fundamentals and end-stage programs like fellowships at leading research orgs. This could look like a program analogous to SERI MATS (but with a focus on governance rather than technical alignment research). Some of this space is already covered by the GovAI summer/winter fellowships and programs like the ERA and CHERI fellowships.

Crisis planning and response unit

Periods of crisis (sometimes called “hinges” or “critical junctions”) are a common feature in the early stages of many x-risk scenarios. These moments present large opportunities to change the established order and help avoid extremely negative outcomes – but it's often difficult to act effectively in a crisis or even understand that one is already unfolding. We think a ‘crisis planning and response unit’ specifically focused on global catastrophic biorisk or extreme risks from AI could increase civilisational resilience and reduce existential risk.

This unit could combine several elements (many of which have been proposed already; see footnote 9): early warning forecasting; scenario analysis and crisis response planning; hardening production and distribution of critical goods; and maintaining surge capacity for crisis response.

We know of one early-stage project underway, Dendritic (led by Alex Demarsh and Sebastian Lodemann), which aims to be a crisis planning and response unit focused on global catastrophic biological risks. We’d also be excited about a parallel effort focusing on AI risks, though it seems likely that the design and implementation of crisis planning and response across different sources of x-risks could look radically different.[9]

Monitoring GCBR-relevant capabilities

Experts[10] think the risk from engineered pandemics is increasing over time with the development and diffusion of knowledge and capabilities relevant to developing pandemic-class agents and bioweapons that could produce global catastrophic bio-risks (GCBRs). Also, various actors (powerful states, rogue states, labs, terrorist groups, and individual actors) have different capabilities that change over time. While certain top-line information is tracked (e.g. cost of DNA synthesis), this is not done systematically, continuously, or with a focus on existential risk.

A team or organization could be responsible for developing and compiling estimates of GCBR-relevant capabilities to inform funders and other organizations working to reduce GCBRs, as well as to make a more compelling case about the risk from engineered pathogens and biotechnology. This team could be analogous to Epoch’s monitoring of AI capabilities.

We think there are some risks associated with this project that need to be thoughtfully managed. For example, it could be bad to spread to some audiences the ‘meme’ that bioweapons are increasingly cheap and accessible, or to go into detail about what specific capabilities are needed. Approaches similar to the ‘base rates’ approach outlined by Open Philanthropy Bio are likely to mitigate these risks.

Public advocacy for indoor air quality to reduce pandemic spread

Airborne pathogens are especially dangerous for catastrophic pandemics, and they are significantly more likely to spread indoors than outdoors.[11] Reducing indoor pathogen transmission could block or significantly slow pandemic spread. There are known effective interventions to improve air quality (ventilation, filtration, and germicidal ultraviolet light), but they need additional support to become deployed en masse globally.

We’re aware of some work already underway to support indoor air quality by various organizations, including SecureBio, Johns Hopkins Center for Health Security, Convergent Research, and 1DaySooner. This work is aimed at changing regulations, promoting R&D into far-UVC, and more firmly establishing the safety and efficacy of these technologies in real-world settings.

We think indoor air quality could have mainstream appeal,[12] and there could be more public advocacy aimed at broadly increasing its profile as a public health issue of concern – similar to other issues like cancer, HIV/AIDS, or, historically, water sanitation. Broader grassroots support could create more political pressure to support policies that promote indoor air quality and ease the adoption of indoor air quality interventions in public settings.

Biolab safety watchdog group

Biolabs can be a potential source of GCBRs, e.g., via accidental release, risky research, or clandestine bioweapons programs. However, it is often difficult to get visibility into a lab’s practices, such as whether they’re reporting significant accidents, upholding safety and security standards, or engaging in dangerous research.

There could be a watchdog group or network, something like a Bellingcat for biosecurity, that uses open-source intelligence and/or investigative journalism practices to uncover labs involved in significant biorisk-relevant activities. This group could bring increased attention to lab-related risks, deter future risky activities, and help authorities to manage irresponsible actors better.

We think this is an especially sensitive project that needs to be executed carefully and so would likely only encourage a small number of well-placed people to pursue it. Depending on the focus of this group, they may face risks of retaliation from powerful actors or challenges in navigating a sometimes highly charged and polarized debate around lab safety and risky research.

Advocacy to stop viral discovery projects

Viral discovery projects (or ‘virus hunting’) are research programs that involve collecting unknown viruses from the wild and producing vast amounts of genetic data about them. These programs plausibly introduce serious infohazards – for example, providing bad actors with ways to advance bioweapons programs – without producing any clear public health or biosecurity benefits.

Advocacy or lobbying to shut down or prevent viral discovery projects from getting started (or ensuring that their results can’t be disseminated widely) could reduce catastrophic biorisk. Kevin Esvelt and other known biosecurity experts have spoken publicly about the risks from viral discovery projects like DEEP VZN, but we aren’t aware of ongoing efforts to stop viral discovery projects more broadly.

We think this is an especially sensitive project that needs to be executed carefully, so we would likely only encourage a small number of well-placed people to pursue it. The project team would have to navigate a sometimes highly charged and polarized debate around risky research, particularly if the focus is on the United States.

Retrospective grant evaluations of longtermist projects

It can be difficult to accurately estimate the impact of projects aiming to reduce existential risk, but there’s reason to think this is easier to do retrospectively. A team could be formed that does evaluations of past grantmaking decisions in the longtermist space. These could help to understand grantmakers’ track record better, spread good grantmaking reasoning and decision-making practices, and, in turn, make grantmaking more effective.

Many grantmakers (including OpenPhil and FLI) do some amount of retrospective grant evaluations internally, but we think it could add value to have evaluations that are (a) independent and (b) have more external outputs (e.g., disseminating lessons about grantmaking best practices). There’s some existing work of this kind, for example by Nuno Sempere[13].

We’re uncertain how challenging it is to evaluate grants retrospectively compared to grantmaking itself, especially given potentially limited access to the information necessary for evaluating the grant. Given that the talent pool for grantmaking already seems small, we imagine that it might be hard to find people for whom retrospective grant evaluation is a higher-value option than direct grantmaking. However, we tentatively think that, with the right team, it could be worth running a pilot to get more information.[14]

EA red teaming project

Research produced by many effective altruist organizations is subject to relatively little of the formal scrutiny research outputs are normally subject to (e.g., peer review, the publication filter). This has benefits, but suggests that there might be low-hanging fruit in improving the quality of the outputs of EA orgs with increased scrutiny. This could take the form of a focused investigation of a few key research outputs, or of an ongoing project to evaluate, replicate and poke holes in novel research outputs shortly after publication. The project might also be valuable as a talent pipeline that can productively engage and upskill a relatively large number of more junior researchers. The hope would be to both improve the general quality of research outputs and to update key decision-makers on important beliefs.

We’re unsure how much value this would add on top of existing “ad hoc” red teaming, discussion on the EA Forum, LessWrong and elsewhere, and red teaming contests, but we think it’s plausible that a formal and high-quality project could add value. As with retrospective grant evaluations, we’re not sure whether the people who would be best placed to run this project might have a higher impact doing “direct” research.

Independent researcher infrastructure

Our impression is that an unusually large proportion of researchers in the effective altruism community work as independent grant recipients. These researchers don’t have easy access to the kind of network, mentorship and institutional support that’s standard for employed researchers; we think it’s plausibly a substantial bottleneck to their impact and the impact of the research community as a whole.

We can imagine a variety of projects that could help address those bottlenecks. One high-effort version could be to establish an institute that hires independent researchers as employees to reduce their operational burdens and provide a suite of support similar to that received by traditional employees (while maintaining the ability of independent researchers to set their own research agenda). Lighter-touch versions include peer mentoring schemes, mentor-mentee matching services, or help with logistical tasks like filing taxes or finding an office space.

We’re aware of several existing projects in this space, primarily focused on technical AI safety researchers, including AI Safety Support and the upcoming project Catalyze (which is currently seeking funding). Some of the space is also covered by existing fiscal sponsors, office spaces that house independent researchers and research organizations that provide light-touch fellowships (e.g., GovAI’s research scholar position).[15]

Appendix: Our research process

Here’s a slightly simplified breakdown of the research process we used to select which ideas to include in this list:

- We gathered ideas via internal brainstorming (~20%) and external idea solicitation (~80%).

- Three researchers evaluated the ideas on a weighted factor model, spending ~2 mins per project.

- We collected feedback from 1-2 people working in the relevant fields whose expertise we trust. They evaluated the top ideas and highlighted ones they think are either especially promising or unpromising.

- One researcher evaluated each of the top 30 ideas (using the same criteria as in the weighted factor model) for ~30 mins / project, and one other researcher evaluated their reasoning. For some projects, we also had another round of conversations with domain experts.

- One researcher put together a public selection of ideas we still thought were highly promising after the evaluation in the previous step, filtering out sensitive projects, as well as putting together a tentative top five (which was evaluated by other team members).
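As an illustration of the weighted factor model step, the sketch below shows one way such a model can be computed. The criteria names, weights, 1-5 scoring scale and example scores are all hypothetical placeholders, not the ones used in our process; the only detail taken from the process above is that several researchers score each idea and the scores are combined into a ranking.

```python
from statistics import mean

# Hypothetical criteria and weights (assumptions for illustration only).
# Scores run from 1 (worst) to 5 (best), so a cheap project gets a high "cost" score.
WEIGHTS = {"impact_potential": 0.5, "cost": 0.2, "downside_risk": 0.3}


def weighted_score(scores: dict[str, float]) -> float:
    """Combine one researcher's per-criterion scores into a single number."""
    return sum(WEIGHTS[name] * scores[name] for name in WEIGHTS)


def rank_ideas(ratings: dict[str, list[dict[str, float]]]) -> list[tuple[str, float]]:
    """Average the weighted scores across researchers, then sort best-first."""
    averaged = {
        idea: mean(weighted_score(s) for s in per_researcher)
        for idea, per_researcher in ratings.items()
    }
    return sorted(averaged.items(), key=lambda item: item[1], reverse=True)


# Made-up example: two ideas, each scored by two researchers.
example_ratings = {
    "idea_a": [
        {"impact_potential": 4, "cost": 3, "downside_risk": 4},
        {"impact_potential": 5, "cost": 2, "downside_risk": 3},
    ],
    "idea_b": [
        {"impact_potential": 2, "cost": 5, "downside_risk": 5},
        {"impact_potential": 3, "cost": 4, "downside_risk": 4},
    ],
}

ranking = rank_ideas(example_ratings)
```

Averaging across researchers before ranking is only one possible design choice; a real process might instead look at disagreement between raters or treat downside risk as a veto rather than a weighted term.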

Acknowledgements

This research is a project of Rethink Priorities. It was written mostly by Marie Davidsen Buhl, with about half the project descriptions written by Jam Kraprayoon.

Thanks to our colleagues Renan Araujo, Ben Snodin and Peter Wildeford for helpful feedback on the post and throughout the research process. Thanks to David Moss and Willem Sleegers for support with quantitative analysis.

Thanks to Michael Aird, Max Daniel, Adam Gleave, Greg Lewis, Joshua Monrad and others for reviewing a set of project ideas within their area of expertise. All views expressed in this post are our own and are not necessarily endorsed by any of the reviewers.

Thanks to Onni Aarne, Michael Aird, Sam Brown, Ales Fidr, Oliver Guest, Jeffrey Ladish, Josh Morrison, Jordan Pieters, Oliver Ritchie, Gavin Taylor, Bruce Tsai, Claire Zabel and many others for sharing project ideas with us.

Thanks to Rachel Norman for help with publishing this post online.

If you like our work, please consider subscribing to our newsletter. You can explore our completed public work here.

- ^

We asked a number of people to suggest ideas (including via a public call). Where the source of the idea is public, we’ve credited it in a footnote. For people who contributed ideas privately, we gave the option to have their ideas credited in public outputs; most people didn’t opt for this. If you think an idea of yours is on the list and you'd like to be credited, feel free to reach out.

- ^

More detailed explanation and justification of our team's strategy here.

- ^

- ^

The “structured access” paradigm was proposed by Toby Shevlane (2022).

- ^

This idea was suggested (among others) by Holden Karnofsky (2022).

- ^

For previous work by Rethink Priorities on the benefits and downsides of prizes, as well as design considerations, see Hird et al. (2022) and Wildeford (2022).

- ^

This idea was suggested (among others) by AW (2022).

- ^

This idea was suggested (among others) by AW (2022).

- ^

Previous related proposals include ALERT, a “pop up think tank” that would maintain a pool of reservist talent for future large-scale emergencies, an Early Warning Forecasting Centre (EWFC), which proposed using elite judgmental forecasters to systematically assess signals and provide early warning of crises, and Hardening Pharma, which aims to proactively increase the resilience of medical countermeasure production & distribution by implementing mitigations & developing extreme emergency plans for GCBRs.

- ^

Cf. Lewis (2020) and Esvelt (2022).

- ^

- ^

- ^

Cf. Sempere (2021) and Sempere (2022).

- ^

This idea was suggested (among others) by Pablo (2022).

- ^

This idea was suggested (among others) by Gavin Taylor (2022).

NunoSempere @ 2023-06-21T19:06 (+18)

I am very amenable to either of these. If someone is starting these, or if they are convinced that these could be super valuable, please do get in touch.

weeatquince @ 2023-08-18T13:07 (+8)

Also keen on this.

Specifically, I would be interested in someone carrying out an independent impact report for the APPG for Future Generations and could likely offer some funding for this.

Ulrik Horn @ 2023-06-22T10:39 (+11)

Excellent list! On biodefence-relevant tech: One market screaming (actually, literally) for a solution is parents of small children. They get infected at a very high rate and I suspect this is via transmission routes in pre-schools that we would also be concerned about in a pandemic. While it is uncertain how a business addressing this market will actually shake out, I think it is generally in the direction of something likely to be biodefence-relevant. As a currently sick parent of small children myself, I would be very happy to start such a business after my current project comes to an end. I think a reduction in sick days of only ~40% would be enough to make this business viable, and the current bar for hygiene is really low, so I think such reductions are feasible.

More generally, and in parallel to your proposed method of looking for markets that specific interventions could target, I would also consider looking for markets that roughly point in the direction of biodefence. The saying goes that it is more likely to have business success if focusing on a problem, rather than having a solution looking for a problem to solve. Another example from my personal history of markets roughly in the direction of biodefence is traveling abroad - many people have their long-planned and/or expensive holidays ruined due to stomach bugs, etc.

Jam Kraprayoon @ 2023-09-27T10:16 (+4)

I think it’s probably true that teams inside of major labs are better placed to work on AI lab coordination broadly, and this post was published before news of the frontier models forum came out. Still, I think there is room for coordination to promote AI safety outcomes between labs, e.g. something that brings together open-source actors. However, this project area is probably less tractable and neglected now than when we originally shared this idea.

Akash Kulgod @ 2023-06-22T21:38 (+9)

The biodefense market opportunities idea dovetails nicely with the recently announced market shaping accelerator by UChicago - https://marketshaping.uchicago.edu/challenge/

Saul Munn @ 2023-06-22T00:39 (+9)

The "Retrospective grant evaluations of longtermist projects" idea seems like something that would work really well in conjunction with an impact market, like Manifund. That — retroactive evaluations — must be done extremely well for impact markets to function.

Since this could potentially be a really difficult/expensive process, randomized conditional prediction markets could also help (full explanation here). Here's an example scheme I cooked up:

Subsidize prediction markets on all of the following:

- Conditional on Project A being retroactively evaluated by the Retroactive Evaluation Team (RET), how much impact will it have[1]?

- Conditional on Project B being retroactively evaluated by the RET, how much impact will it have?

- etc.

Then, randomly pick one project (say, Project G) to retroactively evaluate, and fund the retroactive evaluation of Project G.

For all the other projects' markets, refund all of the investors and, to quote DYNOMIGHT, "use the SWEET PREDICTIVE KNOWLEDGE ... for STAGGERING SCIENTIFIC PROGRESS and MAXIMAL STATUS ENHANCEMENT."
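A minimal sketch of the selection-and-refund step in the scheme above. The function name, the data layout (project name mapped to a list of investor stakes) and the example numbers are hypothetical; it does not model market pricing or subsidies, only the random choice of one project and the refunds for every other market.

```python
import random


def run_randomized_evaluation(markets, rng):
    """Pick one project to evaluate and refund every other market.

    `markets` maps project name -> list of (investor, stake) pairs.
    Returns the chosen project and the total refund owed to each investor.
    """
    chosen = rng.choice(sorted(markets))
    refunds = {}
    for project, stakes in markets.items():
        if project == chosen:
            continue  # this market stays open and resolves on the evaluation
        for investor, stake in stakes:
            refunds[investor] = refunds.get(investor, 0.0) + stake
    return chosen, refunds


# Made-up stakes for three projects.
markets = {
    "Project A": [("alice", 10.0), ("bob", 5.0)],
    "Project B": [("alice", 20.0)],
    "Project G": [("carol", 7.0)],
}

chosen, refunds = run_randomized_evaluation(markets, random.Random(0))
```

Passing in the random number generator keeps the draw reproducible, which matters if investors need to verify that the evaluated project really was picked at random.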

- ^

Obviously, the amount of impact would need to be metricized in some way. Again obviously, this is an incredibly difficult problem that I'm handwaving away.

The one idea that comes to mind is evaluating n projects and ranking their relative impact, where n is greater than 1 and less than the total number of projects. Then, change the questions to "Conditional on Project A/B/C/etc being retroactively evaluated, will it be ranked highest?" That avoids actually putting a number on it, but it comes with its own host of problems.

Austin @ 2023-06-22T04:17 (+3)

Yeah, we'd absolutely love to see (and fund!) retroactive evals of longtermist projects -- as Saul says, these are absolutely necessary for impact certs. For example, the ACX Minigrants impact certs round is going to need evals for distributing the $40k in retroactive funding. Scott is going to be the one to decide how the funding is divvied up, but I'd love to sponsor external evals as well.

weeatquince @ 2023-08-18T13:28 (+8)

1.

I really like this list. Lots of the ideas look very sensible.

I also really really value that you are doing prioritisation exercises across ideas and not just throwing out ideas that you feel sound nice without any evidence of background research (like FTX, and others, did). Great work!

– –

2.

Quick question about the research: Does the process consider cost-effectiveness as a key factor? For each of the ideas do you feel like you have a sense of why this thing has not happened already?

– –

3.

Some feedback on the idea here I know most about: policy field building (although admittedly from a UK not US perspective). I found the idea strong and was happy to see it on the list but I found the description of it unconvincing. I am not sure there is much point getting people to take jobs in government without giving them direction, strategic clarity, things to do or levers to pull to drive change. Policy success needs an ecosystem, some people in technocratic roles, some in government, some in external policy think tank style research, some in advocacy and lobby groups, etc. If this idea is only about directing people into government I am much less excited by it than a broader conception of field building that includes institution building and lobbying work.

Buhl @ 2023-09-27T10:10 (+4)

Thanks, appreciate your comment and the compliment!

On your questions:

2. The research process does consider cost-effectiveness as a key factor – e.g., the weighted factor model we used included both an “impact potential” and a “cost” item, so projects were favoured if they had high estimated impact potential and/or a low estimated cost. “Impact potential” here means “impact with really successful (~90th percentile) execution” – we’re focusing on the extreme rather than the average case because we expect most of our expected impact to come from tail outcomes (but have a separate item in the model to account for downside risk). The “cost” score was usually based on a rough proxy, but the “impact potential” score was basically just a guess – so it’s quite different from how CE (presumably) uses cost-effectiveness, in that we don’t make an explicit cost-effectiveness estimate and in that we don’t consult evidence from empirical studies (which typically don’t exist for the kinds of projects we consider).

Re: “For each of the ideas do you feel like you have a sense of why this thing has not happened already?” – we didn’t consider this explicitly in the process (though it somewhat indirectly featured as part of considering tractability and impact potential). I feel like I have a rough sense for each of the projects listed – and we wouldn’t include projects where we didn’t think it was plausible that the project would be feasible, that there’d be a good founder out there etc. – but I could easily be missing important reasons. Definitely an important question – would be curious to hear how CE takes it into account.

3. Appreciate the input! The idea here wouldn’t be to just shove people into government jobs, but also making sure that they have the right context, knowledge, skills and opportunities to have a positive impact once there. I agree that policy is an ecosystem and that people are needed in many kinds of roles. I think it could make sense for an individual project to focus just/primarily on one or a few types of role (analogously to how the Horizon Institute focuses primarily on technocratic staffer and executive branch roles + think tank roles), but am generally in favour of high-quality projects in multiple policy-related areas (including advocacy/lobbying and developing think tank pipelines).

JP Addison @ 2023-08-15T18:07 (+8)

This is a really inspiring list, thanks for posting! I'm curating.

Alexandra Bos @ 2023-06-22T18:13 (+7)

Thanks for publishing this! I added it to this list of impactful org/project ideas

rime @ 2023-06-22T00:56 (+7)

Super post!

In response to "Independent researcher infrastructure":

I honestly think the ideal is just to give basic income to the researchers that both 1) express an interest in having absolute freedom in their research directions, and 2) you have adequate trust for.

I don't think much of value gets done in the mode where people look to others to figure out what they should do. There are arguments, many of which are widely-known-but-not-taken-seriously, and I realise writing more about it here would take more time than I planned for.

Anyway, the basic income thing. People can do good research on 30k USD a year. If they don't think that's sufficient for continuing to work on alignment, then perhaps their motivations weren't on the right track in the first place. And that's a signal they probably weren't going to be able to target themselves precisely at what matters anyway. Doing good work on fuzzy problems requires actually caring.

Denkenberger @ 2023-06-23T03:30 (+8)

People can do good research on even less than 30k USD a year at CEEALAR (EA Hotel).

Mckiev @ 2023-06-22T11:15 (+3)

Well, such a low pay creates additional mental pressure to resist temptation to get 5-10x money in a normal job. I’d rather select people carefully, but then provide them with at least a ~middle class wage

rime @ 2023-06-22T19:51 (+3)

The problem is that if you select people cautiously, you miss out on hiring people significantly more competent than you. The people who are much higher competence will behave in ways you don't recognise as more competent. If you were able to tell what right things to do are, you would just do those things and be at their level. Innovation on the frontier is anti-inductive.

If good research is heavy-tailed & in a positive selection-regime, then cautiousness actively selects against features with the highest expected value.[1]

That said, "30k/year" was just an arbitrary example, not something I've calculated or thought deeply about. I think that sum works for a lot of people, but I wouldn't set it as a hard limit.

- ^

Based on data sampled from looking at stuff. :P Only supposed to demonstrate the conceptual point.

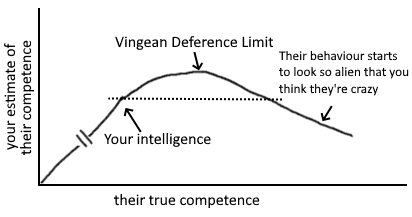

Your "deference limit" is the level of competence above your own at which you stop being able to tell the difference between competences above that point. For games with legible performance metrics like chess, you get a very high deference limit merely by looking at Elo ratings. In altruistic research, however...

Mckiev @ 2023-06-23T11:17 (+2)

I'm sorry I didn't express myself clearly. By "select people carefully", I meant selecting for correct motivations, that you have tried to filter for using the subsistence salary. I would prefer using some other selection mechanism (like references), and then provide a solid paycheck (like MIRI does).

It's certainly noble to give away everything beyond 30k like Singer and MacAskill do, but I think it should be a choice rather than a requirement.

Manuel Allgaier @ 2023-08-18T15:42 (+4)

This is helpful, thanks!

I notice you didn't mention fundraising for AI safety.

Recently, many have mentioned that the funding bar for AI safety projects has increased quite a bit (especially for projects not based in the Bay and not already well connected to funders) and response times from funders such as EA Funds LTFF can be very long (median 2 months afaik), which suggests we should look for additional funding sources such as new high net worth donors, governments, non-EA foundations etc.

Do you have any thoughts on that? How valuable does this seem to you compared to your ideas?

Jam Kraprayoon @ 2023-09-27T10:15 (+3)

Thanks for the question. At the time we were generating the initial list of ideas, it wasn’t clear that AI safety was funding-constrained rather than talent-constrained (or even idea-constrained). As you’ve pointed out, it seems more plausible now that finding additional funding sources could be valuable for a couple of reasons:

- Helps respond to the higher funding bar that you’ve mentioned

- Takes advantage of new entrants to AI-safety-related philanthropy, notably the mainstream foundations that have now become interested in the space.

I don’t have a strong view on whether additional funding should be used to start a new fund or if it is more efficient to direct it towards existing grantmakers. I’m pretty excited about new grantmakers like Manifund getting set up recently that are trying out new ways for grantmakers to be more responsive to potential grantees. I don't have a strong view about whether ideas around increasing funding for AI safety are more valuable than those listed above. I'd be pretty excited about the right person doing something around educating mainstream donors about AI safety opportunities.

Mckiev @ 2023-06-22T11:09 (+4)

Did anyone consider ELOing the longtermist projects for “Retrospective grant evaluations”? Seems like it could be relatively fast by crowdsourcing comparisons, and orthogonal to other types of estimates.
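A rough sketch of what that could look like, using the standard Elo update. The project names, the starting rating of 1500, the K-factor of 32 and the list of crowdsourced comparisons are all made-up assumptions; each comparison just records which of two projects a rater judged more impactful.

```python
def expected_score(rating_a: float, rating_b: float) -> float:
    """Standard Elo expectation that A is judged better than B."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400))


def update_elo(ratings: dict[str, float], winner: str, loser: str, k: float = 32.0) -> None:
    """Shift rating points from the loser to the winner of one comparison."""
    delta = k * (1.0 - expected_score(ratings[winner], ratings[loser]))
    ratings[winner] += delta
    ratings[loser] -= delta


# Hypothetical projects, all starting from the same rating.
ratings = {"project_a": 1500.0, "project_b": 1500.0, "project_c": 1500.0}

# Hypothetical crowdsourced judgments as (more impactful, less impactful) pairs.
comparisons = [
    ("project_a", "project_b"),
    ("project_a", "project_c"),
    ("project_b", "project_c"),
]

for winner, loser in comparisons:
    update_elo(ratings, winner, loser)

ranked = sorted(ratings, key=ratings.get, reverse=True)
```

With only a few comparisons the result depends on the order in which they are processed, so a real version would probably want many comparisons per pair or a batch method instead of sequential updates.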

Vasco Grilo @ 2023-06-23T11:02 (+3)

Thanks for sharing!

Although we don’t provide extensive reasoning for why these projects made it into our list rather than others

Is there any reason for not sharing the scores of the weighted factor model?

Buhl @ 2023-07-26T15:26 (+9)

The quick explanation is that I don't want people to over-anchor on it, given that the inputs are extremely uncertain, and that I think that a ranked list produced by a relatively well-respected research organisation is the kind of thing people could very easily over-anchor on, even if you caveat it heavily.

berglund @ 2023-09-05T16:53 (+1)

Regarding AI lab coordination, it seems like the governance teams of major labs are a lot better placed to help with this, since they will have an easier time getting buy in from their own lab as well as being listened to by other labs. Also, the frontier models forum seems to be aiming at exactly this.

MattThinks @ 2023-08-23T02:37 (+1)

I’m glad to see that growing the field of AI governance is being prioritized. I would (and in a series of posts elsewhere on the forum, will) argue that, as part of the field development, the distinction between domestic and international governance could be valuable. Both can be covered by field-growing initiatives of course, but each involves distinct expertise and probably has different enough candidate profiles that folks thinking of getting into either may need to pursue divergent paths at a certain point.

Of course, I may be speaking too much from “inside” the field as it were. Was this distinction considered when articulating that point? Or does that strike you as too fine a point to focus on at this stage?

Jam Kraprayoon @ 2023-09-27T10:17 (+2)

Hi Matt, I think it’s right that there’s some distinction between domestic and international governance. Unless otherwise specified, our project ideas were usually developed with the US in mind. When evaluating the projects, I think our overall view was (and still is) that the US is probably the most important national actor for AI risk outcomes and that international governance around AI is substantially less tractable since effective international governance will need to involve China. I’d probably favour more effort going into field-building focused on the US, then the EU, then the UK, in that order, before focusing on field-building initiatives aimed at international orgs.

In the short term, it seems like prospects for international governance on AI are low, with the political gridlock in the UN since the Russian invasion of Ukraine. I think there could be some particular international governance opportunities that are high-leverage, e.g. making the OECD AI incidents database very good, but we haven’t looked into that much.

Julia Michaels @ 2023-08-20T19:59 (+1)

You've done some wonderful research and this is a great list. It does seem very heavy on AI, with only a few projects addressing other existential risks. I'd be curious to know about some other ideas that didn't make the cut for this post, but are aimed at addressing other risks.

Buhl @ 2023-09-27T10:12 (+1)

Thank you!

Worth noting that our input was also very unevenly distributed – our original idea list includes ~40% AI-related ideas, ~15% bio, ~25% movement building / community infrastructure, and only ~20% other. (this was mainly due to us having better access to AI-related project ideas via our networks). If you’re interested in pursuing biosecurity- or movement building-related projects, feel free to get in touch and I can share some of our additional ideas – for the other areas I think we don’t necessarily have great ideas.