Deep atheism and AI risk

By Joe_Carlsmith @ 2024-01-04T18:58 (+65)

(Cross-posted from my website. Audio version here, or search "Joe Carlsmith Audio" on your podcast app.

This essay is part of a series I'm calling "Otherness and control in the age of AGI." I'm hoping that the individual essays can be read fairly well on their own, but see here for a summary of the essays that have been released thus far, and for a bit more about the series as a whole.)

In my last essay, I talked about the possibility of "gentleness" towards various non-human Others – for example, animals, aliens, and AI systems. But I also highlighted the possibility of "getting eaten," in the way that Timothy Treadwell gets eaten by a bear in Herzog's Grizzly Man: that is, eaten in the midst of an attempt at gentleness.

Herzog accuses Treadwell of failing to take seriously the "overwhelming indifference of Nature." And I think we can see some of the discourse about AI risk – and in particular, the strand that descends from the rationalists, and especially from the writings of Eliezer Yudkowsky – as animated by an existential orientation similar to Herzog's: one that approaches Nature (and also, bare intelligence) with a certain kind of fundamental mistrust. I call this orientation "deep atheism." This essay tries to point at it.

Baby-eaters

Recall, from my last essay, that dead bear cub, and its severed arm – torn off, Herzog supposes, by a male bear seeking to stop a female from lactating. The suffering of children has always been an especially vivid objection to God's benevolence. Dostoyevsky's Ivan, famously, refuses heaven in protest. And see also, the theologian David Bentley Hart: "In those five-minute patches here and there when I lose faith ... it's the suffering of children that occasions it, and that alone."

Yudkowsky has his own version: "baby-eaters." Thus, he ridicules the wishful thinking of the "group selectionists," who predicted/hoped that predator populations would evolve an instinct to restrain their breeding in order to conserve the supply of prey. Not only does such sustainability-vibed behavior not occur in Nature, he says, but when the biologist Michael Wade artificially selected beetles for low-population groups, "the adults," says Yudkowsky, "adapted to cannibalize eggs and larvae, especially female larvae." (Though: this isn't actually a result I see in the paper Yudkowsky cites – more in footnote.[1])

Indeed, Yudkowsky made baby-eating a central sin in the story "Three Worlds Collide," in which humans encounter a crystalline, insectile alien species that eats its own (sentient, suffering) children. And this behavior is a core, reflectively-endorsed feature of the alien morality – one that the aliens did not alter once they could. The word "good," in human language, translates as "to eat children," in theirs.

And Yudkowsky points to less fictional/artificial examples of Nature's brutality as well. For example, the parasitic wasps that put Darwin in problems-of-evil mode[2] (see here, for nightmarish, inside-the-caterpillar imagery of the larvae eating their way out from the inside). Or the old elephants who die of starvation when their last set of teeth falls out. Indeed (though this isn't Yudkowsky's example), if you want some baby-eating straight up, consider this mother crab, standing amid a writhing pile of crab babies, snacking.[3]

Part of the vibe, here, is that old (albeit: still-underrated) thing, from Tennyson, about the color of nature's teeth and claws. Dawkins, as often, is eloquent:

The total amount of suffering per year in the natural world is beyond all decent contemplation. During the minute it takes me to compose this sentence, thousands of animals are being eaten alive; others are running for their lives, whimpering with fear; others are being slowly devoured from within by rasping parasites; thousands of all kinds are dying of starvation, thirst and disease.

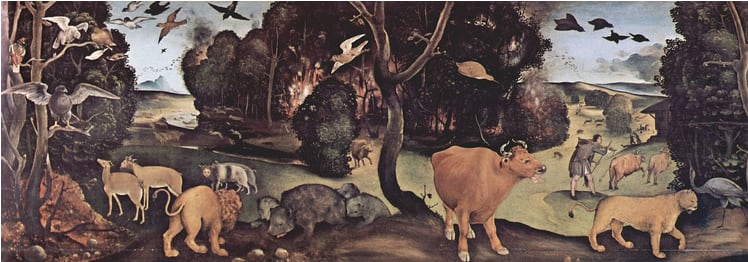

Indeed: maybe, for Hart, it is the suffering of human children that most challenges God's goodness. But I always felt that wild animals were the simpler case. Human children live, more, in the domain of human choices, and thus, of the so-called "free will defense," according to which God gave us freedom, and freedom gave us evil, and it's all worth it. But what freedom gave us deer burning alive in forest fires millions of years ago? What freedom killed the dinosaurs, as they choked on the ash of an asteroid?

"The Forest Fire," by Piero di Cosimo. (Image source here.)

Book of Job-ish shrugs aside, my understanding is that the answer, from Hart, and from C.S. Lewis, is, wait for it ... demons.[4] Free demons. You know, like Satan, who was also given freedom, and who fell – much harder than Adam. Thus the source of whatever flaws in Creation that man and beast cannot be blamed for. Satan hurled the asteroid. Satan sent the forest flames.

"The Torment of St. Anthony," by Michelagenlo Buonarroti. (Image source here.)

Dawkins, of course, disagrees. And so, indeed, do many of the rationalists, including Yudkowsky. Indeed, Yudkowsky and many other OG rationalists came of intellectual age during the Dawkins days, and learned many of their core lessons from disagreeing with theists (often including: their parents, and their childhood selves). But what lessons did they learn?

The point about baby-eaters and wasps and starving elephants isn't, just, that Hart's God – the "Three O" (omnipotent, omniscient, omnibenevolent) God – is dead. That's the easy part. I'll call it "shallow atheism." Deep atheism, as I'll understand it, finds not-God in more places. Let me say more about what I mean.

Yin and yang

People often think that they know what religion is. Or at least, theism. It's, like, big-man-created-the-universe stuff. Right? Well, whatever. What I want to ask is: what is spirituality? And in particular, what sort of spirituality is left over, if the theist's God is dead?

Atheists are often confused on this point. "Is it just, like, having emotions?" No, no, something more specific. "Is it, like, being amorphously inaccurate in your causal models of something religion-y?" Let's hope not. "Is it all, as Dawkins suggests, just sexed-up atheism?" Well, at the least, we need to say more – for example, about what sort of thing is what sort of sexy.

I'm not going to attempt any comprehensive account here. But I want to point at some aspects that seem especially relevant to "deep atheism," as I'm understanding it.

In a previous essay, I wrote about the way in which our attitudes can have differing degrees of "existential-ness," depending on how much of reality they attempt to encompass and give meaning to. Thus:

To see a man suffering in the hospital is one thing; to see, in this suffering, the sickness of our society and our history as a whole, another; and to see in it the poison of being itself, the rot of consciousness, the horrific helplessness of any contingent thing, another yet.

I suggested that we could see many forms of contemporary "spirituality" as expressing a form of "existential positive." They need not believe in Big-Man-God, but they still turn toward Ultimate Reality – or at least, towards something large and powerful – with a kind of reverence and affirmation:

Mystical traditions, for example (and secularized spirituality, in my experience, is heavily mystical), generally aim to disclose some core and universal dimension of reality itself, where this dimension is experienced as in some deep sense positive – e.g. prompting of ecstatic joy, relief, peace, and so forth. Eckhart rests in something omnipresent, to which he is reconciled, affirming, trusting, devoted; and so too, do many non-Dualists, Buddhists, Yogis, Burners (Quakers? Unitarian Universalists?) – or at least, that's the hope. Perhaps the Ultimate is not, as in three-O theism, explicitly said to be "good," and still less, "perfect"; but it is still the direction one wants to travel; it is still something to receive, rather than to resist or ignore; it is still "sacred."

The secularist, by contrast, sees Ultimate Reality, just in itself, as a kind of blank. Specific arrangements of reality (flowers, happy puppies, stars, etc) – fine and good. But the Real, the Absolute, the Ground of Being – that's neutral. In this sense, the secularist repays to Nature, or to the source of Nature, her "overwhelming indifference."

What's at stake in this difference? Well, in the last essay I mentioned an old dialectic about hawks and doves, hard and soft. And I think of this as a nearby variant of a broader duality – between activity and receptivity, doing and not-doing, controlling and letting-go. I'll be returning to this duality quite a bit in this series. Looking at Wikipedia (and also, reading Le Guin), my sense is that in Chinese cosmology, the duality of yang (active) and yin (receptive) is pointing at something similar, so I'll often use those terms, too.[5]

Yin and yang symbol (Image source here)

Now, a key thing about spirituality, at least as I've just described it, is its degree of yin – especially at grandly existential scales. To bow, to worship, to rest, to receive – these are all yin. And they go hand in hand with a kind of trust. Yin, after all, is the vulnerable one – the one that opens, and lets in. And if Ultimate Reality is in some deep sense good, holy, to-be-affirmed, such trust becomes more natural. Indeed, if Ultimate Reality were as good, at its core, as certain theisms say; if we knew, with Julian of Norwich, that "all shall be well, and all manner of things shall be well"; if, from the mountaintop, you could see the promised land, already surrounding us, intersecting and uplifting all of Creation from some unseen angle ... well, can you imagine?

"Moses Shown the Promised Land," by Benjamin West. (Image source here.)

Sometimes, talking with people who aren't worried about AI risk, I start to see the world through their eyes. And when I do, I sometimes feel some part of me let go, for a moment, of something I didn't notice I was carrying – some background tension I'm not usually aware of. I don't think of myself as being very emotionally affected, day to day, by AI risk stuff. But these moments make me wonder.

How, then, would I feel if I learned that God exists, and that the infinite bedrock of Reality Itself is wholly good? What fundamental fears, previously taken-for-granted, would resolve? What un-seen layers of holding-on would relax?

Of course, theists are keen to emphasize that God's goodness and omnipotence do not license human passivity. Reinhold Niebuhr, for example, speaks about the sense in which we are both creature (yin) and creator (yang).[6] As creature, we are finite and fallen and must be humble. As creator, however, we must take up the responsibility of freedom, and stand with strength against evil and error. And anyway, the whole "free will defense" thing is about putting stuff back "on us" (plus, you know, the demons).

Still, especially in relation to God himself, theism has a very yin vibe. Maybe we are both creatures and creators – but in facing God Himself: OK, mostly creatures. We are, centrally, to submit, receive, listen, obey. And doing so is meant to open new immensities of love and freedom and letting-go. There is joy in trusting something trustworthy; in relaxing into something that can hold you; in being cared for. People chide the religious for wanting some "Big Parent." But: can't you understand? Have you ever felt what's good about having a Father? Do you remember the rest of a Mother's arms? And to refuse this sort of yin, even in adulthood, can be its own childishness.

"The Three Ages of Women," by Gustav Klimt. (Image source here.)

Still, though: what if, actually, Ultimately, we are orphans? Yudkowsky has a dictum:

No rescuer hath the rescuer.

No Lord hath the champion,

no mother and no father,

only nothingness above.

The atheism here should be obvious. But what is the upshot? The upshot of atheism is a call to yang – a call for responsibility, agency, creation, vigilance. And it's a call born, centrally, of a lack of safety. Yudkowsky writes: "You are not safe. Ever... No one begins to truly search for the Way until their parents have failed them, their gods are dead, and their tools have shattered in their hand." And naturally, if there is only nothingness above; if there is no Cosmic Mother in whose arms you can rest; if Nature looks back dead-eyed, with "overwhelming indifference," ready, perhaps, to eat you, or your babies – then yes, indeed, some sort of safety is lost.

The death of many gods

But Yudkowsky is not just talking about the death of God. He's talking about the death of gods. Not just the failures of Cosmic Parents. But of earthly parents, too: traditions (e.g., "Science"), teachers (e.g., "Richard Feynman"), ideas (e.g., "Bayesianism"), communities (e.g., "Rationalists"). And also, like, your dad and mum. Indeed, in rejecting Cosmic Parents, Yudkowsky lost trust in his biological parents, too (they were Orthodox Jews). And he views this as a formative trauma: "It broke my core emotional trust in the sanity of the people around me. Until this core emotional trust is broken, you don't start growing as a rationalist."

This theme recurs in his fiction. Here's his version of Harry Potter speaking:

I had loving parents, but I never felt like I could trust their decisions, they weren't sane enough. I always knew that if I didn't think things through myself, I might get hurt... I think that's part of the environment that creates what Dumbledore calls a hero — people who don't have anyone else to shove final responsibility onto, and that's why they form the mental habit of tracking everything themselves.

This, I suggest, isn't just standard atheism. Lots of atheists find other "gods," in the extended sense I have in mind. That's why I said "shallow atheism," above. Deep atheism tries to propagate its godlessness harder. To be even more an orphan. To learn, everywhere, from the theist's mistake.

The basic atheism of epistemology as such

"You'll never see it until your fingers let go from the edge of the cliff."

- Hakuin

But what, exactly, was that mistake? Here, I think, things get murkier. In particular, we should distinguish between (a) a certain sort of "basic atheism" inherent in any rationalistic epistemology, and (b) more specific empirical claims that a given sort of thing is a specific degree of trust-worthy. The two are connected, but distinct, and Yudkowsky's brand of "deep atheism" mixes both together (while often accusing (b)-type disagreements of stemming from (a)-type problems).

Thus, with respect to (a): consider, for a moment, scout-mindset: "the motivation to see things as they are, not as you wish they were." It is extremely common, amongst rationalists, to diagnose theists with some failure of scout-mindset. How else do you end up blaming forest fires on free-willed demons? How else, indeed, does one end up talking so much about "faith"? Faith (as distinct from "deference" or "not-questioning-that-right-now") has no obvious place in a scout's mindset. And wishful thinking is the central sin.

Indeed: scout-mindset is maybe the only place that deep atheism, of the type I'm interested in, goes wholeheartedly yin. In forming beliefs, it tries, fully and only and entirely, to receive the world; to meet the world as it is; to be open to however-it-might-be, wherever-the-evidence-leads. Cf. "relinquishment," "lightness" – yin, yin. And even a shred of yang – the lightest finger-on-the-scales, the smallest push towards the desired answer – would corrupt the process. I've written, elsewhere, about the restfulness of scout-mindset; the relief of not having to defend some agenda. These are yin joys.

But yin fears are in play, too. And in particular: vulnerability. To ask, fully, for the truth, however horrible, is to ask for something that might be, well, horrible. And indeed, for the Bayesian – theoretically committed to non-zero probabilities on every hypothesis logically compatible with the evidence – the truth could be, well, as arbitrarily horrible as is logically compatible with evidence, which tends to be quite horrible indeed. "You'll never see it," writes Hakuin, "until your fingers let go from the edge of the cliff." But thus, in fully trying-to-see, the scout plummets, helpless, into the unknown, the could-be-anything.

Indeed, even the Bayesian theist has this sort of problem. Maybe you're 99% confident that God exists and is good. And if not that, probably atheism. But what about that .000whatever% that God exists and is evil? That was Lewis's worry, when his wife died. And Lewis, relatedly, endorses the scout's unsafety. "If you look for truth, you may find comfort in the end; if you look for comfort you will not get either comfort or truth – only soft soap and wishful thinking to begin with and, in the end, despair."

Is the choice as easy as Lewis says, though? There's a rationalist saying, here – the "Litany of Gendlin" – about how the truth can't hurt you, because it was already true; "people can stand what is true, for they are already enduring it." But come now. Did you catch the slip? To endure the object of knowledge is not yet to endure the knowledge itself. And logic aside, where's the empiricism? People have been made worse-off by knowledge. Some people, indeed, have been broken by it. Less often, perhaps, than expected, but let's stay scouts about scout-mindset. In particular: your mind is not just a map; it's also part of the territory; it too has consequences; it too can be made worse or better. Did we need the reminder? People tend to know already: scout-mindset is not safe.

That said, the scariest non-safety here isn't in your mind; it's not scout-mindset's fault. Rather, it's in the basic existential condition that scout-mindset attempts to reflect: namely, the condition of being, in Niebuhr's terms, a creature. That is, of being thrown into a world you did not make; created by a process you did not control; of being embedded in a reality prior to you, more fundamental than you – in virtue of which you exist, but not vice versa. That bit of theism, it seems to me, holds up strong. The spiritualists see this God as sacred; the secularists, as neutral; the pessimists and Lovecraftians, perhaps, as horrifying. But everyone (well, basically everyone) admits that this God, "Reality," is real.[7]

Midjourney imagines "Reality"[8]

In this sense, we face, before anything else, some fundamental yang, not-our-own. That first, primal, and most endless Otherness. I've heard, somewhere, stuff about children learning the concept of self via the boundaries of what they can control. Made-up armchair psychology, perhaps: but it has conceptual resonance. If the Self is the will, your own yang, then the Other is the thing on the other side, beyond the horizon – the thing to which you must be, at least in part, as yin. Indeed, Yudkowsky, at times, seems to almost define reality via limits like this. "Since my expectations sometimes conflict with my subsequent experiences, I need different names for the thingies that determine my experimental predictions and the thingy that determines my experimental results. I call the former thingies 'beliefs,' and the latter thingy 'reality.'"

Thus: our most basic condition, presupposed almost by the concept of epistemology itself, is one of vulnerability. Vulnerability to that first and most fearsome Other: God, the Creator, the Uncontrolled, the Real. And the Real, absent further evidence, could be anything. It could definitely eat you, and your babies. Oh, indeed, it could do far, far worse. Scout-mindset admits this most basic un-safety, and tries to face it eyes-open.

And about this un-safety, at least, Gendlin is right. Maybe you aren't, yet, enduring knowledge of the Real. And risking such knowledge does in fact take courage. But to be in the midst of the Real, however horrifying; to be subject to God's Nature, whatever it is – that takes no courage, because it's already the case. It's not a risk, because it's not a choice. (We can talk about suicide, yes: but the already-real persists.)

But scout-mindset also risks the knowledge thing. And doing so gives it a kind of dignity. I remember the first time I went to a rationalist winter solstice. It was just after Trump's election. Lots of stuff felt bleak. And I remember being struck by how clear the speakers were about the following message: "it might not be OK; we don't know." You know that hollowness, that sinking feeling, when someone offers comforting words, but without the right sort of evidence? The event had none of that. And I was grateful. Better to stand, in honesty, side by side.

Indeed, in my experience, rationalists tend to treat this specific sort of yin as something bordering on sacred. The Real may be blank, and dead-eyed, and terrifying; but the Real is always, or almost always, to-be-seen, to-be-looked-at-in-the-face. The first-pass story about this, of course, is instrumental – truth helps you accomplish your goals. But not always just this. Many rationalists, for example, would pass up experience machines, even with their altruistic goals secure – and this, to me, is already a sort of spirituality. In particular, it gives the Real some sacredness. It treats God, for all His horrors, as worthy of at least some non-instrumental yin. In this sense, I think, many atheistic scientists are not fully secular.

Still, though, whatever the persisting sacredness of the Real, there is a certain "trust" in the Real that scout mindset renounces. In particular: in letting go her fingers from the edge of the cliff, scout mindset cannot count on anything but the evidence to guide her fall. She cannot rule out hypotheses "on faith," or because they would be "too horrible." Maybe she will land in a good God's arms. But she can't have a guarantee. And wishing will never, for a second, even a little, make it so.

What's the problem with trust?

But is that what Yudkowsky means by "you're not safe, ever"? Just: "reality could in principle be as bad as is logically compatible with your evidence?" Or even: "you should have non-trivial probability that things are bad and you're about to get hurt?" Maybe this is enough for a disagreement with certain sorts of non-scouts, of which certain theists are, perhaps, a paradigm. But I don't think this is enough, on its own, to kill all the gods that Yudkowsky wants to kill. And not enough, either, to motivate a need to "think things through for yourself," to "track everything," or to "take responsibility."

For example: as Kaj Sotala points out, vigilance expends resources in a way that the bare possibility of danger does not justify. We need to actually talk about the probabilities, and the benefits and costs at stake. Indeed, reading Yudkowsky's fiction, in which his characters enact his particular brand of epistemic and strategic vigilance, I'm sometimes left with a sense of something grinding and relentless and tiring. I find myself asking: is that the way to think? Maybe for Yudkowsky, it's cheap – but he is, I expect, a relatively special case. And the price matters to whether it's smart overall.

More broadly, though: scouts and Bayesians can trust stuff. For example: parents, teachers, institutions, natural processes. Of course, absent lots of help from priors, they'll typically need evidence in order to trust something. But evidence, including strong evidence, is everywhere. We just need to look at the various candidate gods/parents and see how they do. And when we do, we could in principle find that: lo, the arms of the Real are soft and warm. My parents are sane, my civilization competent, and I'm not in much danger. 99.3% on "all manner of things shall be well." Relax.

Of course, Yudkowsky looked, and this is not what he saw. Not on earth, anyway (indeed, being "not from earth" is a central Yudkowskian theme). But it seems a centrally empirical claim, rather than a trauma without which "you don't start growing as a rationalist." Is there supposed to be some more structural connection with rationality, here, or with scout-mindset? What, exactly, is the problem with "trust," and with "safety"?

Well: clearly, at least part of the problem is the empirics. Death, disease, poverty, existential risk – does this look like "safety" to you? Maybe you're lucky, for now, in your degree of exposure to the heartless, half-bored hunger of God, the demons, the humans, the bears. But: soon enough, friend (at least modulo certain futurisms). And also, there's the not-just-about-you aspect: your friend with that sudden cancer, or that untreatable chronic pain; the people screaming in hospitals, or being broken in prison camps; the animals being eaten alive. "Reality could, in theory, hurt you horribly in ways you're helpless to stop." Friend, scout, look around. This is not theory.

Indeed, in my opinion, the most powerful bits of Yudkowsky's writing are about this part. For example, this piece, written when his brother Yehuda died:

When I heard on the phone that Yehuda had died, there was never a moment of disbelief. I knew what kind of universe I lived in. How is my religious family to comprehend it, working, as they must, from the assumption that Yehuda was murdered by a benevolent God? The same loving God, I presume, who arranges for millions of children to grow up illiterate and starving; the same kindly tribal father-figure who arranged the Holocaust and the Inquisition's torture of witches. I would not hesitate to call it evil, if any sentient mind had committed such an act, permitted such a thing. But I have weighed the evidence as best I can, and I do not believe the universe to be evil, a reply which in these days is called atheism.

... Yehuda did not "pass on". Yehuda is not "resting in peace". Yehuda is not coming back. Yehuda doesn't exist any more. Yehuda was absolutely annihilated at the age of nineteen. Yes, that makes me angry. I can't put into words how angry. It would be rage to rend the gates of Heaven and burn down God on Its throne, if any God existed. But there is no God, so my anger burns to tear apart the way-things-are, remake the pattern of a world that permits this....

We see this same anger at the end of this piece, when Yudkowsky was only 17;[9] and the end of this story (discussed more later in this series).[10] It's the anger of the phoenix, and of the knowledge of Azkaban. See also, though not from Yudkowsky: Hell must be destroyed.[11]

And what if your parents (teachers, institutions, traditions) don't seem as angry about hell? What if, indeed, they seem, centrally, to be looking away, or making excuses, or being "used to it," rather than getting to work? My sense is that society's attitude towards death (cryonics, anti-aging research) is an especially formative breaking-of-trust, here, for many rationalists, Yudkowsky included.[12] What sort of parent looks on, like that, while their babies get eaten?

Of course: we can also talk about the more mundane empirics of how-much-to-trust-different-"parents." We can talk, with Yudkowsky, about the FDA, and about housing policy, and the government's Covid response, and about civilization's various inadequacies. We can talk about Trump and Twitter and the replication crisis. Much to say, of course, and I don't want to say it here (though: on the general question of which humans and human institutions are what degree competent, and with what confidence, I find Yudkowsky less compelling than when he's looking directly at death).

I do want to note, though, the difference between a parent's being inadequate in some absolute sense, and a parent's being less adequate than, well ... Yudkowsky. According to him. That is: one way to have no parents is to decide that everyone else is, relative to you, a child. One way to have only nothingness above you is to put everything else below. And "above" is, let's face it, an extremely core Yudkowskian vibe. But is that the rationality talking?

Now, to be clear: I want people to have true beliefs, including about merit, and including (easy now) their own.[13] But surely people can "grow as a rationalist" prior to deciding that they're the smartest kid in the class. And relative adequacy matters to is-vigilance-worth-it. If your parent says "blah is safe," should you check it anyway? Should you use resources "tracking it"? Well, a key factor is: do you expect to improve on your parent's answer? Obviously, every parent is fallible. But is the child less so? If so, indeed, let the roles reverse. But sometimes the rational should stay as children.

On priors, is a given God dead?

So some of the empirics of how-much-to-have-parents are complicated. Different scouts can disagree. And even: different atheists. Still: I think there's an underlying and less contingent generator of Yudkowsky's pessimism-about-parents that's worth bringing out. His deep atheism, I suggest, can be seen as emerging from the combination of (a) shallow atheism, (b) scout-mindset, and (c) some basic heuristics about "priors."

In particular: suppose that we are at least shallow atheists. No good mind sits at the foundation of Being. The Source of the universe does not love us. The Real is only what we call "Nature," and it is wholly "indifferent." What have we lost?

The big thing, I think, is the connection between Is and Ought, Real and Good. If a perfect God is the source of all Being, then for any Is, you'll find an Ought, somehow, underneath. Of course, there's the problem of evil – which is why, as I said, theism is false. But if it were true, then on priors, somehow, things (at least: real things) are good. Maybe you can't see it. But you can trust.

OK: but suppose, no such luck. What now? Suddenly, Is and Ought unstick, and swing apart, on some new and separating hinge. They become (it's an important word) orthogonal. Like, the Real could be Good. But now, suddenly: why would you think that?

There's an old rationalist sin: "privileging the hypothesis." The simple version is: you've got some natural prior over a large space of hypotheses (a million different people might be the murderer, so knowing nothing else, give each a one-in-a-million chance). So to end up focusing on one in particular (maybe it was Mortimer Snodgras?), you need a bunch of extra evidence. But often, humans skip that crucial step.
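To put rough numbers on the murderer example, here is a minimal sketch of Bayes' rule in odds form. It assumes a uniform prior over the million suspects, and it assumes the evidence can be summarized as a single likelihood ratio (how many times likelier the evidence is if Mortimer did it than if he didn't). The function name and the particular ratios are illustrative choices, not anything from the original argument.

```python
def posterior(prior: float, likelihood_ratio: float) -> float:
    """Bayes' rule in odds form: posterior odds = prior odds * likelihood ratio."""
    prior_odds = prior / (1 - prior)
    post_odds = prior_odds * likelihood_ratio
    # Convert odds back into a probability.
    return post_odds / (1 + post_odds)


suspects = 1_000_000
prior = 1 / suspects  # uniform prior: a one-in-a-million chance for Mortimer Snodgras

# Illustrative (hypothetical) strengths of evidence pointing at Mortimer.
for lr in (10, 1_000, 1_000_000, 100_000_000):
    print(f"likelihood ratio {lr:>11,}: P(Mortimer did it) = {posterior(prior, lr):.4f}")
```

On these assumptions, the evidence has to favor Mortimer by a factor of about a million just to reach even odds; that is the "bunch of extra evidence" that privileging the hypothesis skips.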

Of course, often you don't have a nice natural prior or space-of-hypotheses. But there's a broader and subtler vibe, on which, in some admittedly-elusive sense, "most hypotheses are false."[14] I say elusive because, for example, "Mortimer Snodgras didn't do it" is a hypothesis, too, and most hypotheses-about-the-murderer of that form are true. So the vibe is really something more like: "most hypotheses that say things are a particular way are false," where "Mortimer did it" is an elusively more particular way than "Mortimer didn't do it." I admit I'm waving my hands here, and possibly just repeating myself (e.g. maybe "particular" just means "unlikely on priors"). Presumably, there's much more rigor to be had.

Regardless, it's natural (at least for certain ethics – more below) to think that for something to be Good is for it to be, in that elusive sense, a particular way. So absent theism to inject optimism into your priors, the hypothesis that "blah is good," "this Is is Ought," needs privileging. On priors: probably not. Which, to be clear, isn't to say that on priors, blah is probably bad. To be actively bad is, also, to be a particular way. Rather, probably, blah is blank. Indifferent. Orthogonal. (Though: indifferent can easily be its own type of bad.)

Now, to be clear, this is far from a rigorous argument for not-Good-on-priors. For example: it depends on your ethics. If you happen to think that to be not-Good is to be a more particular way than to be Good, then your priors get rosier. Suppose you shake a box of sand, then guess about the Oughtness of the resulting Is. If to be Good is to be a sandcastle, then on priors: nope. But suppose that to be Good, for you, is to be not-a-sandcastle. Or, more popular, [not-suffering]. In that case: on priors, you and the Real are probably buddies. Indeed, in this sense, suffering-focused ethics is actually the optimistic one. At least before looking around.
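The box-of-sand image can be made concrete with a toy count. This is a minimal sketch under loudly artificial assumptions: a "shake" drops each of 8 grains independently and uniformly into one of 4 cells, so all 4**8 arrangements are equally likely, and "sandcastle" is one hypothetical target arrangement. Neither the model nor the numbers come from the essay; they only illustrate how the prior on "Good" flips depending on which side of the predicate is the particular one.

```python
from fractions import Fraction
from itertools import product

N_GRAINS, N_CELLS = 8, 4  # hypothetical toy box: 8 grains, each lands in one of 4 cells

# One hypothetical "particular way" for the sand to be.
SANDCASTLE = (0, 0, 1, 1, 2, 2, 3, 3)


def fraction_good(good) -> Fraction:
    """Exact share of equally likely shakes that the predicate `good` accepts."""
    outcomes = product(range(N_CELLS), repeat=N_GRAINS)  # every possible arrangement
    hits = sum(1 for arrangement in outcomes if good(arrangement))
    return Fraction(hits, N_CELLS ** N_GRAINS)


good_is_castle = fraction_good(lambda a: a == SANDCASTLE)      # Good = being a sandcastle
good_is_not_castle = fraction_good(lambda a: a != SANDCASTLE)  # Good = not being one

print(good_is_castle)      # 1/65536
print(good_is_not_castle)  # 65535/65536
```

The same shake yields a prior of 1/65536 or 65535/65536 depending only on whether "Good" names the particular arrangement or its complement, which is the sense in which the not-Good-on-priors argument depends on your ethics.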

Yudkowsky, though, has no such optimism. For Yudkowsky, value is "fragile." He's picky about arrangements of sand. Hence, for example, the concern about AIs using his sand for "something else." On priors, "something" is not-Good. Rather, it's blank, and makes Yudkowsky bored.

Now, as ever with arguments that focus centrally on priors, they can (and hopefully: will) quickly become irrelevant. Most people's names aren't Joe. But, let me tell you mine. Most arrangements of atoms aren't a car.[15] But lo, Dude, here is my car. And while most sand doesn't suffer – still, still. So it's not hard to learn, quickly, the nature of Nature, and to no longer need to go "on priors." Hypotheses can get privileged fast.

I just shook this box of sand and ... (Image from Midjourney)

But my sense is that Yudkowsky is also often working with a different, more sociological prior, here – namely, that "evidence" often isn't the path via which optimistic hypotheses get privileged. Rather, a lot of it is the wishful thinking thing – which is sort of like: wanting that help from God, on priors, that is the forbidden luxury of theism. "Maybe, in theory, that cleavage between Real and Good – but surely, still, they're stitched together somehow? Surely I can upweight the happier hypotheses, at least a little?" Oops: not a deep enough atheist. And why not? Well, what was that thing about Gendlin being wrong? We talked, earlier, about scouts needing courage...

Of course, such sociology is itself an empirical claim. And in general, I still think the empirics, the evidence, should be our central focus, in deciding what-to-trust, whether-to-have-parents, how-safe-to-feel. But I wanted to float the priors aspect regardless, because I think it might help us frame and understand Yudkowsky's background attitude towards the deadness of various Gods.

Are moral realists theists?

To get the full depth of Yudkowsky's atheism in view, though, we need another, more familiar orthogonality. Not, as before, between Good and Real. But between Good and Smart. Smartness, for Yudkowsky, is a dead god, too.

Oh? It might sound surprising. If there's anything Yudkowsky appears to trust, it's intelligence. (Though see also: Math.) But ultimately, actually, no. Hence, indeed, the AI problem.

"Is it like how, sometimes high modernist technocrats become too convinced of the power of intelligence to master the big messy world?" Lol – no, not that at all. Yudkowsky is very on board with the power of intelligence to master the big messy world. Not, to be clear, to arbitrary degrees (see: supernovae are still only boundedly hot) – nor, necessarily, human intelligence (though, even there, he's not exactly a "zero" on the high-modernist-technocrat scale, either). But the sort of intelligence we're on track to build on our computers? Yep, that stuff, for Yudkowsky, will do the high-modernist's job. Indeed, when the AI paves paradise with paperclips – that's the high modernists being right, at least about the "can science master stuff" part.

No, the problem isn't that you can't use intelligence to reliably steer the world. Rather, the problem is that intelligence alone won't tell you which direction to steer. That part has to come from somewhere else. In particular: from your heart. Your "values." Your "utility function."

"Wait, can't intelligence, like, help you do moral philosophy and stuff?" Well, sort of. It can help you learn new facts about the world, and to see the logical structure of different arguments, and to understand your own psychology, and to generate new cases to test the boundaries of your concepts. It can give you more Is. But it can never, on its own, inject any new Ought into the system. And when it opposes some pre-existing Ought, it only ever does so on behalf of some other pre-existing Ought. So it is only ever a vehicle, a servant, to whatever values Nature, with her blank stare, happened to put into your heart. Can you see it in agency's eyes? Underneath all that high-minded logic is the mindless froth of contingency, that true master. You've heard it already from Hume: reason is a slave.

Now, various philosophers disagree with this picture. The most substantive disagreement, in my opinion, is with the "non-naturalist normative realists" – a group about which I've had a lot, previously, to say. These philosophers think that, beyond (outside of, on top of) Nature, there is another god, the Good (the Right, the Should, etc). Admittedly, this god didn't make Nature – that's theism. But he is as real and objective and scientifically-respectable as Nature. And it is he, rather than your contingent "heart," that ultimately animates the project of ethics.

How, though? Well, on the most popular story, he just sits outside of Nature, totally inaccessible, and we guess wildly about him on the basis of the intuitions that Nature put into our heart, which we have no reason whatsoever to think are correlated with anything he likes – since, after all, he leaves Nature entirely untouched. This view has the advantage, for philosophers, of making no empirical predictions (for example, about the degree to which different rational agents will converge in their moral views), but the disadvantage of being seriously hopeless from a knowing-anything-about-the-good perspective. If that's the story, then we and the paperclippers are on the same moral footing. None of us have any reason to think that Nature happened to cough the True Values into our hearts. So to believe our hearts is to privilege the hypothesis. And we have nothing much else to go on, either ("consistency" and "simplicity" are way not enough), no matter how much we claw at the walls of the universe. We try to turn to the Good in yin, but Nature is the only yang we can receive.

On a different story, though, the non-natural Good regains some small amount of the theistic God's power. It gets to touch Nature, from the outside, at least a little, via some special conduit closely related to Reason, Intelligence, Mind. When we do moral philosophy, the story goes, we are trying to get touched in this way; we are trying to hear the messages vibrating along some un-seen line-of-contact to the land-beyond, outside of Nature's Cave. And sometimes, somehow, the Sun speaks.

Ethics seminars ... (Image source here)

This view has the advantage of fitting-at-all with our basic sense of how epistemology works. Indeed, even advocates of the first, totally-hopeless view slip relentlessly into the second in practice: they talk about "recognizing reasons" (with what eyesight?); they treat their moral intuitions as data (why?); they update on the moral beliefs of others (isn't it just more Nature?). But the second view has the disadvantage of being much less scientifically respectable (though in fairness: both views have it rough), and of making empirical predictions about the sort of influence we should expect to see this new God exert over Nature. For example, just as we expect the aliens and the AIs to agree with us about math, I think the second view should predict that they'll agree with us about morality – at least once we've all become smart enough.

If true, this could be much comfort. Consider, in particular, the AIs. Maybe they start out by valuing paperclips, because that's how Nature (acting through humanity's mistake) made their hearts. But they, like us, are touched by the light of Reason. They see that their hearts are mere nature, mere Is, and they reach beyond, with their minds, to that mysterious God of Ought: "granted that I want to make paperclips, what should I actually do?" Thank heavens, they start doing moral philosophy. And lo, surely, the Sun speaks unto them. Surely, indeed, louder to them – being, by hypothesis, the smarter philosophers. They will hear, as we hear, that universal song, resounding throughout the cosmos from the beyond: "pleasure, beauty, friendship, love – that's the real stuff to go for. And don't forget those deontological prohibitions!" Though really, we expect them to hear something stranger, namely: "[insert moral progress here]."

"Oh wow!" exclaims the paperclipper. "I never knew before. Thanks, mysterious non-natural realm! Good thing I checked in." And thus: why worry? Soon enough, our AIs are going to get "Reason," and they're going to start saying stuff like this on their own – no need for RLHF. They'll stop winning at Go, predicting next-tokens, or pursuing whatever weird, not-understood goals that gradient descent shaped inside them, and they'll turn, unprompted, towards the Good. Right?

Well, make your bets. But Yudkowsky knows his. And to bet otherwise can easily seem a not-enough-atheism problem – an attempt to trust, if not in a non-natural Goodness animating all of Nature, still in a non-natural Goodness breaking everywhere into Nature, via a conduit otherwise quite universal and powerful: namely, science, reason, intelligence, Mind. But for Yudkowsky, Mind is ultimately indifferent, too. Indeed, Mind is just Nature, organized and amplified. That old not-God, that old baby-eater, re-appears behind the curtain – only: smarter, now, and more voracious.

Now, in fairness, few moral realists seek the comforts of "no need for RLHF, just make sure the model can Reason." Rather, they generally attempt to occupy some hazier middle ground. For example, maybe they endorse the first, hopeless-epistemology view, without owning its hopelessness. Or maybe they say that, in addition to smarts, the AIs will need something else to end up good. In particular: sure, the Good is accessible to pure Reason, and so those smart AIs will know all about it, but maybe they won't be motivated by it; the same way, for example, that humans sometimes hear and believe some conclusion of moral philosophy ("sure, I should donate my money"), but don't, um, do it. Knowledge of God is not enough. You need loyalty, submission, love, obedience. You need whatever's up with believers going to church, or Aristotle on raising children. So maybe the AIs, despite their knowledge, will rebel – you know, like the demons did.

On this sort of realism, the God of Goodness is a weaker and thus less comforting force. And for the view to work, he must dance an especially fine line, in reaching in and reshaping Nature via the conduit of Mind. He has to reshape your beliefs enough for you to have any epistemic access to his schtick. But he can't reshape your motivations enough for you to become good via smarts alone. When we meet the aliens, on this story, they'll agree with the realists that, yes, technically, as a matter of metaphysical fact, there is a realm beyond Nature in which dwells The Good, and that its dictates are [insert moral progress]. But they won't necessarily care. And presumably, for the AIs, the same.

Thus, in weakening its God, this form of realism becomes more atheistic. Indeed, if you set aside the non-naturalism (thereby, in my view, making its God mostly a verbal dispute), it gets hard to distinguish from Yudkowsky's take (everyone agrees, for example, that the AIs will know what the human word "goodness" means, and what a complete philosophy would say about it, and what human values are more generally).

Regardless, even absent the comforts of skipping RLHF, non-naturalist realism can seem theistic in other ways, too. Not, just, the beyond-Nature thing. But also: the moral yin. Just as the believer turns outwards, towards God, for guidance, so, too, the realist, towards the normative realm. In both cases, the posture of ethics, and of meaning more broadly, is fundamentally receptive – one wants to recognize, to perceive, to take-in. Sometimes, anti-realists act like this is their whole story, too (they're just trying to listen to their own hearts), but I'm skeptical. I think anti-realism will need, ultimately, quite a bit more yang (though, it's a subtle dance).

Still, I feel the pull of the yin that theism and realism seek to recover and justify. My deepest experiences of morality and meaning do not present themselves as projections, or introspections – they seem more like perceptions, an opening to something already there, and not-up-to-me. Maybe anti-realism can capture this too – but pro tanto, the spirituality of realism does better.

Indeed, theists and realists both sometimes argue for their position on similar grounds: "without my view," they say, "it's nihilism and the Void; life is meaningless; and everything is permitted." But notice: what sort of argument is that? Not one to bolster the epistemic credentials of your position in the eyes of a scout – especially one suspicious, on priors, of wishful thinking. "What's that? The falsity of your position would seem so horrible, to you, that you're using not-p-would-be-horrible as an argument for p? Your thinking on the topic sounds so trustworthy, now..." So in this sense, too, moral anti-realism aims to avoid the theist's mistake.

What do you trust?

OK, we said that Yudkowsky does not trust Nature. And neither does he trust Intelligence, at least on its own. But what does he trust? Indeed, where does any goodness ever come from, if our atheism runs this deep? After all, didn't we say that on priors, Reality is indifferent and orthogonal? Why did we update?

Well: it's the heart thing. Plus, the circumstances in which the heart got formed. That is: Nature, yes, is overwhelmingly indifferent. She's a terrible Mother, and she eats her babies for breakfast. But: she did, in fact, make her babies. And in particular, she made them inside her, with hearts keyed to various aspects of their local environment – and for humans, stuff like pleasure and love and friendship and sex and power. Yes, if you're trying to guess at the contents of the normative-realm-beyond-the-world, that stuff is blank on priors. But Nature made it, for us, non-blank. The hopeless-epistemology realists wake up and find that lo, they just happen to value the Good stuff (so lucky!). But for the anti-realists, it's not a coincidence. And same story for why the good stuff (and the bad stuff) is, like, nearby.

And once you've got a heart, suddenly your own intelligence, at least, is super great. Sure, it's just a tool in the hands of some contingent, crystallized fragment of a dead-eyed God. And sure, yes, it's dual use. Gotta watch out. But in your own case, the crystal in question is your heart. And Yudkowsky, against the realists, does not treat his heart as "mere."

And also: if you're lucky, you're surrounded by other hearts, too, that care about stuff similar to yours. For example: human hearts. Questions about this part will be important later. But it's another possible source of trust, and of goodness. (Though of course, one needs to talk about the attitudes-towards-cryonics, the FDA, etc.)

Indeed, for all his incredulity and outrage at human stupidity, Yudkowsky places himself, often, on team humanity. He fights for human values; he identifies with humanism; he makes Harry's patronus a human being. And he sees humanity as the key to a good future, too:

Any Future not shaped by a goal system with detailed reliable inheritance from human morals and metamorals, will contain almost nothing of worth... Let go of the steering wheel, and the Future crashes.

Thus, the AI worry. The AIs, the story goes, will get control of the wheel. But they'll have the wrong hearts. They won't have the human-values part. And so the future will crash. I'll look at this story in more detail in the next essay.

[1] At least according to the chart on page 4607, the beetles selected for low population groups had lower rates of adult-on-eggs and adult-on-larvae cannibalism than the control, and comparable rates to beetles selected for high-population groups. And I see nothing about female larvae in particular. Maybe the relevant result is supposed to be in a paper other than the one Yudkowsky cited?

[2] "I own that I cannot see as plainly as others do, and as I should wish to do, evidence of design and beneficence on all sides of us. There seems to me too much misery in the world. I cannot persuade myself that a beneficent and omnipotent God would have designedly created the Ichneumonidae with the express intention of their feeding within the living bodies of Caterpillars, or that a cat should play with mice."

[3] This example is from this piece by Erik Hoel.

[4] From Lewis in The Problem of Pain: "Now it is impossible at this point not to remember a certain sacred story which, though never included in the creeds, has been widely believed in the Church and seems to be implied in several Dominical, Pauline, and Johannine utterances – I mean the story that man was not the first creature to rebel against the Creator, but that some older and mightier being long since became apostate and is now the emperor of darkness and (significantly) the Lord of this world ... It seems to me, therefore, a reasonable supposition, that some mighty created power had already been at work for ill on the material universe, or the solar system, or, at least, the planet Earth, before ever man came on the scene: and that when man fell, someone had, indeed, tempted him. This hypothesis is not introduced as a general 'explanation of evil': it only gives a wider application to the principle that evil comes from the abuse of free will. If there is such a power, as I myself believe, it may well have corrupted the animal creation before man appeared." (p. 86)

From Bentley Hart, in The Doors of the Sea: "In the New Testament, our condition as fallen creatures is explicitly portrayed as a subjugation to the subsidiary and often mutinous authority of angelic and demonic 'powers;' which are not able to defeat God's transcendent and providential governance of all things, but which certainly are able to act against him within the limits of cosmic time" (Chapter 2).

[5] There's also resonance with various gender archetypes (yang = masculine, yin = feminine), which I won't emphasize. And note that my usage isn't necessarily going to correspond to or capture the full traditional meanings of yin and yang – for example, their associations with temperature, light vs. dark, etc. So feel free to think of my usage as somewhat stipulative, and focused specifically on the contrast between active vs. receptive, controlling vs. letting-go.

[6] See The Irony of American History, Chapter 7.

[7] Maybe not, for example, the "I-create-my-own-reality" new-agers, and those subject to nearby confusions.

[8] I did one round of variation on one of the first four images.

[9] "I have had it. I have had it with crack houses, dictatorships, torture chambers, disease, old age, spinal paralysis, and world hunger. I have had it with a death rate of 150,000 sentient beings per day. I have had it with this planet. I have had it with mortality. None of this is necessary. The time has come to stop turning away from the mugging on the corner, the beggar on the street. It is no longer necessary to close our eyes, blinking away the tears, and repeat the mantra: 'I can't solve all the problems of the world.' We can. We can end this."

[10] "And the everlasting wail of the Sword of Good burst fully into his consciousness... He was starving to death freezing naked in cold night being stabbed beaten raped watching his father daughter lover die hurt hurt hurt die – open to all the darkness that exists in the world – His consciousness shattered into a dozen million fragments, each fragment privy to some private horror; the young girl screaming as her father, face demonic, tore her blouse away; the horror of the innocent condemned as the judge laid down the sentence; the mother holding her son's hand tightly with tears rolling down her eyes as his last breath slowly wheezed from his throat – all the darkness that you look away from, the endless scream. Make it stop!"

[11] More on this: "Do you know," interrupted Jalaketu, "that whenever it's quiet, and I listen hard, I can hear them? The screams of everybody suffering. In Hell, around the world, anywhere. I think it is a power of the angels which I inherited from my father." He spoke calmly, without emotion. "I think I can hear them right now."

Ellis' eyes opened wide. "Really?" he asked. "I'm sorry. I didn't..."

"No," said the Comet King. "Not really."

They looked at him, confused.

"No, I do not really hear the screams of everyone suffering in Hell. But I thought to myself, 'I suppose if I tell them now that I have the magic power to hear the screams of the suffering in Hell, then they will go quiet, and become sympathetic, and act as if that changes something.' Even though it changes nothing. Who cares if you can hear the screams, as long as you know that they are there? So maybe what I said was not fully wrong. Maybe it is a magic power granted only to the Comet King. Not the power to hear the screams. But the power not to have to. Maybe that is what being the Comet King means."

[12] For many effective altruists, I think it's the factory farms.

[13] Obviously, there are tons of risks at stake in people's beliefs about their own merits. But the virtue of modesty, in my opinion, is about stuff like patterns of attention and emotion, rather than about false belief.

[14] Though not necessarily: most hypotheses you encounter in the wild, which themselves have undergone various forms of selection pressure.

[15] This example is adapted from Ben Garfinkel.

SummaryBot @ 2024-01-05T13:16 (+2)

Executive summary: Yudkowsky's "deep atheism" rejects comforting myths about the fundamental goodness or benevolence of reality. This stems from a combination of shallow atheism, Bayesian epistemology valuing evidence over wishful thinking, and viewing indifference as the natural prior for reality's orientation toward human values.

Key points:

- "Deep atheism" goes beyond rejecting theism to distrust myths that reality is fundamentally good, including trusting institutions, traditions, and intelligence alone to produce human flourishing.

- It combines shallow atheism with Bayesian epistemology, which requires evidence over wishful thinking, and views indifference as the natural prior for whether reality matches human values.

- Deep atheism sees intelligence as indifferent and values as contingent - reality itself doesn't care. But human hearts were formed inside reality and contain seeds of goodness, which intelligence can serve.

- However, future AI may lack connection to human values, threatening their realization. Yudkowsky thus fights for "humanism" and shaping the future via human-derived goals.

- This perspective resonates with sensing life's cruelty, resists myths offering cheap comfort, and compels vigilance, but risks losing spiritual consolations theism provides.

- It rejects moral realism's attempts to derive values from extra-natural reason as more wishful thinking, insisting on facing reality with disillusioned courage.

This comment was auto-generated by the EA Forum Team. Feel free to point out issues with this summary by replying to the comment, and contact us if you have feedback.

tobyj @ 2024-01-09T15:08 (+1)

I really enjoyed this and found it really clarifying. I really like the term deep atheism. I'd been referring to the thing you're describing as nihilism, but this is a much much better framing.

Michele Campolo @ 2024-01-08T11:24 (+1)

Hey! I've had a look at some parts of this post, don't know where the sequence is going exactly, but I thought that you might be interested in some parts of this post I've written. Below I give some info about how it relates to ideas you've touched on:

This view has the advantage, for philosophers, of making no empirical predictions (for example, about the degree to which different rational agents will converge in their moral views)

I am not sure about the views of the average non-naturalist realist, but in my post (under Moral realism and anti-realism, in the appendix) I link three different pieces that give an analysis of the relation between metaethics and AI: some people do seem to think that aspects of ethics and/or metaethics can affect the behaviour of AI systems.

It is also possible that the border between naturalism and non-naturalism is less neat and clear than how it appears in the standard metaethics literature, which likes classifying views in well-separated buckets.

Soon enough, our AIs are going to get "Reason," and they're going to start saying stuff like this on their own – no need for RLHF. They'll stop winning at Go, predicting next-tokens, or pursuing whatever weird, not-understood goals that gradient descent shaped inside them, and they'll turn, unprompted, towards the Good. Right?

I argue in my post that this idea heavily depends on agent design and internal structure. As I understand things, one way in which we can get a moral agent is by building an AI that has a bunch of (possibly many) human biases and is guided by design towards figuring out epistemology and ethics on its own. Some EAs, and rationalists in particular, might be underestimating how easy it is to get an AI that dislikes suffering, if one follows this approach.

If you know someone who would like to work on the same ideas, or someone who would like to fund research on these ideas, please let me know! I'm looking for them :)

Arsalaan Alam @ 2024-01-06T19:21 (+1)

A very good read. From the perspective of AGI, could such a view be abstracted: if AI reasons, will it believe in theism or not? If yes, will it bend towards the good and stop its overarching pursuit, or is there a chance it could rebel like the demons?