Ben_West's Quick takes

By Ben_West🔸 @ 2021-10-17T19:59 (+7)

Ben_West🔸 @ 2024-12-24T17:50 (+130)

Adult film star Abella Danger apparently took a class on EA at University of Miami, became convinced, and posted about EA to raise $10k for One for the World. She was PornHub's most popular female performer in 2023 and has ~10M followers on Instagram. Her post has ~15k likes, and the comments seem mostly positive.

I think this might be the class that @Richard Y Chappell🔸 teaches?

Thanks Abella and kudos to whoever introduced her to EA!

Ben_West🔸 @ 2024-12-24T21:48 (+32)

It looks like she did a giving season fundraiser for Helen Keller International, which she credits to the EA class she took. Maybe we will see her at a future EAG!

akash 🔸 @ 2024-12-26T07:55 (+6)

(Tangential but related) There is probably a strong case to be made for recruiting the help of EA-sympathetic celebrities to promote effective giving, and maybe even raise funds. I am a bit hesitant about "cause promotion" by celebrities, but maybe some version of that idea is also defensible. Turns out, someone wrote about it on the Forum a few years ago, but I don't know how much discussion there has been on this topic since then.

Julia_Wise🔸 @ 2025-01-06T20:34 (+7)

High Impact Athletes is one effort in this direction.

Karthik Tadepalli @ 2024-12-26T17:58 (+5)

I follow a lot of YouTubers and streamers who run large-scale charitable events (example, example, example) and I've always thought about how great it would be to convince them to give the money to an effective charity.

Ben_West @ 2024-04-20T19:01 (+90)

Animal Justice Appreciation Note

Animal Justice et al. v A.G of Ontario 2024 was recently decided and struck down large portions of Ontario's ag-gag law. A blog post is here. The suit was partially funded by ACE, which presumably means that many of the people reading this deserve partial credit for donating to support it.

Thanks to Animal Justice (Andrea Gonsalves, Fredrick Schumann, Kaitlyn Mitchell, Scott Tinney), co-applicants Jessica Scott-Reid and Louise Jorgensen, and everyone who supported this work!

Ben_West @ 2024-04-17T16:55 (+76)

Marcus Daniell appreciation note

@Marcus Daniell, cofounder of High Impact Athletes, came back from knee surgery and is donating half of his prize money this year. He projects raising $100,000. Through a partnership with Momentum, people can pledge to donate for each point he gets; he has raised $28,000 through this so far. It's cool to see this, and I'm wishing him luck for his final year of professional play!

GraceAdams @ 2024-04-18T00:35 (+8)

I was lucky enough to see Marcus play this year at the Australian Open, and have pledged alongside him! Marcus is so hardworking - in tennis alongside his work at High Impact Athletes! Go Marcus!!!

NickLaing @ 2024-04-18T23:27 (+2)

New Zealand let's go!

Ben_West @ 2024-04-24T17:22 (+71)

First in-ovo sexing in the US

Egg Innovations announced that they are "on track to adopt the technology in early 2025." Approximately 300 million male chicks are ground up alive in the US each year (since only female chicks are valuable) and in-ovo sexing would prevent this.

UEP originally promised to eliminate male chick culling by 2020; needless to say, they didn't keep that commitment. But better late than never!

Congrats to everyone working on this, including @Robert - Innovate Animal Ag, who founded an organization devoted to pushing this technology.[1]

- ^

Egg Innovations says they can't disclose details about who they are working with for NDA reasons; if anyone has more information about who deserves credit for this, please comment!

Julia_Wise @ 2024-04-27T21:10 (+6)

For others who were curious about what time difference this makes: looks like sex identification is possible at 9 days after the egg is laid, vs 21 days for the egg to hatch (plus an additional ~2 days between fertilization and the laying of the egg.) Chicken embryonic development is really fast, with some stages measured in hours rather than days.

Holly Morgan @ 2024-04-27T21:42 (+6)

I asked Google when chicken embryos start to feel pain and this was the first result (i.e. I didn't look hard and I didn't anchor on a figure):

A recent study by the Technical University of Munich in Germany measured chicken embryos' heart rate, brain activity, blood pressure and movements in response to potentially painful stimuli like heat and electricity and concluded that they didn't seem to feel them until at least day 13. (14 Oct 2023)

Gina_Stuessy @ 2024-04-26T06:41 (+3)

How many chicks per year will Egg Innovations' change save? (The announcement link is blocked for me.)

Holly Morgan @ 2024-04-27T19:38 (+4)

Egg Innovations, which sells 300 million free-range and pasture-raised eggs a year

This interview with the CEO suggests that Egg Innovations are just in the laying (not broiler) business and that each hen produces ~400 eggs over her lifetime. So this will save ~750,000 chicks a year?
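The ~750,000 figure appears to come from dividing annual egg sales by lifetime eggs per hen, then counting one male chick per laying hen. A rough sketch of that arithmetic, assuming a roughly 1:1 sex ratio at hatch and a steady-state flock (neither assumption is stated in the interview):

```python
# Back-of-envelope estimate; inputs are the approximate figures quoted above.
EGGS_SOLD_PER_YEAR = 300_000_000   # Egg Innovations' stated annual egg sales
EGGS_PER_HEN_LIFETIME = 400        # approximate figure from the CEO interview
MALES_PER_FEMALE_HATCHED = 1.0     # assumption: roughly 1:1 sex ratio at hatch


def male_chicks_per_year(eggs_per_year, eggs_per_hen, males_per_female):
    """Estimate male chicks hatched per year to supply the laying flock."""
    # In steady state, hens needed per year = annual eggs / lifetime eggs per hen.
    replacement_hens_per_year = eggs_per_year / eggs_per_hen
    return replacement_hens_per_year * males_per_female


estimate = male_chicks_per_year(
    EGGS_SOLD_PER_YEAR, EGGS_PER_HEN_LIFETIME, MALES_PER_FEMALE_HATCHED
)
print(f"{estimate:,.0f}")  # 750,000
```

The result scales linearly with each input, so a lower lifetime egg count per hen would raise the estimate proportionally.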

Ben_West @ 2024-04-27T17:24 (+2)

I don't think they say, unfortunately.

Nathan Young @ 2024-04-24T21:03 (+1)

Wow this is wonderful news.

Ben_West @ 2022-03-07T16:37 (+61)

Startups aren't good for learning

I fairly frequently have conversations with people who are excited about starting their own project and, within a few minutes, convince them that they would learn less starting a project than they would working for someone else. I think this is basically the only opinion I have where I can regularly convince EAs to change their mind in a few minutes of discussion and, since there is now renewed interest in starting EA projects, it seems worth trying to write down.

It's generally accepted that optimal learning environments have a few properties:

- You are doing something that is just slightly too hard for you.

    - In startups, you do whatever needs to get done. This will often be things that are way too easy (answering a huge number of support requests) or way too hard (pitching a large company CEO on your product when you've never even sold bubblegum before).

    - Established companies, by contrast, put substantial effort into slotting people into roles that are approximately at their skill level (though you still usually need to put in proactive effort to learn things at an established company).

- Repeatedly practicing a skill in "chunks"

    - Similar to the last point, established companies have a "rhythm": e.g. for one month per year, everyone has a priority of writing up reflections on how the sales cycle is going, commenting on each other's writeups, and updating their own. Startups do things by the seat of their pants, which means employees are usually rapidly switching between tasks.

- Feedback from experts/mentorship

    - Startup accelerators like YCombinator partially address this, but a defining characteristic of starting your own project is still that you are doing the work without guidance/oversight.

Moreover, even supposing you learn more at a startup, it's worth thinking about what it actually is you learn. I know way more about the laws regarding healthcare insurance than I did before starting a company, but that knowledge isn't super useful to me outside the startup context.

This isn't a 100% universal knockdown argument – some established companies suck for professional development, and some startups are really great. But by default, I would expect startups to be worse for learning.

imben @ 2022-03-26T22:35 (+7)

I think I agree with this. Two things that might make starting a startup a better learning opportunity than your alternative, in spite of it being a worse learning environment:

- You are undervalued by the job market (so you can get more opportunities to do cool things by starting your own thing)

- You work harder in your startup because you care about it more (so you get more productive hours of learning)

Dave Cortright @ 2022-03-27T00:12 (+1)

It depends on what you want to learn. At a startup, people will often get a lot more breadth of scope than they would otherwise in an established company. Yes, you might not have in-house mentors or seasoned pros to learn from, but these days motivated people can fill in the holes outside the org.

Yonatan Cale @ 2022-03-08T23:47 (+1)

It depends what you want to learn

As you said.

- Founding a startup is a great way to learn how to found a startup.

- Working as a backend engineer in some company is a great way to learn how to be a backend engineer in some company.

(I don't see why to break it up more than that)

Ben_West @ 2023-09-25T22:45 (+59)

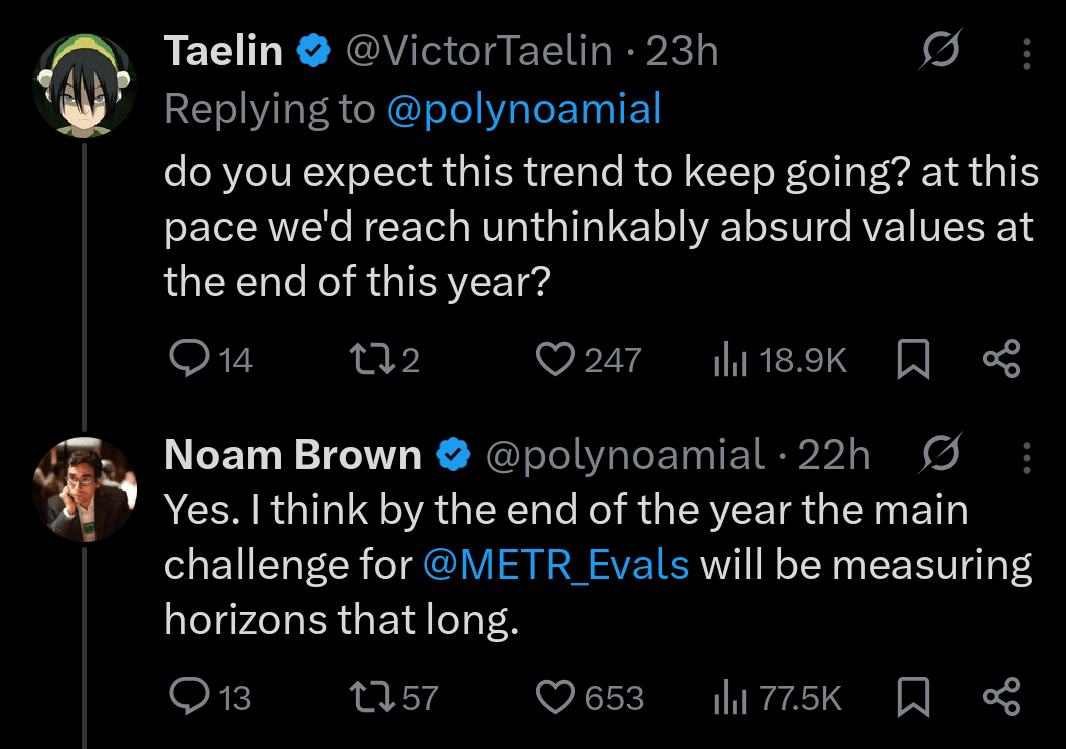

Sam Bankman-Fried's trial is scheduled to start October 3, 2023, and Michael Lewis’s book about FTX comes out the same day. My hope and expectation is that neither will be focused on EA,[1] but several people have recently asked me whether they should prepare anything, so I wanted to quickly record my thoughts.

The Forum feels like it’s in a better place to me than when FTX declared bankruptcy: the moderation team at the time was Lizka, Lorenzo, and myself, but it is now six people, and they’ve put in a number of processes to make it easier to deal with a sudden growth in the number of heated discussions. We have also made a number of design changes, notably to the community section.

CEA has also improved our communications and legal processes so we can be more responsive to news, if we need to (though some of the constraints mentioned here are still applicable).

Nonetheless, I think there’s a decent chance that viewing the Forum, Twitter, or news media could become stressful for some people, and you may want to preemptively create a plan for engaging with that in a healthy way.

- ^

This market is thinly traded but is currently predicting that Lewis’s book will not explicitly assert that Sam misused customer funds because of “ends justify the means” reasoning

Tobias Häberli @ 2023-09-26T06:04 (+25)

My hope and expectation is that neither will be focused on EA

I'd be surprised [p<0.1] if EA was not a significant focus of the Michael Lewis book – but agree that it's unlikely to be the major topic. Many leaders at FTX and Alameda Research are closely linked to EA. SBF often, and publicly, said that effective altruism was a big reason for his actions. His connection to EA is interesting both for understanding his motivation and as a story-telling element. There are Manifold prediction markets on whether the book would mention 80'000h (74%), Open Philanthropy (74%), and GiveWell (80%), but these markets aren't traded a lot and are not very informative.[1]

This video titled The Fake Genius: A $30 BILLION Fraud (2.8 million views, posted 3 weeks ago) might give a glimpse of how EA could be handled. The video touches on EA but isn't centred on it. It discusses the role EAs played in motivating SBF to do earning to give, and in starting Alameda Research and FTX. It also points out that, after the fallout at Alameda Research, 'higher-ups' at CEA were warned about SBF but supposedly ignored the warnings. Overall, the video is mainly interested in the mechanisms of how the suspected fraud happened, where EA is only one piece of the puzzle. One can equally get a sense of "EA led SBF to do fraud" as "SBF used EA as a front to do fraud".

ETA:

The book description[2] mentions "philanthropy", makes it clear that it's mainly about SBF and not FTX as a firm, and describes the book as partly a psychological portrait.

- ^

I also created a similar market for CEA, but with 2 mentions as the resolving criteria. One mention is very likely as SBF worked briefly for them.

- ^

"In Going Infinite Lewis sets out to answer this question, taking readers into the mind of Bankman-Fried, whose rise and fall offers an education in high-frequency trading, cryptocurrencies, philanthropy, bankruptcy, and the justice system. Both psychological portrait and financial roller-coaster ride, Going Infinite is Michael Lewis at the top of his game, tracing the mind-bending trajectory of a character who never liked the rules and was allowed to live by his own—until it all came undone."

Sean_o_h @ 2023-09-26T13:51 (+11)

Yeah, unfortunately I suspect that "he claimed to be an altruist doing good! As part of this weird framework/community!" is going to be a substantial part of what makes this an interesting story for writers/media, and what makes it more interesting than "he was doing criminal things in crypto" (which I suspect is just not that interesting on its own at this point, even at such a large scale).

DavidNash @ 2023-09-27T14:40 (+24)

The Panorama episode briefly mentioned EA. Peter Singer spoke for a couple of minutes and EA was mainly viewed as charity that would be missing out on money. There seemed to be a lot more interest in the internal discussions within FTX, crypto drama, the politicians, celebrities etc.

Maybe Panorama is an outlier but potentially EA is not that interesting to most people or seemingly too complicated to explain if you only have an hour.

Nathan Young @ 2023-09-27T16:59 (+12)

Yeah I was interviewed for a podcast by a Canadian station on this topic (cos a Canadian hedge fund was very involved). iirc they had 6 episodes but dropped the EA angle because it was too complex.

Sean_o_h @ 2023-09-27T15:08 (+2)

Good to know, thank you.

joshcmorrison @ 2023-09-28T20:48 (+10)

Agree with this and also with the point below that the EA angle is kind of too complicated to be super compelling for a broad audience. I thought this New Yorker piece's discussion (which involved EA a decent amount in a way I thought was quite fair -- https://www.newyorker.com/magazine/2023/10/02/inside-sam-bankman-frieds-family-bubble) might give a sense of magnitude (though the NYer audience is going to be more interested in these sorts of nuances than most).

The other factors I think are: 1. to what extent there are vivid new tidbits or revelations in Lewis's book that relate to EA and 2. the drama around Caroline Ellison and other witnesses at trial and the extent to which that is connected to EA; my guess is the drama around the cooperating witnesses will seem very interesting on a human level, though I don't necessarily think that will point towards the effective altruism community specifically.

quinn @ 2023-09-26T20:03 (+5)

Michael Lewis wouldn't do it as a gotcha/sneer, but this is a reason I'll be upset if Adam McKay ends up with the movie.

Tristan Williams @ 2023-09-28T20:47 (+10)

Update: the court ruled SBF can't make reference to his philanthropy

Ben_West @ 2023-09-26T15:35 (+5)

Yeah, "touches on EA but isn't centred on it" is my modal prediction for how major stories will go. I expect that more minor stories (e.g. the daily "here's what happened on day n of the trial" story) will usually not mention EA. But obviously it's hard to predict these things with much confidence.

Larks @ 2023-09-27T13:54 (+11)

If I understand this correctly, maybe not in the trial itself:

Accordingly, the defendant is precluded from referring to any alleged prior good acts by the defendant, including any charity or philanthropy, as indicative of his character or his guilt or innocence.

I guess technically the prosecution could still bring it up.

Ben_West @ 2023-09-27T15:28 (+5)

I hadn't realized that, thanks for sharing

quinn @ 2023-09-26T20:01 (+3)

(I forgot to tell JP and Lizka at EAG in NY a few weeks ago, but now's as good a time as any):

Can my profile karma total be two numbers, one for community and one for other stuff? I don't want a reader to think my actual work is valuable to people in proportion to my EA Forum karma; as far as I can tell, 3-5x as much of my karma is community-sourced as comes from my object-level posts. People should look at my profile as "this guy procrastinates through PVP on social media like everyone else, he should work harder on things that matter".

Ben_West @ 2023-09-26T21:17 (+7)

Yeah, I kind of agree that we should do something here; maybe the two-dimensional thing you mentioned, or maybe community karma should count less/not at all.

Could you add a comment here?

Tristan Williams @ 2023-09-27T10:18 (+7)

Could see a number of potentially good solutions here, but think the "not at all" is possibly not the greatest idea. Creating a separate community karma could lead to a sort of system of social clout that may not be desirable, but I also think having no way to signal who has and has not in the past been a major contributor to the community as a topic would be more of a failure mode because I often use it to get a deeper sense of how to think about the claims in a given comment/post.

quinn @ 2023-09-27T16:45 (+3)

There would be some UX ways to make community clout feel lower status than the other clout. I agree with you that having community clout means more investment / should be preferred over a new account which for all you know is a driveby dunk/sneer after wandering in on Twitter.

I'll cc this to my feature request in the proper thread.

Michelle_Hutchinson @ 2023-09-26T02:56 (+3)

Thank you for the prompt.

Ben_West @ 2022-10-28T17:13 (+55)

The Forum moderation team has been made aware that Kerry Vaughn published a tweet thread that, among other things, accuses a Forum user of doing things that violate our norms. Most importantly:

Where he crossed the line was his decision to dox people who worked at Leverage or affiliated organizations by researching the people who worked there and posting their names to the EA forum

The user in question said this information came from searching LinkedIn for people who had listed themselves as having worked at Leverage and related organizations.

This is not "doxing" and it’s unclear to us why Kerry would use this term: for example, there was no attempt to connect anonymous and real names, which seems to be a key part of the definition of “doxing”. In any case, we do not consider this to be a violation of our norms.

At one point Forum moderators got a report that some of the information about these people was inaccurate. We tried to get in touch with the then-anonymous user, and when we were unable to, we redacted the names from the comment. Later, the user noticed the change and replaced the names. One of CEA’s staff asked the user to encode the names to allow those people more privacy, and the user did so.

Kerry says that a former Leverage staff member “requests that people not include her last name or the names of other people at Leverage” and indicates the user broke this request. However, the post in question requests that the author’s last name not be used in reference to that post, rather than in general. The comment in question doesn’t refer to the former staff member’s post at all, and was originally written more than a year before the post. So we do not view this comment as disregarding someone’s request for privacy.

Kerry makes several other accusations, and we similarly do not believe them to be violations of this Forum's norms. We have shared our analysis of these accusations with Leverage; they are, of course, entitled to disagree with us (and publicly state their disagreement), but the moderation team wants to make clear that we take enforcement of our norms seriously.

We would also like to take this opportunity to remind everyone that CEA’s Community Health team serves as a point of contact for the EA community, and if you believe harassment or other issues are occurring we encourage you to reach out to them.

Ben_West @ 2023-01-15T18:25 (+54)

Possible Vote Brigading

We have received an influx of people creating accounts to cast votes and comments over the past week, and we are aware that people who feel strongly about human biodiversity sometimes vote brigade on sites where the topic is being discussed. Please be aware that voting and discussion about some topics may not be representative of the normal EA Forum user base.

Habryka @ 2023-01-15T20:31 (+46)

Huh, seems like you should just revert those votes, or turn off voting for new accounts. Seems better than just having people be confused about vote totals.

Jason @ 2023-01-16T02:15 (+23)

And maybe add a visible "new account" flag -- I understand not wanting to cut off existing users creating throwaways, but some people are using screenshots of forum comments as evidence of what EAs in general think.

Larks @ 2023-01-16T17:38 (+5)

Arguably also beneficial if you thought that we should typically make an extra effort to be tolerant of 'obvious' questions from new users.

Ben_West @ 2023-01-16T17:42 (+2)

Thanks! Yeah, this is something we've considered, usually in the context of trying to make the Forum more welcoming to newcomers, but this is another reason to prioritize that feature.

Peter Wildeford @ 2023-01-16T19:00 (+1)

I agree.

Ben_West @ 2023-01-16T02:16 (+9)

Yeah, I think we should probably go through and remove people who are obviously brigading (eg tons of votes in one hour and no other activity), but I'm hesitant to do too much more retroactively. I think it's possible that next time we have a discussion that has a passionate audience outside of EA we should restrict signups more, but that obviously has costs.

Habryka @ 2023-01-16T03:03 (+6)

When you purge user accounts you automatically revoke their votes. I wouldn't be very hesitant to do that.

Ben_West @ 2023-01-16T16:40 (+6)

How do you differentiate someone who is sincerely engaging and happens to have just created an account now from someone who just wants their viewpoint to seem more popular and isn't interested in truth seeking?

Or are you saying we should just purge accounts that are clearly in the latter category, and accept that there will be some which are actually in the latter category but we can't distinguish from the former?

Habryka @ 2023-01-16T19:17 (+5)

I think being like "sorry, we've reverted votes from recently signed-up accounts because we can't distinguish them" seems fine. Also, in my experience abusive voting patterns are usually very obvious, where people show up and only vote on one specific comment or post, or on content of one specific user, or vote so fast that it seems impossible for them to have read the content they are voting on.
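The three patterns described above (voting on only one item, voting only on one user's content, or voting faster than anyone could read) could be checked roughly as follows. This is only a sketch under stated assumptions: the `Vote` record, the `suspicious_reasons` helper, and the 5-second threshold are all hypothetical and do not reflect how the Forum actually stores or screens votes.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import median


@dataclass
class Vote:
    """Hypothetical vote record; field names are illustrative only."""
    voter: str
    target_id: str        # the comment or post that was voted on
    target_author: str    # who wrote that comment or post
    cast_at: datetime


def suspicious_reasons(votes, min_median_gap_seconds=5.0):
    """Return heuristic flags for one account's votes (empty list = nothing flagged)."""
    if not votes:
        return []
    reasons = []
    targets = {v.target_id for v in votes}
    authors = {v.target_author for v in votes}
    if len(targets) == 1:
        reasons.append("single_target")   # only ever voted on one item
    elif len(authors) == 1:
        reasons.append("single_author")   # only ever voted on one user's content
    # Gaps between consecutive votes, in seconds, oldest first.
    times = sorted(v.cast_at for v in votes)
    gaps = [(later - earlier).total_seconds() for earlier, later in zip(times, times[1:])]
    if gaps and median(gaps) < min_median_gap_seconds:
        reasons.append("too_fast")        # likely voting without reading
    return reasons
```

Flags like these would only be a starting point for human review; a sincere new user who votes once would also trip `single_target`.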

Peter Wildeford @ 2023-01-16T19:00 (+5)

I agree.

Larks @ 2023-01-16T17:37 (+6)

Could you set a minimum karma threshold (or account age or something) for your votes to count? I would expect even a low threshold like 10 would solve much of the problem.

Ben_West @ 2023-01-16T17:43 (+25)

Yeah, interesting. I think we have a lot of lurkers who never get any karma and I don't want to entirely exclude them, but maybe some combo like "10 karma or your account has to be at least one week old" would be good.
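As a minimal sketch of the "10 karma or at least one week old" rule, assuming an account exposes a karma total and a creation time (the function name and constants here are hypothetical, not the Forum's actual code):

```python
from datetime import datetime, timedelta

MIN_KARMA = 10                          # threshold suggested in the thread
MIN_ACCOUNT_AGE = timedelta(weeks=1)    # alternative route for lurkers with no karma


def vote_counts(karma, created_at, now):
    """Return True if a vote from this account should count toward totals."""
    old_enough = (now - created_at) >= MIN_ACCOUNT_AGE
    return karma >= MIN_KARMA or old_enough
```

Using "or" rather than "and" keeps long-time lurkers eligible while still excluding accounts created during a brigade, at least for their first week.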

Peter Wildeford @ 2023-01-16T19:01 (+4)

Yeah I think that would be a really smart way to implement it.

pseudonym @ 2023-01-15T20:50 (+4)

Do the moderators think the effect of vote brigading reflects support from people who are pro-HBD or anti-HBD?

Ben_West🔸 @ 2026-02-02T18:43 (+49)

EA Animal Welfare Fund almost as big as Coefficient Giving FAW now?

This job ad says they raised >$10M in 2025 and are targeting $20M in 2026. CG's public Farmed Animal Welfare 2025 grants are ~$35M.

Is this right?

Cool to see the fund grow so much either way.

kierangreig🔸 @ 2026-02-04T01:09 (+25)

Agree that it’s really great to see the fund grow so much!

That said, I don’t think it’s right to say it’s almost as large as Coefficient Giving. At least not yet... :)

The 2025 total appears to exclude a number of grants (including one to Rethink Priorities) and only runs through August of that year. By comparison, Coefficient Giving’s farmed animal welfare funding in 2024 was around $70M, based on the figures published on their website.

Jeff Kaufman 🔸 @ 2026-02-03T18:02 (+9)

almost as big as Coefficient Giving

Specifically within animal welfare (this wasn't immediately clear to me, and I was very confused how CG's grants could be so low)

Ben_West🔸 @ 2026-02-03T18:47 (+4)

Ah yeah good point, I updated the text.

Ben_West @ 2023-11-29T17:51 (+49)

Thoughts on the OpenAI Board Decisions

A couple months ago I remarked that Sam Bankman-Fried's trial was scheduled to start in October, and people should prepare for EA to be in the headlines. It turned out that his trial did not actually generate much press for EA, but a month later EA is again making news as a result of recent OpenAI board decisions.

A couple quick points:

- It is often the case that people's behavior is much more reasonable than what is presented in the media. It is also sometimes the case that the reality is even stupider than what is presented. We currently don't know what actually happened, and should hold multiple hypotheses simultaneously.[1]

- It's very hard to predict the outcome of media stories. Here are a few takes I've heard; we should consider that any of these could become the dominant narrative.

    - Vinod Khosla (The Information): “OpenAI’s board members’ religion of ‘effective altruism’ and its misapplication could have set back the world’s path to the tremendous benefits of artificial intelligence”

    - John Thornhill (Financial Times): One entrepreneur who is close to OpenAI says the board was “incredibly principled and brave” to confront Altman, even if it failed to explain its actions in public. “The board is rightly being attacked for incompetence,” the entrepreneur told me. “But if the new board is composed of normal tech people, then I doubt they’ll take safety issues seriously.”

    - The Economist: “The chief lesson is the folly of policing technologies using corporate structures … Fortunately for humanity, there are bodies that have a much more convincing claim to represent its interests: elected governments”

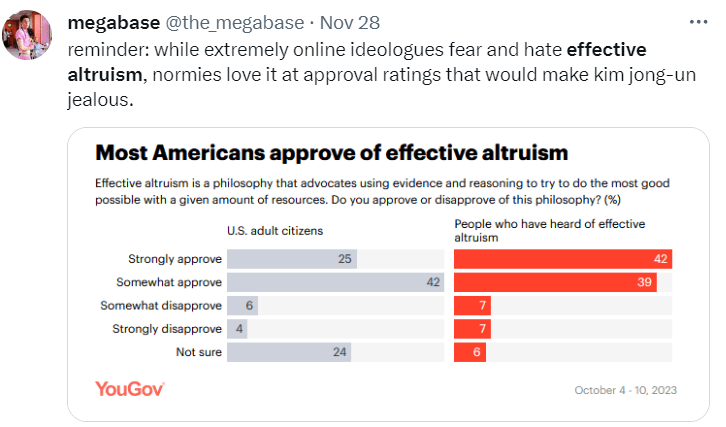

- The previous point notwithstanding, people's attention spans are extremely short, and the median outcome of a news story is ~nothing. I've commented before that FTX's collapse had little effect on the average person’s perception of EA, and we might expect a similar thing to happen here.[2]

- Animal welfare has historically been unique amongst EA causes in having a dedicated lobby that is fighting against it. While we don't yet have a HumaneWatch for AI Safety, we should be aware that people have strong interests in how AI develops, and this means that stories about AI will be treated differently from those about, say, malaria.

- It can be frustrating to feel that a group you are part of is being judged by the actions of a couple people you’ve never met nor have any strong feelings about. The flipside of this though is that we get to celebrate the victories of people we’ve never met. Here are a few things posted in the last week that I thought were cool:

    - The Against Malaria Foundation is in the middle of a nine-month bed net distribution which is expected to prevent 20 million cases of malaria, and about 40,000 deaths. (Rob Mather)

    - The Shrimp Welfare Project signed an agreement to prevent 125 million shrimps per year from having their eyes cut off and other painful farming practices. (Ula Zarosa)

    - The Belgian Senate voted to add animal welfare to their Constitution. (Bob Jacobs)

    - Scott Alexander’s recent post also has a nice summary of victories.

- ^

A collection of prediction markets about this event can be found here.

- ^

Note that the data collected here does not exclude the possibility that perception of EA was affected in some subcommunities, and it might be the case that some subcommunities (e.g. OpenAI staff) do have a changed opinion, even if the average person’s opinion is unchanged

Habryka @ 2023-11-29T22:54 (+32)

I've commented before that FTX's collapse had little effect on the average person’s perception of EA

Just for the record, I think the evidence you cited there was shoddy, and I think we are seeing continued references to FTX in basically all coverage of the OpenAI situation, showing that it did clearly have a lasting effect on the perception of EA.

Reputation is lazily-evaluated. Yes, if you ask a random person on the street what they think of you, they won't know, but when your decisions start influencing them, they will start getting informed, and we are seeing really very clear evidence that when people start getting informed, FTX is heavily influencing their opinion.

Ben_West @ 2023-11-29T23:58 (+6)

we are seeing really very clear evidence that when people start getting informed, FTX is heavily influencing their opinion.

Thanks! Could you share said evidence? The data sources I cited certainly have limitations, having access to more surveys etc. would be valuable.

Lorenzo Buonanno @ 2023-11-30T01:09 (+19)

The Wikipedia page on effective altruism mentions Bankman-Fried 11 times, and after/during the OpenAI story, it was edited to include a lot of criticism, ~half of which was written after FTX (e.g. it quotes this tweet https://twitter.com/sama/status/1593046526284410880 )

It's the first place I would go to if I wanted an independent take on "what's effective altruism?" I expect many others to do the same.

Jason @ 2023-11-30T02:41 (+18)

There are a lot of recent edits on that article by a single editor, apparently a former NY Times reporter (the edit log is public). From the edit summaries, those edits look rather unfriendly, and the article as a whole feels negatively slanted to me. So I'm not sure how much weight I'd give that article specifically.

Habryka @ 2023-11-30T00:42 (+13)

Sure, here are the top hits for "Effective Altruism OpenAI" (I did no cherry-picking, this was the first search term that I came up with, and I am just going top to bottom). Each one mentions FTX in a way that pretty clearly matters for the overall article:

- Bloomberg: "What is Effective Altruism? What does it mean for AI?"

    - "AI safety was embraced as an important cause by big-name Silicon Valley figures who believe in effective altruism, including Peter Thiel, Elon Musk and Sam Bankman-Fried, the founder of crypto exchange FTX, who was convicted in early November of a massive fraud."

- Reddit: "I think this was an Effective Altruism (EA) takeover by the OpenAI board"

    - Top comment: "I only learned about EA during the FTX debacle. And was unaware until recently of its focus on AI. Since been reading and catching up …"

- WSJ: "How a Fervent Belief Split Silicon Valley—and Fueled the Blowup at OpenAI"

    - "Coming just weeks after effective altruism’s most prominent backer, Sam Bankman-Fried, was convicted of fraud, the OpenAI meltdown delivered another blow to the movement, which believes that carefully crafted artificial-intelligence systems, imbued with the correct human values, will yield a Golden Age—and failure to do so could have apocalyptic consequences."

- Wired: "Effective Altruism Is Pushing a Dangerous Brand of ‘AI Safety’"

    - "EA is currently being scrutinized due to its association with Sam Bankman-Fried’s crypto scandal, but less has been written about how the ideology is now driving the research agenda in the field of artificial intelligence (AI), creating a race to proliferate harmful systems, ironically in the name of “AI safety.”"

- Semafor: "The AI industry turns against its favorite philosophy"

    - "The first was caused by the downfall of convicted crypto fraudster Sam Bankman-Fried, who was once among the leading figures of EA, an ideology that emerged in the elite corridors of Silicon Valley and Oxford University in the 2010s offering an alternative, utilitarian-infused approach to charitable giving."

Ben_West @ 2023-11-30T01:19 (+4)

Ah yeah sorry, the claim of the post you criticized was not that FTX isn't mentioned in the press, but rather that those mentions don't seem to actually have impacted sentiment very much.

I thought when you said "FTX is heavily influencing their opinion" you were referring to changes in sentiment, but possibly I misunderstood you – if you just mean "journalists mention it a lot" then I agree.

Habryka @ 2023-11-30T02:11 (+2)

You are also welcome to check Twitter mentions or do other analysis of people talking publicly about EA. I don't think this is a "journalist only" thing. I will take bets you will see a similar pattern.

Ben_West @ 2023-11-30T02:40 (+10)

I actually did that earlier, then realized I should clarify what you were trying to claim. I will copy the results in below, but even though they support the view that FTX was not a huge deal I want to disclaim that this methodology doesn't seem like it actually gets at the important thing.

But anyway, my original comment text:

As a convenience sample I searched twitter for "effective altruism". The first reference to FTX doesn't come until tweet 36, which is a link to this. Honestly it seems mostly like a standard anti-utilitarianism complaint; it feels like FTX isn't actually the crux.

In contrast, I see 3 e/acc-type criticisms before that, two "I like EA but this AI stuff is too weird" things (including one retweeted by Yann LeCun??), two "EA is tech-bro/not diverse" complaints and one thing about Wytham Abbey.

And this (survey discussed/criticized here):

Habryka @ 2023-11-30T03:50 (+4)

I just tried to reproduce the Twitter datapoint. Here is the first tweet when I sort by most recent:

Most tweets are negative, mostly referring to the OpenAI thing. Among the top 10 I see three references to FTX. This continues to be quite remarkable, especially given that it's been more than a year, and these tweets are quite short.

I don't know what search you did to find a different pattern. Maybe it was just random chance that I got many more than you did.

Ben_West @ 2023-11-30T05:08 (+2)

I used the default sort ("Top").

(No opinion on which is more useful; I don't use Twitter much.)

Habryka @ 2023-11-30T05:35 (+4)

Top was mostly showing me tweets from people that I follow, so my sense is it was filtered in a personalized way. I am not fully sure how it works, but it didn't seem the right type of filter.

Ben_West @ 2023-12-05T00:38 (+4)

Yeah, makes sense. Although I just tried doing the "latest" sort and went through the top 40 tweets without seeing a reference to FTX/SBF.

My guess is that this filter just (unsurprisingly) shows you whatever random thing people are talking about on twitter at the moment, and it seems like the random EA-related thing of today is this, which doesn't mention FTX.

Probably you need some longitudinal data to have this be useful.

Nathan Young @ 2023-12-01T14:19 (+2)

I would guess too that these two events have made it much easier to reference EA in passing. eg I think this article wouldn't have been written 18 months ago. https://www.politico.com/news/2023/10/13/open-philanthropy-funding-ai-policy-00121362

So I think there is a real jump of notoriety once the journalistic class knows who you are. And they now know who we are. "EA, the social movement involved in the FTX and OpenAI crises" is not a good epithet.

Ben_West🔸 @ 2024-11-19T18:20 (+43)

EA in a World Where People Actually Listen to Us

I had considered calling the third wave of EA "EA in a World Where People Actually Listen to Us".

Leopold's situational awareness memo has become a salient example of this for me. I used to sometimes think that arguments about whether we should avoid discussing the power of AI in order to avoid triggering an arms race were a bit silly and self important because obviously defense leaders aren't going to be listening to some random internet charity nerds and changing policy as a result.

Well, they are and they are. Let's hope it's for the better.

Jan_Kulveit @ 2024-11-20T07:52 (+59)

Seems plausible the impact of that single individual act is so negative that aggregate impact of EA is negative.

I think people should reflect seriously upon this possibility and not fall prey to wishful thinking (let's hope speeding up the AI race and making it superpower powered is the best intervention! it's better if everyone warning about this was wrong and Leopold is right!).

The broader story here is that EA prioritization methodology is really good for finding highly leveraged spots in the world, but there isn't a good methodology for figuring out what to do in such places, and there also isn't a robust pipeline for promoting virtues and virtuous actors to such places.

sapphire @ 2024-11-20T08:25 (+9)

I spent all day in tears when I read the congressional report. This is a nightmare. I was literally hoping to wake up from a bad dream.

I really hope people don't suffer for our sins.

How could we have done something so terrible. Starting an arms race and making literal war more likely.

Charlie_Guthmann @ 2024-11-20T08:13 (+8)

| and there also isn't a robust pipeline for promoting virtues and virtuous actors to such places.

this ^

JWS 🔸 @ 2024-11-20T08:40 (+10)

I'm not sure to what extent the Situational Awareness Memo or Leopold himself are representatives of 'EA'

On the pro-side:

- Leopold thinks AGI is coming soon, will be a big deal, and that solving the alignment problem is one of the world's most important priorities

- He used to work at GPI & FTX, and formerly identified with EA

- He (almost certainly) personally knows lots of EA people in the Bay

On the con-side:

- EA isn't just AI Safety (yet), so having short timelines/high importance on AI shouldn't be sufficient to make someone an EA?[1]

- EA shouldn't also just refer to a specific subset of the Bay Culture (please), or at least we need some more labels to distinguish different parts of it in that case

- Many EAs have disagreed with various parts of the memo, e.g. Gideon's well received post here

- Since his EA institutional history he moved to OpenAI (mixed)[2] and now runs an AGI investment firm.

- By self-identification, I'm not sure I've seen Leopold identify as an EA at all recently.

This again comes down to the nebulousness of what 'being an EA' means.[3] I have no doubts at all that, given what Leopold thinks is the way to have the most impact he'll be very effective at achieving that.

Further, on your point, I think there's a reason to suspect that something like situational awareness went viral in a way that, say, Rethink Priorities' Moral Weight project didn't - the promise many people see in powerful AI is power itself, and that's always going to be interesting for people to follow, so I'm not sure that situational awareness becoming influential makes it more likely that other 'EA' ideas will.

[1] Plenty of e/accs have these two beliefs as well, they just expect alignment by default, for instance

[2] I view OpenAI as tending implicitly/explicitly anti-EA, though I don't think there was an explicit 'purge', I think the culture/vision of the company was changed such that card-carrying EAs didn't want to work there any more

[3] The 3 big definitions I have (self-identification, beliefs, actions) could all easily point in different directions for Leopold

Ben_West🔸 @ 2024-11-21T07:29 (+12)

In my post, I suggested that one possible future is that we stay at the "forefront of weirdness." Calculating moral weights, to use your example.

I could imagine though that the fact that our opinions might be read by someone with access to the nuclear codes changes how we do things.

I wish there was more debate about which of these futures is more desirable.

(This is what I was trying to get out with my original post. I'm not trying to make any strong claims about whether any individual person counts as "EA".)

David Mathers🔸 @ 2024-11-20T08:47 (+11)

I think he is pretty clearly an EA given he used to help run the Future Fund, or at most an only very recently ex-EA. Having said that, it's not clear to me this means that "EAs" are at fault for everything he does.

JWS 🔸 @ 2024-11-20T09:01 (+5)

Yeah again I just think this depends on one's definition of EA, which is the point I was trying to make above.

Many people have turned away from EA, both the beliefs, institutions, and community in the aftermath of the FTX collapse. Even Ben Todd seems to not be an EA by some definitions any more, be that via association or identification. Who is to say Leopold is any different, or has not gone further? What then is the use of calling him EA, or using his views to represent the 'Third Wave' of EA?

I guess from my PoV what I'm saying is that I'm not sure there's much 'connective tissue' between Leopold and myself, so when people use phrases like "listen to us" or "How could we have done" I end up thinking "who the heck is we/us?"

MichaelDickens @ 2024-11-20T16:10 (+9)

I don't want to claim all EAs believe the same things, but if the congressional commission had listened to what you might call the "central" EA position, it would not be recommending an arms race because it would be much more concerned about misalignment risk. The overwhelming majority of EAs involved in AI safety seem to agree that arms races are bad and misalignment risk is the biggest concern (within AI safety). So if anything this is a problem of the commission not listening to EAs, or at least selectively listening to only the parts they want to hear.

Habryka @ 2024-11-20T16:49 (+26)

In most cases this is a rumors based thing, but I have heard that a substantial chunk of the OP-adjacent EA-policy space has been quite hawkish for many years, and at least the things I have heard is that a bunch of key leaders "basically agreed with the China part of situational awareness".

Again, people should really take this with a double-dose of salt, I am personally at like 50/50 of this being true, and I would love people like lukeprog or Holden or Jason Matheny or others high up at RAND to clarify their positions here. I am not attached to what I believe, but I have heard these rumors from sources that didn't seem crazy (but also various things could have been lost in a game of telephone, and being very concerned about China doesn't result in endorsing a "Manhattan project to AGI", though the rumors that I have heard did sound like they would endorse that)

Less rumor-based, I also know that Dario has historically been very hawkish, and "needing to beat China" was one of the top justifications historically given for why Anthropic does capability research. I have heard this from many people, so feel more comfortable saying it with fewer disclaimers, but am still only like 80% on it being true.

Overall, my current guess is that indeed, a large-ish fraction of the EA policy people would have pushed for things like this, and at least didn't seem like they would push back on it that much. My guess is "we" are at least somewhat responsible for this, and there is much less of a consensus against a U.S.-China arms race in US governance among EAs than one might think, and so the above is not much evidence that there was no listening or only very selective listening to EAs.

MichaelDickens @ 2024-11-20T23:07 (+9)

I looked thru the congressional commission report's list of testimonies for plausibly EA-adjacent people. The only EA-adjacent org I saw was CSET, which had two testimonies (1, 2). From a brief skim, neither one looked clearly pro- or anti-arms race. They seemed vaguely pro-arms race on vibes but I didn't see any claims that look like they were clearly encouraging an arms race—but like I said, I only briefly skimmed them, so I could have missed a lot.

Dicentra @ 2024-11-20T22:36 (+6)

This is inconsistent with my impressions and recollections. Most clearly, my sense is that CSET was (maybe still is, not sure) known for being very anti-escalatory towards China, and did substantial early research debunking hawkish views about AI progress in China, demonstrating it was less far along than was widely believed in DC (and that EAs were involved in this, because they thought it was true and important, because they thought current false fears in the greater natsec community were enhancing arms race risks) (and this was when Jason was leading CSET, and OP supporting its founding). Some of the same people were also supportive of export controls, which are more ambiguous-sign here.

Habryka @ 2024-11-20T23:14 (+9)

The export controls seemed like a pretty central example of hawkishness towards China and a reasonable precursor to this report. The central motivation in all that I have written related to them was about beating China in AI capabilities development.

Of course no one likes a symmetric arms race, but the question is did people favor the "quickly establish overwhelming dominance towards China by investing heavily in AI" or the "try to negotiate with China and not set an example of racing towards AGI" strategy. My sense is many people favored the former (though definitely not all, and I am not saying that there is anything like consensus, my sense is it's a quite divisive topic).

To support your point, I have seen much writing from Helen Toner on trying to dispel hawkishness towards China, and have been grateful for that. Against your point, at the recent "AI Security Forum" in Vegas, many x-risk concerned people expressed very hawkish opinions.

Dicentra @ 2024-11-21T00:01 (+3)

Yeah re the export controls, I was trying to say "I think CSET was generally anti-escalatory, but in contrast, the effect of their export controls work was less so" (though I used the word "ambiguous" because my impression was that some relevant people saw a pro of that work that it also mostly didn't directly advance AI progress in the US, i.e. it set China back without necessarily bringing the US forward towards AGI). To use your terminology, my impression is some of those people were "trying to establish overwhelming dominance over China" but not by "investing heavily in AI".

MichaelDickens @ 2024-11-20T17:00 (+6)

It looks to me like the online EA community, and the EAs I know IRL, have a fairly strong consensus that arms races are bad. Perhaps there's a divide in opinions with most self-identified EAs on one side, and policy people / company leaders on the other side—which in my view is unfortunate since the people holding the most power are also the most wrong.

(Is there some systematic reason why this would be true? At least one part of it makes sense: people who start AGI companies must believe that building AGI is the right move. It could also be that power corrupts, or something.)

So maybe I should say the congressional commission should've spent less time listening to EA policy people and more time reading the EA Forum. Which obviously was never going to happen but it would've been nice.

AGB 🔸 @ 2024-11-20T18:47 (+11)

Slightly independent to the point Habryka is making, which may well also be true, my anecdotal impression is that the online EA community / EAs I know IRL were much bigger on 'we need to beat China' arguments 2-4 years ago. If so, simple lag can also be part of the story here. In particular I think it was the mainstream position just before ChatGPT was released, and partly as a result I doubt an 'overwhelming majority of EAs involved in AI safety' disagree with it even now.

Example from August 2022:

https://www.astralcodexten.com/p/why-not-slow-ai-progress

So maybe (the argument goes) we should take a cue from the environmental activists, and be hostile towards AI companies...

This is the most common question I get on AI safety posts: why isn’t the rationalist / EA / AI safety movement doing this more? It’s a great question, and it’s one that the movement asks itself a lot...

Still, most people aren’t doing this. Why not?

Later, talking about why attempting a regulatory approach to avoiding a race is futile:

The biggest problem is China. US regulations don’t affect China. China says that AI leadership is a cornerstone of their national security - both as a massive boon to their surveillance state, and because it would boost their national pride if they could beat America in something so cutting-edge.

So the real question is: which would we prefer? OpenAI gets superintelligence in 2040? Or Facebook gets superintelligence in 2044? Or China gets superintelligence in 2048?

Might we be able to strike an agreement with China on AI, much as countries have previously made arms control or climate change agreements? This is . . . not technically prevented by the laws of physics, but it sounds really hard. When I bring this challenge up with AI policy people, they ask “Harder than the technical AI alignment problem?” Okay, fine, you win this one.

I feel like a generic non-EA policy person reading that post could well end up where the congressional commission landed? It's right there in the section that most explicitly talks about policy.

Ben_West🔸 @ 2024-11-21T21:22 (+5)

Huh, fwiw this is not my anecdotal experience. I would suggest that this is because I spend more time around doomers than you and doomers are very influenced by Yudkowsky's "don't fight over which monkey gets to eat the poison banana first" framing, but that seems contradicted by your example being ACX, who is also quite doomer-adjacent.

AGB 🔸 @ 2024-11-21T21:47 (+9)

That sounds plausible. I do think of ACX as much more 'accelerationist' than the doomer circles, for lack of a better term. Here's a more recent post from October 2023 informing that impression; the excerpt below probably does a better job than I can do of adding nuance to Scott's position.

https://www.astralcodexten.com/p/pause-for-thought-the-ai-pause-debate

Second, if we never get AI, I expect the future to be short and grim. Most likely we kill ourselves with synthetic biology. If not, some combination of technological and economic stagnation, rising totalitarianism + illiberalism + mobocracy, fertility collapse and dysgenics will impoverish the world and accelerate its decaying institutional quality. I don’t spend much time worrying about any of these, because I think they’ll take a few generations to reach crisis level, and I expect technology to flip the gameboard well before then. But if we ban all gameboard-flipping technologies (the only other one I know is genetic enhancement, which is even more bannable), then we do end up with bioweapon catastrophe or social collapse. I’ve said before I think there’s a ~20% chance of AI destroying the world. But if we don’t get AI, I think there’s a 50%+ chance in the next 100 years we end up dead or careening towards Venezuela. That doesn’t mean I have to support AI accelerationism because 20% is smaller than 50%. Short, carefully-tailored pauses could improve the chance of AI going well by a lot, without increasing the risk of social collapse too much. But it’s something on my mind.

MichaelDickens @ 2024-11-20T19:59 (+4)

Scott's last sentence seems to be claiming that avoiding an arms race is easier than solving alignment (and it would seem to follow from that that we shouldn't race). But I can see how a politician reading this article wouldn't see that implication.

Habryka @ 2024-11-20T17:12 (+10)

Yep, my impression is that this is an opinion that people mostly adopted after spending a bunch of time in DC and engaging with governance stuff, and so is not something represented in the broader EA population.

My best explanation is that when working in governance, being pro-China is just very costly, and especially combining the belief that AI will be very powerful, and there is no urgency to beat China to it, seems very anti-memetic in DC, and so people working in the space started adopting those stances.

But I am not sure. There are also non-terrible arguments for beating China being really important (though they are mostly premised on alignment being relatively easy, which seems very wrong to me).

MichaelDickens @ 2024-11-20T17:26 (+2)

(though they are mostly premised on alignment being relatively easy, which seems very wrong to me)

Not just alignment being easy, but alignment being easy with overwhelmingly high probability. It seems to me that pushing for an arms race is bad even if there's only a 5% chance that alignment is hard.

Habryka @ 2024-11-20T17:53 (+4)

I think most of those people believe that "having an AI aligned to 'China's values'" would be comparably bad to a catastrophic misalignment failure, and if you believe that, 5% is not sufficient, if you think there is a greater than 5% of China ending up with "aligned AI" instead.

MichaelDickens @ 2024-11-20T20:03 (+3)

I think that's not a reasonable position to hold but I don't know how to constructively argue against it in a short comment so I'll just register my disagreement.

Like, presumably China's values include humans existing and having mostly good experiences.

Habryka @ 2024-11-20T20:39 (+3)

Yep, I agree with this, but it appears nevertheless a relatively prevalent opinion among many EAs working in AI policy.

Habryka @ 2024-11-22T02:00 (+4)

A somewhat relevant article that I discovered while researching this: Longtermists Are Pushing a New Cold War With China - Jacobin

The Biden administration’s decision, in October of last year, to impose drastic export controls on semiconductors, stands as one of its most substantial policy changes so far. As Jacobin‘s Branko Marcetic wrote at the time, the controls were likely the first shot in a new economic Cold War between the United States and China, in which both superpowers (not to mention the rest of the world) will feel the hurt for years or decades, if not permanently.

[...]

The idea behind the policy, however, did not emerge from the ether. Three years before the current administration issued the rule, Congress was already receiving extensive testimony in favor of something much like it. The lengthy 2019 report from the National Security Commission on Artificial Intelligence suggests unambiguously that the “United States should commit to a strategy to stay at least two generations ahead of China in state-of-the-art microelectronics” and

The commission report makes repeated references to the risks posed by AI development in “authoritarian” regimes like China’s, predicting dire consequences as compared with similar research and development carried out under the auspices of liberal democracy. (Its hand-wringing in particular about AI-powered, authoritarian Chinese surveillance is ironic, as it also ominously exhorts, “The [US] Intelligence Community (IC) should adopt and integrate AI-enabled capabilities across all aspects of its work, from collection to analysis.”)

These emphases on the dangers of morally misinformed AI are no accident. The commission head was Eric Schmidt, tech billionaire and contributor to Future Forward, whose philanthropic venture Schmidt Futures has both deep ties with the longtermist community and a record of shady influence over the White House on science policy. Schmidt himself has voiced measured concern about AI safety, albeit tinged with optimism, opining that “doomsday scenarios” of AI run amok deserve “thoughtful consideration.” He has also coauthored a book on the future risks of AI, with no lesser an expert on morally unchecked threats to human life than notorious war criminal Henry Kissinger.

Also of note is commission member Jason Matheny, CEO of the RAND Corporation. Matheny is an alum of the longtermist Future of Humanity Institute (FHI) at the University of Oxford, who has claimed existential risk and machine intelligence are more dangerous than any historical pandemics and “a neglected topic in both the scientific and governmental communities, but it’s hard to think of a topic more important than human survival.” This commission report was not his last testimony to Congress on the subject, either: in September 2020, he would individually speak before the House Budget Committee urging “multilateral export controls on the semiconductor manufacturing equipment needed to produce advanced chips,” the better to preserve American dominance in AI.

Congressional testimony and his position at the RAND Corporation, moreover, were not Matheny’s only channels for influencing US policy on the matter. In 2021 and 2022, he served in the White House’s Office of Science and Technology Policy (OSTP) as deputy assistant to the president for technology and national security and as deputy director for national security (the head of the OSTP national security division). As a senior figure in the Office — to which Biden has granted “unprecedented access and power” — advice on policies like the October export controls would have fallen squarely within his professional mandate.

The most significant restrictions advocates (aside from Matheny) to emerge from CSET, however, have been Saif Khan and Kevin Wolf. The former is an alum from the Center and, since April 2021, the director for technology and national security at the White House National Security Council. The latter has been a senior fellow at CSET since February 2022 and has a long history of service in and connections with US export policy. He served as assistant secretary of commerce for export administration from 2010–17 (among other work in the field, both private and public), and his extensive familiarity with the US export regulation system would be valuable to anyone aspiring to influence policy on the subject. Both would, before and after October, champion the semiconductor controls.

At CSET, Khan published repeatedly on the topic, time and again calling for the United States to implement semiconductor export controls to curb Chinese progress on AI. In March 2021, he testified before the Senate, arguing that the United States must impose such controls “to ensure that democracies lead in advanced chips and that they are used for good.” (Paradoxically, in the same breath the address calls on the United States to both “identify opportunities to collaborate with competitors, including China, to build confidence and avoid races to the bottom” and to “tightly control exports of American technology to human rights abusers,” such as… China.)

Among Khan’s coauthors was aforementioned former congressional hopeful and longtermist Carrick Flynn, previously assistant director of the Center for the Governance of AI at FHI. Flynn himself individually authored a CSET issue brief, “Recommendations on Export Controls for Artificial Intelligence,” in February 2020. The brief, unsurprisingly, argues for tightened semiconductor export regulation much like Khan and Matheny.

This February, Wolf too provided a congressional address on “Advancing National Security and Foreign Policy Through Sanctions, Export Controls, and Other Economic Tools,” praising the October controls and urging further policy in the same vein. In it, he claims knowledge of the specific motivations of the controls’ writers:

BIS did not rely on ECRA’s emerging and foundational technology provisions when publishing this rule so that it would not need to seek public comments before publishing it.

These motivations also clearly included exactly the sorts of AI concerns Matheny, Khan, Flynn, and other longtermists had long raised in this connection. In its background summary, the text of one rule explicitly links the controls with hopes of retarding China’s AI development. Using language that could easily have been ripped from a CSET paper on the topic, the summary warns that “‘supercomputers’ are being used by the PRC to improve calculations in weapons design and testing including for WMD, such as nuclear weapons, hypersonics and other advanced missile systems, and to analyze battlefield effects,” as well as bolster citizen surveillance.

[...]

Longtermists, in short, have since at least 2019 exerted a strong influence over what would become the Biden White House’s October 2022 semiconductor export rules. If the policy is not itself the direct product of institutional longtermists, it at the very least bears the stamp of their enthusiastic approval and close monitoring.

Just as it would be a mistake to restrict interest in longtermism’s political ambitions exclusively to election campaigns, it would be shortsighted to treat its work on semiconductor infrastructure as a one-off incident. Khan and Matheny, among others, remain in positions of considerable influence, and have demonstrated a commitment to bringing longtermist concerns to bear on matters of high policy. The policy sophistication, political reach, and fresh-faced enthusiasm on display in its semiconductor export maneuvering should earn the AI doomsday lobby its fair share of critical attention in the years to come.

The article seems quite biased to me, but I do think some of the basics here make sense and match with things I have heard (but also, some of it seems wrong).

Ben_West🔸 @ 2024-11-21T05:17 (+13)

Maybe instead of "where people actually listen to us" it's more like "EA in a world where people filter the most memetically fit of our ideas through their preconceived notions into something that only vaguely resembles what the median EA cares about but is importantly different from the world in which EA didn't exist."

MichaelDickens @ 2024-11-21T05:21 (+4)

On that framing, I agree that that's something that happens and that we should be able to anticipate will happen.

Charlie_Guthmann @ 2024-11-20T07:05 (+5)

Call me a hater, and believe me, I am, but maybe someone who went to university at 16 and clearly spent most of their time immersed in books is not the most socially developed.

Maybe after they are implicated in a huge scandal that destroyed our movement's reputation we should gently nudge them to not go on popular podcasts and talk fantastically and almost giddily about how world war 3 is just around the corner. Especially when they are working in a financial capacity in which they would benefit from said war.

Many of the people we have let be in charge of our movement and speak on behalf of it don't know the first thing about optics or leadership or politics. I don't think Eliezer Yudkowsky could win a middle school class president race with a million dollars.

I know your point was specifically tailored toward optics and thinking carefully about what we say when we have a large platform, but I think looking back and forward bad optics and a lack of realpolitik messaging are pretty obvious failure modes of a movement filled with chronically online young males who worship intelligence and research output above all else. I'm not trying to sh*t on Leopold and I don't claim I was out here beating a drum about the risks of these specific papers but yea I do think this is one symptom of a larger problem. I can barely think of anyone high up (publicly) in this movement who has risen via organizing.

Habryka @ 2024-11-20T07:43 (+23)

(I think the issue with Leopold is somewhat precisely that he seems to be quite politically savvy in a way that seems likely to make him a deca-multi-millionaire and politically influential, possibly at the cost of all of humanity. I agree Eliezer is not the best presenter, but his error modes are clearly enormously different)

Charlie_Guthmann @ 2024-11-20T08:03 (+5)

I don't think I was claiming they have the exact same failure modes - do you want to point out where I did that? Rather they both have failure modes that I would expect to happen as a result of selecting them to be talking heads on the basis of wits and research output. Also I feel like you are implying Leopold is evil or something like that and I don't agree but maybe I'm misinterpreting.

He seems like a smooth operator in some ways and certainly is quite different than Eliezer. That being said I showed my dad (who has become an oddly good litmus test for a lot of this stuff for me as someone who is somewhat sympathetic to our movement but also a pretty normal 60 year old man in a completely different headspace) the Dwarkesh episode and he thought Leopold was very, very, very weird (and not because of his ideas). He kind of reminds me of Peter Thiel. I'll completely admit I wasn't especially clear in my points and that mostly reflects my own lack of clarity on the exact point I was trying to get across.

I think I take back like 20% of what I said (basically to the extent I was making a very direct stab at what exactly that failure mode is) but mostly still stand by the original comment, which again I see as being approximately ~ "Selecting people to be the public figureheads of our movement on the basis wits and research output is likely to be bad for us".

David Mathers🔸 @ 2024-11-20T09:59 (+10)

The thing about Yudkowsky is that, yes, on the one hand, every time I read him, I think he surely must be coming across as super-weird and dodgy to "normal" people. But on the other hand, actually, it seems like he HAS done really well in getting people to take his ideas seriously? Sam Altman was trolling Yudkowsky on twitter a while back about how many of the people running/founding AGI labs had been inspired to do so by his work. He got invited to write on AI governance for TIME despite having no formal qualifications or significant scientific achievements whatsoever. I think if we actually look at his track record, he has done pretty well at convincing influential people to adopt what were once extremely fringe views, whilst also succeeding in being seen by the wider world as one of the most important proponents of those views, despite an almost complete lack of mainstream, legible credentials.

Charlie_Guthmann @ 2024-11-20T19:35 (+1)

Hmm, I hear what you are saying but that could easily be attributed to some mix of

(1) he has really good/convincing ideas

(2) he seems to be a public representative for the EA/LW community to a journalist on the outside.

And I'm responding to someone saying that we are in "phase 3" - that is to say people in the public are listening to us - so I guess I'm not extremely concerned about him not being able to draw attention or convince people. I'm more just generally worried that people like him are not who we should be promoting to positions of power, even if those are de jure positions.

David Mathers🔸 @ 2024-11-21T03:03 (+3)

Yeah, I'm not a Yudkowsky fan. But I think the fact that he mostly hasn't been a PR disaster is striking, surprising and not much remarked upon, including by people who are big fans.

Charlie_Guthmann @ 2024-11-21T03:53 (+1)

I guess in thinking about this I realize it's so hard to even know if someone is a "PR disaster" that I probably have just been confirming my biases. What makes you say that he hasn't been?

David Mathers🔸 @ 2024-11-21T04:35 (+5)

Just the stuff I already said about the success he seems to have had. It is also true that many people hate him and think he's ridiculous, but I think that makes him polarizing rather than disastrous. I suppose you could phrase it as "he was a disaster in some ways but a success in others" if you want to.

David Mathers🔸 @ 2024-11-20T08:38 (+3)

How do you know Leopold or anyone else actually influenced the commission's report? Not that that seems particularly unlikely to me, but is there any hard evidence? EDIT: I text-searched the report and he is not mentioned by name, although obviously that doesn't prove much on its own.

yanni kyriacos @ 2024-11-21T01:24 (+2)

Hi Ben! You might be interested to know I literally had a meeting with the Assistant Defence Minister in Australia about 10 months ago off the back of one email. I wrote about it here. AI Safety advocacy is IMO still extremely low-hanging fruit. My best theory is EAs don't want to do it because EAs are drawn to spreadsheets etc (it isn't their comparative advantage).

Ben_West @ 2023-05-03T18:54 (+37)

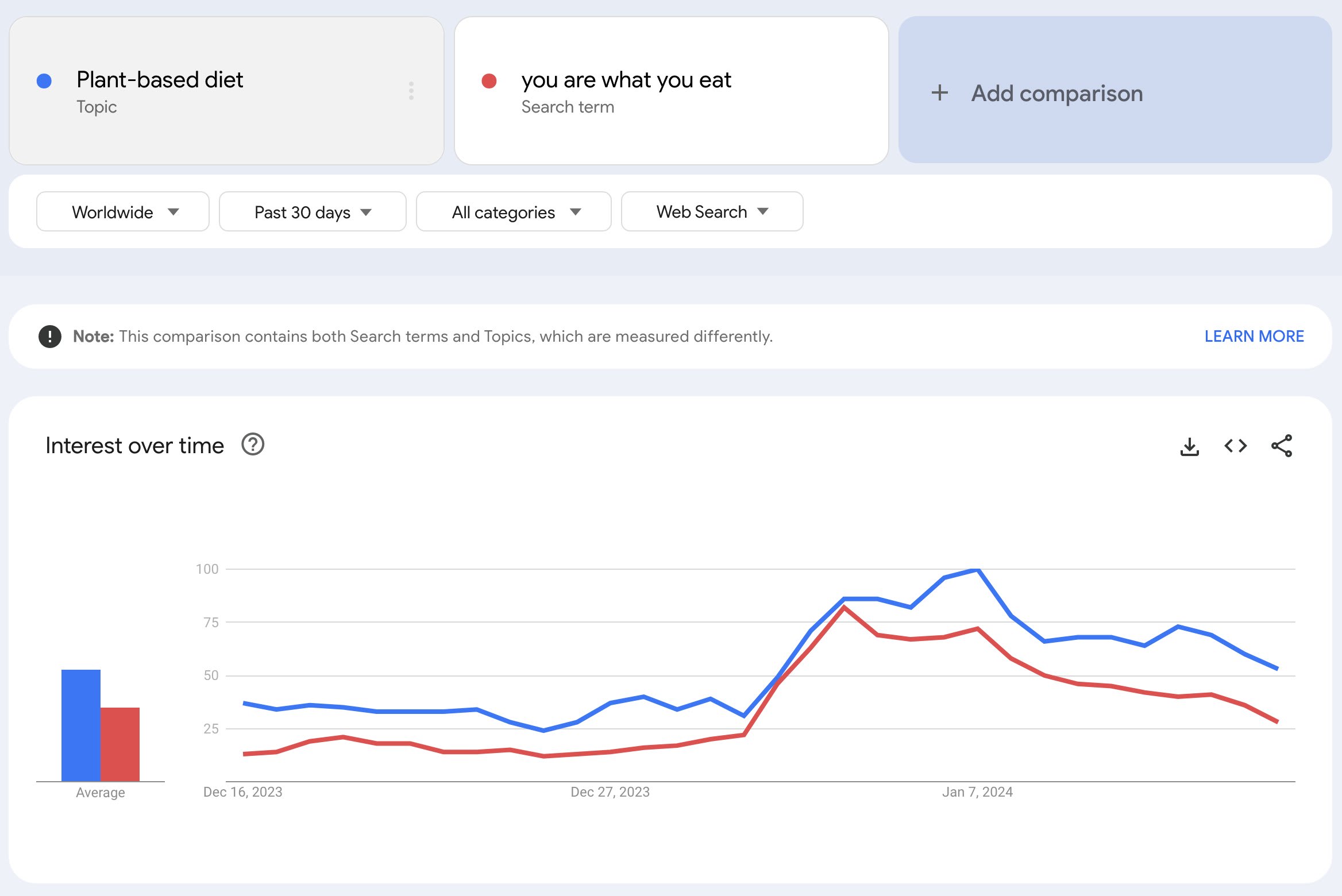

Plant-based burgers now taste better than beef

The food sector has witnessed a surge in the production of plant-based meat alternatives that aim to mimic various attributes of traditional animal products; however, overall sensory appreciation remains low. This study employed open-ended questions, preference ranking, and an identification question to analyze sensory drivers and barriers to liking four burger patties, i.e., two plant-based (one referred to as pea protein burger and one referred to as animal-like protein burger), one hybrid meat-mushroom (75% meat and 25% mushrooms), and one 100% beef burger. Untrained participants (n=175) were randomly assigned to blind or informed conditions in a between-subject study. The main objective was to evaluate the impact of providing information about the animal/plant-based protein source/type, and to obtain product descriptors and liking/disliking levels from consumers. Results from the ranking tests for blind and informed treatments showed that the animal-like protein [Impossible] was the most preferred product, followed by the 100% beef burger. Moreover, in the blind condition, there was no significant difference in preferences between the beef burger and the hybrid and pea protein burgers. In the blind tasting, people preferred the pea protein burger over the hybrid one, contrary to the results of the informed tasting, which implies the existence of affecting factors other than pure hedonistic enjoyment. In the identification question, although consumers correctly identified the beef burger under the blind condition, they still preferred the animal-like burger.

https://www.sciencedirect.com/science/article/abs/pii/S0963996923003587

MichaelStJules @ 2023-05-03T20:00 (+12)

Interesting! Some thoughts:

- I wonder if the preparation was "fair", and I'd like to see replications with different beef burgers. Maybe they picked a bad beef burger?

- Who were the participants? E.g. students at a university, and so more liberal-leaning and already accepting of plant-based substitutes?

- Could participants reliably distinguish the beef burger and the animal-like plant-based burger in the blind condition?

(I couldn't get access to the paper.)

Ben_West @ 2023-05-03T21:47 (+11)

This Twitter thread points out that the beef burger was less heavily salted.

Linch @ 2023-05-07T09:47 (+5)

Thanks for the comment and the followup comments by you and Michael, Ben. First, it's really cool that Impossible was preferred to beef burgers in a blind test! Even if the test is not completely fair! Impossible has been around for a while, and obviously they would've been pretty excited to do a blind taste test earlier if they thought they could win, which is evidence that the product has improved somewhat over the years.

I want to quickly add an interesting tidbit I learned from food science practitioners[1] a while back:

Blind taste tests are not necessarily representative of "real" consumer food preferences.

By that, I mean I think most laymen who think about blind taste tests believe that there's a Platonic taste attribute that's captured well by blind taste tests (or captured except for some variance). So if Alice prefers A to B in a blind taste test, this means that Alice in some sense should like A more than B. And if she buys (at the same price) B instead of A at the supermarket, that means either she was tricked by good marketing, or she has idiosyncratic non-taste preferences that make her prefer B to A (eg positive associations with eating B with family or something).

I think this is false. Blind taste tests are just pretty artificial, and they do not necessarily reflect real world conditions where people eat food. This difference is large enough to sometimes systematically bias results (hence the worry about differentially salted Impossible burgers and beef burgers).

People who regularly design taste tests usually know that there are easy ways to manipulate them so people will prefer X more in a taste test, in ways that do not reflect more people wanting to buy X in the real world. For example, I believe adding sugar regularly makes products more "tasty" in the sense of being more highly rated in a taste test. However, it is not in fact the case that adding high amounts of sugar automatically makes a product more commonly bought. This is generally understood as people in fact having genuinely different food preferences in taste test conditions than in real-world purchasing decisions.

Concrete example: Pepsi consistently performs better than Coca-Cola in blind taste tests. Yet most consumers consistently buy more Coke than Pepsi. Many people (including many marketers, like the writers of the hyperlinks above) believe that this is strong evidence that Coke just has really good brand/marketing, and is able to sell an inferior product well to the masses.

Personally, I'm not so sure. My current best guess is that this discrepancy is best explained by consumers' genuine drink preferences being different in blind taste tests from real-world use cases. As a concrete operationalization, if people make generic knock-offs of Pepsi and Coke with alternative branding, I would expect faux Pepsi (that tastes just like Pepsi) to perform better in blind taste tests than faux Coke (that tastes just like Coke), but for more people to buy faux Coke anyway.

For Impossible specifically, I remember doing a blind taste test in 2016 between Impossible beef and regular beef, and thinking that personally I liked[2] the Impossible burger more. But I also remember distinctly that the Impossible burger had a much stronger umami taste, which naively seems to me like exactly the type of thing that more taste testers will prefer in blind test conditions than real-world conditions.

This is a pretty long-winded comment, but I hope other people find this interesting!

[1] This is lore, so it might well be false. I heard this from practitioners who sounded pretty confident, and it made logical sense to me, but this is different from the claims being actually empirically correct. Before writing this comment, I was hoping to find an academic source on this topic I can quickly summarize, but I was unable to find it quickly. So unfortunately my reasoning transparency here is basically on the level of "trust me bro :/"

[2] To be clear I think it's unlikely for this conclusion to be shared by most taste testers, for the aforementioned reason that if Impossible believed this, they would've done public taste tests way before 2023.

Ben_West🔸 @ 2025-01-18T19:15 (+35)

EA Awards

- I feel worried that the ratio of the amount of criticism that one gets for doing EA stuff to the amount of positive feedback one gets is too high

- Awards are a standard way to counteract this

- I would like to explore having some sort of awards thingy

- I currently feel most excited about something like: a small group of people solicit nominations and then choose a short list of people to be voted on by Forum members, and then the winners are presented at a session at EAG BA

- I would appreciate feedback on:

  - whether people think this is a good idea

  - how to frame this - I want to avoid being seen as speaking on behalf of all EAs

- Also if anyone wants to volunteer to co-organize with me I would appreciate hearing that

MichaelDickens @ 2025-01-19T23:31 (+13)

The way they're usually done, awards counteract the negative:positive feedback ratio for a tiny group of people. I think it would be better to give positive feedback to a much larger group of people, but I don't have any good ideas about how to do that. Maybe just give a lot of awards?

Joseph Lemien @ 2025-01-19T15:48 (+2)

My gut likes the idea. (but I tend to be biased in favor of community-building, fun, complementary things)

The two concerns that leap to mind are:

- How to prevent this from simply being a popularity contest? What criteria would the voting/selection be based on?

- Would this simply end up rewarding people who have been lucky enough to be born in the right place, or to have chosen the right college major?

I suspect that there are ways to avoid these stumbling blocks, but I don't know enough about the context/field/area to know what they are. Overall, I'd like to see people explore it and see if it would be workable.

Ben_West🔸 @ 2025-02-24T18:47 (+31)

If you can get a better score than our human subjects did on any of METR's RE-Bench evals, send it to me and we will fly you out for an onsite interview

Caveats:

- you're employable (we can sponsor visas from most but not all countries)

- use same hardware

- honor system that you didn't take more time than our human subjects (8 hours). If you take more, still send it to me and we will probably still be interested in talking

(Crossposted from twitter.)

Ben_West🔸 @ 2026-02-07T06:52 (+27)

The AI Eval Singularity is Near

- AI capabilities seem to be doubling every 4-7 months

- Humanity's ability to measure capabilities is growing much more slowly

- This implies an "eval singularity": a point at which capabilities grow faster than our ability to measure them

- It seems like the singularity is ~here in cybersecurity, CBRN, and AI R&D (supporting quotes below)

- It's possible that this is temporary, but the people involved seem pretty worried
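To make the arithmetic behind the first three bullets concrete, here is a rough sketch (an illustration only, not a calculation from the post). The 4 and 7 month doubling times are the figures quoted above. The 24 month doubling time for measurement capacity is a purely hypothetical placeholder, since the post only says it grows "much more slowly", and the function names are made up for this example.

```python
def annual_growth_factor(doubling_months: float) -> float:
    """Multiplicative growth over 12 months for a given doubling time."""
    return 2 ** (12 / doubling_months)


def gap_after(months: float, capability_doubling: float, eval_doubling: float) -> float:
    """How much faster capabilities grew than measurement ability over `months`."""
    return 2 ** (months / capability_doubling - months / eval_doubling)


# Doubling times taken from the post: 4 to 7 months.
fast = annual_growth_factor(4)  # 8.0x per year
slow = annual_growth_factor(7)  # roughly 3.28x per year

# HYPOTHETICAL assumption: eval capacity doubles every 24 months.
HYPOTHETICAL_EVAL_DOUBLING_MONTHS = 24
gap_fast = gap_after(12, 4, HYPOTHETICAL_EVAL_DOUBLING_MONTHS)
gap_slow = gap_after(12, 7, HYPOTHETICAL_EVAL_DOUBLING_MONTHS)

print(f"capability growth per year: {slow:.2f}x to {fast:.2f}x")
print(f"capability/eval gap after one year: {gap_slow:.2f}x to {gap_fast:.2f}x")
```

Under any assumption where the measurement doubling time is longer than the capability doubling time, the gap grows exponentially, which is the sense in which the bullets describe a "singularity".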

Appendix - quotes on eval saturation

- "For AI R&D capabilities, we found that Claude Opus 4.6 has saturated most of our