Effective Altruism Funds Project Updates

By SamDeere, Centre for Effective Altruism @ 2019-12-20T22:23 (+46)

I’m Sam, the Project Lead at Effective Altruism Funds. As the year closes, I wanted to write up a summary of what EA Funds is, how it’s organized, and what we’ve been up to lately. Hopefully this will be a useful resource for new donors deciding whether the Funds are right for them, give existing donors more information about how Funds is run, and provide an opportunity to give feedback that helps us make the product better.

If you’d like to donate with EA Funds, please click the link below.

Project Overview

What is EA Funds?

For anyone who hasn’t substantially interacted with Funds, here’s a quick overview to get you up to speed.

From the EA Funds About page:

Effective Altruism Funds is a platform where you can donate to expert-led philanthropic ‘funds’ with the aim of maximizing the effectiveness of your charitable donations.

As an individual donor, it’s often very difficult to know if your charitable donations will have an impact. It’s very hard to do thorough investigations of individual charitable projects. It’s also hard to know whether the charities you want to support are able to usefully deploy extra funding, or whether giving them more money would be subject to diminishing returns.

Effective Altruism Funds (or EA Funds for short) aims to solve these problems. We engage teams of subject-matter experts, who look for the highest-impact opportunities to do good.

EA Funds is comprised of four Funds, each covering a specific cause area. The Funds are Global Health and Development, Animal Welfare, Long-Term Future, and Effective Altruism Meta (formerly the EA Community Fund).

In addition to the Funds, the EA Funds platform also supports donations to a range of individual charities, as well as hosting the (currently annual) EA Funds Donor Lottery. It’s also the home of the Giving What We Can Pledge Dashboard, where Giving What We Can members/Try Givers can track/report progress against their Pledge, and donations made through the platform are reported automatically.

Who is EA Funds for?

EA Funds is probably best suited to relatively experienced donors, who are comfortable with delegating their donation decisions to the respective Fund management teams. Our Fund management teams are generally looking for grants that are high-impact in expectation, which means that they may choose to recommend grants that are more speculative. This is likely to lead to a higher variance in outcomes – some grants may achieve a lot and some may achieve very little. Overall, the hope is that the full portfolio of grants will be higher-impact than simply donating to a specific highly-recommended charity. This approach is sometimes referred to as hits-based giving (I personally like to think of it as ‘chasing positive black swans’).

If you’re thinking of donating to the Funds, you should read through the EA Funds website (including each Fund’s scope and past grant history) to make sure you’re comfortable with the level of risk offered by EA Funds.

My guess is that most individual donors (who aren’t spending significant time investigating donation opportunities) will probably improve the expected impact of their donations by donating to EA Funds (as compared to, say, donating directly to a charity in a cause area represented by one of the Funds). However, this is just a guess, and I am obviously not an impartial observer. As we build out better monitoring and evaluation infrastructure, it’s possible that in the future we’ll be able to make more definitive claims about the expected impact of donating to the Funds.

Structure of Fund management teams

EA Funds is a project of the Centre for Effective Altruism (CEA), a registered nonprofit in the US and the UK. CEA provides the legal and operational infrastructure for EA Funds to operate within, and is ultimately legally responsible for all grantmaking.

Each Fund is managed by a Fund management team, who are responsible for providing grant recommendations to CEA. Most Funds have a multi-member Fund management team, with the exception of the Global Development Fund.

Each Fund management team has a Fund Chair, who provides overall direction for the Fund and coordinates with CEA to provide grant recommendations. As it currently stands, the Chair does not get any additional voting rights, and grant decisions are made with input from the whole team (though the specific decision-making process differs slightly between Funds). The Chairs are also responsible for recommending the appointment of other members (decisions to appoint Fund management team members are ultimately made by CEA). Some Funds (Long-Term Future, EA Meta) have additional advisors, who do not make (or vote on) grant recommendations, but provide feedback and advice on grantmaking.

CEA ensures that grant recommendations are within the scope of the recommending Fund, screens grants for large potential downside risks, and conducts due diligence to ensure that grants are legally compliant (e.g. that grants are for proper charitable purposes). Grant recommendations must be approved by CEA’s Board of Trustees before being paid out.

Direct donations to effective charities

In addition to the four Funds, the EA Funds platform allows donors to recommend direct donations to a number of individual effective charities. This is to increase the usefulness of the product for both donors (who can choose to donate to any combination of Funds and charities through the same platform, and Giving What We Can members can have their donations tracked automatically) as well as the charities themselves (who can receive tax-deductible donations in jurisdictions that they don’t currently operate in).

So far, the process for adding charities to the platform has been fairly opportunistic/ad-hoc, and the inclusion criteria have largely been based on judgement calls about community evaluations of their relative impact. At this stage we’re not planning to actively solicit new additions to the platform; however, we remain open to supporting highly-effective projects that would find inclusion on the platform advantageous for tax-efficiency reasons. If you run a project that you think has a defensible claim to being highly effective, and would benefit from being on EA Funds, please get in touch (please note that the project will need to be a registered charity or fiscally sponsored by one).

Project status and relationship to CEA

At the moment, EA Funds has a staff of ≈1.3 FTEs:

- I (Sam Deere) lead the project, handling strategy, technology, donor relations etc.

- Chloe Malone (from CEA’s Operations team) handles grantmaking logistics and due diligence

Other staff involved in the project include:

- Ben West, who oversees the project as my manager at CEA

- JP Addison, the other member of technical staff at CEA, who reviews code and splits some of the technical work with me

Currently EA Funds is a project wholly within the central part of the Centre for Effective Altruism (as opposed to a satellite project housed within the same legal organization, like 80,000 Hours or the Forethought Foundation). However, we’re currently investigating whether this should change. This is largely driven by a divergence in organizational priorities – specifically, that CEA is focusing on building communities and spaces for discussing EA ideas (e.g. local groups, EA Global and related events, and the EA Forum), whereas EA Funds is primarily fundraising-oriented. As such, we think it’s likely that EA Funds would fare better if it were set up to run as its own organization, with its own director, and a team specifically focused on developing the product, while CEA will benefit from having a narrower scope.

It’s still not clear what the best format for this would be, but in the medium term this will probably look like EA Funds existing as a separately managed organization within the Centre for Effective Altruism (à la 80,000 Hours or Forethought), reporting directly to CEA’s board. At first, CEA would still provide operational support in the form of payroll and grantmaking logistics etc., but eventually we may want to build capacity to do some or all of these things in-house.

Longer-term, it may be useful for EA Funds to incorporate as a separate legal entity, with a board specifically set up to provide oversight from a grantmaking perspective. Obviously this would represent a large change to the status quo, and so isn’t likely to happen any time soon.

(However things shake out, I wouldn't expect any organizational changes to materially change the Funds, their structure, or their management.)

Recent updates

Recent Grantmaking

- The Global Development Fund recommended a grant of just over $1 million to Fortify Health

- November 2019 grant recommendations have been made by the Animal Welfare Fund ($415k) and the EA Meta Fund ($330k).

- The Long-Term Future Fund has also submitted November grant recommendations, which are currently undergoing due diligence and will be published soon.

Project updates

- Most of the content on EA Funds (especially that describing individual Funds) hadn’t been substantially updated since its inception in early 2017. The structure was somewhat difficult to follow, and wasn’t particularly friendly to donors who were sympathetic to the aims of EA Funds, but had less familiarity with the effective altruism community (and the assumed knowledge that entails). At the beginning of December we conducted a major restructure/rewrite of the Funds pages and added additional information to help donors decide whether EA Funds is right for them.

- The Funds are again running a donor lottery, with $100k and $500k blocks, giving donors a chance to be able to make grant recommendations over large pots of money (both lotteries close on 17 January 2020).

- We're currently making plans for 2020. Our main priorities are likely to include improvements to our grantmaking process, with an emphasis on making past grants easier to monitor and evaluate. (This is still under active discussion, so our priorities could change if we come to the conclusion that other things are more important.)

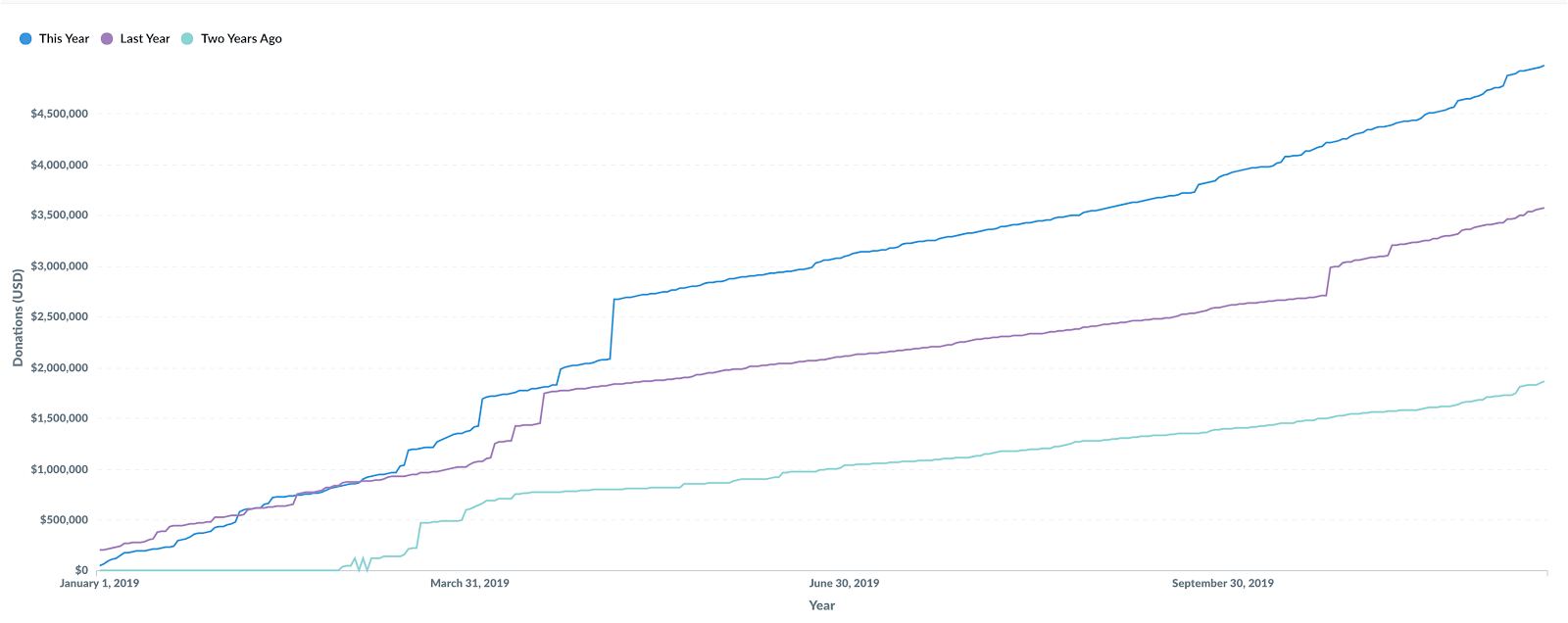

Public Data

We’ve recently launched a publicly-accessible dashboard to show various statistics about the Funds. It’s still fairly new, and currently just shows YTD donations, so if there’s a statistic you’d like included, leave a comment or email funds@effectivealtruism.org.

Khorton @ 2019-12-20T23:05 (+30)

Have you considered / do you plan to consider paying EA Funds grantmakers, so that they'd potentially have more time to consider where to direct grants and give feedback to unsuccessful applicants?

Misha_Yagudin @ 2019-12-21T15:31 (+14)

My not very informed guess is that only a minority of fund managers are primarily financially constrained. I think (a) giving detailed feedback is demanding [especially negative feedback]; (b) I expect that most of the fund managers are just very busy.

SamDeere @ 2020-01-10T18:51 (+11)

Yeah, I’d love to see this happen, both because I think that it’s good to pay people for their time, and also because of the incentives it creates. However, as Misha_Yagudin says, I don’t think financial constraints are the main bottleneck on getting good feedback or doing in-depth grant reviews, and time constraints are the bigger factor.

One thing I’ve been mulling over for some time is appointing full-time grantmakers to at least some of the Funds. This isn’t likely to be feasible in the near term (say, at least 6 months), and would depend a lot on how the product evolves, as well as funding constraints, but it’s definitely something we’ve considered.

Khorton @ 2020-01-10T19:22 (+2)

Thanks for the answer! Another option in this vein would be to pay a Secretariat to handle correspondence with grant applicants, collecting grantmakers' views, giving feedback, writing up the recommendations based on jot notes from the grantmakers, etc. I'm not sure how much time a competent admin person could save Fund grantmakers, but it might be worth trying.

SamDeere @ 2020-01-23T12:12 (+3)

Yeah, this is something that's definitely been discussed, and I think this would be a logical first step between the current state of the world and hiring grantmakers to specific teams.

Misha_Yagudin @ 2019-12-22T20:54 (+18)

Hey Sam, I am curious about your estimates of (a) CEA's overhead, (b) grantmakers' overhead for an average grant.

For context, in April Oliver Habryka of the EA LTFF wrote:

A rough Fermi I made a few days ago suggests that each grant we make comes with about $2000 of overhead from CEA for making the grants in terms of labor cost plus some other risks (this is my own number, not CEA's estimate).

Habryka @ 2019-12-24T02:35 (+5)

I am also curious about this. I have since updated that the overhead is lower than I thought and I now think it's closer to $1k, since CEA has been able to handle a greater volume of grants than I thought they would.

SamDeere @ 2020-01-10T18:52 (+4)

Good question. My very rough Fermi estimate puts this at around $750/grant (based on something like $90k worth of staff costs directly related to grantmaking and ~120 grants/year). It’s hard to say how this scales, but we’ve continued to improve our grant processing pipeline, and I’d expect that we can continue to accommodate a relatively high number of grants per year. This is also only the average cost – I’d expect the marginal cost for each grant to be lower than this.

I don’t have a great sense of grantmaker overhead per individual grant, but I’d estimate the time cost at something in the range of $500-$1000 per grant recommendation per Fund team member, or $2000-$4000 for a typical ~4-person team (noting of course that Fund management team members donate their spare time to work on the project).
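As a rough illustration of the arithmetic behind the two estimates above, here is a minimal Python sketch. The inputs are the approximate figures quoted in this comment (about $90k of staff costs, ~120 grants/year, $500-$1000 per team member, a ~4-person team); the function and variable names are hypothetical and introduced only for illustration.

```python
def average_cost_per_grant(annual_staff_cost: float, grants_per_year: int) -> float:
    """Average (not marginal) overhead per grant: total cost divided by grant count."""
    return annual_staff_cost / grants_per_year


def team_cost_range(per_member_low: float, per_member_high: float, team_size: int):
    """Scale a per-member cost range up to a whole Fund management team."""
    return per_member_low * team_size, per_member_high * team_size


# Figures below are the rough ones quoted in the comment, not audited numbers.
cea_overhead = average_cost_per_grant(90_000, 120)
team_low, team_high = team_cost_range(500, 1_000, 4)

print(f"CEA overhead per grant: ${cea_overhead:,.0f}")
print(f"Grantmaker time per grant: ${team_low:,.0f}-${team_high:,.0f}")
```

Because this divides total cost by the number of grants, it gives the average cost only; the marginal cost of one additional grant would need a separate estimate.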

AnonymousEAForumAccount @ 2019-12-23T20:22 (+10)

What’s the rationale behind categorizing the LTFF as “medium-high risk” vs. simply “high risk”? This isn’t meant as a criticism of the fund managers or any of the grants they’ve made, it’s just that trying to influence the LTF seems inherently high risk.

Habryka @ 2019-12-24T02:33 (+12)

I didn't make the relevant decision, but here is my current guess at the reasoning behind that classification:

I think we are currently making a mixture of grants, some of which could be described (in an ontology I don't fully agree with) as "medium-risk" and some of which could be described as "high-risk".

We are considering splitting the fund into a purely high-risk fund (primarily individuals and high-context grants), and a separate medium-risk fund (more to organizations and individuals with established track records), to allow donors more choice over what they want their money to go to. I think the current classification is kind of already making room for that future option by calling it "medium-high risk", which I think makes sense, though Sam should definitely clarify that if he disagrees.

AnonymousEAForumAccount @ 2019-12-31T00:36 (+2)

Thanks for sharing this potential shift Oli. If the fund split, would the same managers be in charge of both funds or would you add a new management team? Also, would you mind giving a couple of examples of grants that you’d consider “medium-risk”? And do you see these grants as comparably risky to the “medium risk” grants made by the other funds, or just less risky than other grants made by the LTFF?

My sense is that the other funds are making “medium-risk” grants that have substantially simpler paths to impact. Using the Health Fund’s grant to Fortify Health as an example, the big questions are whether FH can get the appropriate nutrients into food and then get people to consume that food, as there’s already strong evidence micronutrient fortification works. By contrast, I’d argue that the LTFF’s mandate comes with a higher baseline level of risk since “it is very difficult to know whether actions taken now are actually likely to improve the long-term future.” (Of course, that higher level of risk might be warranted; I’m not making any claims about the relative expected values of grants made by different funds).

Habryka @ 2019-12-31T22:44 (+27)

Note that I don't currently feel super comfortable with the "risk" language in the context of altruistic endeavors, and think that it conjures up a bunch of confusing associations with financial risk (where you usually have an underlying assumption that you are financially risk averse, which usually doesn't apply for altruistic efforts). So I am not fully sure whether I can answer your question as asked.

I actually think a major concern that is generating a lot of the discussion around this is much less "high variance of impact" and more something like "risk of abuse".

In particular, I think relatively few people would object if the funds were doing the equivalent of participating in the donor lottery, even though that would very straightforwardly increase the variance of our impact.

Instead, I think the key difference between a lot of grants that the LTFF made and that were perceived as "risky" and the grants of most other funds (and the grants we made that were perceived as "less risky") is that the risky grants were perceived as harder to judge from the outside of the fund, and were given to people to whom we have a closer personal connection, both of which enable potential abuse by the fund managers by funneling funds to themselves and their personal connections.

I think people are justified in being concerned about risk of abuse, and I also think that people generally have particularly high standards for altruistic contributions not being funneled into self-enriching activities.

One observation I made that I think illustrates this pretty well is the response to last round's grant for improving reproducibility in science. I consider that grant to be one of the riskiest (in the "variance of impact" sense) that we ever made, with its effects being highly indirect, many steps removed from the long-term future and global catastrophic risk, and its effects only really being relevant in a somewhat smaller fraction of worlds where the reproducibility of cognitive science will become relevant to global catastrophic risks.

However, despite that, almost anyone I've talked to classified that grant as one of the least "risky" grants we made. I think this is because while the path to impact of the grant was long and indirect, the reasoning behind it was broadly available, and the information necessary to make the judgement was fully in-public. There was common-knowledge of grants of that type being a plausible path to impact, and there was no obvious way in which that grant would benefit us as the grantmakers.

Now, in this new frame, let me answer your original questions:

- At least from what I know the management team would stay the same for both funds

- I think in the frame of "risk of abuse", I consider the grant for improving reproducibility in science to be a "medium-risk" bet. I would also consider our grants to Ought and MIRI as "medium-risk" bets. I would classify many of our grants to individuals as high-risk bets.

- I think those "medium-risk" grants are indeed comparable in risk of abuse to the average grant of the meta-fund, which I think has generally exercised its individual judgement less, and has deferred more to a broad consensus on which things are positive impact (which I do think has resulted in a lot of value left on the table)

All of this said, I am not yet really sure whether the "risk of abuse" framing really accurately captures people's feelings here, and whether that's the appropriate frame through which to look at things.

I do think that at the current margin, using only granting procedures that have minimal risk of abuse is leaving a lot of value on the table, because I think evaluating individual people and their competencies, as well as using local expertise and hard-to-communicate experience, is a crucial component of good grant-making.

I do think we can build better incentive systems and accountability systems to lower the risk of abuse. Reducing the risk of abuse is one of the reasons why I've been investing so much effort into producing comprehensive and transparent grant writeups, since that exposes our reasoning more to the public, and allows people to cross-check and validate the reasoning for our grants, as well as call us out if they think our reasoning is spotty for specific grants. I think this is one way of reducing the risk of abuse, allowing us to overall make grants that take more advantage of our individual judgement, and being more effective on-net.

AnonymousEAForumAccount @ 2020-01-03T00:24 (+3)

Very helpful response! This (like much of the other detailed transparency you’ve provided) really helped me understand how you think about your grantmaking (strong upvote), though I wasn’t actually thinking about “risk of abuse” in my question.

I’d been thinking of “risk” in the sense that the EA Funds materials on the topic use the term: “The risk that a grant will have little or no impact.” I think this is basically the kind of risk that most donors will be most concerned about, and is generally a pretty intuitive framing. And while I’m open to counterarguments, my impression is that the LTFF’s grants are riskier in this sense than grants made by the other funds because they have longer and less direct paths to impact.

I think “risk of abuse” is an important thing to consider, but not something worth highlighting to donors through a prominent section of the fund pages. I’d guess that most donors assume that EA Funds is run in a way that “risk of abuse” is quite low, and that prospective donors would be turned off by lots of content suggesting otherwise. Also, I’m not sure “risk of abuse” is the right term. I’ve argued that some parts of EA grantmaking are too dependent on relationships and networks, but I’m much more concerned about unintentional biases than the kind of overt (and unwarranted) favoritism that “risk of abuse” implies. Maybe “risk of bias”?

Habryka @ 2020-01-03T00:31 (+18)

I’d been thinking of “risk” in the sense that the EA Funds materials on the topic use the term: “The risk that a grant will have little or no impact.” I think this is basically the kind of risk that most donors will be most concerned about, and is generally a pretty intuitive framing.

To be clear, I am claiming that the section you are linking is not very predictive of how I expect CEA to classify our grants, and is not very predictive of the attitudes that I have seen from CEA and other stakeholders and donors of the funds, in terms of whether they will have an intuitive sense that a grant is "risky". Indeed, I think that page is kind of misleading and think we should probably rewrite it.

I am concretely claiming that CEA's attitudes, the attitudes of various stakeholders, and most donors' attitudes are all better predicted by the "risk of abuse" framing I have outlined. In that sense, I disagree with you that most donors will be primarily concerned about the kind of risk that is discussed on the EA Funds page.

Obviously, I do still think there is a place for considering something more like "variance of impact", but I don't actually think that that dimension has played a large role in people's historical reactions to grants we have made, and I don't expect it to matter too much in the future. I think in terms of impact, most people I have interacted with tend to be relatively risk-neutral when it comes to their altruistic impact (and I don't know of any good arguments for why someone should be risk-averse in their altruistic activities, since the case for diminishing marginal returns at the scales on which our grants tend to influence things seems pretty weak).

Edit: To give a more concrete example here, I think by far the grant that has been classified as the "riskiest" grant we have made, that from what I can tell has been motivating a lot of the split into "high risk" and "medium risk" grants, is our grant to Lauren Lee. That grant does not strike me as having a large downside risk, and I don't think anyone I've talked to has suggested that this is the case. The risk that people have talked about is the risk of abuse that I have been talking about, and associated public relations risks, and many have critiqued the grant as "the Long Term Future Fund giving money to their friends", which highlights to me the dimension of abuse risk much more concretely than the dimension of high variance.

In addition to that, grants that operate at a higher level of meta than other grants, i.e. which tend to facilitate recruitment, training or various forms of culture-development, have not been broadly described as "risky" to me, even though from a variance perspective those kinds of grants are almost always much higher variance than the object-level activities that they tend to support (since their success and failure is dependent on the success of the object-level activities). This again strikes me as strong evidence that variance of impact (which seems to be the perspective that the EA Funds materials take) is not a good predictor of how people classify the grants.

Larks @ 2021-02-20T05:58 (+16)

Obviously, I do still think there is a place for considering something more like "variance of impact", but I don't actually think that that dimension has played a large role in people's historical reactions to grants we have made, and I don't expect it to matter too much in the future.

Relatedly, I don't recall anyone pointing out that funding a large number of 'risky' individuals, instead of a small number of 'safe' organisations, might be less risky (in the sense of lower variance), because the individual risks are largely independent, so you get a lot of portfolio diversification.
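To illustrate the diversification point above, here is a minimal Python sketch. It assumes a fixed budget split equally across fully independent grants, and the standard deviations used (3.0 per dollar for 'risky' individuals, 1.0 for 'safe' organisations) are made-up illustrative numbers, not estimates of any real grants. The function name is hypothetical.

```python
import math


def portfolio_std(budget: float, n_grants: int, per_dollar_std: float) -> float:
    """Std dev of total impact when `budget` is split equally across
    `n_grants` independent grants, each with the same per-dollar std dev."""
    per_grant = budget / n_grants
    # Variances of independent outcomes add, so total variance is
    # n * (per_grant * per_dollar_std)^2.
    return math.sqrt(n_grants * (per_grant * per_dollar_std) ** 2)


# Illustrative (assumed) numbers: each individual grant is 3x as noisy
# per dollar as each organisational grant.
many_risky = portfolio_std(budget=1.0, n_grants=20, per_dollar_std=3.0)
few_safe = portfolio_std(budget=1.0, n_grants=2, per_dollar_std=1.0)

print(f"20 'risky' individuals: {many_risky:.3f}")
print(f"2 'safe' organisations: {few_safe:.3f}")
```

Under these assumptions the larger portfolio has the lower overall spread, because the standard deviation shrinks with the square root of the number of grants. If the individual outcomes were correlated rather than independent, the benefit would be smaller.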

AnonymousEAForumAccount @ 2020-01-03T01:08 (+6)

To be clear, I am claiming that the section you are linking is not very predictive of how I expect CEA to classify our grants, and is not very predictive of the attitudes that I have seen from CEA and other stakeholders and donors of the funds, in terms of whether they will have an intuitive sense that a grant is "risky". Indeed, I think that page is kind of misleading and think we should probably rewrite it.

I am concretely claiming that CEA's attitudes, the attitudes of various stakeholders, and most donors' attitudes are all better predicted by the "risk of abuse" framing I have outlined. In that sense, I disagree with you that most donors will be primarily concerned about the kind of risk that is discussed on the EA Funds page.

If risk of abuse really is the big concern for most stakeholders, then I agree rewriting the risk page would make a lot of sense. Since that’s a fairly new page, I’d assumed it incorporated current thinking/feedback.

Habryka @ 2020-01-03T03:23 (+6)

*nods* This perspective is currently still very new to me, and I've only briefly talked about it to people at CEA and other fund members. My sense was that people found the "risk of abuse" framing to resonate a good amount, but this perspective is definitely in no way consensus of the current fund-stakeholders, and is only the best way I can currently make sense of the constraints the fund is facing. I don't know yet to what degree others will find this perspective compelling.

I don't think anyone made a mistake by writing the current risk-page, which I think was an honest and good attempt at trying to explain a bunch of observations and perspectives. I just think I now have a better model that I would prefer to use instead.

SamDeere @ 2020-01-10T18:53 (+4)

I agree with you that on one framing, influencing the long-run future is risky, in the sense that we have no real idea of whether any actions taken now will have a long-run positive impact, and we’re just using our best judgement.

However, it also feels like there are important distinctions between categories of risk, such as organisational maturity. For example, a grant to MIRI (an established organisation, with legible financial controls, and existing research outputs that are widely cited within the field) feels different to me when compared to, say, an early-career independent researcher working on an area of mathematics that’s plausibly but as-yet speculatively related to advancing the cause of AI safety, or funding someone to write fiction that draws attention to key problems in the field.

I basically tried to come up with an ontology that would make intuitive sense to the average donor, and then tried to address the shortcomings by using examples on our risk page. I agree with Oli that it doesn’t fully capture things, but I think it’s a reasonable attempt to capture an important sentiment (albeit in a very reductive way), especially for donors who are newer to the product and to EA. That said, everyone will have their own sense of what they consider too risky, which is why we encourage donors to read through past grant reports and see how comfortable they feel before donating.

The conversation with Oli above about ‘risk of abuse’ being an important dimension is interesting, and I’ll think about rewriting parts of the page to account for different framings of risk.

AnonymousEAForumAccount @ 2020-01-14T22:22 (+1)

I basically tried to come up with an ontology that would make intuitive sense to the average donor, and then tried to address the shortcomings by using examples on our risk page. I agree with Oli that it doesn’t fully capture things, but I think it’s a reasonable attempt to capture an important sentiment (albeit in a very reductive way), especially for donors who are newer to the product and to EA.

Yeah, I think the current ontology is a pretty reasonable/intuitive way to address a complex issue. I’d update if I learned that concerns about “risk of abuse” are more common among donors than concerns about other types of risk, but my suspicion is that “risk of abuse” is mostly an issue for the LTFF since it makes more grants to individuals and the grant that was recommended to Lauren serves as something of a lightning rod.

I do think, per my original question about the LTFF’s classification, that the LTFF is meaningfully more risky than the other funds along multiple dimensions of risk: relatively more funding of individuals vs. established organizations, more convoluted paths to impact (even for more established grantees), and more risk of abuse (largely due to funding more individuals and perhaps a less consensus based grantmaking process).

everyone will have their own sense of what they consider too risky, which is why we encourage donors to read through past grant reports and see how comfortable they feel before donating.

Now that the new Grantmaking and Impact section lists illustrative grants for each fund, I expect donors will turn to that section rather than clicking through each grant report and trying to mentally aggregate the results. But as I pointed out in another discussion, that section is problematic in that the grants it lists are often unrepresentative and/or incorrect, and even if it were accurate to begin with, the information would quickly grow stale.

As a solution (which other people seemed interested in), I suggested a spreadsheet that would list and categorize grants. If I created such a spreadsheet, would you be willing to embed it in the fund pages and keep it up to date as new grants are made? The maintenance is the kind of thing a (paid?) secretariat could help with.

AnonymousEAForumAccount @ 2019-12-23T21:25 (+6)

Btw, it's great that you're offering to have this post function as an AMA, but I think many/most people will miss that info buried in the commenting guidelines. Maybe add it to the post title and pin the post?

AnonymousEAForumAccount @ 2019-12-23T20:14 (+4)

We think it’s likely that EA Funds would fare better if it were set up to run as its own organization, with its own director, and a team specifically focused on developing the product, while CEA will benefit from having a narrower scope.

It’s still not clear what the best format for this would be, but in the medium term this will probably look like EA Funds existing as a separately managed organization within the Centre for Effective Altruism (à la 80,000 Hours or Forethought), reporting directly to CEA’s board. At first, CEA would still provide operational support in the form of payroll and grantmaking logistics etc., but eventually we may want to build capacity to do some or all of these things in-house.

Can you provide a ballpark estimate of how long the “medium term” is in this context? Should we expect to see this change in 2020?

Also, once this change is implemented, roughly how large would you expect a “right-sized” EA Funds team to be? This relates to Misha’s question about overhead, but on more of a forward-looking basis.

SamDeere @ 2020-01-10T18:53 (+1)

It’s hard to say exactly, but I’d be thinking this would be on the timescale of roughly a year (so, a spinout could happen in late 2020 or mid 2021). However, this will depend a lot on e.g. ensuring that we have the right people on the team, the difficulty of setting up new processes to handle grantmaking etc.

Re the size question – are you asking how large the EA Funds organisation itself should be, or how large the Fund management teams should be?

If the former, I’d guess that we’d probably start out with a team of two people, maybe eventually growing to ~4 people as we started to rely less on CEA for operational support (roughly covering some combination of executive, tech, grantmaking support, general operations, and donor relations), and then growing further if/when demand for the product grew and more people working on the project made sense.

If the latter, my guess is that something like 3-6 people per team is a good size. More people means more viewpoint diversity, more eyes on each grant, and greater surface area for sourcing new grants, but larger groups become more difficult to manage, and obviously the time (and potentially monetary) costs increase.

I’d caveat strongly that these are guesses based on my intuitions about what a future version of EA Funds might look like rather than established strategy/policy, and we’re still very much in the process of figuring out exactly what things could look like.

AnonymousEAForumAccount @ 2020-01-14T22:22 (+1)

I was asking about how large the EA Funds organization itself should be, but nice to get your thoughts on the management teams as well. Thank you!

Habryka @ 2019-12-22T04:55 (+4)

Thank you very much for this! I in particular found the interactive dashboard useful!

AnonymousEAForumAccount @ 2019-12-31T18:58 (+3)

Exciting to see the new dashboard! Two observations:

1) The donation figures seem somewhat off, or at least inconsistent with the payout report data. For example, the Meta Fund payout reports list >$2 million in “payouts to date”. But the dashboard shows donations of <$1.7 million from the inception of the fund through the most recent payout in November. Looks like the other funds have similar (though smaller) discrepancies.

2) I think the dashboard would be much more helpful if data for prior years were shown for the full year, not just YTD. That would essentially make all the data available and add a lot of valuable context for interpreting the current year YTD numbers. Otherwise, the dashboard will only be able to answer a very narrow set of questions once we move to the new year.

SamDeere @ 2020-01-10T18:54 (+1)

Yeah, that’s interesting – I think this is an artefact of the way we calculate the numbers. The ‘total donations’ figure is calculated from donations registered through the platform, whereas the Fund balances are calculated from our accounting system. Sometimes donations (especially by larger donors) are arranged outside of the EA Funds platform. They count towards the Fund balance (and accordingly show up in the payouts), but they won’t show up in the total donations figure. We’d love to get to a point where these donations are recorded in EA Funds, but it’s a non-trivial task to synchronise accounting systems in two directions, and so this hasn’t been a top priority so far.
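To make the mechanism concrete, here is a minimal sketch of how the two figures can diverge. The `Donation` record, the `via_platform` flag, and the amounts are all hypothetical and purely illustrative; this is not how the EA Funds platform or its accounting system is actually implemented.

```python
from dataclasses import dataclass


@dataclass
class Donation:
    amount: float
    via_platform: bool  # hypothetical flag: False means arranged outside the platform


def platform_total(donations):
    """'Total donations' as a platform would see it: only gifts it registered."""
    return sum(d.amount for d in donations if d.via_platform)


def accounting_total(donations):
    """Inflows as an accounting system would see them: every gift received."""
    return sum(d.amount for d in donations)


# Illustrative amounts only, not real EA Funds data.
donations = [
    Donation(1_000, via_platform=True),
    Donation(250, via_platform=True),
    Donation(5_000, via_platform=False),  # e.g. a larger gift arranged directly
]

# The gap is exactly the money that never passed through the platform.
gap = accounting_total(donations) - platform_total(donations)
```

Under these assumptions, payouts funded from the accounting balance can exceed the platform's donation total by the size of the off-platform gifts.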

I agree that the YTD display isn’t the most useful for assessing total inflows because it cuts out the busiest period of December (which takes in 4-5 times more than other months, and is responsible for ~35% of annual donations). It was useful for us internally (to see how we were tracking year-on-year), and so ended up being one of the first things we put on the dashboard, but I think that a whole-of-year view will be more useful for the public stats page.
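As a rough consistency check on the December figures, the sketch below converts "December takes in k times a typical month" into a share of annual donations. It assumes, purely for illustration, that the other eleven months are equal to each other; the function name is hypothetical and real monthly inflows will vary.

```python
def december_share(multiple: float) -> float:
    """Share of annual donations received in December.

    Assumes December takes in `multiple` times the amount of a typical month
    and that the other eleven months are all equal (a simplifying assumption).
    """
    return multiple / (11 + multiple)


for k in (4, 5, 6):
    print(f"December at {k}x a typical month -> {december_share(k):.0%} of the year")
```

Under this simplification, a share of ~35% corresponds to December taking in roughly six times a typical month, so a year-to-date view that stops before December omits around a third of annual inflows.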

AnonymousEAForumAccount @ 2020-01-14T22:23 (+1)

Got it, thanks for clarifying!

AnonymousEAForumAccount @ 2019-12-23T20:07 (+2)

Two questions about the money EA Funds has processed for specific organizations rather than the Funds themselves (and thanks for sharing data on this type of giving via the new dashboard!):

1. How much of the money raised for organizations is “incremental” in the sense that giving through EA Funds allowed donors to claim tax deductions that they otherwise wouldn’t be able to get? As an example, I wouldn’t consider gifts to AMF through EA Funds to be incremental since US and UK donors could already claim tax deductions by giving directly to AMF. But I would call donations to ACE through EA Funds by UK donors incremental, since these gifts wouldn’t be tax deductible if it weren’t for EA Funds. (I recognize giving through EA Funds might have other benefits besides incremental tax deductibility, such as the ability to give to multiple organizations at once.)

2. How does donor stewardship work for gifts made directly to organizations? Do the organizations receive information about the donors and manage the relationships themselves, or does CEA handle the donor stewardship?

SamDeere @ 2020-01-10T18:55 (+1)

1. I don’t have an exact figure, but a quick look at the data suggests we’ve moved close to $2m to US-based charities that don’t have a UK presence from donors in the UK (~$600k in 2019). My guess is that the amount going in the other direction (US -> UK) is substantially smaller than that, if only because the majority of the orgs we support are US-based. (There’s also some slippage here, e.g. UK donors giving to GiveWell’s current recommendation could donate to AMF/Malaria Consortium/SCI etc.)

2. Due to privacy regulations (most notably GDPR) we can’t, by default, hand over any personally identifying information to our partner charities. We ask donors for permission to pass their details onto the recipient charities, and in these cases stewardship is handled directly by the orgs themselves. CEA doesn’t do much in terms of stewardship specific to each partner org (e.g. we don’t send AMF donors an update on what AMF has been up to recently), but we do send out email newsletters with updates about how money from EA Funds has been spent.

AnonymousEAForumAccount @ 2020-01-14T22:24 (+3)

1. Thanks for sharing this data!

2. Ah, that makes sense re: privacy issues. However, I’m a bit confused by this: “we do send out email newsletters with updates about how money from EA Funds has been spent.” Is this something new? I’ve given to EA Funds and organizations through the Funds platform for quite some time, and the only non-receipt email I’ve ever gotten from EA Funds was a message in late December soliciting donations and sharing the OP. To be clear, I’d love to see more updates and solicitations for donors (and not just during giving season), as I believe not asking past donors to renew their giving is likely leaving money on the table.

AnonymousEAForumAccount @ 2019-12-23T20:06 (+2)

Thanks for this update!

Are the current fund balances (reasonably) accurate? Do they reflect the November grants made by the Animal and Meta Funds? And has the accounting correction you mentioned for the Global Health fund been implemented yet?

SamDeere @ 2020-01-10T18:55 (+1)

Most Fund balances are in general reasonably accurate (although the current balances don’t account for the latest round that was only paid out last month). The exception here is the Global Development Fund, which is still waiting on the accounting correction you mentioned to post, but I’ve just been informed that this has been handed over to the bookkeepers to action, so this should be resolved very soon.

AnonymousEAForumAccount @ 2020-01-14T22:24 (+1)

I was asking about the end of November balances that were displayed throughout December. It sounds like those did not reflect grants instructed in November if I’m correctly understanding what you mean by “the current balances don’t account for the latest round that was only paid out last month”. Can you clarify the timing of when grants do get reflected in the fund balances? Do the fund balances get updated when the grants are recommended to CEA? Approved by CEA? Disbursed by CEA? When the payout reports are published? FWIW, I’d find it most helpful if they were updated when the payout reports are published, since that would make the information on the fund pages internally consistent.

Thanks for the update on the Global Development Fund!

SamDeere @ 2020-01-23T12:09 (+2)

Yeah, the Fund balances are updated when the entries for the grants are entered into our accounting system (typically at the time that the grants are paid out). Because it can take a while to source all the relevant information from recipients (bank details etc), this doesn't always happen immediately. Unfortunately this means that there's always going to be some potential for drift here, though (absent accounting corrections like that applicable to the Global Development Fund) this should resolve itself within ~ a month. The November balances included ~ half of the payments made from the Animal Welfare and Meta Funds from their respective November grant rounds.

AnonymousEAForumAccount @ 2020-01-24T19:00 (+1)

OK, thanks for explaining how this works!

SamDeere @ 2020-01-10T18:51 (+1)

Meta: Just wanted to say thanks to all for the excellent questions, and to apologise for the slow turnaround on responses – I got pretty sick just before Christmas and wasn’t in any state to respond coherently. Ideally I would have noted that at the time, mea culpa.