Announcing Animal Welfare vs Global Health Debate Week (Oct 7-13)

By Toby Tremlett🔹 @ 2024-09-23T08:27 (+145)

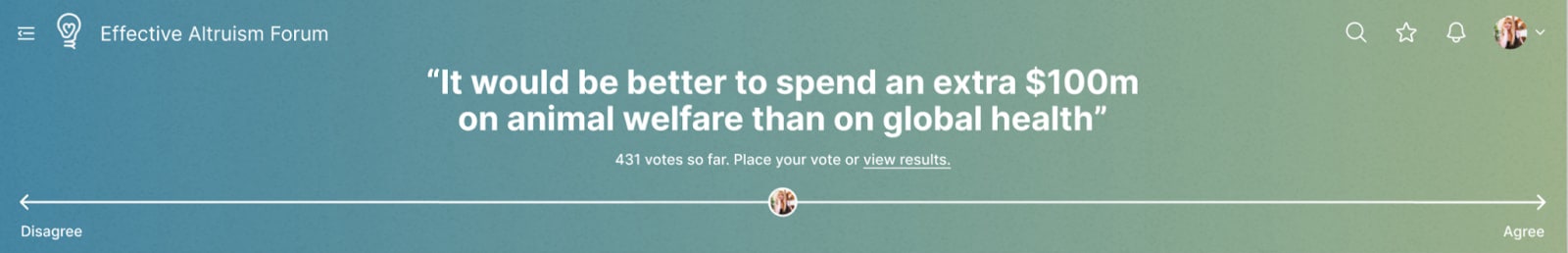

October 7-13th will be Animal Welfare vs Global Health Debate Week. We will be discussing the debate statement “It would be better to spend an extra $100m on animal welfare than on global health”.

As in our last debate week, you will be able to take part by:

- Writing posts with the debate week tag.

- Voting on the debate banner.

- Using our dialogue feature to co-write a discussion with someone you substantively disagree with.

This time, we are adding a feature so that you can explain your vote on the banner, and respond to other people’s explanations on a post (explained below).

If you’d like to improve the quality of the debate, you can also:

- Comment any links you think should be added to the reading list in this post.

- Reach out to friends who might have interesting takes on the debate, and encourage them to take part.

Debate week features

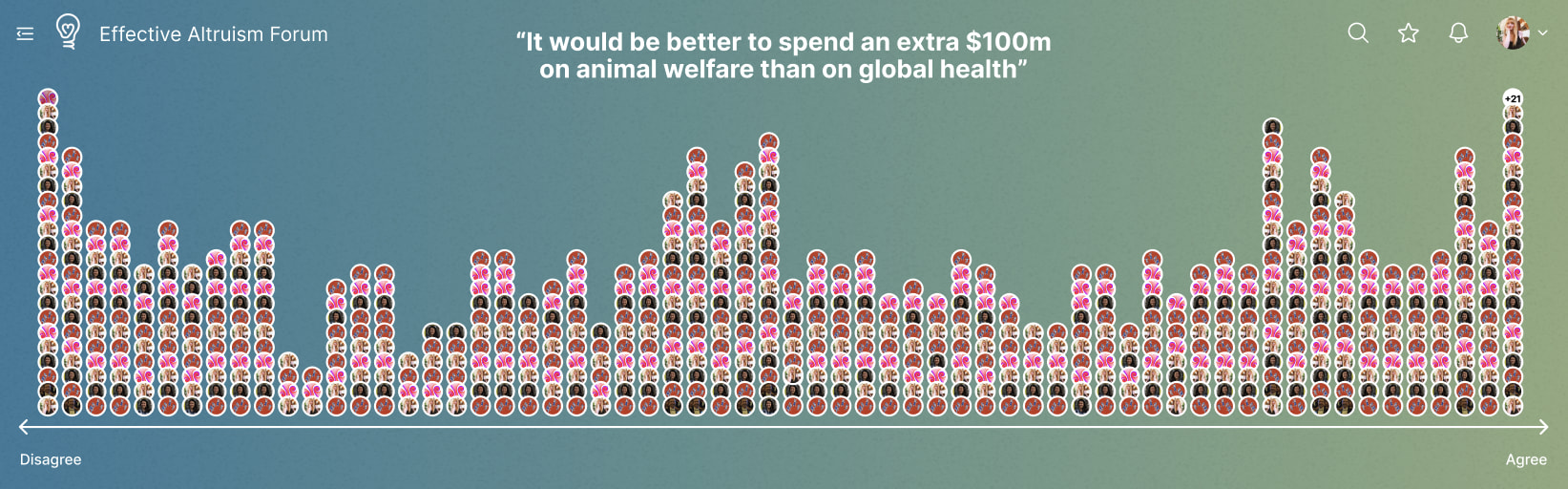

During the debate week (7-13 October), we will have a banner on the front page, where logged-in users can vote (non-anonymously) by placing their avatar anywhere from strongly agree to strongly disagree on a slider.

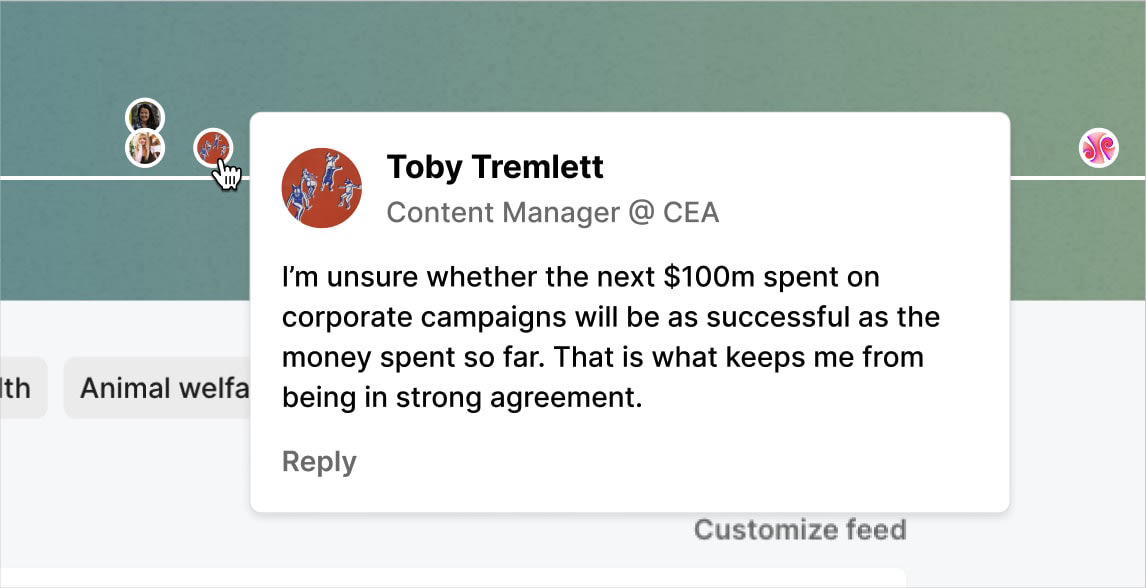

When a user votes, they’ll be given the option to add a comment explaining their vote, or highlighting their remaining uncertainties. This comment will be visible when users hover over each vote on the banner, and separately, it’ll appear as a comment on a debate week discussion thread, so users can respond to it.

Anyone can view the distribution of votes on the banner, in a convenient histogram format, even before they have voted, by clicking the reveal button on the banner.
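As a rough illustration of what a histogram view over slider votes involves, here is a minimal Python sketch. It assumes each vote is stored as a number from -1 (strongly disagree) to 1 (strongly agree) and is counted in five equal-width buckets. The representation, the bucket count, and the names `vote_histogram` and `num_bins` are all hypothetical and are not taken from the Forum's actual implementation.

```python
from collections import Counter


def vote_histogram(votes, num_bins=5):
    """Count slider votes in equal-width buckets across [-1, 1].

    Assumption: -1.0 means strongly disagree and 1.0 means strongly agree.
    """
    counts = Counter()
    for vote in votes:
        if not -1.0 <= vote <= 1.0:
            raise ValueError(f"vote out of range: {vote}")
        # Map [-1, 1] onto bucket indices 0..num_bins-1; a vote of exactly
        # 1.0 is clamped into the last bucket.
        index = min(int((vote + 1.0) / 2.0 * num_bins), num_bins - 1)
        counts[index] += 1
    return [counts[i] for i in range(num_bins)]


# Hypothetical votes, for illustration only.
example_votes = [-1.0, -0.9, -0.3, 0.0, 0.1, 0.5, 0.95, 1.0]
print(vote_histogram(example_votes))
```

Each entry in the returned list is the number of votes in one bucket, ordered from strongly disagree to strongly agree.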

We’ve added the histogram view, and the ability to comment on your vote, because of feedback from last time. Please feel free to give more feedback in the comments, or by DM.

Why this debate?

Figuring out how to prioritise between animal welfare and global health is difficult, but crucial. Prioritisation is a key principle that makes effective altruism unique, but we sometimes wonder where it happens. Why not here?

For the purpose of this debate, I’m defining animal welfare as any intervention which primarily aims to increase the wellbeing of animals, or decrease their suffering, and global health as the same for humans.

We’ve had the discussion publicly a few times, including in this popular post from last November: Open Phil Should Allocate Most Neartermist Funding to Animal Welfare. Some of the anecdata we collected from the Donation Election last year, and the recent Forum user survey, suggested that this post, and Forum discussion in general, have updated people towards animal welfare. I’d love to see the most persuasive arguments out in the open, so we can examine them further.

The Forum’s past engagement on the question suggests that it matters to people, and that we are open to truth-seeking and productive debate on the question.

Let’s discuss!

Crucial considerations

A crucial consideration is a question which, if answered, might substantially change your cause prioritisation.

Below are a few questions which I think may be crucial considerations in this debate. Feel free to add more in the comments. If you are interested in taking a crack at any of these questions, I strongly encourage you to write a post for debate week.

- What are the most promising uses of $100 million in animal welfare and global health?

- How should we weigh the suffering of humans and animals?

- To what extent are farmed animals suffering?

- How should we compare the value of near-certain near-term life improvements with speculative research?

- After how much funding would animal welfare interventions face diminishing returns?

Reading list

- Open Phil Should Allocate Most Neartermist Funding to Animal Welfare – Ariel Simnegar

- Prioritising animal welfare over global health and development? – Vasco Grilo

- The Moral Weight Project Sequence – Rethink Priorities

- Check out @MichaelStJules's more comprehensive reading list in this comment.

- Suggest more in the comments!

Useful tools

- You can play around with OpenPhilanthropy’s grant data in this spreadsheet (thanks @Hamish McDoodles)

- Rethink Priorities’ cross-cause cost-effectiveness model can help you figure out how you should vote/ what your key uncertainties are.

FAQs

Why $100m?

I chose a precise number because I wanted to make the debate more precise (last time the phrasing of the debate statement was a bit too vague).

I chose $100m because it lets us think about very ambitious projects, on the scale of projects available to major foundations such as OpenPhilanthropy. For scale, consider that the Against Malaria Foundation has raised $627m since it started in 2005, and the amount donated to farmed animal advocacy in the US annually has been estimated to be $91m.
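To make the scale comparison concrete, here is a small Python sketch using only the two figures quoted above. The 19-year span (2005 to 2024) is an assumption used to turn the Against Malaria Foundation total into a rough annual average; it is not a figure from either source, and the variable names are illustrative.

```python
# Figures quoted in the paragraph above, in millions of US dollars.
DEBATE_AMOUNT_M = 100
AMF_RAISED_TOTAL_M = 627        # Against Malaria Foundation, since 2005
US_FARMED_ANIMAL_ANNUAL_M = 91  # estimated annual US farmed animal advocacy donations

# Assumption: roughly 19 years of fundraising (2005 to 2024).
AMF_YEARS = 2024 - 2005

amf_average_per_year_m = AMF_RAISED_TOTAL_M / AMF_YEARS
share_of_amf_total = DEBATE_AMOUNT_M / AMF_RAISED_TOTAL_M
multiple_of_us_animal_giving = DEBATE_AMOUNT_M / US_FARMED_ANIMAL_ANNUAL_M

print(f"AMF average per year: ${amf_average_per_year_m:.0f}m")
print(f"$100m as a share of AMF's total: {share_of_amf_total:.0%}")
print(f"$100m relative to annual US farmed animal giving: {multiple_of_us_animal_giving:.2f}x")
```

Under these assumptions, $100m is about three years of AMF's average fundraising, and slightly more than one year of estimated US donations to farmed animal advocacy.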

Is this $100m this year? Or over ten years?

Imagine this is a new $100m trust that can be spent down today, or over any time period you desire. All you have to do now is decide whether the trust will be bound to promote animal welfare, or global health.

What is in scope for the debate?

You may wonder about the scope of the debate— “what if I think that traditional global health interventions aren’t as cost-effective as animal welfare interventions, but increasing economic growth would be more cost-effective? How should I vote?”

The answer is: I think this debate should be very permissive about the interventions that we include. Discussions about the best approaches within the causes of animal welfare and global health are very much in-scope, even if those interventions are less discussed.

If you’re unsure whether the intervention you are considering is in scope for the debate, refer to my definition: “I’m defining animal welfare as any intervention which primarily aims to increase the wellbeing of animals, or decrease their suffering, and global health as the same for humans”.

When you vote, you will have the option to briefly explain your vote. This will be visible on the banner on the frontpage, and separately as a comment on a post-page, so that other users can reply to it. If you think you might have a non-standard interpretation of the question, feel free to explain that in your comment, or in a post.

Do I have to pretend to be a neartermist[1] for this debate?

For example, perhaps you are thinking — “I think AI is the most important cause, and animal welfare and global health are only important insofar as they impact the chances of AI alignment”. This is a reasonable position.

Though I would guess that the most useful/ influential posts during debate week will be based on object level discussion of animal welfare and global health interventions, it is also reasonable to base your decision on second order considerations.

As mentioned above, this is part of the reason we are setting up an easy way for you to explain your vote. For example, if you want to argue that we should prioritise global health because we want people to be economically empowered before the singularity— go ahead.

What should a debate week post look like?

You can contribute to the debate by:

- Writing a full justification of your current vote, and inviting people to disagree with you in the comments.

- Providing an answer to a crucial consideration question, such as those listed above.

- Linkposting interesting work that is relevant to the debate.

- Bringing up new considerations, which haven’t been discussed.

Note that: a valuable post during debate week is one that helps people update their opinions. How you do that is up to you.

Let me know your thoughts

If you are reading this, this event is for you. I’m very grateful to receive feedback, positive or constructive, either in the comments here, or via direct message.

- ^

I know that you don't have to be a neartermist to care about animals, or a longtermist to care about AI. I've included this section just to make it clear that, although this debate is limited to animal welfare and global health, that doesn't mean you have to pretend to have a different philosophy of cause prioritisation in order to vote.

MichaelStJules @ 2024-09-23T14:01 (+101)

Some other potentially useful references for this debate:

- Emily Oehlsen's/Open Phil's response to Open Phil Should Allocate Most Neartermist Funding to Animal Welfare, and the thread that follows, (EDIT) and other comments there.

- How good is The Humane League compared to the Against Malaria Foundation? by Stephen Clare and AidanGoth for Founders Pledge (using old cost-effectiveness estimates).

- Discussion of the two envelopes problem for moral weights (can get pretty technical):

- GiveWell's marginal cost-effectiveness estimates for their top charities, of course

- Some recent-ish (mostly) animal welfare intervention cost-effectiveness estimates:

- Track records of Charity Entrepreneurship-incubated charities (animal and global health)

- Charity Entrepreneurship prospective animal welfare reports and global health reports

- Charity Entrepreneurship Research Training Program (2023) prospective reports

- on animal welfare with cost-effectiveness estimates: Intervention Report: Ballot initiatives to improve broiler welfare in the US by Aashish K and Exploring Corporate Campaigns Against Silk Retailers by Zuzana Sperlova and Moritz Stumpe

- Electric Shrimp Stunning: a Potential High-Impact Donation Opportunity by MHR

- Prospective cost-effectiveness of farmed fish stunning corporate commitments in Europe by Sagar K Shah for Rethink Priorities

- Estimates for some Healthier Hens interventions ideas (and a comment thread)[1]

- Emily Oehlsen's/Open Phil's response above

- Animal welfare cost-effectiveness estimates based on older intervention work:

- Corporate campaigns affect 9 to 120 years of chicken life per dollar spent by saulius for Rethink Priorities

- A Cost-Effectiveness Analysis of Historical Farmed Animal Welfare Ballot Initiatives by Laura Duffy for Rethink Priorities

- Megaprojects for animals by JamesÖz and Neil_Dullaghan🔹

- Meat-eater problem and related posts

- Wild animal effects of human population and diet change:

- How Does Vegetarianism Impact Wild-Animal Suffering? by Brian Tomasik and his related posts

- Does the Against Malaria Foundation Reduce Invertebrate Suffering? by Brian Tomasik

- Finding bugs in GiveWell's top charities by Vasco Grilo🔸

- My recent posts on fishing: Sustainable fishing policy increases fishing, and demand reductions might, too and The moral ambiguity of fishing on wild aquatic animal populations.

- ^

Healthier Hens is shutting down or has already shut down, according to the Charity Entrepreneurship Newsletter. Their website is also down.

Vasco Grilo🔸 @ 2024-09-24T17:12 (+30)

Thanks, Michael! Here are a few more posts:

- Founders Pledge’s Climate Change Fund might be more cost-effective than GiveWell’s top charities, but it is much less cost-effective than corporate campaigns for chicken welfare?, where I Fermi estimate corporate campaigns for chicken welfare are 1.51 k times as cost-effective as GiveWell’s top charities.

- Cost-effectiveness of buying organic instead of barn eggs, where I Fermi estimate that buying organic instead of barn eggs in the European Union is 2.11 times as cost-effective as GiveWell’s top charities.

- Cost-effectiveness of School Plates, where I Fermi estimate that School Plates[1] is 60.2 times as cost-effective as GiveWell’s top charities.

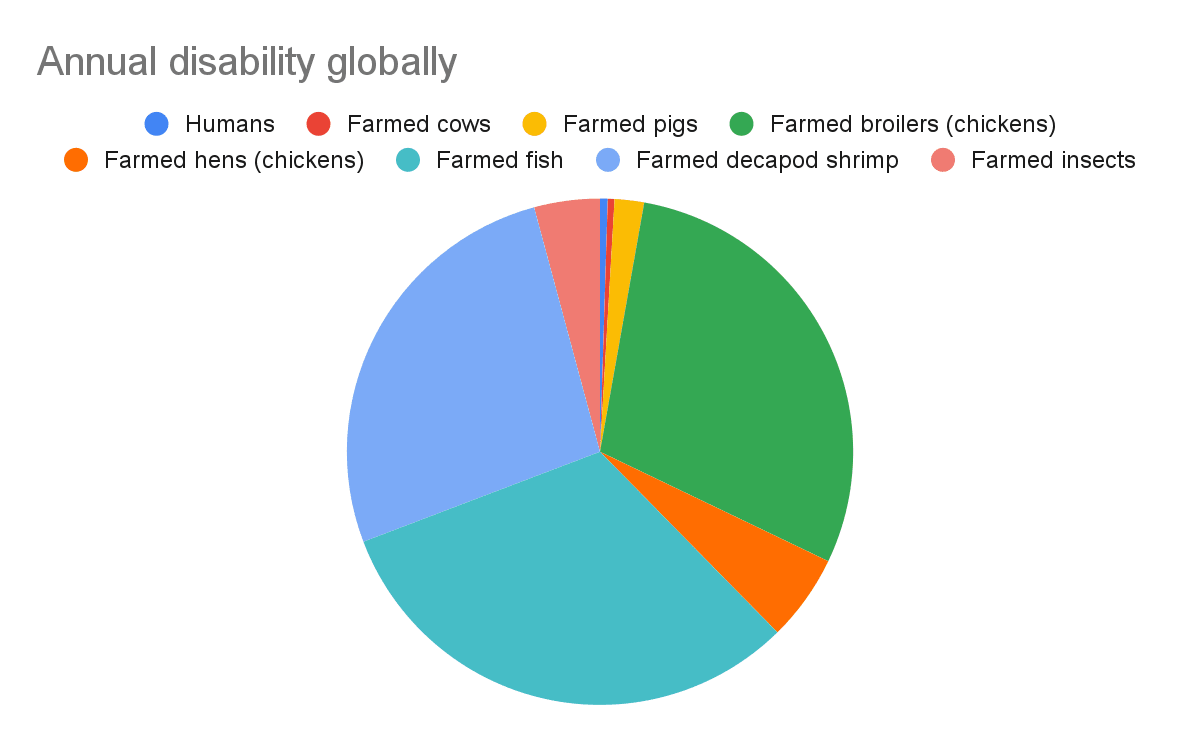

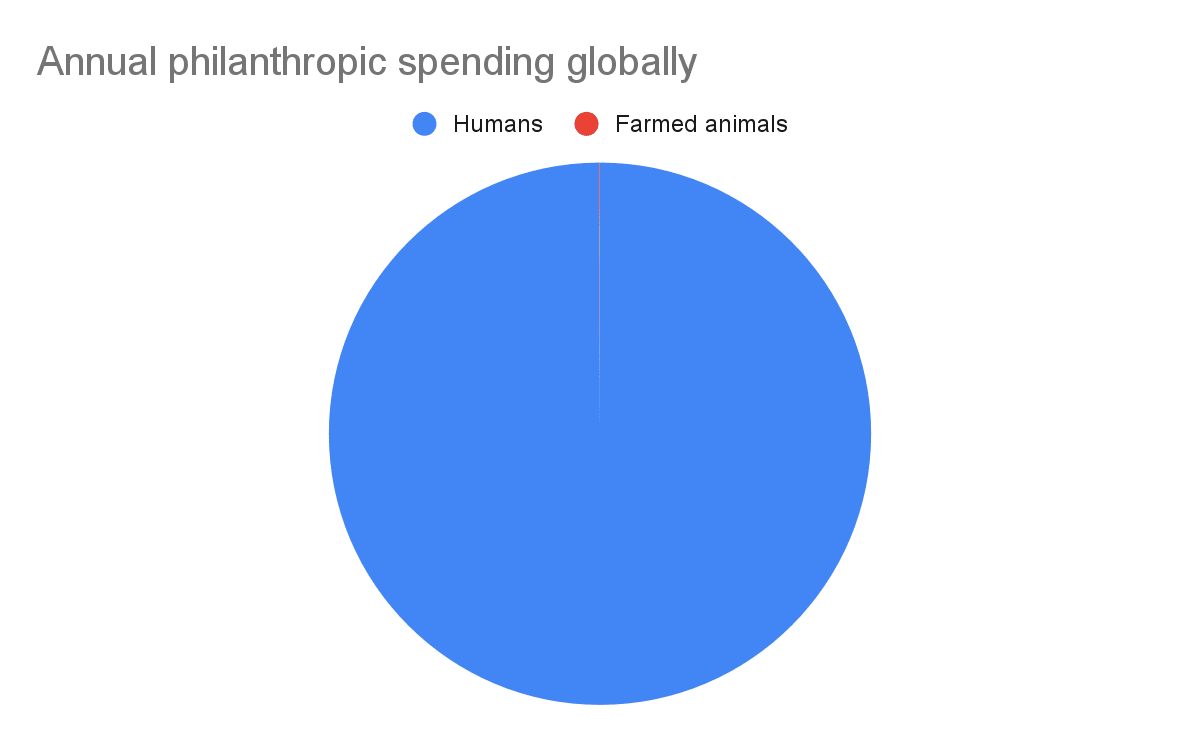

- Farmed animals are neglected, where I conclude the annual disability of farmed animals is much larger than that of humans, whereas the annual funding helping farmed animals is much smaller than that helping humans:

- ^

Program aiming to increase the consumption of plant-based foods at schools and universities in the United Kingdom (UK).

JackM @ 2024-09-26T00:50 (+15)

I'd also add the cluelessness critique as relevant reading. I think it's a problem for global health interventions, although I realize that one could also argue that it is a problem for animal welfare interventions. In any case it seems highly relevant for this debate.

justsaying @ 2024-09-26T02:35 (+7)

This critique seems to me to be applicable to the entire EA project.

David Mathers🔸 @ 2024-09-27T12:59 (+4)

Probably far beyond as well, right? There's nothing distinctive about EA projects that makes them [EDIT: more] subject to potential far future bad consequences we don't know about. And even (sane) non-consequentialists should care about consequences amongst other things, even if they don't care only about consequences.

JackM @ 2024-09-28T17:10 (+3)

I dispute this. I'm admittedly not entirely sure but here is my best explanation.

A lot of EA interventions involve saving lives which influences the number of people who will live in the future. This in turn, we know, will influence the following (to just give two examples):

- The number of animals who will be killed for food (i.e. impacting animal welfare).

- CO2 emissions and climate change (i.e. impacting the wellbeing of humans and wild animals in the future).

Importantly, we don't know the sign and magnitude of these "unintended" effects (partly because we don't in fact know if saving lives now causes more or fewer people in the future). But we do know that these unintended effects will predictably happen and that they will swamp the size of the "intended" effect of saving lives. This is where the complex cluelessness comes in. Considering predictable effects (both intended and not intended), we can't really weigh them. If you think you can weigh them, then please tell me more.

So I think it's the saving lives that really gets us into a pickle here - it leads to so much complexity in terms of predictable effects.

There are some EA interventions that don't involve saving lives and don't seem to me to run into a cluelessness issue e.g. expanding our moral circle through advocacy, building AI governance structures to (for instance) promote global cooperation, global priorities research. I don't think these interventions run into the complex cluelessness issue because, in my opinion, it seems easy to say that the expected positives outweigh expected negatives. I explain this a little more in this comment chain.

Also, note that under Greaves' model there are types of cluelessness that are not problematic, which she calls "simple cluelessness". An example is if we are deciding whether to conceive a child on a Tuesday or a Wednesday. Any chance that one of the options might have some long-run positive or negative consequence will be counterbalanced by an equal chance that the other will have that consequence. In other words there is evidential symmetry across the available choices.

A lot of "non-EA" altruistic actions I think we will have simple cluelessness about (rather than complex), in large part because they don't involve saving lives and are often on quite a small scale so aren't going to predictably influence things like economic growth. For example, giving food to a soup kitchen - other than helping people who need food it isn't at all predictable what other unintended effects will be so we have evidential symmetry and can ignore them. Basically, a lot of "non-EA" altruistic actions might not have predictable unintended effects, in large part because they don't involve saving lives. So I don't think they will run us into the cluelessness issue.

I need to think about this more but would welcome thoughts.

justsaying @ 2024-09-29T15:00 (+1)

You don't think a lot of non-EA altruistic actions involve saving lives??

JackM @ 2024-09-29T17:16 (+3)

Yes but if I were to ask my non-EA friends what they give to (if they give to anything at all) they will say things like local educational charities, soup kitchens, animal shelters etc. I do think EA generally has more of a focus on saving lives.

JackM @ 2024-09-26T09:27 (+4)

I'm not so sure about that. The link above argues longtermism may evade cluelessness (which I also discuss here) and I provide some additional thoughts on cause areas that may evade the cluelessness critique here.

justsaying @ 2024-09-27T14:57 (+7)

I am pretty unmoved by this distinction, and based on the link above, it seems that Greaves is really just making the point that a longtermism mindset incentivizes us to find robustly good interventions, not that it actually succeeds. I think it's pretty easy to make the cluelessness case about AI alignment as a cause area, for example. Seems quite plausible to me that a lot of so-called alignment work is actually just serving to speed capabilities. Also seems to me that you could align an AI to human values and find that human values are quite bad. Or you could successfully align AI enough to avoid extinction and find that the future is astronomically bad and extinction would have been preferable.

JackM @ 2024-09-27T18:38 (+2)

Just to make sure I understand your position - are you saying that the cluelessness critique is valid and that it affects all altruistic actions? So Effective Altruism and altruism generally are doomed enterprises?

I don't buy that we are clueless about all actions. For example, I would say that something like expanding our moral circle to all sentient beings is robustly good in expectation. You can of course come up with stories about why it might be bad, but these stories won't be as forceful as the overall argument that a world that considers the welfare of all beings (that have the capacity for welfare) important is likely better than one that doesn't.

justsaying @ 2024-09-27T20:14 (+1)

The point that I was initially trying to make was only that I don't think the generalized cluelessness critique particularly favors one cause (for example animal welfare ) over another (for example human health--or vice versa). I think you might make specific arguments about uncertainty regarding particular causes or interventions, but pointing to a general sense of uncertainty does not really move the needle towards any particular cause area.

Separate from that point, I do sort of believe in cluelessness (moral and otherwise) more generally, but honestly just try to ignore that belief for the most part.

JackM @ 2024-09-27T21:37 (+2)

I see where Greaves is coming from with the longtermist argument. One way to avoid the complex cluelessness she describes is to ensure the direct/intended expected impact of your intervention is sufficiently large so as to swamp the (foreseeable) indirect expected impacts. Longtermist interventions target astronomical / very large value, so they can in theory meet this standard.

I’m not claiming all longtermist interventions avoid the cluelessness critique. I do think you need to consider interventions on a case-by-case basis. But I think there are some fairly general things we can say. For example, the issue with global health interventions is that they pretty much all involve increasing the consumption of animals by saving human lives, so you have a negative impact there which is hard to weigh against the benefits of saving a life. You don’t have this same issue with animal welfare interventions.

justsaying @ 2024-09-29T14:55 (+1)

I think about the meat eater problem as pretty distinct from general moral cluelessness. You can estimate how much additional meat people will eat as their incomes increase or as they continue to live. You might be highly uncertain about weighing animals vs. humans as moral patients, but that is also something you can pretty directly debate, and see the implications of different weights. I think of cluelessness as applying only when there are many, many possible consequences that could be highly positive or negative, and it's nearly impossible to discuss or attempt to quantify them because the dimensions of uncertainty are so numerous.

JackM @ 2024-09-29T17:14 (+2)

You're right that the issue at its core isn't the meat eater problem. The bigger issue is that we don't even know if saving lives now will increase or decrease future populations (there are difficult arguments on both sides). If we don't even know that, then we are going to be at a complete loss to try to conduct assessments on animal welfare and climate change, even though we know there are going to be important impacts here.

MHR @ 2024-09-26T12:26 (+6)

Great list (and thanks for the shoutout)!

I would add @Laura Duffy's How Can Risk Aversion Affect Your Cause Prioritization? post

Toby Tremlett🔹 @ 2024-09-23T14:24 (+5)

Thank you! These are great. I'll link the comment in the text.

CB🔸 @ 2024-09-24T07:10 (+20)

Great idea! I really support this debate; I think it is a topic that is currently not taken into account enough.

I'd be surprised if there isn't something in the order of a 100x difference in cost-effectiveness in favour of animal interventions (as indicated in some of the resources above).

Animals are much more numerous, neglected, and have terrible living conditions, so there's simply much more to do. And as indicated by research from the Moral Weight Project, it's hard to have high confidence that their level of sentience is very low compared to humans.

We have a natural tendency to prefer humans (we know them well, after all), so a context in which we can challenge this assumption is welcome.

Jason @ 2024-09-23T14:51 (+9)

I wonder if it would be worthwhile to include a yes/no/undefined set of buttons that people could use to share if they are basing their decision primarily on second order considerations. Conditional on a significant fraction of people doing so, we might learn something interesting from the vote split in each category. That wouldn't provide the richness of data that a custom narrative yields, but it is easier to statistically analyze a fixed-response question, and more people may respond to a three-second question than provide a narrative.

Toby Tremlett🔹 @ 2024-09-23T16:17 (+4)

That's interesting- I guess I'm expecting so much diversity in responses that one fixed response question would probably raise more questions than it answered (i.e. "which second-order consideration?"). An alternative would be to send out a short survey afterwards to a randomised group of voters from across the spectrum. Depending on the content of people's comments maybe we could also categorise them and do some kind of basic analysis (i.e. without sending a survey out).

Jason @ 2024-09-23T21:59 (+2)

Makes sense -- one use case for me is that I'd be more inclined to defer to community judgment based on certain grounds than on others in allocating my own (much more limited!) funds.

E.g., if perspective X already gets a lot of weight from major funders, or if I think I'm in a fairly good position to weigh X relative to others, then I'd probably defer less. On the other hand, there are some potential cruxes on which various factors point toward more deference.

The specific statement I was reacting to was that people might vote based on their views about what happens after a singularity. For various reasons, I would not be inclined to defer to GH/animal welfare funding splits that were premised on that kind of reasoning. (Not that the reasoning is somehow invalid, it's just not the kind of data that would materially update how I donate.)

Barry Grimes @ 2024-09-23T12:23 (+9)

It would be better to call this: Animal Welfare vs Human Welfare Debate Week

When your scope extends to "any intervention which primarily aims to increase the wellbeing of animals, or decrease their suffering, and...the same for humans", the term "global health" only represents a subset of possible interventions.

Toby Tremlett🔹 @ 2024-09-23T14:23 (+4)

Thanks for bringing this up Barry! The exact phrasing has gone through a lot of workshopping, but I didn't spot this. Perhaps I should change the debate statement to "global health and wellbeing" to cover this area.

On your specific suggestion, I disagree because "Animal Welfare vs Human Welfare" seems to suggest a necessary trade-off between animal and human welfare, whereas I'm hoping that we will be discussing the best ways to increase welfare overall, whether for humans or animals.

Barry Grimes @ 2024-09-23T16:16 (+4)

Thanks Toby! Health is part of wellbeing, so "global wellbeing" would be sufficient.

However, it's worth noting that "global wellbeing" should apply to all moral patients, whereas "global health" is usually understood as a human-specific set of interventions.

Toby Tremlett🔹 @ 2024-09-23T16:21 (+2)

Exactly- I was also thinking of "global wellbeing" but then realised that would also include animals. "Global health and wellbeing" is the name for the cause area in OpenPhilanthropy's terminology which only applies to humans, so I think that would have meaning to some people (although it is easy to overestimate average EA context when you've been around it so long).

Another alternative is to do something like we did on the last debate week, and have definitions of the terms appear when you hover over them in the banner. I'll chat to Will, who is developing the banner, about our options when I see him tomorrow.

Cheers!

Toby Tremlett🔹 @ 2024-09-23T17:01 (+2)

I've just been informed that "global health and wellbeing" actually is intended by OP to include animal welfare- so I'm disagree-reacting the above comment.

Barry Grimes @ 2024-09-23T16:27 (+2)

Maybe you should have a separate debate week on the most appropriate name for the "global health" cause area ;o)

justsaying @ 2024-09-23T19:00 (+1)

It seems like the whole premise of this debate is (rightly) based on the idea that there is in fact a necessary trade-off between human and animal welfare, no? I.e. if we give the $100 million towards the most cost-effective human focused intervention we can think of then we are necessarily not giving it towards the most cost-effective animal-focused intervention we can think of, no? Of course it is theoretically possible that there exists some intervention which is simultaneously the most cost-effective intervention on both a humans-per-dollar and animals-per-dollar but that seems extremely unlikely.

Toby Tremlett🔹 @ 2024-09-24T08:30 (+2)

Yep- there is a trade-off in the sense that the money will go to one, and the other will miss out. I wasn't very clear in my previous comment- sorry!

What I meant is that we ideally aren't pitting human and animal welfare against each other. Most arguments, I expect, will be claiming that giving the money in one direction will increase welfare overall. In fact, this increase in welfare will accrue to either animals or humans, but the question was never "which is more deserving of welfare", it was "which option will produce the most welfare". Does that make it clearer? It's a subtler point than I thought while making it.

Leo @ 2024-09-30T18:04 (+5)

As I said last time, quantifying agreement/disagreement is much more confusing to determine and to read than just measuring, out of an extra $100m, how many $ millions people would assign to global health/animal welfare. The banner would go from 0 to 100, and whatever you vote, let's say 30m, would mean that 30m should go to one cause and 70m to the other. As it is, just to mention one paradox, if I wholly disagree with the statement, it means that I think it wouldn't be better to spend the money on animal welfare than on global health, which in turn could mean a) I want all the extra funding to go to global health, or b) I don't agree at all with the statement, because I think it would be better to allocate the money differently, say 10m/90m. Now if you vote 90% agreement, it could mean b, or it could mean that you almost fully agree for other reasons, for example, because you think there's a 10% chance that you are wrong.
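A minimal Python sketch of the allocation-style vote described above, for comparison with the agreement slider. The function names, the choice of animal welfare as the cause a vote refers to, and the example votes are all hypothetical.

```python
TOTAL_M = 100  # the extra $100m in the debate statement, in millions


def allocation_split(animal_welfare_m):
    """Turn one allocation vote into an (animal welfare, global health) split."""
    if not 0 <= animal_welfare_m <= TOTAL_M:
        raise ValueError("vote must be between 0 and 100")
    return animal_welfare_m, TOTAL_M - animal_welfare_m


def mean_split(votes_m):
    """Average many allocation votes into one community-level split."""
    mean_animal = sum(votes_m) / len(votes_m)
    return mean_animal, TOTAL_M - mean_animal


print(allocation_split(30))      # (30, 70)
print(mean_split([30, 10, 80]))  # (40.0, 60.0)
```

Read this way, a vote has a single meaning, whereas a 90% agreement vote on the slider can reflect either a preferred split or a level of confidence.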

Toby Tremlett🔹 @ 2024-10-01T08:55 (+5)

Thanks Leo! I remember your comment from last time, it's a fair point.

We did consider framing the question exactly like that (i.e. splitting 100m between the two), but I decided against it. The main reason was that a vote would actually seem to project far more certainty if you had to give a precise number than with this question, which might introduce a far higher barrier to voting. The reason we have voting in a debate week is not to produce a perfectly accurate aggregation of everyone's opinion (though, all else equal, a more accurate aggregation is better), but rather to encourage and enable valuable conversation on crucial questions. So a question that is framed in a way which still makes sense and represents a preference, but will get more votes, is probably a better one.

I do understand that the meaning of a vote is ambiguous, but this is why we are introducing commenting, so you can explain the reason behind your vote. Hopefully, this means that ambiguities like the one you mention won't matter too much.

Leo @ 2024-10-01T10:02 (+1)

I see, thanks. I guess I would have preferred a more accurate, unambiguous aggregation of everyone’s opinion, to have a clearer sense of the preferences of the community as a whole, but I'm starting to think that it's just me.

Toby Tremlett🔹 @ 2024-10-01T10:57 (+3)

That's fair enough Leo! It's definitely not just you. But if that was my only goal, I'd probably run a survey rather than a debate week. During this week we are hoping to see conversations which change minds, which means juggling a few goals.

Maybe in the future (no promises) we will introduce polls or debate sliders to add in posts, and then we could get more niche and precise data.

Nirmal George @ 2024-09-30T19:38 (+3)

In a world that often prioritizes human needs above all else, we stand at a critical juncture where we must reconsider our moral compass. The narrative of human exceptionalism has long permeated our thinking, allowing us to justify neglecting the plight of non-human animals in favour of our own interests. However, this perspective is not only ethically troubling but also profoundly short-sighted. It is time to embrace an inclusive vision of animal welfare that recognizes the intrinsic value of all sentient beings, rather than clinging to a narrow, species-centric approach cloaked in the benevolent terminology of "global health."

Imagine for a moment the voice of a cow confined to a dimly lit barn, her sorrowful eyes reflecting a lifetime spent in confinement, deprived of the sun’s warmth and the freedom to roam. Picture the millions of chickens crammed into battery cages, each one a mere number in a system that values profit over life, her cries drowned out by the hum of machinery. These are not mere commodities; they are beings capable of experiencing joy, pain, and a deep longing for connection and freedom. By dismissing their suffering in the name of human-centric welfare, we perpetuate a cycle of exploitation that diminishes our own humanity.

In advocating for inclusive animal welfare, we recognize that the health of our planet and the well-being of humanity are inextricably linked to the treatment of non-human animals. The rise of zoonotic diseases, driven by the degradation of animal habitats and intensive farming practices, starkly illustrates that our fates are intertwined. By addressing the welfare of all species, we not only safeguard their rights but also create a healthier, more sustainable world for ourselves. When we extend compassion beyond our own species, we foster a deeper connection to the natural world, cultivating a sense of responsibility that reverberates through ecosystems and communities alike.

Moreover, embracing inclusive animal welfare is a powerful act of solidarity. It challenges us to confront the uncomfortable truths of our consumption patterns and the systems that underpin them. It invites us to reimagine a world where ethical considerations guide our choices, leading to a future where both human and animal lives are valued equally. By advocating for the welfare of all beings, we dismantle the hierarchies that permit suffering and create pathways toward empathy, justice, and coexistence.

In a time when environmental degradation, climate change, and social inequities loom large, we cannot afford to ignore the suffering of our fellow sentient beings. To prioritize human welfare at the expense of others is to turn a blind eye to the interconnected web of life that sustains us all. We must reject the notion that our species is the pinnacle of existence and instead embrace a holistic approach that champions the welfare of all animals, recognizing that their well-being is integral to our own survival and flourishing.

By investing in inclusive animal welfare, we make a profound statement about our values and the legacy we wish to leave behind. Let us strive to build a world where compassion knows no bounds, where the cries of the voiceless resonate in the hearts of all who call this planet home. In doing so, we will not only uplift those who suffer in silence but also elevate our own humanity, creating a future that honours the dignity of every living being.

Toby Tremlett🔹 @ 2024-09-23T09:06 (+3)

Agree react to this comment if you'd be interested in taking part in a virtual co-working session (probably in gather.town) to get some accountability on writing a post for debate week.