GovAI: Towards best practices in AGI safety and governance: A survey of expert opinion

By Zach Stein-Perlman @ 2023-05-15T01:42 (+68)

This is a linkpost to https://arxiv.org/pdf/2305.07153.pdf

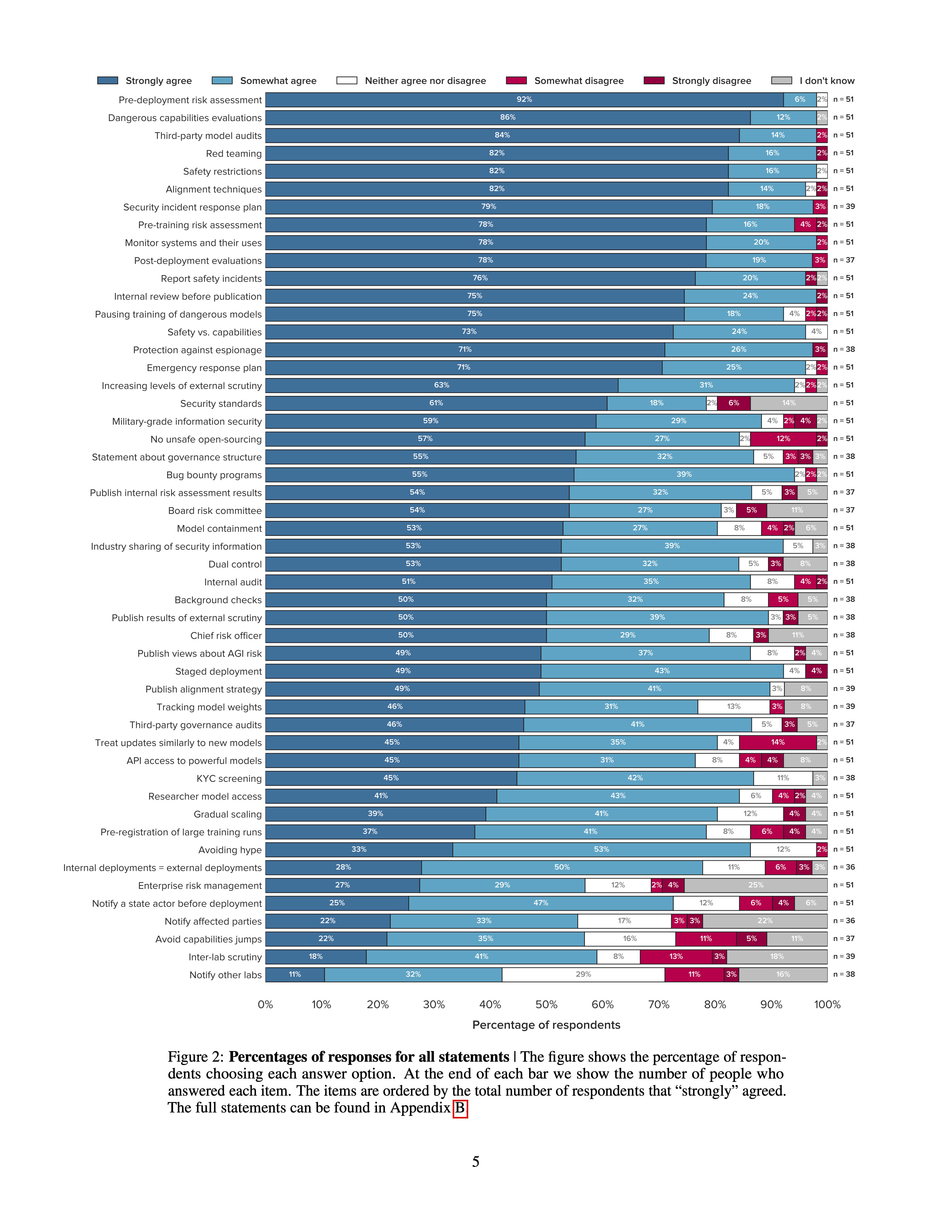

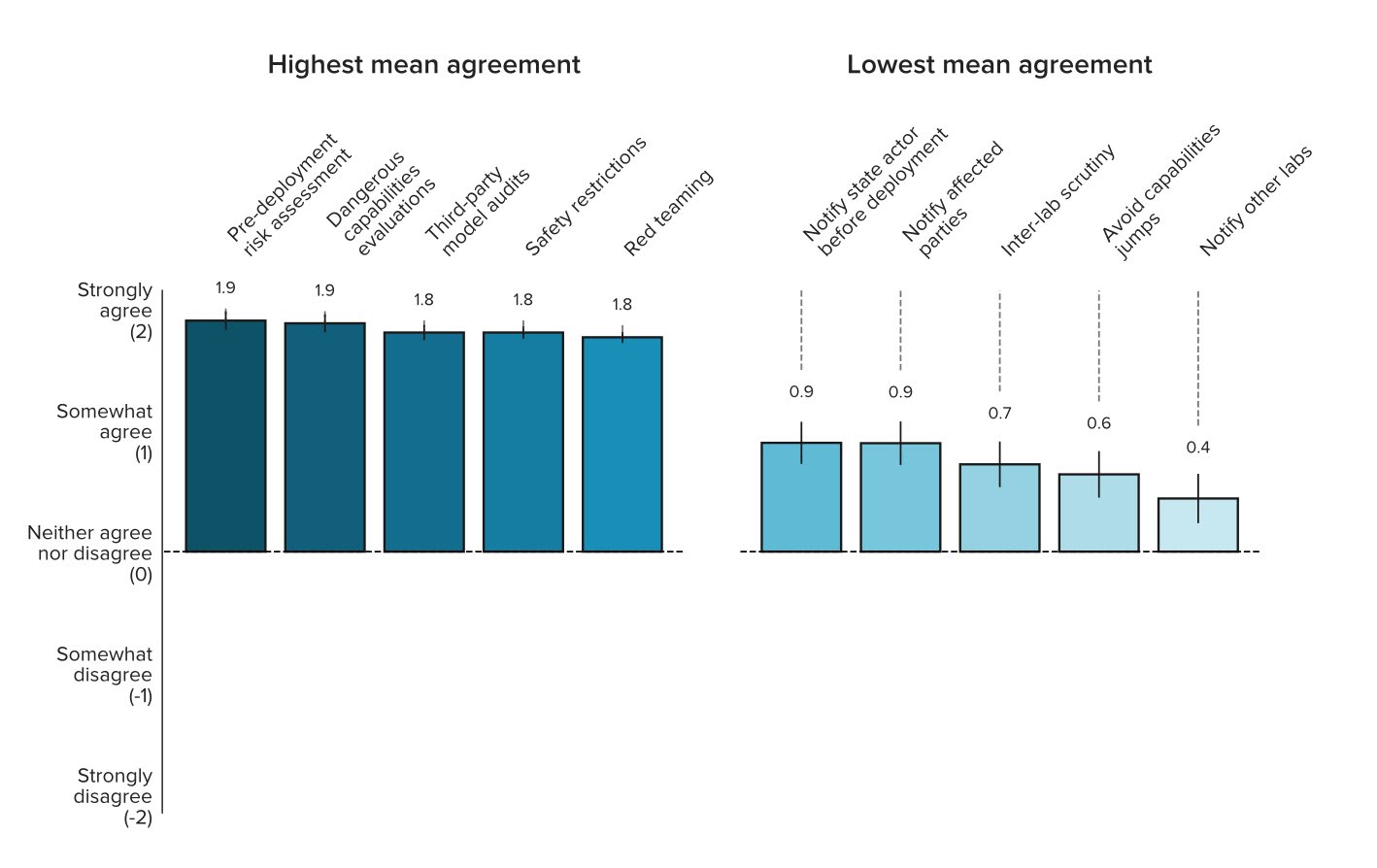

A number of leading AI companies, including OpenAI, Google DeepMind, and Anthropic, have the stated goal of building artificial general intelligence (AGI): AI systems that achieve or exceed human performance across a wide range of cognitive tasks. In pursuing this goal, they may develop and deploy AI systems that pose particularly significant risks. While they have already taken some measures to mitigate these risks, best practices have not yet emerged. To support the identification of best practices, we sent a survey to 92 leading experts from AGI labs, academia, and civil society and received 51 responses. Participants were asked how much they agreed with 50 statements about what AGI labs should do. Our main finding is that participants, on average, agreed with all of them. Many statements received extremely high levels of agreement. For example, 98% of respondents somewhat or strongly agreed that AGI labs should conduct pre-deployment risk assessments, dangerous capabilities evaluations, third-party model audits, safety restrictions on model usage, and red teaming. Ultimately, our list of statements may serve as a helpful foundation for efforts to develop best practices, standards, and regulations for AGI labs.

I'm really excited about this paper. It seems to be great progress toward figuring out what labs should do and making that common knowledge.

(And apparently safety evals and pre-deployment auditing are really popular, hooray!)

Edit: see also the blogpost.

Lizka @ 2023-05-26T11:03 (+4)

I'm surprised at how much agreement there is about the top ideas. The following 14 ideas all got more than 70% "strongly agree" and at most 3% "strongly disagree" (note that not everyone answered each question, although most of these 14 did get all 51 responses):

- Pre-deployment risk assessment. AGI labs should take extensive measures to identify, analyze, and evaluate risks from powerful models before deploying them.

- Dangerous capability evaluations. AGI labs should run evaluations to assess their models’ dangerous capabilities (e.g. misuse potential, ability to manipulate, and power-seeking behavior).

- Third-party model audits. AGI labs should commission third-party model audits before deploying powerful models.

- Safety restrictions. AGI labs should establish appropriate safety restrictions for powerful models after deployment (e.g. restrictions on who can use the model, how they can use the model, and whether the model can access the internet).

- Red teaming. AGI labs should commission external red teams before deploying powerful models.

- Monitor systems and their uses. AGI labs should closely monitor deployed systems, including how they are used and what impact they have on society.

- Alignment techniques. AGI labs should implement state-of-the-art safety and alignment techniques.

- Security incident response plan. AGI labs should have a plan for how they respond to security incidents (e.g. cyberattacks).

- Post-deployment evaluations. AGI labs should continually evaluate models for dangerous capabilities after deployment, taking into account new information about the model’s capabilities and how it is being used.

- Report safety incidents. AGI labs should report accidents and near misses to appropriate state actors and other AGI labs (e.g. via an AI incident database).

- Safety vs capabilities. A significant fraction of employees of AGI labs should work on enhancing model safety and alignment rather than capabilities.

- Internal review before publication. Before publishing research, AGI labs should conduct an internal review to assess potential harms.

- Pre-training risk assessment. AGI labs should conduct a risk assessment before training powerful models.

- Emergency response plan. AGI labs should have and practice implementing an emergency response plan. This might include switching off systems, overriding their outputs, or restricting access.

The ideas that had the most disagreement seem to be:

- 49 (48 in the graphic above) - Avoid capabilities jumps. AGI labs should not deploy models that are much more capable than any existing models.

  - 11% somewhat disagree, 5% strongly disagree, and only 22% strongly agree and 35% somewhat agree (37 responses)

- 48 (49 in the graphic above) - Inter-lab scrutiny. AGI labs should allow researchers from other labs to scrutinize powerful models before deployment.

  - 13% somewhat disagree, 3% strongly disagree, 41% somewhat agree, 18% strongly agree (37 responses)

- 37 - No [unsafe] open-sourcing. AGI labs should not open-source powerful models, unless they can demonstrate that it is sufficiently safe to do so.

  - 14% disagree (somewhat or strongly), 57% strongly agree, 27% somewhat agree (51 responses)

- 42 - Treat updates similarly to new models. AGI labs should treat significant updates to a deployed model (e.g. additional fine-tuning) similarly to its initial development and deployment. In particular, they should repeat the pre-deployment risk assessment.

  - 14% somewhat disagree, but 45% strongly agree and 35% somewhat agree (51 responses)

- 50 - Notify other labs. AGI labs should notify other labs before deploying powerful models.

  - 11% somewhat disagree, 3% strongly disagree, 11% strongly agree, 32% somewhat agree (38 responses)

And one more:

- 21 - Security standards. AGI labs should comply with information security standards (e.g. ISO/IEC 27001 or NIST Cybersecurity Framework). These standards need to be tailored to an AGI context.

  - Got the most strong disagreement: 6% (51 responses), although it's still popular overall (61% strongly agree, 18% somewhat agree)

(Ideas copied from here — thanks!)

Lizka @ 2023-05-26T10:41 (+4)

In case someone finds it interesting, Jonas Schuett (one of the authors) shared a thread about this: https://twitter.com/jonasschuett/status/1658025266541654019?s=20

He says the thread covers the survey's:

- Purpose

- Main findings

  - "Our main finding is that there is already broad support for many practices!"

  - "Interestingly, respondents from AGI labs had significantly higher mean agreement with statements than respondents from academia and civil society."

- Sample (response rate 55.4%, 51 responses)

- Questions

- Limitations

  - E.g.: "We likely missed leading experts in our sampling frame that should have been surveyed." and "The abstract framing of the statements probably led to higher levels of agreement."

- Policy implications

Also, the thread includes a nice graphic from the paper.

david_reinstein @ 2025-08-09T22:34 (+2)

By the way, the paper "Towards best practices in AGI safety and governance: A survey of expert opinion" was evaluated by the Unjournal (see unjournal.pubpub.org). (Semi-automated comment)

david_reinstein @ 2025-08-29T08:39 (+2)

I made a post about this with some takeaways. TLDR/opinionated: The paper is a strong start but has some substantial limitations and needs more caveating.

I realize the paper is already a few years old. My own take: we need more work in this area, perhaps follow-up work doing a similar survey that takes sample selection and question design more seriously. I'd like to see and evaluate more recent work like that.

I hope we can identify & evaluate such work in a timely fashion.

E.g., there is some overlap with

https://papers.ssrn.com/sol3/papers.cfm?abstract_id=5021463

which "focuses on measures to mitigate systemic risks associated with general-purpose AI models, rather than addressing the AGI scenario considered in this paper"

Lizka @ 2023-05-26T11:10 (+2)

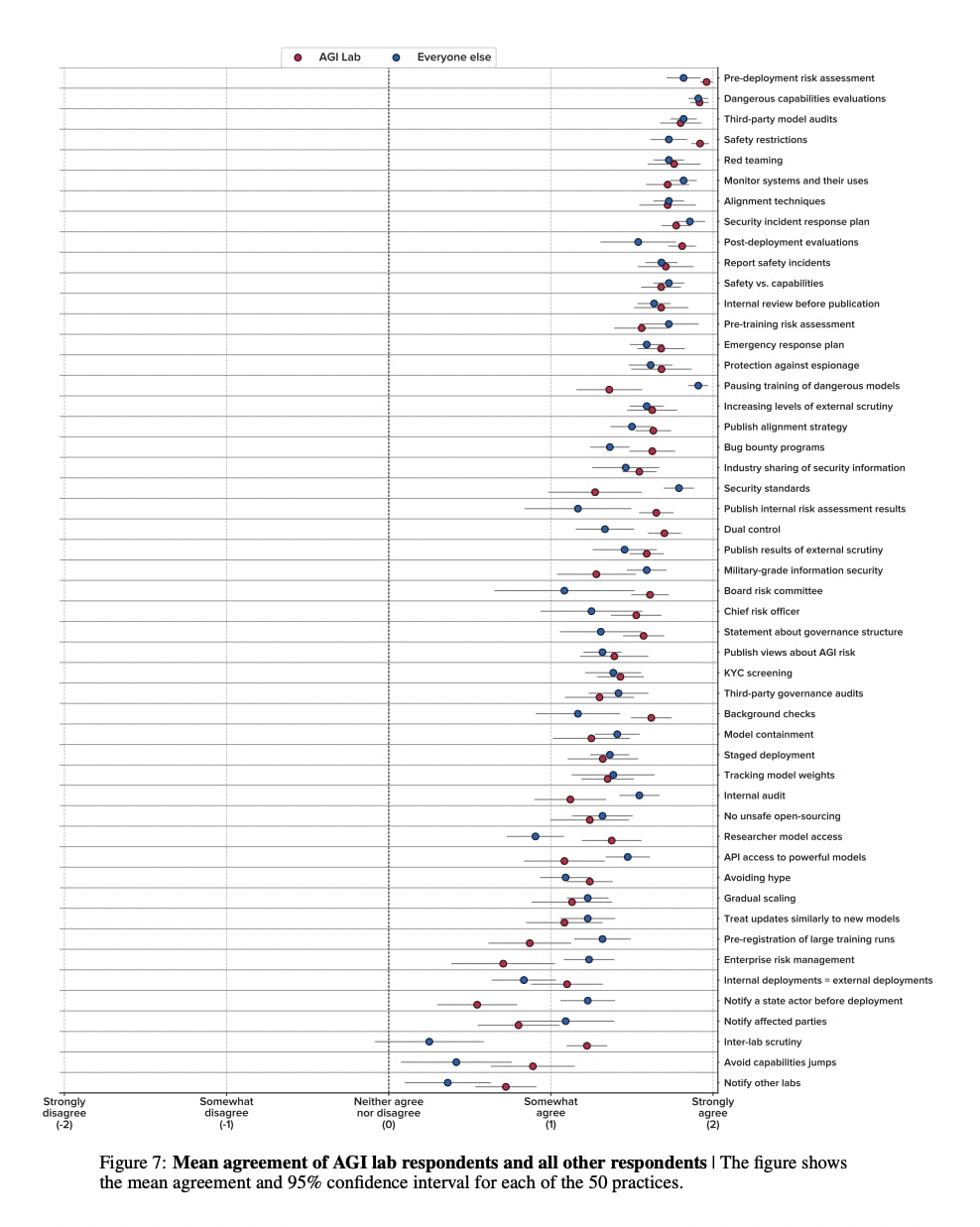

I also find the following chart interesting (although I think none of these differences are statistically significant), particularly the fact that pausing training of dangerous models and security standards got more agreement from people who aren't in AGI labs, while (at a glance) the following got more agreement from people who are in labs:

- inter-lab scrutiny

- avoid capabilities jumps

- board risk committee

- researcher model access

- publish internal risk assessment results

- background checks

In general, apparently "experts from AGI labs had higher average agreement with statements than respondents from academia or civil society."

Note that 43.9% of respondents (22 people?) are from AGI labs.
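The headline numbers in this thread can be cross-checked with simple arithmetic. The Python sketch below uses only figures quoted above (92 experts invited, 51 responses, 43.9% of respondents from AGI labs); it is a back-of-the-envelope check, not the paper's own analysis. It confirms the 55.4% response rate and shows that 43.9% of 51 is about 22.4 people, so "22 people" is close but not exact: neither 22/51 nor 23/51 rounds to 43.9%. That may mean the share was computed over a different base than all 51 respondents, but this is an assumption, not something stated in the thread.

```python
# Back-of-the-envelope check of figures quoted in this thread.
invited = 92     # experts the survey was sent to (from the abstract)
responses = 51   # responses received (from the abstract)

response_rate = responses / invited
print(f"response rate: {response_rate:.1%}")  # prints "response rate: 55.4%"

lab_share = 0.439  # share of respondents from AGI labs, as quoted above
print(f"implied lab respondents: {lab_share * responses:.1f}")  # prints 22.4

# Whole-number counts out of 51 nearest to 43.9%; neither rounds to 43.9%,
# so the quoted share may use a different denominator (assumption only).
for k in (22, 23):
    print(f"{k}/{responses} = {k / responses:.1%}")  # 43.1%, then 45.1%
```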