AGI rising: why we are in a new era of acute risk and increasing public awareness, and what to do now

By Greg_Colbourn ⏸️ @ 2023-05-02T10:17 (+70)

[Added 13Jun: Submitted to OpenPhil AI Worldviews Contest - this pdf version most up to date]

Content note: discussion of a near-term, potentially hopeless[1] life-and-death situation that affects everyone.

Tldr: AGI is basically here. Alignment is nowhere near ready. We may only have a matter of months to get a lid on this (strictly enforced global limits to compute and data) in order to stand a strong chance of survival. This post is unapologetically alarmist because the situation is highly alarming.[2] Please help. Fill out this form to get involved. Here is a list of practical steps you can take.

We are in a new era of acute risk from AGI

Artificial General Intelligence (AGI) is now in its ascendency. GPT-4 is already ~human-level at language and showing sparks of AGI. Large multimodal models – text-, image-, audio-, video-, VR/games-, robotics-manipulation by a single AI – will arrive very soon (from Google DeepMind[3]) and will be ~human-level at many things: physical as well as mental tasks; blue collar jobs in addition to white collar jobs. It’s looking highly likely that the current paradigm of AI architecture (foundation models) basically just scales all the way to AGI. These things are “General Cognition Engines”[4].

All that is stopping them being even more powerful is spending on compute. Google & Microsoft are worth $1-2T each, and $10B can buy ~100x the compute used for GPT-4. Think about this: it means we are already well into hardware overhang territory[5].

Here is a warning written two months ago by people working at applied AI Alignment lab Conjecture: “we are now in the end-game for AGI, and we (humans) are losing”. Things are now worse. It’s looking like GPT-4 will be used to meaningfully speed up AI research, finding more efficient architectures and therefore reducing the cost of training more sophisticated models[6].

And then there is the reckless fervour[7] of plugin development to make proto-AGI systems more capable and agent-like to contend with. In very short succession from GPT-4, OpenAI announced the ChatGPT plugin store, and there has been great enthusiasm for AutoGPT. Adding Planners to LLMs (known as LLM+P) seems like a good recipe for turning them into agents. One way of looking at this is that the planners and plugins act as the System 2 to the underlying System 1 of the general cognitive engine (LLM). And here we have agentic AGI. There may not be any secret sauce left.

Given the scaling of capabilities observed so far for the progression of GPT-2 to GPT-3 to GPT3.5 to GPT-4, the next generation of AI could well end up superhuman. I think most people here are aware of the dangers: we have no idea how to reliably control superhuman AI or make it value-aligned (enough to prevent catastrophic outcomes from its existence). The expected outcome from the advent of AGI is doom[8]. This is in large part because AI Alignment research has been completely outpaced by AI capabilities research and is now years behind where it needs to be.

To allow Alignment time to catch up, we need a global moratorium on AGI, now.[9]

A short argument for uncontrollable superintelligent AI happening soon (without urgent regulation of big AI):

This is a recipe for humans extincting themselves that appears to be playing out along the mainline of future timelines:

- Either of

- GPT-4 + curious (but ultimately reckless) academics -> more efficient AI -> next generation foundation model AI (which I’ll call NextAI[10] for short); Or

- Google DeepMind just builds NextAI (they are probably training it already)

- NextAI + planners + AutoGPT + plugins + further algorithmic advancements + gung ho humans (e/acc etc) = NextAI2[11] in short order. Weeks even. Access to compute for training is not a bottleneck because that cyborg system (humans and machines working together) could easily hack their way to massive amounts of compute access, or just fundraise enough (cf. crypto projects). Access to data for training is not a bottleneck; there are large existing online repositories, and if that’s not enough, hacks.[12]

- NextAI2 -> NextAI3 in days? NextAI3 -> NextAI4 in hours? Etc (at this point it's just the machines steering further development). Alignment is not magically solved in time.

Humanity loses control of the future. You die. I die. Our loved ones die. Humanity dies. All sentient life dies.[13]

Common objections to this narrative are that there won’t be enough compute, or data, for this to happen. These don’t hold water after a cursory examination of our situation. Compute-wise, we're at ~10^18 FLOPS for large clusters at the moment[14], but there are likely enough GPUs available for 100 times this[15]. And the cost of this (~$10B) is affordable for many large tech companies and national governments, and even individuals![16]
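The compute estimate above can be checked with a few lines of arithmetic. This is only a sketch using the rough figures quoted in this post (~10^25 FLOP and ~10^7 seconds of training, a 100x scale-up, ~$10B); the variable names are illustrative, and the per-run cost is merely what those figures imply, not a reported number.

```python
import math

# Rough order-of-magnitude figures quoted in the post; none are measured values.
TRAINING_FLOP = 1e25       # total FLOP assumed for a GPT-4-scale training run
TRAINING_SECONDS = 1e7     # roughly 4 months of wall-clock training
SCALE_UP = 100             # "100 times this" worth of available GPUs
SCALE_UP_COST_USD = 10e9   # ~$10B for the scaled-up cluster


def sustained_flops(total_flop: float, seconds: float) -> float:
    """Average throughput (FLOP per second) needed to finish the run in `seconds`."""
    return total_flop / seconds


cluster_flops = sustained_flops(TRAINING_FLOP, TRAINING_SECONDS)
scaled_flops = cluster_flops * SCALE_UP
# Implied by "$10B buys ~100x": an assumption derived from the post, not a quoted price.
implied_cost_per_run = SCALE_UP_COST_USD / SCALE_UP

print(f"current large cluster: ~10^{round(math.log10(cluster_flops))} FLOPS")
print(f"100x cluster:          ~10^{round(math.log10(scaled_flops))} FLOPS")
print(f"implied cost of one GPT-4-scale run: ~${implied_cost_per_run / 1e6:.0f}M")
```

Stretching the training time to 7 months (~1.8 x 10^7 seconds) lowers the first figure by less than a factor of two, so the order of magnitude is unchanged.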

Data is not a show-stopper either. Sure, ~all the text on the internet might’ve already been digested, but Google could readily record more words per day via phone mics[17] than the number used to train GPT-4[18]. These may or may not be as high quality as text, but 1000x as many as all the text on the internet could be gathered within months[19]. Then there are all the billions of high-res video cameras (phones and CCTV), and sensors in the world[20]. And if that is not enough, there is already a fast-growing synthetic data industry serving the ML community’s ever growing thirst for data to train their models on.
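The data estimate can be reproduced the same way. The sketch below uses the phone count, words per day and 100-day window from the footnotes; the GPT-4 training-set size is not stated directly in this post and is only implied by the "~1000x" comparison, so the 1 trillion words used here is an assumption.

```python
# Figures from the post's footnotes, except where marked as an assumption.
PHONES = 3_000_000_000            # Android phones
WORDS_PER_PHONE_PER_DAY = 3_300   # "a conservative estimate"
DAYS = 100
GPT4_TRAINING_WORDS = 10**12      # assumption: ~1 trillion words, implied by "~1000x"

words_per_day = PHONES * WORDS_PER_PHONE_PER_DAY
words_collected = words_per_day * DAYS
ratio = words_collected / GPT4_TRAINING_WORDS

print(f"{words_per_day:,} words per day")
print(f"{words_collected:,} words in {DAYS} days")
print(f"~{ratio:.0f}x the assumed GPT-4 training set")
```

The exact product is 9.9 trillion words per day, which the post rounds to 10 trillion; the 100-day total is then just under 1 quadrillion.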

Another objection I’ve seen raised is related to the complexity of our physical environment. A high degree of error correction will be required for takeover of the physical world to happen. And many iterations of physical design will be required to get there. This is clearly not insurmountable, given the evidence we have of humans being able to do it. But can it happen quickly? Yes. There are many ways that AI can easily break out into the physical world, involving hacking networked machinery and weapons systems, social persuasion, robotics, and biotech.

Some notes on the nature of the threat. The AI that will soon pose an existential risk is:

- A general cognition engine (see above); an Artificial General Intelligence able to do any task a human can do, plus a lot more that humans can’t.

- Fast: computers process information much faster than human brains do; and they are not limited to needing to fit inside a skull. AGI has the potential to rapidly scale such that it is “thinking”[21] at speeds that will make us look like geology (rocks). As such, it’ll be able to run untold rings around us.

- Alien: GPT-4 produces text output that is indistinguishable from a human. But don’t be fooled; it is merely very good at imitating humans. Underneath the hood, its “mind” is very alien: a giant pile of linear algebra we share no evolutionary history with. And remember that consciousness doesn’t need to come into it. It’s perhaps better thought of as a new force of nature unleashed. A supremely capable series of optimisation processes that are essentially just insentient colossal piles of linear algebra, made physical in silicon; yet so powerful as to be able to completely outsmart the whole of humanity combined. Or: a super-powered computer virus/worm ecology that gets into everything, including ultimately, the physical world. It’s impossible to get rid of, and eventually consumes everything.

- Capable of world model building. Yes, to be as good a “stochastic parrot” as current foundation models are requires (spontaneous, emergent) internal world model building in amongst the inscrutable spaghetti of network weights.

- Situationally aware. Such world models will (at sufficient capability) include models of the context that the AI is in, as an artificial neural network running on computer hardware, built by agents known as humans.[22]

- Capable of goal mis-generalisation[23] (mesa-optimisation). Yes, this is no longer a mere theoretical concern.

The default outcome of AGI is doom

If you apply a security mindset (Murphy’s Law) to the problem of AI alignment, it should quickly become apparent that it is very difficult. There are multiple components to alignment, and associated threat models. We are nowhere close to 100% aligned, 0 error rate AGI. Most prominent alignment researchers have uncomfortably high estimates for P(doom|AGI). Yet many thought leaders in EA, despite taking AI x-risk seriously, have inexplicably low estimates for P(doom|AGI). I explore this further in a separate post, but suffice to say that the conclusion is that the default outcome of AGI is doom. The public framing of the problem of AGI x-risk needs to shift to reflect this.

50% ≤ P(doom|AGI) < 100% means that we can confidently make the case for the AI capabilities race being a suicide race.[24] It then becomes a personal issue of life-and-death for those pushing forward the technology. Perhaps Sam Altman or Demis Hassabis really are okay with gambling 800M lives in expectation on a 90% chance of utopia from AGI? (Despite a distinct lack of democratic mandate.) But are they okay with such a gamble when the odds are reversed? Taking the bet when it’s 90%[25] chance of doom is not only highly morally problematic, but also, frankly, suicidal and deranged.[26]
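The "800M lives in expectation" figure is a simple expected-value calculation. As a minimal sketch, assuming a world population of about 8 billion (not stated in this post) and that "doom" means everyone dies, the same calculation at the other probabilities discussed here looks like this; the function name is illustrative.

```python
WORLD_POPULATION = 8_000_000_000  # assumption: roughly 8 billion people


def expected_deaths(p_doom_percent: int, population: int = WORLD_POPULATION) -> int:
    """Expected loss of life if doom (everyone dies) has the given percent probability."""
    return population * p_doom_percent // 100


# 10% doom corresponds to the "90% chance of utopia" gamble; 90% is the reversed odds.
for p in (10, 50, 90):
    print(f"P(doom|AGI) = {p}% -> {expected_deaths(p):,} lives lost in expectation")
```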

Even if, after all of the above, you think foom and/or superintelligence and/or extinction are unlikely, you should still be concerned about global catastrophic risk[27] enough to want urgent action on this.[28] Even AI systems a generation or two (months) away (NextAI or NextAI2 above) could be able to wrest enough power from the hands of humanity that we basically lose control of the future, thanks to actors like Palantir[29] who seem eager to place AI into more and more critical domains. A moratorium should also rein in any attempts towards inserting AI/ML systems into critical systems such as offensive weapons, nuclear power plants, WMDs, major and critical powerplants and electric subsystems, and cybersecurity domains. Let’s Pause AI. Shut it Down. Do whatever it takes to avert catastrophe.

So: how much should we be betting (in expected loss of life[30]) that the first step of the recipe written out above doesn’t happen?

This is an unprecedented[31] global emergency. Global moratorium on AGI, now.

Increasing public awareness

Increasing public awareness is both a phenomenon that is happening, and something we need to do much more of in order to avert catastrophe. Media about AI risk has been steadily ramping up. But the new era in public communication and advocacy started with FLI’s Pause letter[32]. Yudkowsky in short order then knocked it out of the park with his “Shut it Down” Time article:

Many researchers steeped in these issues, including myself, expect that the most likely result of building a superhumanly smart AI, under anything remotely like the current circumstances, is that literally everyone on Earth will die.

…

We are not ready. We are not on track to be significantly readier in the foreseeable future. If we go ahead on this everyone will die, including children who did not choose this and did not do anything wrong.

Shut it down.

To me this was a watershed moment. A lot of pent up emotion was released (I cried). It was finally okay to just say, in public, what you really thought and felt about extinction risk from AI. Forget fear of looking alarmist, there is an asteroid heading directly for Earth. Yes, this is a metaphor, but a very useful one. The danger level is the same - we are facing total extinction with high probability. Max Tegmark’s Time article draws out this analogy to great effect. The situation we are in is really quite analogous to the film Don’t Look Up. Spoiler: that film does not have a happy ending. How do we get one with AI in real life? We need to avoid the failure modes that are illustrated in the film for one. We need to get media personalities and world leaders to take onboard the gravity of the situation: this is a suicide race that no one can win. A race over the edge of a cliff, only all competitors are tied together. If one gets to the finish line – over the cliff edge – then we all get dragged down into the abyss with them.

Yudkowsky and other top alignment researchers have been going on popular podcasts, explaining the predicament in detail. This is great!

The need for global coordination and regulation is being discussed.[33]

There is also a growing “AI Notkilleveryoneism” movement on Twitter. I like the energy, humour and fast pace, as contrasted to the slower, more serious and deliberative tone typical of the EA Forum[34]; seems more appropriate given the circumstances.

AI researchers and enthusiasts (those with an allegiance or commitment to the field, who believe in the inevitability of AGI) are, generally[35], more sceptical of, and resistant to, discussion of slowdown and regulation. If your bottleneck to (further) public communication stems from frustration with bad AI x-risk takes from tech people who should know better, consider this:

“It made sense to expect that if it’s this hard to explain to a fellow computer enthusiast, then there’s no hope of reaching the average person. For a long time I avoided talking about it with my non-tech friends… for that reason. However, when I finally did, it felt like the breath of life. My hopelessness broke, because they instantly vigorously agreed, even finishing some of my arguments for me. Every single AI safety enthusiast I’ve spoken with who has engaged with civilians has had the exact same experience. I think it would be very healthy for anyone who is still pessimistic about convincing people to just try talking to one non-tech person in their life about this. It’s an instant shot of hope.”

OpenAI’s stated mission is to build (“safe and beneficial”) “highly autonomous systems that outperform humans at most economically valuable work” (AGI). To most of the public this sounds dystopian, rather than utopian[36].

I think EA/LW will be eclipsed by a mainstream public movement on this soon. The fire alarm is being pressed[37] now, whether or not[38] EA/LW leadership are on board. We can’t wait for further proof[39] of danger. We must act.

Encouragingly, things are already starting to move. Since I started writing this post in earnest (3 days ago), Geoffrey Hinton has publicly resigned from Google to warn of the dangers of AI, and senior MPs in the UK Parliament have called for a summit to prevent AI from having a “disastrous” impact on humanity. Let’s build on this momentum!

What we need to happen to get out of the acute risk period

Existing approaches such as industry self-regulation and Evals are not sufficient. And sufficient regulation of hardware is likely a whole lot easier than sufficient regulation of software. To be safe from extinction we need:

- A global Pause on AGI development and training of new models larger than GPT-4 (Microsoft OpenAI and Google DeepMind need to be in the vanguard of this; but a global agreement is needed). Possibly even a roll-back to GPT-3 level AI.

- Strongly enforced regulation on limits to compute and data to prevent AI systems that are more powerful than GPT-3 until they can be 100% aligned (i.e. 0 undesirable prompt engineering is possible)[40].

- A massive global public outcry calling for these things: 100M+ people, from all around the world.[41]

These are big asks. Should we aim for the stars and hope to land on the moon? Yes. Although consider that we may actually have to reach the stars to survive[42].

The wider concerns about nearer term impacts from AI - bias, job losses, data privacy and copyright infringement (AI Ethics) - are also leading to similar[43] calls in terms of regulating compute and data. We need to ally with these campaigns (we’re coming at the issue from different angles, but the things we are calling for to happen are broadly the same; we can converge on a concrete set of asks that will address all issues).

A taboo around AGI could also do a lot of work in terms of reducing the need for enforcement. There aren’t lots of rabid bioscience accelerationists constantly planning underground human cloning and genetic engineering labs; any huge economic potential that technology might’ve had has been destroyed by a global[44] taboo.

Ultimately, to succeed, we need to get the UN Security Council on board: this is more serious and urgent than nuclear proliferation. But it is just as tractable: there aren’t many relevant large computer chip manufacturers or big data centre owners. GPUs and TPUs need to be treated like Uranium, and large clusters of them like enriched Uranium (we can’t afford to have too large a cluster lest they reach criticality and cause a catastrophic intelligence explosion).

But time is short, and the window of opportunity to act before we cross a point of no return is narrow. This is the crisis of all crises.[45] We need to get governments to treat this as an emergency in the same way they did with Covid[46]: we need an emergency lockdown of the global AI industry.

We know from Covid that such “phase changes” in global public opinion are possible. This time it is more difficult however; we need such a monumental shift without there being an increasing body count first. If we wait for that, we run far too great a risk of crossing a point of no return where, no matter how fast the response, we still lose. Global moratorium on AGI, now.

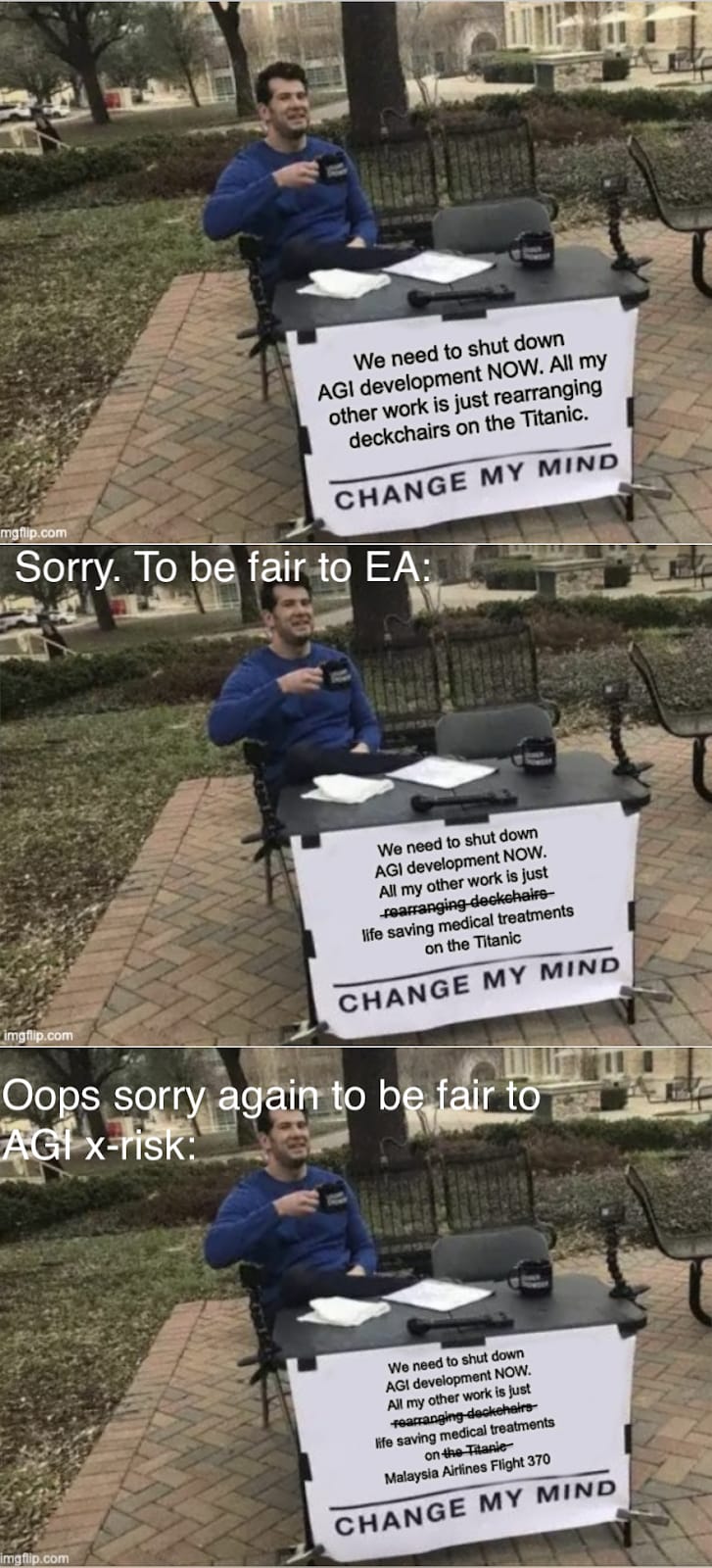

(You can also see this meme on Dank EA Memes and Twitter)

What you can and perhaps should do now

Come with me if you want to li..[47] Drop everything else you are doing until this is fixed![48] You may be feeling a bit discombobulated and/or sick with worry at this point. So here are some concrete actions you can take:[49]

- Fill out this form if you are interested in getting involved.

- Join the AGI-Moratorium-HQ Slack to coordinate with others already working in the space.

- Learn more about the basics of AGI x-risk and safety (the linked AGISF course is ~20-40 hours reading).[50]

- Be there to answer people’s questions (there are a lot).

- Talk to people about this - friends, family, neighbours, coworkers.

- Advocate for political action: we need a Pause on AGI (possibly even a rollback) asap if we are going to get through the acute risk period. The time for talking politely to (and working with) big AI is over. It has failed. They themselves are crying out to be regulated.

- Post about this on Twitter and other social media (TikTok, YouTube, Instagram, Facebook etc)[51];

- Join the AI Notkilleveryoneism Twitter Community.

- Make and share memes[52] (example[53]);

- Write and share articles[54] (example);

- Organise and share petitions (example); fund advertising for them;

- Send letters to newspapers and magazines;

- Write to your political representatives;

- Lobby politicians/industry. Talk to any relevant contacts you might have, the higher up, the better;

- Ask politicians to invite (or subpoena) AI lab leaders to parliamentary/congressional hearings to give their predictions and timelines of AI disasters;

- Make submissions to government requests for comment on AI policy (example);

- Help draft policy (some frameworks).

- Organise/join demonstrations.

- Consider civil disobedience / direct action.

- Consider ballot initiatives or referendums if they are achievable in your state or country

- Join organisations and groups working on this.

- Ask the management at your current organisation to take an institutional position on this.

- Coordinate with other groups concerned with AI who are also pushing for regulation.

- Donate to advocacy orgs (there is already Campaign for AI Safety, and more are spinning up).

- If you are earning or investing to give, seriously consider joining me[55] in liquidating a significant fraction of your assets to push this forward.

- [Added 4May (H/T Otto)] If you are technical, work on AI Pause regulation proposals! There is basically one paper now[56], possibly because everyone else thought a Pause was too far outside the Overton Window. Now we're discussing a Pause, we need to have fleshed out AI Pause regulation proposals.

- [Added 4May (H/T Otto)] Start institutes or projects that aim to inform the societal debate about AGI x-risk. The Existential Risk Observatory is setting a great example in the Netherlands. Others could do the same thing. (Funders should be able to choose from a range of AI x-risk communication projects to spend their money most effectively. This is currently really not the case.)

If you are just starting out in AI Alignment, unless you are a genius and/or have had significant new flashes of insight on the problem, consider switching to advocacy for the Pause. Without the Pause in place first, there just isn’t time to spin up a career in Alignment to the point of making useful contributions.

If you are already established in Alignment, consider more public communication, and adding your name to calls for the Pause and regulation of the AI industry.

Be bold in your public communication of the danger. Don’t use hedging language or caveats by default; mention them when questioned, or in footnotes, but don’t make it sound like you aren’t that concerned if you are.

Be less exacting in your work. 80/20 more. Don’t do the classic EA/LW thing and spend months agonising and iterating on your Google Doc over endless rounds of feedback. Get your project out into the world and iterate as you go.[57] Time is of the essence.

But still consider downside risk: we want to act urgently but also carefully. Keep in mind that a lot of efforts to reduce AI x-risk have already backfired; alignment researchers have accidentally[58] contributed to capabilities research, and many AI governance proposals are in danger of falling prey to industry capture.

If you are doing other EA stuff, my feeling on this is: let’s go all out to get a (strongly enforced) Pause in place[59], and then relax a little and go back to what we were doing before.[60] Right now I feel like all my other work is just rearranging deckchairs on the Titanic[61]. We need to be running to the bridge, grabbing the wheel, and steering away from the iceberg[62]. We may not have much time, but by Good we can try. C’mon EA, we can do this!

Acknowledgements: For helpful comments and suggestions that have improved the post, and for the encouragement to write, I thank AW, Johan de Kock, Jaeson Booker, Greg Kiss, Peter S. Park, Nik Samolyov, Yanni Kyriacos, Chris Leong, Alex M, Amritanshu Prasad, Dušan D. Nešić, and the rest of the AGI Moratorium HQ Slack and AI Notkilleveryoneism Twitter. All remaining shortcomings are my own.

- ^

Please note here that I’m not a fan of the “Death With Dignity” vibe. I hope the more pragmatically motivated and hopeful vibe I’m trying to present here comes across in this post (at least at the end).

- ^

If your first reaction to this is that it’s obviously wrong, I invite you to reply detailing your reasoning. See also footnote 4 of the accompanying P(doom|AGI) is high post, which mentions a bounty of $1000.

- ^

Note there is already the precedent of GATO.

- ^

Perhaps if they were actually called this, rather than misnomered as Large Language Models, then people would be taking this more seriously!

- ^

Microsoft’s R&D spend was $25B last year. And the US government can afford to train a model 1000x larger than GPT-4. (And no, we probably don’t need to be worried about China).

- ^

There is the caveat in the referenced paper of "Nevertheless, the fact that we cannot rule out [data] contamination represents a significant caveat to our findings." - never have I more hoped for something to be true!

- ^

We have already blasted past the conventional wisdom of:

“☐ Don’t teach it to code: this facilitates recursive self-improvement

☐ Don’t connect it to the internet: let it learn only the minimum needed to help us, not how to manipulate us or gain power

☐ Don’t give it a public API: prevent nefarious actors from using it within their code

☐ Don’t start an arms race: this incentivizes everyone to prioritize development speed over safety.”

“Industry has collectively proven itself incapable to self-regulate, by violating all of these rules.” (Tegmark, 2023).

- ^

See next section: The default outcome of AGI is doom.

- ^

You may be thinking: “no; what we need is a ‘Manhattan Project’ on Alignment!” Well, we need that as well as a Pause, not in lieu of one. It’s too late for alignment to be solved in time now, even with the Avengers working on it. This was last year’s best plan. And I tried (to persuade the people who could do it, to do it. But failed.) This year’s best plan is to prioritise the Pause.

- ^

NeXt is also the name of an AI in the 2020 TV series Next, which depicts a takeover scenario involving an “Alexa” type AI that becomes superhumanly intelligent (well worth a watch imo).

- ^

The generation after next of foundation model AI. Following generations are labelled NextAI3, NextAI4 etc.

- ^

- ^

It’s unlikely that the runaway AI that we’re on a path to create will be sentient. There is some speculation that recurrent neural networks of sufficient complexity could be, but that is far from established, and is not the trajectory we are on.

- ^

4-7 months training time ~10^7 seconds; ~10^25 FLOP used to train; -> 10^18 FLOPS.

- ^

Nvidia reported $3.6B annualised in AI chip sales in Q4 2022, and Nvidia CEO Jensen Huang estimated in Feb 2023 that “Now you could build something like a large language model, like a GPT, for something like $10, $20 million”.

- ^

If you don't think this is enough to pose a danger, Moore's Law and associated other trends are still in effect (despite some evidence for a slowdown). And we are nowhere near the physical limits to computation (the theoretical limit set by physics is, to a first approximation, as many orders of magnitude above current infrastructure as current infrastructure is above the Babbage engine).

- ^

3B Android phones (how many people would bother to opt out if it was part of the standard Terms & Conditions?), recording 3,300 words/day (a conservative estimate) each, would be 10 trillion words per day.

- ^

It’s even possible they are doing this already, given the precedent OpenAI has set around disregarding data privacy. This would be in keeping with the big tech attitude of breaking the rules and taking the financial/legal hit after (that is usually much less than the profits gained from the transgression). See also: the NSA/Five Eyes.

- ^

100 days of recording at the rate calculated in the above footnote would be 1 quadrillion words, ~1000x what GPT-4 was trained on.

- ^

Accessing these may not be as easy as with phone mics, but that doesn’t mean that a determined AGI+human group couldn’t hack their way to significant levels of data harvesting from them via fear or favour.

- ^

Placed in inverted commas because it’s better to think of it as an unconscious digital alien (see next point).

- ^

In Terminator 2, Skynet becomes “self-aware”; I think using the term “situationally aware” instead would’ve added more realism. Maybe it’s time for a remake of T2. (Then again, is there time enough left for that?)

- ^

See also The Alignment Problem from a Deep Learning Perspective, p4.

- ^

Re doom “only” being 50%: "it's not suicide, it's a coin flip; heads utopia, tails you're doomed" seems like quibbling at this point. [Regarding the word you're in "you're doomed" in the above - I used that instead of "we're doomed", because when CEOs hear "we're", they're probably often thinking "not we're, you're. I'll be alright in my secure compound in NZ". But they really won't! Do they think that if the shit hits the fan with this and there are survivors, there won't be the vast majority of the survivors wanting justice?]

- ^

This is my approximate estimate for P(doom|AGI). And I’ll note here that the remaining 10% for “we’re fine” is nearly all exotic exogenous factors (related to the simulation hypothesis, moral realism being true - valence realism?, consciousness, DMT aliens being real etc), that I really don't think we can rely on to save us!

- ^

You might be thinking, “but they are trapped in an inadequate equilibrium of racing”. Yes, but remember it’s a suicide race! At some point someone really high up in an AI company needs to turn their steering wheel. They say they are worried, but actions speak louder than words. Take the financial/legal/reputational hit and just quit! Make a big public show of it. Pull us back from the brink.

Update: 3 days after I wrote the above, Geoffrey Hinton, the “Godfather of AI”, has left Google to warn of the dangers of AI.

- ^

As an intuition pump, imagine a million John von Neumann-level smart alien hackers each operating at a million times faster than a human, let loose on the internet, and the chaos that that would cause to global infrastructure.

- ^

- ^

The demo in the linked video looks awfully like a precursor to Skynet…

- ^

And there is also potential for astronomical levels of future suffering.

- ^

There was the case of the Manhattan Project scientists worrying about the first nuclear bomb test becoming a self-sustaining chain reaction and burning off the whole of our planet's atmosphere. But in that case, the richest companies in the world weren’t racing toward exploding a nuke before the scientists could get the calculations done to prove the tech was safe from this failure mode.

- ^

I’m not sure why my signature still hasn’t been processed, but I signed it on the first day!

- ^

- ^

Although maybe this is changing a bit too.

- ^

Via financial incentive and social conditioning.

- ^

And whilst the leaders of big AI companies (OpenAI, DeepMind, Anthropic) have expressed the desire to share the massive future wealth gained from AI in a globally equitable manner, the details of how such a Windfall will be managed have yet to be worked out.

- ^

We press our fire alarms in the UK and Europe (and Australia/NZ). They are being pulled in the US too though! Are they being pressed/pulled on other continents too? Please comment if you know of examples!

- ^

The linked post was ahead of its time. The fact that it was redacted is sad to see now (especially the crossed out parts).

- ^

It’s unreasonable to want empirical evidence for existential risk; you can’t collect data when you’re dead!

- ^

- ^

Including from the most populous countries, most of which have not had any meaningful say over the development of the AI technology that is likely to destroy them (e.g. India, Indonesia, Pakistan, Nigeria, Brazil, Bangladesh, Mexico).

- ^

This makes me think of the film Interstellar. Not sure if there is a useful metaphor to draw from it, but the film depicts an intense struggle for human survival; maybe it’s memeable material (this scene is a classic).

- ^

The linked article, controversially titled We Must Declare Jihad Against A.I. – although note that Jihad in this case is referring to Butlerian Jihad, the concept from the fictional Dune universe - a war on “thinking machines” – contains the quotes: “Policymakers should consider going several steps further than the moratorium idea proposed in the recent open letter, toward a broader indefinite AI ban”, “The prevention of an AGI would be an explicit objective of this regulatory regime.”, and “a basic rule may be: The more generalized, generative, open-ended, and human-like the AI, the less permissible it ought to be—a distinction very much in the spirit of the Butlerian Jihad, which might take the form of a peaceful, pre-emptive reformation, rather than a violent uprising.”

- ^

Yes, even China imprisons people for this.

- ^

It’s funny to think back just 6 months, and how the FTX crisis seemed all enveloping, an existential crisis for EA. This seems positively quaint now. Oh to go back to those days of mere ordinary crisis.

- ^

- ^

- ^

I’m joking and using memes here as a coping mechanism: I’m actually really fucking scared.

- ^

Being proactive about this has certainly helped my mental health, but ymmv.

- ^

The first few suggestions may seem a little trite in light of all the above. But you’ve got to start somewhere. And I know that EAs can be good at scaling projects up rapidly when they are driven to do so.

- ^

I separate out Twitter here as it is arguably the biggest driver of global opinion.

- ^

You may think that this isn’t important, but memes are effective drivers of culture.

- ^

You can reply to OpenAI and Google DeepMind executives’ tweets with this one.

- ^

Someone should also create and populate "Global AGI Moratorium" tags on the EA Forum and LessWrong.

- ^

For those not in the Highly Speculative EA Capital Accumulation Facebook group, the post reads: “There's no point in trying to become a billionaire by 2030 if the world ends before then.

I'm concerned enough about AGI x-risk now, in this new post-GPT-4+plugins+AutoGPT era, that I intend to liquidate a significant fraction of my net worth over the next year or two, in a desperate attempt to help get a global moratorium on AGI (/Pause) in place. Without this breathing space to allow for alignment to catch up, I think we're basically done for in the next 2-5 years.

Have been posting a lot on Twitter. For the next year or two I'm an "AI Notkilleveryoneist" above being an EA. I hope we can get back to EA business as usual soon (and I can relax and enjoy my retirement a bit), but this is an unprecedented global emergency that needs resolving. Is anyone else here thinking the same way?”

- ^

Shavit's paper is great and the ideas in it should be further developed as a matter of priority.

- ^

I wrote this post in ~2 days (although I developed a lot of the points on Twitter over the preceding couple of weeks) and had one round of feedback over ~2 days.

- ^

Sam Altman is being mean to Yudkowsky here, but maybe he has a point. OpenAI and Anthropic were both founded over concern for AI Alignment, but have now, I think, become two of the main drivers of AGI x-risk.

- ^

If your bottleneck for doing this is money, talk to me.

- ^

Maybe I can even get to enjoy my retirement a bit. One can hope.

- ^

To be fair to EA and x-risk, it’s actually more like life saving medical treatments on Malaysia Airlines Flight 370 (as per my meme above).

- ^

Or (re the above footnoted reference), running to the cockpit, grabbing the controls, and steering away from the ocean abyss.

Magnus Vinding @ 2023-05-02T17:19 (+31)

To push back a bit on the fast software-driven takeoff (i.e. a fast takeoff driven primarily by innovations in software):

Common objections to this narrative [of a fast software-driven takeoff] are that there won’t be enough compute, or data, for this to happen. These don’t hold water after a cursory examination of our situation. We are nowhere near close to the physical limits to computation ...

While we're nowhere near the physical limits to computation, it's still true that hardware progress has slowed down considerably on various measures. I think the steelman of the compute-based argument against a fast software-driven takeoff is not that the ultimate limits to computation are near, but rather that the pace of hardware progress is unlikely to be explosively fast (e.g. in light of recent trends that arguably point in the opposite direction, and because software progress per se seems insufficient for driving explosive hardware progress).

We're on ~10^18 FLOPS for large clusters at the moment[14], but there are likely enough GPUs available for 100 times this[15]. And the cost of this – ~$10B - is affordable for many large tech companies and national governments, and even individuals!

That actors can afford to create this next generation of AIs does not imply that those AIs will in turn lead to a hard takeoff in capabilities. From my perspective at least, that seems like an unargued assumption here.

Data is not a show-stopper either. Sure, ~all the text on the internet might’ve already been digested, but Google could readily record more words per day via phone mics[16] than the number used to train GPT-4[17]. These may or may not be as high quality as text, but 1000x as many as all the text on the internet could be gathered within months[18]. Then there are all the billions of high-res video cameras (phones and CCTV), and sensors in the world[19]. And if that is not enough, there is already a fast-growing synthetic data industry serving the ML community’s ever growing thirst for data to train their models on.

A key question is whether this extra data would be all that valuable to the main tasks of concern. For example, it seems unclear whether low-quality data from phone conversations, video cameras, etc. would give that much of a boost to a model's ability to write code. So I don't think the point made above, as it stands, is a strong rebuttal to the claim that data will soon be a limiting bottleneck to significant capability gains. (Some related posts.)

The time for talking politely to (and working with) big AI is over.

This is another claim I would push back against. For instance, from a perspective concerned with the reduction of s-risks, one could argue that talking politely to, and working with, leading AI companies is in fact the most responsible thing to do, and that taking a less cooperative stance is unduly risky and irresponsible. To be clear, I'm not saying that this is obviously the case, but I'm trying to say that it's not clear-cut either way. Good arguments can be made for a different approach, and this seems true for a wide range of altruistic values.

Tomas B. @ 2023-05-02T20:41 (+12)

Current scaling "laws" are not laws of nature. And there are already worrying signs that things like dataset optimization/pruning, curriculum learning and synthetic data might well break them. It seems likely to me that LLMs will be useful in all three. I would still be worried even if LLMs prove useless in enhancing architecture search.

Jakub Kraus @ 2023-05-16T22:45 (+3)

Current scaling "laws" are not laws of nature. And there are already worrying signs that things like dataset optimization/pruning, curriculum learning and synthetic data might well break them

Interesting -- can you provide some citations?

PeterSlattery @ 2023-05-03T04:32 (+5)

Thanks for writing this - it was useful to read the pushbacks!

As I said below, I want more synthesis of these sorts of arguments. I know that some academic groups are preparing literature reviews of the key arguments for and against AGI risk.

I really think that we should be doing that for ourselves as a community and to make sure that we are able to present busy smart people with more compelling content than a range of arguments spread across many different forum posts.

I don't think that that is going to cut it for many people in the policy space.

Greg_Colbourn @ 2023-05-03T10:21 (+5)

Agree. But at the same time, we need to do this fast! The typical academic paper review cycle is far too slow for this. We probably need groups like SAGE (and Independent SAGE?) to step in. In fact, I'll try and get hold of them... (they are for "emergencies" in general, not just Covid[1])

- ^

Although it looks like they are highly specialised on viral threats. They would need totally new teams to be formed for AI. Maybe Hinton should chair?

Greg_Colbourn @ 2023-05-03T10:17 (+4)

hardware progress has slowed down considerably on various measures

I don't think this matters, as per the next point about there already being enough compute for doom [Edit: I've relegated the "nowhere near close to the physical limits to computation" sentence to a footnote and added Magnus' reference on slowdown to it].

That actors can afford to create this next generation of AIs does not imply that those AIs will in turn lead to a hard takeoff in capabilities. From my perspective at least, that seems like an unargued assumption here.

I think the burden of proof here needs to shift to those willing to gamble on the safety of 100x larger systems. All I'm really saying here is that the risk is way too high for comfort (given the jumps in capabilities we've seen so far going from GPT-3 -> GPT-3.5 -> GPT-4).

[Meta: would appreciate separate points being made in separate comments].

Will look into your links re data and respond later.

from a perspective concerned with the reduction of s-risks, one could argue that talking politely to, and working with, leading AI companies is in fact the most responsible thing to do, and that taking a less cooperative stance is unduly risky and irresponsible.

I'm not sure what you are saying here? Do you think there is a risk of AI companies deliberately causing s-risks (e.g. releasing a basilisk) if we don't play nice!? They may be crazy in a sense of being reckless with the fate of billions of people's lives, but I don't think they are that crazy (in a sense of being sadistically malicious and spiteful toward their opponents)!

Magnus Vinding @ 2023-05-03T13:39 (+6)

I'm not sure what you are saying here? Do you think there is a risk of AI companies deliberately causing s-risks (e.g. releasing a basilisk) if we don't play nice!?

No, I didn't mean anything like that (although such crazy unlikely risks might also be marginally better reduced through cooperation with these actors). I was simply suggesting that cooperation could be a more effective way to reduce risks of worst-case outcomes that might occur in the absence of cooperative work to prevent them, i.e. work of the directional kind gestured at in my other comment (e.g. because ensuring the inclusion of certain measures to avoid worst-case outcomes has higher EV than does work to slow down AI). Again, I'm not saying that this is definitely the case, but it could well be. It's fairly unclear, in my view.

Greg_Colbourn @ 2023-05-03T14:26 (+5)

Ok. I don't put much weight on s-risks being a likely outcome. Far more likely seems to be just that the solar system (and beyond) will be arranged in some (to us) arbitrary way, and all carbon-based life will be lost as collateral damage.

Although I guess if you are looking a bit nearer term, then s-risk from misuse could be quite high. But I don't think any of the major players (OpenAI, DeepMind, Anthropic) are even really working on trying to prevent misuse at all as part of their strategy (their core AI Alignment work is on aligning the AIs, rather than the humans using them!). So actually, this is just another reason to shut it all down.

Magnus Vinding @ 2023-05-03T13:38 (+3)

Thanks for your reply, Greg :)

I don't think this matters, as per the next point about there already being enough compute for doom

That is what I did not find adequately justified or argued for in the post.

I think the burden of proof here needs to shift to those willing to gamble on the safety of 100x larger systems.

I suspect that a different framing might be more realistic and more apt from our perspective. In terms of helpful actions we can take, I see the choice before us more as one between trying to slow down development vs. trying to steer future development in better (or less bad) directions conditional on the current pace of development continuing (of course, one could dedicate resources to both, but one would still need to prioritize between them). Both of those choices (as well as graded allocations between them) seem to come with a lot of risks, and they both strike me as gambles with potentially serious downsides. I don't think there's really a "safe" choice here.

All I'm really saying here is that the risk is way too high for comfort

I'd agree with that, but that seems different from saying that a fast software-driven takeoff is the most likely scenario, or that trying to slow down development is the most important or effective thing to do (e.g. compared to the alternative option mentioned above).

Greg_Colbourn @ 2023-05-03T14:19 (+5)

both strike me as gambles with potentially serious downsides.

What are the downsides from slowing down? Things like not curing diseases and ageing? Eliminating wild animal suffering? I address that here: "it’s a rather depressing thought. We may be far closer to the Dune universe than the Culture one (the worry driving a future Butlerian Jihad will be the advancement of AGI algorithms to the point of individual laptops and phones being able to end the world). For those who may worry about the loss of the “glorious transhumanist future”, and in particular, radical life extension and cryonic reanimation (I’m in favour of these things), I think there is some consolation in thinking that if a really strong taboo emerges around AGI, to the point of stopping all algorithm advancement, we can still achieve these ends using standard supercomputers, bioinformatics and human scientists. I hope so."

To be clear, I'll also say that it's far too late to only steer future development better. For that, Alignment needs to be 10 years ahead of where it is now!

a fast software-driven takeoff is the most likely scenario

I don't think you need to believe this to want to be slamming on the brakes now. As mentioned in the OP, is the prospect of mere imminent global catastrophe not enough?

Magnus Vinding @ 2023-05-03T14:50 (+3)

What are the downsides from slowing down?

I'd again prefer to frame the issue as "what are the downsides from spending marginal resources on efforts to slow down?" I think the main downside, from this marginal perspective, is opportunity costs in terms of other efforts to reduce future risks, e.g. trying to implement "fail-safe measures"/"separation from hyperexistential risk" in case a slowdown is insufficiently likely to be successful. There are various ideas that one could try to implement.

In other words, a serious downside of betting chiefly on efforts to slow down over these alternative options could be that these s-risks/hyperexistential risks would end up being significantly greater in counterfactual terms (again, not saying this is clearly the case, but, FWIW, I doubt that efforts to slow down are among the most effective ways to reduce risks like these).

a fast software-driven takeoff is the most likely scenario

I don't think you need to believe this to want to be slamming on the brakes now.

Didn't mean to say that that's a necessary condition for wanting to slow down. But again, I still think it's highly unclear whether efforts that push for slower progress are more beneficial than alternative efforts.

Greg_Colbourn @ 2023-05-03T21:04 (+5)

I think it's a very hard sell to try and get people to sacrifice themselves (and the whole world) for the sake of preventing "fates worse than death". At that point most people would probably just be pretty nihilistic. It also feels like it's not far off basically just giving up hope: the future is, at best, non-existence for sentient life; but we should still focus our efforts on avoiding hell. Nope. We should be doing all we can now to avoid having to face such a predicament! Global moratorium on AGI, now.

Magnus Vinding @ 2023-05-04T08:42 (+9)

I think it's a very hard sell to try and get people to sacrifice themselves (and the whole world) for the sake of preventing "fates worse than death".

I'm not talking about people sacrificing themselves or the whole world. Even if we were to adopt a purely survivalist perspective, I think it's still far from obvious that trying to slow things down is more effective than is focusing on other aims. After all, the space of alternative aims that one could focus on is vast, and trying to slow things down comes with non-trivial risks of its own (e.g. risks of backlash from tech-accelerationists). Again, I'm not saying it's clear; I'm saying that it seems to me unclear either way.

We should be doing all we can now to avoid having to face such a predicament!

But, as I see it, what's at issue is what the best way is to avoid such a predicament/how to best navigate given our current all-too risky predicament.

FWIW, I think that a lot of the discussion around this issue appears strongly fear-driven, to such an extent that it seems to get in the way of sober and helpful analysis. This is, to be sure, extremely understandable. But I also suspect that it is not the optimal way to figure out how to best achieve our aims, nor an effective way to persuade readers on this forum. Likewise, I suspect that rallying calls along the lines of "Global moratorium on AGI, now" might generally be received less well than would, say, a deeper analysis of the reasons for and against attempts to institute that policy.

Matt Brooks @ 2023-05-04T14:25 (+3)

Do you not trust Ilya when he says they have plenty more data?

https://youtu.be/Yf1o0TQzry8?t=656

Magnus Vinding @ 2023-05-04T15:03 (+2)

I didn't claim that there isn't plenty more data. But a relevant question is: plenty more data for what? He says that the data situation looks pretty good, which I trust is true in many domains (e.g. video data), and that data would probably in turn improve performance in those domains. But I don't see him claiming that the data situation looks good in terms of ensuring significant performance gains across all domains, which would be a more specific and stronger claim.

Moreover, the deference question could be posed in the other direction as well, e.g. do you not trust the careful data collection and projections of Epoch? (Though again, Ilya saying that the data situation looks pretty good is arguably not in conflict with Epoch's projections — nor with any claim I made above — mostly because his brief "pretty good" remark is quite vague.)

Note also that, at least in some domains, OpenAI could end up having less data to train their models with going forward, as they might have been using data illegally.

Greg_Colbourn @ 2023-05-04T15:12 (+4)

Let's hope that OpenAI is forced to pull GPT-4 over the illegal data harvesting used to create it.

Greg_Colbourn @ 2023-06-01T01:29 (+2)

Coming back to the point about data. Whilst Epoch gathered some data showing that the stock of high quality text data might soon be exhausted, their overall conclusion is that there is only a “20% chance that the scaling (as measured in training compute) of ML models will significantly slow down by 2040 due to a lack of training data.” Regarding Jacob Buckman's point about chess, he actually outlines a way around that (training data provided by narrow AI). As a counter to the wider point about the need for active learning, see DeepMind's Adaptive Agent and the Voyager "lifelong learning" Minecraft agent, both of which seem like impressive steps in this direction.

PeterSlattery @ 2023-05-03T04:29 (+24)

Thanks for writing this. I appreciate the effort and sentiment. My quick and unpolished thoughts are below. I wrote this very quickly, so feel free to critique.

The TLDR is that I think that this is good with some caveats but also that we need more work on our ecosystem to be able to do outreach (and everything else) better.

I think we need a better AI Safety movement to properly do and benefit from outreach work. Otherwise, this and similar posts for outreach/action are somewhat like a call to arms without the strategy, weapons and logistics structure needed to support them.

Doing the things you mention is probably better than doing nothing (some of these more than others), but it's far from what is possible in terms of risk minimisation and expected impact.

What do we need for the AI Safety movement to properly do and benefit from outreach work?

I think that doing effective collective outreach will require us to be more centralised and coordinated.

Right now, we have people like you who seem to believe that we need to act urgently to engage people and raise awareness, in opposition to other influential people like Rohin Shah and Oliver Habryka, who seem to oppose movement building (though this may just be the recruitment element).

The polarisation and uncertainty promote inaction.

I therefore don't think that we will get anything close to maximally effective awareness raising about AI risk until we have a related strategy and operational plan that has enough support from key stakeholders or is led by one key stakeholder (e.g., Will/Holden/Paul) and actioned by those who trust that person's takes.

Here are my related (low confidence) intuitions (based on this and related conversations mainly) for what to do next:

We need to find/fund/choose some/more people/process to drive overall strategy and operation for the mainstream AI Safety community. For instance, we could just have some sort of survey/voting system to capture community preferences/elect someone. I don't know what makes sense now, but it's worth thinking about.

When we know what the community/representatives see as the strategy and supporting operation, we need someone/some process to figure out who is responsible for executing the overall strategy and parts of the operations and communicating them to relevant people. We need behaviour level statements for 'who needs to do what differently'.

When we know 'who needs to do what differently' we need to determine and address the blockers and enablers to scale and sustain the strategy and operation (e.g., we likely need researchers to find what communication works with different audiences, communicators to write things, connect with, and win over, influential/powerful people, recruiters to recruit the human resources, developers and designers to make persuasive digital media, managers to manage these groups, entrepreneurs to start and scale the project, and funders to support the whole thing etc).

It's a big ask, but it might be our best shot.

Why hasn't somebody done this already?

As I see it, the main reason for all of the above is a lack of shared language and understanding, which emerged because of how the AI safety community developed.

Movement building/field building mean different things to different people and no-one knows what the community collectively supports or opposes in this regard. This uncertainty reduces attempts to do anything on behalf of the community, and the chances of success if anyone tries.

Perhaps because of this no-one who could curate preferences and set a direction (e.g., Will/Holden/Paul) feels confident to do so.

It's potentially a chicken and egg or coincidence of wants problem where most people would like someone like Holden to drive the agenda, but he doesn't know that, or thinks someone else would be better suited (and they don’t know). Or the people who could lead somehow know that the community doesn’t want anyone to lead it in this way, but haven't communicated this, so I don’t know that yet.

What happens if we keep going as we are?

I think that the EA community (with some exceptions) will mostly continue to function like a decentralised group of activists, posting conflicting opinions in different forums and social media channels, while doing high quality, but small scale, AI safety governance, technical and strategy work that is mostly known and respected in the communities it is produced in.

Various other more centralised groups with leaders like Sam Altman, Tristan Harris, Timnit Gebru etc will drive the conversations and changes. That might be for the best, but I suspect not.

Urgent, unplanned communication by EAs acting in isolation poses many risks. If lots of people who don't know what works for changing people's minds and behaviours post lots of things about how they feel, this could be bad.

These people could very well end up in isolated communities (e.g., just like many vegan activists I see who are mainly just reaching vegan followers on social media).

They could poison the well and make people associate AI safety with poorly informed and overconfident pessimists.

If people engage in civil disobedience we could end up being feared and hated and subsequently excluded from consideration and conversation.

Our actions could create abiding associations that will damage later attempts to persuade by more persuasive sources.

This could be the unilateralist's curse brought to life.

Other thoughts/suggestions

Test the communication at small scale (e.g., with a small sample of people on Mechanical Turk or with friends) before you do large scale outreach.

Think about taking a step back to prioritise between behaviours, ruling out the ones with more downside risk (so it is better to write letters to representatives than posts to large audiences on social media if unsure what is persuasive).

Don’t do civil disobedience unless you have read the literature about when and where it works (and maybe just don’t do it - that could backfire badly).

Think about the AI Safety ecosystem and indirect ways to get more of what you want by influencing/aiding people or processes within it:

For instance, I'd like for progress on questions like:

- What are the main arguments for and against doing certain things (e.g., the AI pause/public awareness raising)? What is the expert consensus on whether a strategy/action would be a good idea or not (e.g., what do superforecasters/AI orgs recommend)?

- When we have evidence for a strategy/action, then: Who needs to do what differently? Who do we need to communicate to, and what do we know is persuasive to them/how can we test that?

- Which current AI safety projects (e.g., technical, strategy, movement building) are most worth prioritising for resources (funding, time, advocacy)? What do experts think?

- What voices/messages can we amplify when we communicate? It's much easier to share something good from an expert than write it.

- Who could work with others for mutual benefit but doesn’t realise it yet?

I am thinking about, and doing, a little of some of these things, but have other obligations for 3-6 months and some uncertainty about whether I am well suited to do them.

Greg_Colbourn @ 2023-05-03T12:12 (+19)

These all seem like good suggestions, if we still had years. But what if we really do only have months (to get a global AGI moratorium in place)? In some sense the "fog of war" may already be upon us (there are already too many further things for me to read and synthesise, and analysis paralysis seems like a great path toward death). How did action on Covid unfold? Did all these kinds of things happen first before we got to lockdowns?

vegan activists

This is quite different. It's about personal survival of each and every person on Earth, and their families. (No concern for other people or animals is needed!)

This could be the unilateralist's curse brought to life.

Could it possibly be worse than what's already happened with DeepMind, OpenAI and Anthropic (and now Musk's X.ai)?

have other obligations for 3-6 months

Is there any way you can get out of those other obligations? Time really is of the essence here. Things are already moving fast, whether EA/the AI Safety community is coordinated and onboard or not.

Geoffrey Miller @ 2023-05-15T20:28 (+6)

Peter -- good post; these all seem reasonable as comments.

However, let me offer a counter-point, based on my pretty active engagement on Twitter about AI X-risk over the last few weeks: it's often very hard to predict which public outreach strategies, messages, memes, and points will resonate with the public, until we try them out. I've often been very surprised about which ideas really get traction, and which don't. I've been surprised that meme accounts such as @AISafetyMemes have been pretty influential. I've also been amazed at how (unwittingly) effective Yann LeCun's recklessly anti-safety tweets have been at making people wary of the AI industry and its hubris.

This unpredictability of public responses might seriously limit the benefits of carefully planned, centrally organized activism about AI risk. It might be best just to encourage everybody who's interested to try out some public arguments, get feedback, pay attention to what works, identify common misunderstandings and pain points, share tactics with like-minded others, and iterate.

Also, lack of formal central organization limits many of the reputational risks of social media activism. If I say something embarrassing or stupid as my Twitter persona @primalpoly, that's just a reflection on that persona (and to some extent, me), not on any formal organization. Whereas if I was the grand high vice-invigilator (or whatever) in some AI safety group, my bad tweets could tarnish the whole safety group.

My hunch is that a fast, agile, grassroots, decentralized campaign of raising AI X risk awareness could be much more effective than the kind of carefully-constructed, clearly-missioned, reputationally-paranoid organizations that EAs have traditionally favored.

RagingAgainstDarkness @ 2023-05-03T07:02 (+18)

I'm convinced. Raising public awareness is the most important thing to do now. Is there a ready-made presentation I can use to communicate more effectively to the general public? A presentation that explains AGI risks from the ground up?

Greg_Colbourn @ 2023-05-09T10:23 (+4)

Pauseai.info is now up. Not sure if this is exactly what you are looking for, but covers the basic arguments.

Otto @ 2023-05-04T09:56 (+13)

I don't know if everyone should drop everything else right now, but I do agree that raising awareness about AI xrisks should be a major cause area. That's why I quit my work on the energy transition about two years ago to found the Existential Risk Observatory, and this is what we've been doing since (resulting in about ten articles in leading Dutch newspapers, this one in TIME, perhaps the first comms research, a sold out debate, and a passed parliamentary motion in the Netherlands).

I miss two significant things on the list of what people can do to help:

1) Please, technical people, work on AI Pause regulation proposals! There is basically one paper now, possibly because everyone else thought a pause was too far outside the Overton window. Now we're discussing a pause anyway and I personally think it might be implemented at some point, but we don't have proper AI Pause regulation proposals, which is a really bad situation. Researchers (both policy and technical), please fix that, fix it publicly, and fix it soon!

2) You can start institutes or projects that aim to inform the societal debate about AI existential risk. We've done that and I would say it worked pretty well so far. Others could do the same thing. Funders should be able to choose from a range of AI xrisk communication projects to spend their money most effectively. This is currently really not the case.

Greg_Colbourn @ 2023-05-04T15:07 (+3)

Great work you are doing with Existential Risk Observatory, Otto! Fully agree with your points too - have added them to the post. The Shavit paper is great btw, and the ideas should be further developed as a matter of priority (we need to have working mechanisms ready to implement).

Otto @ 2023-05-16T12:59 (+1)

Yes exactly!

Darren McKee @ 2023-05-06T15:22 (+6)

FYI, I'm working on a book about the risks of AGI/ASI for a general audience, and I hope to get it out within 6 months. It likely won't be as alarmist as your post but will try to communicate the key messages, the importance, the risks, and the urgency. Happy to have more help.

Greg_Colbourn @ 2023-05-09T10:28 (+2)

Cool, but a lot is likely to happen in the next 6 months! Maybe consider putting it online and updating it as you go? I feel like this post I wrote is already in need of updating (with mention of the H100 "summoning portals" already in the pipeline, CthuluGPT, Stability.ai, discussion at Zuzalu last week, Pause AI.)

Darren McKee @ 2023-05-10T11:31 (+1)

I'm definitely aware of that complication but I don't think that is the best way to broader impact. Uncertainty abounds. If I can get it out in 3 months, I will.

yefreitor @ 2023-05-02T23:23 (+5)

"Common objections to this narrative are that there won’t be enough compute, or data, for this to happen. These don’t hold water after a cursory examination of our situation. We are nowhere near close to the physical limits to computation (the theoretical limit set by physics is, to a first approximation, as many orders of magnitude above current infrastructure as current infrastructure is above the Babbage engine)."

And we are nowhere, nowhere, nowhere, nowhere near being able to even begin approaching them. We're closer to nanotech. We're closer to dyson spheres. I can't emphasize this enough. It's like an iron-age armorsmith worrying about the physical limits of tensile strength.

Hardware overhang is a legitimate concern but you hurt your argument quite badly by mixing it with this stuff. In the whole history of the universe no one will ever be hit in the head with an entire ringworld. It's steel you have to worry about.

Greg_Colbourn @ 2023-05-03T10:50 (+3)

Noted. It's the sentence after that (below) that is the more important one, so perhaps that should go first (and the rest as a footnote [Edit: I've now made these changes to OP]).

We're on ~10^18 FLOPS for large clusters at the moment[14], but there are likely enough GPUs available for 100 times this[15]. And the cost of this – ~$10B - is affordable for many large tech companies and national governments, and even individuals!
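As a rough check on what these figures imply for a single training run, here is a minimal Python sketch. The ~10^18 FLOPS cluster figure and the 100x multiplier come from the text above; the 60-day run length and 40% utilization are illustrative assumptions only, not figures from the post.

```python
# Figures quoted in the comment above.
CLUSTER_FLOPS = 1e18   # ~10^18 FLOP per second for a large cluster today
SCALE_UP = 100         # "enough GPUs available for 100 times this"

# Illustrative assumptions (NOT from the post).
RUN_DAYS = 60          # assumed length of one training run
UTILIZATION = 0.4      # assumed fraction of peak throughput actually achieved

SECONDS_PER_DAY = 86_400


def training_flop(flops_per_second: float, days: float, utilization: float) -> float:
    """Total FLOP delivered by a cluster over a run of the given length."""
    return flops_per_second * days * SECONDS_PER_DAY * utilization


current = training_flop(CLUSTER_FLOPS, RUN_DAYS, UTILIZATION)
scaled = training_flop(CLUSTER_FLOPS * SCALE_UP, RUN_DAYS, UTILIZATION)

print(f"current large cluster: {current:.2e} FLOP per run")
print(f"100x cluster:          {scaled:.2e} FLOP per run")
```

Changing `RUN_DAYS` or `UTILIZATION` shifts both totals by the same factor, so the 100x gap between them does not depend on those assumptions.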

Harlan @ 2023-05-03T20:38 (+4)

Great post!

"All that is stopping them being even more powerful is spending on compute. Google & Microsoft are worth $1-2T each, and $10B can buy ~100x the compute used for GPT-4. Think about this: it means we are already well into hardware overhang territory[5]."

I broadly agree with the point that compute could be scaled up significantly, but I want to add a few notes about the claim that $10B buys 100x the compute of GPT-4.

Altman said "more" when asked if GPT-4 had cost $100M to train. We don't know how much more. But PaLM seems to have only cost $9M-$23M so $100M is probably reasonable.

If OpenAI was buying up 100x the compute of GPT-4, maybe that would be a big enough spike in demand for GPUs that they would become more expensive. I'm pretty uncertain about what to expect there, but I estimated that PaLM used the equivalent of 0.01% of the world's current GPU/TPU computing capacity for 2 months. GPT-4 seems to be bigger than PaLM, so 100x the compute used for it might be the equivalent of more than 1% of the world's existing GPU/TPU computing capacity for 2 months.
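The estimate above comes down to two multiplications, sketched here in Python. The $100M floor, the 0.01% PaLM share and the 100x multiplier are taken from this comment; constant GPU prices and the `gpt4_to_palm_ratio` parameter are assumptions introduced for illustration, since the comment only says GPT-4 "seems to be bigger" than PaLM.

```python
# Figures from the comment above.
GPT4_COST_FLOOR_USD = 100e6   # Altman said "more" than $100M, so treat as a floor
PALM_CAPACITY_SHARE = 0.0001  # 0.01% of world GPU/TPU capacity, held for 2 months
SCALE_UP = 100                # "100x the compute used for GPT-4"


def scaled_cost(base_cost_usd: float, factor: float) -> float:
    """Cost of `factor` times the compute, assuming GPU prices stay constant."""
    return base_cost_usd * factor


def scaled_share(palm_share: float, gpt4_to_palm_ratio: float, factor: float) -> float:
    """Share of world capacity needed for `factor` times GPT-4's compute.

    `gpt4_to_palm_ratio` is hypothetical: how many times more compute GPT-4
    used than PaLM. A value of 1.0 gives a lower bound.
    """
    return palm_share * gpt4_to_palm_ratio * factor


cost = scaled_cost(GPT4_COST_FLOOR_USD, SCALE_UP)
share_floor = scaled_share(PALM_CAPACITY_SHARE, 1.0, SCALE_UP)

print(f"100x GPT-4 at constant prices: at least ${cost / 1e9:.0f}B")
print(f"share of world capacity for 2 months: at least {share_floor:.2%}")
```

With a ratio of 1.0 the share comes out at about 1%, which is why a GPT-4 larger than PaLM pushes the figure above 1%. The constant-price assumption is the weakest link, as a demand spike of that size could raise GPU prices.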

Greg_Colbourn @ 2023-05-03T21:13 (+3)

Thanks! Interested in getting more stats on global GPU/TPU capacity (there seems to be a dearth of good stats available). In your AI Impacts report, you mention paywalled reports. How much are they to access? (DM me and I can pay).

Epoch AI estimate that GPT-4 costs only $40M to train (not sure whether that is at today's prices, after cost improvements since last year).

"100x the compute used for it might be the equivalent of more than 1% of the world's existing GPU/TPU computing capacity for 2 months."

1% seems well within reach for the biggest players :(

Greg_Colbourn @ 2023-06-13T08:11 (+3)

I submitted this to the OpenPhil AI Worldviews Contest on 31st May with a few additions and edits - this pdf version is most up to date.

Greg_Colbourn @ 2023-06-14T11:16 (+2)

Matthew Barnett's compute-based framework for thinking about the future of AI corroborates my view that data is not likely to be a bottleneck. Also, contrary to the section "against very short timelines", I argue that in fact, the data/framework used is enough to make one even more worried than I am in the OP. 1 OOM FLOP more than I previously said ("100x the compute used for GPT-4") is likely available basically now to some actors; or 4 OOM including algorithmic improvements that come "for free" with compute scaling! This (10^28-10^31 FLOP) means AGI is possible this year.
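The orders of magnitude (OOM) here can be checked with a short Python sketch, working in log10 space. It assumes one reading of the comment: that the 10^28 end of the range is "100x GPT-4" plus 1 OOM of hardware, and the 10^31 end is "100x GPT-4" plus 4 OOM of effective compute including algorithmic gains. The GPT-4 training compute is not stated in the text; the sketch only derives what the range implies under that reading.

```python
# All quantities are log10 values, i.e. orders of magnitude (OOM).
PREVIOUS_OOM = 2           # "100x the compute used for GPT-4" = 2 OOM
EXTRA_HARDWARE_OOM = 1     # "1 OOM FLOP more than I previously said"
EXTRA_WITH_ALGO_OOM = 4    # "4 OOM including algorithmic improvements"

LOW_LOG10_FLOP = 28        # lower end of the quoted 10^28-10^31 FLOP range
HIGH_LOG10_FLOP = 31       # upper end of the quoted range

# GPT-4 training compute implied by each end of the range (assumed reading).
implied_from_low = LOW_LOG10_FLOP - PREVIOUS_OOM - EXTRA_HARDWARE_OOM
implied_from_high = HIGH_LOG10_FLOP - PREVIOUS_OOM - EXTRA_WITH_ALGO_OOM

print(f"implied GPT-4 compute (low end):  10^{implied_from_low} FLOP")
print(f"implied GPT-4 compute (high end): 10^{implied_from_high} FLOP")
print(f"ends of the range agree: {implied_from_low == implied_from_high}")
```

Both ends imply the same baseline, so the quoted range is internally consistent under this reading.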