We can do better than argmax

By Jan_Kulveit, technicalities @ 2022-10-10T10:32 (+113)

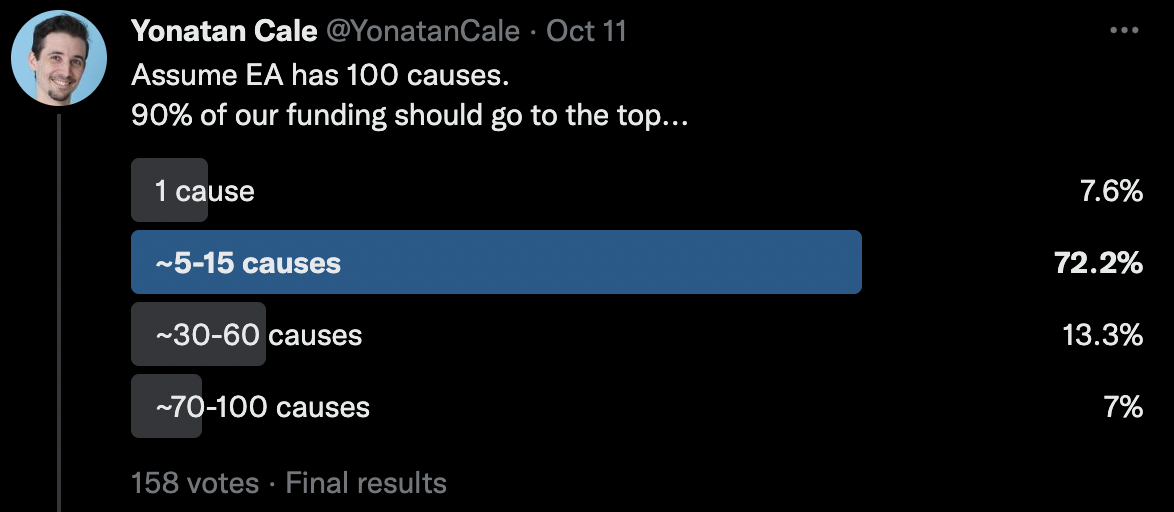

Summary: A much-discussed normative model of prioritisation in EA is akin to argmax (putting all resources on your top option). But this model often prescribes foolish things, so we rightly deviate from it – but in ad hoc ways. We describe a more principled approach: a kind of softmax, in which it is best to allocate resources to several options by confidence. This is a better yardstick when a whole community collaborates on impact; when some opportunities are fleeting or initially unknown; or when large actors are in play.

Epistemic status: Relatively well-grounded in theory, though the analogy to formal methods is inexact. You could mentally replace “argmax” with “all-in” and “softmax” with “smooth” and still get the gist.

Gavin wrote almost all of this one, based on Jan’s idea.

> many EAs’ writings and statements are much more one-dimensional and “maximizy” than their actions.

Cause prioritisation is often talked about like this:

- Evaluate a small number of options (e.g. 50 causes);

- Estimate their {importance, tractability, and neglectedness} from expert point estimates;

- Give massive resources to the top option.

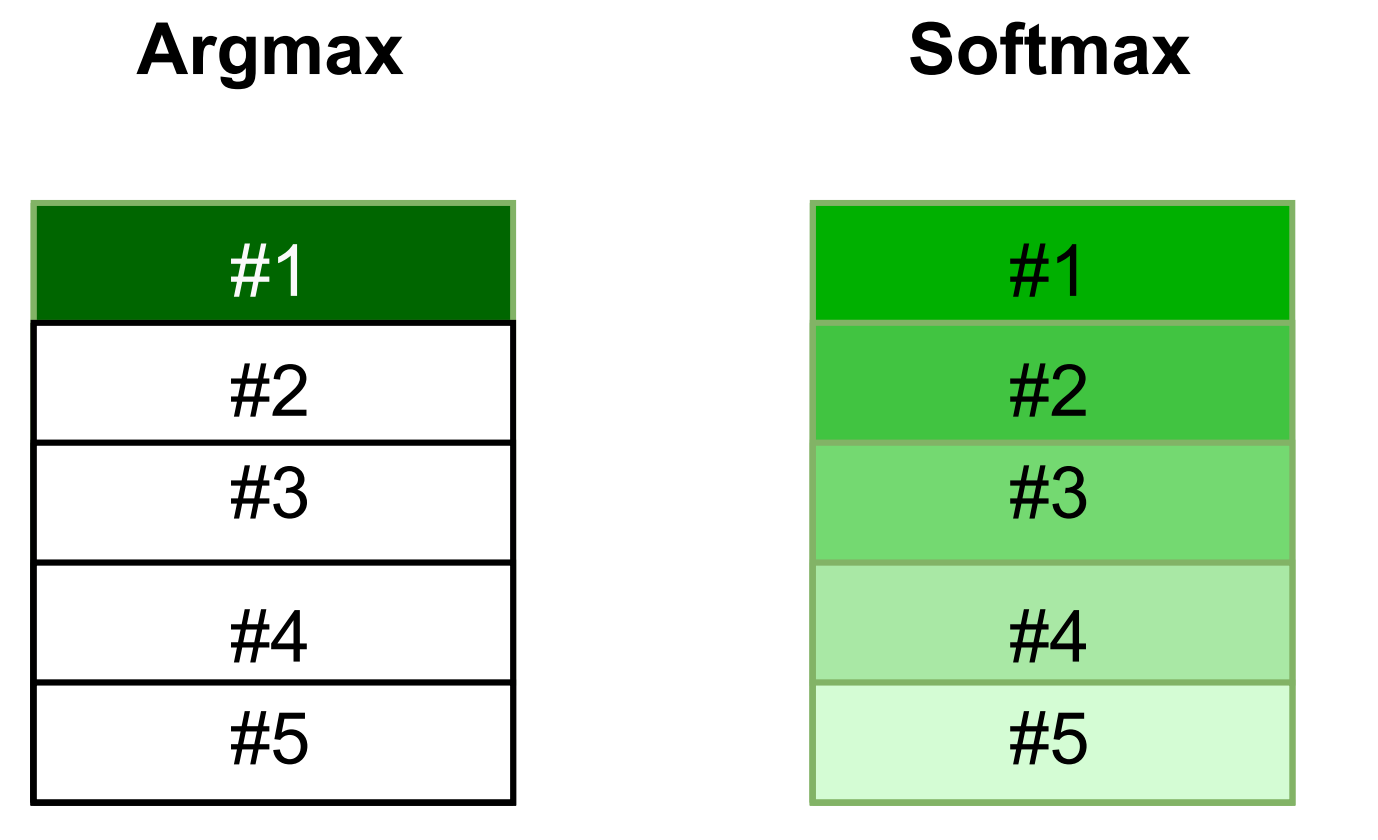

You can see this as taking the argmax: as figuring out which input (e.g. “trying out AI safety”; “going to grad school”) will get us the most output (expected impact). So call this argmax prioritisation (AP).

AP beats the hell out of the standard procedure (“do what your teachers told you you were good at”; “do what polls well”). But it’s a poor way to run a portfolio or community, because it only works when you’re allocating marginal resources (e.g. one additional researcher); when your estimates of the effect or cost-effect are not changing fast; and when you already understand the whole action space. [1]

It serves pretty well in global health. But where these assumptions are severely violated, you want a different approach – and while alternatives are known in technical circles, they are less understood by the community at large.

Problems with AP, construed naively:

- Monomania: the argmax function returns a single option; the winner takes all the resources. If people naively act under AP without coordinating, we get diminishing returns and decreased productivity (because of bottlenecks in the complements to adding people to a field, like ops and mentoring). Also, under plausible assumptions, the single cause it picks will be a poor fit for most people. To patch this, the community has responded with the genre "You should work on X instead of AI safety" or “Why X is actually the best way to help the long-term future”. We feel we need to justify not argmaxing, or to represent our thing as the true community argmax. And in practice justification often involves manipulating your own beliefs (to artificially lengthen your AI timelines, say), appealing to ad hoc principles like worldview diversification [2], or getting into arguments about the precise degree of crowdedness of alignment.

- Stuckness: Naive argmax gives no resources to exploration (because we assume at the outset that we know all the actions and have good enough estimates of their rank). As a result, decisions can get stuck at local maxima. The quest for "Cause X" is a meta patch for a lack of exploration in AP. Also, from experience, existing frameworks treat value-of-information as an afterthought, sometimes ignoring it entirely. [3]

- Flickering: If the top two actions have similar utilities, small changes in the available information lead to endless costly jumps between options. (Maybe even cycles!) Given any realistic constraints about training costs or lags or bottlenecks, you really don't want to do this. This has actually happened in our experience, with some severe switching costs (years lost per person). OpenPhil intentionally added inertia to their funding pledges to build trust and ride out this kind of dynamic.

(But see the appendix for a less mechanical approach.)
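To make the flickering problem concrete, here is a minimal sketch with made-up numbers. The cause values, the noise level, and the function names are all illustrative assumptions, not estimates of anything real. It counts how often a naive argmax changes its pick when the estimates are re-drawn with a little noise each round.

```python
import random

def argmax_choice(estimates):
    """Index of the option with the highest estimated value."""
    return max(range(len(estimates)), key=lambda i: estimates[i])

def count_switches(true_values, noise_sd, rounds, seed=0):
    """Count how often the argmax pick changes between consecutive rounds."""
    rng = random.Random(seed)
    switches = 0
    previous = None
    for _ in range(rounds):
        # Each round we re-estimate every option with fresh, unbiased noise.
        noisy = [v + rng.gauss(0, noise_sd) for v in true_values]
        pick = argmax_choice(noisy)
        if previous is not None and pick != previous:
            switches += 1
        previous = pick
    return switches

# Hypothetical values: two near-tied causes versus a clear leader.
near_tied = count_switches([1.00, 0.99, 0.20], noise_sd=0.05, rounds=1000)
clear_gap = count_switches([1.00, 0.50, 0.20], noise_sd=0.05, rounds=1000)
print(near_tied, clear_gap)
```

When the top two options are nearly tied, the pick changes in a large share of rounds; with a clear gap it essentially never does. Each change would carry a real switching cost.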

Softmax prioritisation

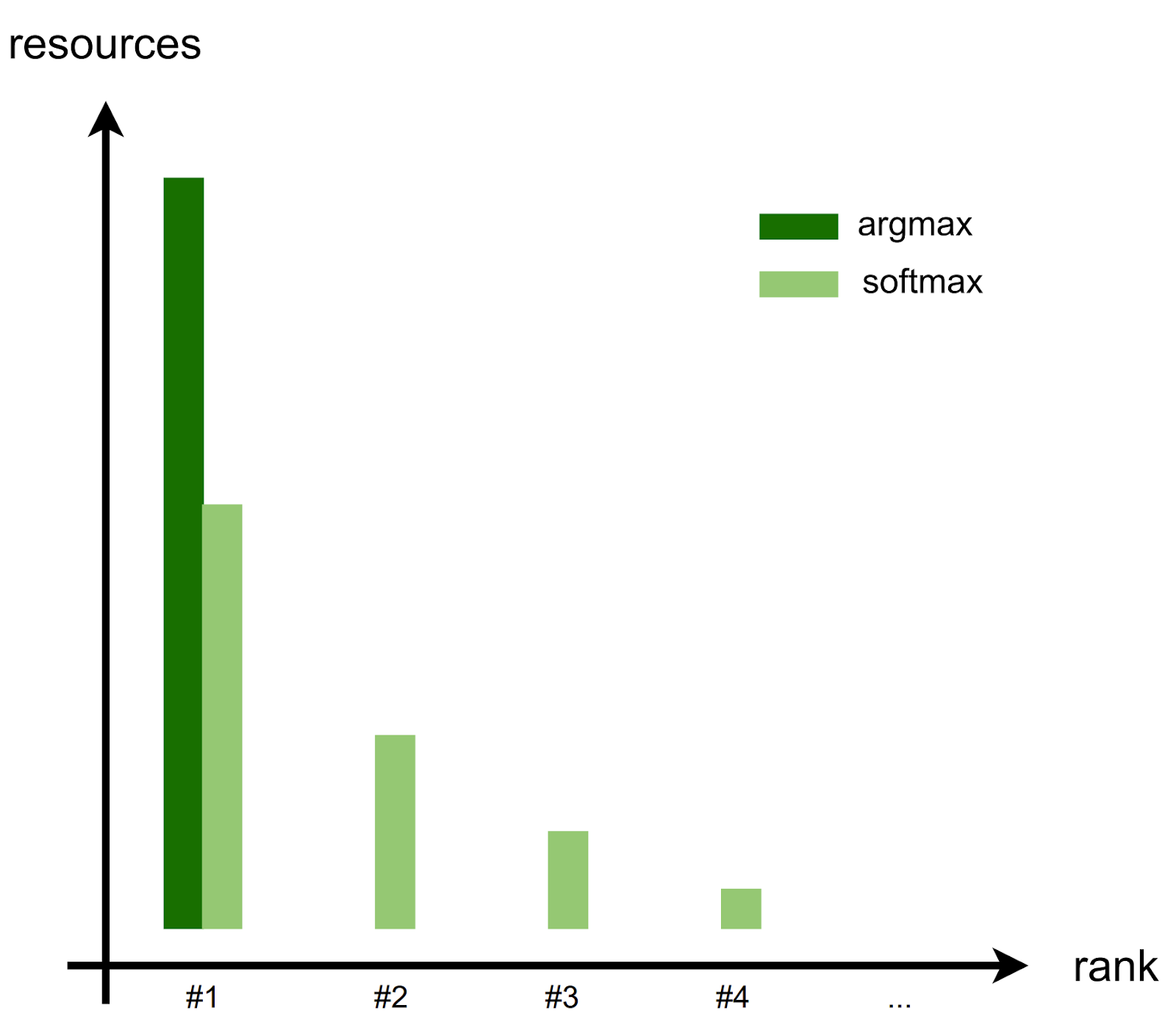

Softmax (“soft argmax”) is a function which approximates the argmax in a smooth fashion. Instead of giving you one top action, it gives you a set of probabilities. See this amazing (and runnable!) tutorial from Evans and Stuhlmüller.

Softmax is a natural choice to inject a bit of principled exploration. Say you were picking where to eat, instead of what to devote your whole life to. Example with made-up numbers: where argmax goes to the best restaurant every day, softmax might go to the best restaurant ~70% of the time, the second best ~20% of the time, the third best ~5% of the time... The weights here are the normalised exponential of how good you think each is.
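As a rough illustration of "the normalised exponential", here is a small sketch. The restaurant scores are invented, chosen only so that the output lands near the made-up percentages above.

```python
import math

def softmax(scores):
    """Normalised exponential of the scores; the outputs sum to 1."""
    shift = max(scores)  # subtracting the max avoids overflow; the result is unchanged
    exps = [math.exp(s - shift) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical "how good is this restaurant" scores, best first.
scores = [3.0, 1.8, 0.4, 0.0]
weights = softmax(scores)
best = scores.index(max(scores))  # argmax would go here every single day

for i, w in enumerate(weights):
    print(f"restaurant {i}: {w:.0%} of visits")
```

With these scores the weights come out near 70%, 21%, 5% and 3%, whereas argmax puts 100% on restaurant 0.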

The analogy is nicer when we consider softmax’s temperature parameter, which controls how wide your uncertainty is, and so how much exploration you want to do. This lets us update our calculation as we explore more: under maximum uncertainty, softmax just outputs a uniform distribution over options, so that we explore literally every option with equal intensity. Under maximum information, the function outputs just the argmax option. In real life we are of course in the middle for all causes – but this is useful, if you can guess how far along the temperature scale your area (or humanity!) currently is.
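The two limits can be checked directly. This is a sketch under the usual convention that the weights are proportional to exp(score / T); the scores and the three temperatures are arbitrary choices for illustration.

```python
import math

def softmax_t(scores, temperature):
    """Softmax with temperature: weights proportional to exp(score / T)."""
    scaled = [s / temperature for s in scores]
    shift = max(scaled)  # for numerical stability only
    exps = [math.exp(x - shift) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

scores = [3.0, 1.8, 0.4, 0.0]                 # hypothetical estimates
hot = softmax_t(scores, temperature=1000.0)   # very uncertain: close to uniform
mid = softmax_t(scores, temperature=1.0)      # somewhere in the middle
cold = softmax_t(scores, temperature=0.01)    # very confident: close to argmax

for name, w in [("hot", hot), ("mid", mid), ("cold", cold)]:
    print(name, [round(x, 3) for x in w])
```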

We have been slowly patching AP over the years, approximating a principled alternative. We suggest that softmax prioritisation incorporates many of these patches. It goes like this:

- Start at high temperature, spreading weight fairly evenly across all the options.

- Observe what happens after taking each option.

- Decrease your temperature as you explore and learn more, thus gradually approaching argmax.

(To be clear, we’re saying the community should run this, and that this indirectly implies individual attitudes and decisions. See Takeaways below.)

So "softmax" prioritisation says that it is normative for some people to work on the second and third-ranked things, even if everyone agrees on the ranking.
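The three steps above can be sketched as a toy simulation. Everything here is an assumption made for illustration: the true values, the noise, the geometric cooling schedule, and the use of running means as estimates. It is not a claim about how any real community does or should compute this.

```python
import math
import random

def softmax_t(scores, temperature):
    """Weights proportional to exp(score / T)."""
    scaled = [s / temperature for s in scores]
    shift = max(scaled)
    exps = [math.exp(x - shift) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def anneal(true_values, rounds=2000, t_start=5.0, t_end=0.05, noise_sd=1.0, seed=0):
    """Sample options by softmax over running-mean estimates while cooling."""
    rng = random.Random(seed)
    n = len(true_values)
    estimates = [0.0] * n   # start ignorant: every option looks the same
    counts = [0] * n
    for step in range(rounds):
        # Geometric cooling from t_start down to t_end (an assumed schedule).
        frac = step / (rounds - 1)
        temperature = t_start * (t_end / t_start) ** frac
        weights = softmax_t(estimates, temperature)
        choice = rng.choices(range(n), weights=weights)[0]
        # Observe a noisy outcome and update the running mean for that option.
        outcome = true_values[choice] + rng.gauss(0, noise_sd)
        counts[choice] += 1
        estimates[choice] += (outcome - estimates[choice]) / counts[choice]
    return estimates, counts

estimates, counts = anneal([1.0, 0.8, 0.3, 0.0])
print(counts)
```

Early on every option gets tried; as the temperature falls, most of the effort moves to the options that look best, while the early exploration has still bought information about the rest.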

Why should the EA community as a whole do softmax prioritisation?

- Smoothing. As discussed in Monomania, in practice it’s not optimal to throw everything at one cause, because of uncertainty, diminishing returns, limited mentoring and room for funding, and poor fit with most individuals. So we should spread our resources out (and in fact we do).

- Total allocation. Allocating marginal resources (e.g. as a single altruistic donor looking to improve global health) is really different from allocating a large fraction of total resources (e.g. when the resources of an entire nation-state are allocated among pandemic interventions). For marginal allocation, what matters is the local cost/benefit slope. When managing total resource allocation, understanding the whole curve is vital. [4]

Consider EA as one big "collective agent", and think about the allocation of people as if it was collaborative. Notably, the optimal allocation of people is not achieved by everyone individually doing argmax prioritisation, since we need to take into account crowding and elasticities (“room for more funding” and so on).

- Due weight on exploration and optionality. When you're choosing between many possible interventions and new interventions are constantly appearing and disappearing, then you should spend significant resources on 1) finding options, 2) trying them out, and 3) preserving options that might otherwise vanish. (e.g. Going to the committee meetings and fundraisers so that people see you as a live player.)

Takeaways

Lots of people already act as if the community was using something like softmax prioritisation. So our advice is more about cleaning up the lore; resolving cognitive dissonance between our argmax rhetoric and softmax actions; and pushing status gradients to align with the real optima. In practice:

- Explore with a clear conscience! List new causes; look for new interventions within them; look for new approaches within interventions; one might be better than all current ones.

- Stand tall! Work on stuff which is not the argmax with a clear conscience, because you are part of an optimal community effort. Similarly, praise people for exploring new options and prioritising in a “softmax” fashion; they are vital to the collective project.

- Similarly, you don’t need to distort your beliefs to say that what you’re working on is the argmax.

- Don’t flicker! Think twice about switching causes if you’ve already explored several.

Ultimately: we have made it high status to throw yourself at 80,000 Hours’ argmax output, AI. (Despite their staff understanding all of the above and communicating it!) We have not made it very high status to do anything else. [5]

So: notice that other directions are also uphill; help lift things wrongly regarded as downhill. Diversifying is not suboptimal pandering. Under more sensible assumptions, it’s just sound.

See also

- This wonderful post by Katja Grace

- Halstead’s classic critique of ITN

- Cotton-Barratt on overoptimising, Karnofsky on perils, Douglas on goodhart, Manheim on vaguely related issues.

“argmax stuck in a local maximum, photorealistic 4k”

Thanks to Fin Moorhouse, Misha Yagudin, Owen Cotton-Barratt, Raymond Douglas, Stag Lynn, Andis Draguns, Sahil Kulshrestha, and Arden Koehler for comments. Thanks to Ramana and Eliana for conversations about the concept.

Appendix: Other reasons to diverge from argmax

In order of how much we endorse them:

- Value of information is usually incredibly high

- You don’t know the whole option set

- Diminishing returns

- Moral uncertainty

- Concave altruism (i.e. Jensen’s inequality!)

- The optimiser’s curse

- Worldview diversification

- Principled risk aversion, as at GiveWell

- Strategic skulduggery

- Decrease variance of your portfolio for more impact compounding(?)

For individual deviations from the community's argmax ("this is the best thing to do, ignoring who you are and your aptitudes"), your personal fit (or lack of it) and local opportunities can mean that you completely outdo the impact you would have had working on cause #1. (But this deviation is still a one-person argmax.)

Appendix: Technical details

The main post doesn’t distinguish all of the ways argmax prioritisation fails:

When you have uncertain rank estimates

The optimizer’s curse means that you will in general (even given an unbiased estimator) screw up and have inflated estimates for the utility of option #1. The associated inflation can be more than a standard deviation, which will often cause the top few ranks to switch. Softmax prioritisation softens the blow here by spreading resources in a way which means that the true top option will not be totally neglected.
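A quick simulation shows the size of the effect. This is a sketch under strong simplifying assumptions: ten hypothetical options with identical true value, and independent, unbiased, unit-variance noise on each estimate.

```python
import random

def average_inflation(true_values, noise_sd, trials, seed=0):
    """Mean of (estimate of the apparent winner minus its true value)."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(trials):
        estimates = [v + rng.gauss(0, noise_sd) for v in true_values]
        winner = max(range(len(estimates)), key=lambda i: estimates[i])
        # Every single estimate is unbiased, but the selected one is not.
        total += estimates[winner] - true_values[winner]
    return total / trials

inflation = average_inflation([0.0] * 10, noise_sd=1.0, trials=5000)
print(f"average overestimate of the apparent top option: {inflation:.2f} sd")
```

In this setup the apparent winner is overestimated by roughly one and a half noise standard deviations on average, even though no individual estimate is biased.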

When you have an incomplete action set

The first justification for exploration (i.e. optimal allocations which sample actions besides the current top few) is that we just do not know all of the actions that are available. This idea is already mainstream in the form of “Cause X” and Cause Exploration, so all we’re doing is retroactively justifying these. See also MacAskill’s discussion on Clearer Thinking.

When you have a time-varying action set

If actions are only available for a window in time, then you have a much more complicated decision problem. At minimum, you need to watch for new opportunities (research which takes some of your allocation) and rerun the prioritisation periodically.

When we further consider actions with a short lifespan, we have even more reason to devote permanent resources to the order and timing of actions.

When you’re doing total allocation instead of marginal

When we’re allocating many people, we don’t get the optimal allocation by everyone individually doing argmax prioritisation, since we need to take into account diminishing returns, limiting factors (“room for more funding”, “the mentor bottleneck”, and so on), and other scaling effects.
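Here is a toy version of that point. The logarithmic production function and the cause "scales" are assumptions picked only because they give diminishing returns; real production functions will look different.

```python
import math

def impact(scale, people):
    """Hypothetical concave production function: scale * log(1 + people)."""
    return scale * math.log1p(people)

def naive_argmax(scales, total_people):
    """Everyone independently picks the cause with the highest headline scale."""
    allocation = [0] * len(scales)
    allocation[scales.index(max(scales))] = total_people
    return allocation

def coordinated(scales, total_people):
    """Add people one at a time to whichever cause has the highest marginal gain."""
    allocation = [0] * len(scales)
    for _ in range(total_people):
        gains = [impact(s, n + 1) - impact(s, n) for s, n in zip(scales, allocation)]
        allocation[gains.index(max(gains))] += 1
    return allocation

def total_impact(scales, allocation):
    """Sum of each cause's impact under the given allocation."""
    return sum(impact(s, n) for s, n in zip(scales, allocation))

scales = [10.0, 6.0, 3.0]  # made-up cause sizes
all_in = naive_argmax(scales, 100)
spread = coordinated(scales, 100)
print(all_in, round(total_impact(scales, all_in), 1))
print(spread, round(total_impact(scales, spread), 1))
```

Note that the first person added by `coordinated` still goes to the top cause, which is the marginal case where argmax is right. It is only as the allocation grows that crowding pushes people into the second and third causes.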

Fin gives a nice visual overview of the production functions involved. As well as letting us exploit interventions with increasing returns to scale, total allocation means you have the resources to escape local maxima. This bears on the perennial “systemic change” critique: that critique is ~true when you are large!

When you have light tails

The power-law distribution of global health charity impacts was the key founding insight of EA. But there is nothing inevitable about altruistic acts taking this distribution, and Covid policy is a good example: the effects were mostly pretty additive, or multiplicative in the unfortunate way (when each effect is bounded in [0,1] and coupled, so that failure at one diminishes your overall impact). Argmax is much less bad under heavy tails.
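The "multiplicative in the unfortunate way" case can be sketched as follows. The response curve, the budget, and the number of components are hypothetical; the only structural assumption is that overall impact is the product of per-component qualities, each bounded by 1.

```python
import math

def quality(resources):
    """Hypothetical bounded response: quality of one component, in [0, 1)."""
    return 1.0 - math.exp(-resources)

def coupled_impact(allocation):
    """Overall impact when every component must work: product of qualities."""
    return math.prod(quality(r) for r in allocation)

budget = 10.0
components = 5
token = 0.1  # token effort on the components that are not the favourite

# Silver-bullet bet: almost everything on one component.
all_in = [budget - token * (components - 1)] + [token] * (components - 1)
# Same budget spread evenly across all components.
even = [budget / components] * components

print(f"all-in: {coupled_impact(all_in):.5f}")
print(f"even:   {coupled_impact(even):.5f}")
```

Because each factor is capped at 1, pouring more into the best component cannot make up for weak links elsewhere, so the even spread wins by a wide margin here.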

Appendix: Softmax against Covid

With Covid in February 2020, it was hard to estimate the importance, tractability, or neglectedness of different actions; most options were unknown to me, and the impact of a given actor was highly contextual. Also, it was possible to get into a position to influence the total allocation of resources.

When planning his Covid work, Jan usually relied on a softmax heuristic: repeatedly re-evaluating his estimates of the options, putting extra weight on the value of information. While he’s happy with the results, on some occasions it was difficult to explain what he was trying to do to people who grew up with ITN and argmax prioritisation more generally.

Future crises will have different subsets of assumptions holding. Granted that the softmax approach is important, and sometimes practically diverges from the argmax, it seems good to have more people think about the tradeoff between argmax and softmax, exploration, VoI, and so on.

In dynamic environments like crises and politics, you benefit from rapidly re-evaluating actions and chaining together sequences of low-probability outcomes. ("Can I get invited to the ‘room where it happens’? Can I be then taken seriously as a scientist? Can I then be appointed to a committee? Can we then write unprecedented policy? Can that policy then be enforced?")

Classify crises by how many things you need to get right to solve them. In some crises, discovering the single silver bullet and investing in it heavily is the best approach. In others, solutions require e.g. 8 out of 10 pieces in place, and getting all of them highly functional.

Covid, at least after spreading out of Wuhan, seems closer to the second case: the winning strategy consisted of many policies, implemented well. Countries which did even better (e.g. Taiwan) did even more: "do a hundred different things, some of them won't have any effect, but all together it’ll work".

Note that this goes against the community instinct that some solutions will be much more effective than others. This expectation is often unsound outside the original context of global health projects. (Again, this context models the marginal returns to investing more or less on given actions, assuming we have no (market) power. But national policy is closer to total allocation than marginal allocation: you can have profound effects on other actors’ incentives, up to and including full power.)

i.e. Emergency policy impact differs from global health NGO impact! Governments are better modelled as performing total allocation or as monopsonies. And Covid national policy arguably didn’t have a single "silver bullet" policy with 10x the impact of the next best policy. [6]

Appendix: Enlightened argmax

In theory there’s nothing stopping the argmaxer and their argmaxer friends from doing something cleverer. For instance, you should argmax over lots of local factors (like each person’s comparative advantage and personal fit, the room for funding each cause has, the mentor bottlenecks, etc), which would let you reach quite different answers than the naive “what would be best, being agnostic about individual factors and bottlenecks?” we do to produce consensus reality.

Done optimally (i.e. by a set of perfectly coordinated epistemic peers with all the necessary knowledge of every cause), argmax could get all the good stuff. But in practice, people can't compute the argmax for themselves (well), so we rely on cached calculations from a few central estimators, which produces the above issues. A lot of us simply take the output of 80k's argmax and add a little noise from personal fit.

e.g. Does argmax really say to not explore?

If you have perfectly equal estimates of impact of everything, argmax sensibly says you must explore to distinguish them better. But once you know a bit, or if your estimates are noisy and something appears slightly better, a naive argmax over actions tells you to do just that one thing.

You can always rescue argmax by going meta – instead of taking argmax over object-level actions, you instead take the argmax decision procedure. “What way of deciding actions is best? Enumerate, rank, and saturate.” (This is a relative of what Michael Nielsen calls “EA judo”.)

Still: yes, you can reframe the whole post as "EAs are often using argmax at the wrong level, evaluating "actions" (like career choices). This is often suboptimal: they should be using argmax on the meta-level of decision procedures and community portfolios".

FAQ

Q: It's not clear to me that “softmax” prioritisation avoids flickering? Specifically: At high temperature, there's lots of moving around. At low temperature, it converges to argmax, which supposedly has the flickering problem!

A: Yes, this is vague. This is because there are several levels at play: the literal abstract algorithm, the EA community’s pseudoalgorithm mixture, and each individual realising that it is or should be the community-level algorithm.

First, assume a middling temperature (if nothing else because human optimisation efforts are so young). "Softmax" prioritisation says that it is normative for some people to work on the second and third, even if everyone agreed on the ranking. Flickering is suppressed at the level of an individual switching their cause (because we all recognise that some stickiness is optimal for the community). That’s the idea anyway.

Q: How do I do this in practice? Am I even supposed to calculate anything?

A: If you share all of the intuitions already there's maybe not much point. But I expect thinking in terms of temperature and community portfolio to help, even if it's informal. One stronger formal claim we can make is that "the type signature of prioritisation is W --> D", where

- W = a world model including uncertainty and a gap where missing options should be

- D = a distribution of weights over causes.
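One way to read that type signature is as a function from a world model to a set of weights, rather than to a single winning action. The sketch below is only one possible instance: the class, its field names, the fixed "unexplored" slice, and the use of softmax are all assumptions for illustration.

```python
import math
from dataclasses import dataclass
from typing import Dict

@dataclass
class WorldModel:
    """W: point estimates, how unsure we are, and room for options not yet found."""
    estimates: Dict[str, float]   # estimated value of each known cause
    temperature: float            # higher means more uncertainty, so more spreading
    unknown_share: float = 0.1    # weight reserved for not-yet-identified options

Distribution = Dict[str, float]   # D: weights over causes, summing to 1

def prioritise(world: WorldModel) -> Distribution:
    """One possible W -> D map: softmax over known causes plus an exploration slice."""
    top = max(world.estimates.values())
    exps = {k: math.exp((v - top) / world.temperature) for k, v in world.estimates.items()}
    total = sum(exps.values())
    known = 1.0 - world.unknown_share
    weights = {k: known * e / total for k, e in exps.items()}
    weights["unexplored"] = world.unknown_share
    return weights

world = WorldModel({"cause A": 3.0, "cause B": 2.0, "cause C": 1.0}, temperature=1.0)
portfolio = prioritise(world)
print(portfolio)
```

By contrast, a naive argmax has the type W --> a single action, which leaves nowhere to record either the spread or the gap for missing options.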

Q: Is this post about creating defensibility for doing more normal things? That is, making it easier to persuade someone who needs a new formalism ("softmax") to be moved to depart from an earlier formalism ("argmax"). There's something broken about banning sensible intuitions if they don't align with a formalism. If this is the way a large part of the community works, you might have to play up to it anyway. If that is indeed the context & goal, details are probably irrelevant. But I think it would be more honest to say so.

A: It’s somewhere in between a methodology and a bid to shift social reality. I can't deny that maths is therapy for a certain part of the audience. But I actually do think softmax is better than existing justifications for sensible practices. We made up a bunch of bad coping mechanisms for our own argmax rhetoric. We want the sensible practices without the cognitive dissonance and suboptimal spandrels.

[1] This form is a legacy of argmax prioritisation’s original context: evaluating global health charities. It seems to work quite well there because the assumptions are not violated very hard. (Though note that rank uncertainty continues to bite, even in this old and intensely studied domain.) The trouble is when it is used as a universal prioritisation algorithm where it will reliably fail.

[2] Worldview diversification is way less ad hoc than the other things: it is a lot like Thompson sampling.

[3] This is changing! OpenPhil recently commissioned Tom Adamczewski to build this cool tool.

[4] We wrote this with government policy advice in mind as a route to affecting large fractions of the total allocation. But it also applies to optimistic scenarios of EA scaling.

[5] OK, it’s also high-status to pontificate about the problems of EA-as-a-whole, like us.

[6] At the individual level, there were some silver bullets, like not going to the pub or wearing a P100 mask.

elifland @ 2022-10-10T10:58 (+25)

(I've only skimmed the post)

This seems right theoretically, but I'm worried that people will read this and think this consideration ~conclusively implies fewer people should go into AI alignment, when my current best guess is the opposite is true. I agree sometimes people make the argmax vs. softmax mistake and there are status issues, but I still think not enough people proportionally go into AI for various reasons (underestimating risk level, it being hard/intimidating, not liking rationalist/Bay vibes, etc.).

Gavin @ 2022-10-10T13:31 (+4)

Agree that this could be misused, just as the sensible 80k framework is misused, or as anything can be.

Some skin in the game then: me and Jan both spend most of our time on AI.

elifland @ 2022-10-10T13:39 (+4)

Thanks for clarifying. Might be worth making clear in the post (if it isn’t already, I may have missed something).

Jan_Kulveit @ 2022-10-10T13:33 (+2)

I'm a bit confused if by 'fewer people' / 'not enough people proportionally' you mean 'EAs'. In my view, while too few people (as 'humans') work on AI alignment, too large a fraction of EAs 'goes into AI'.

elifland @ 2022-10-10T13:39 (+5)

I mean EAs. I’m most confident about “talent-weighted EAs”. But probably also EAs in general.

elifland @ 2022-10-10T13:43 (+17)

In particular, I think many of the epistemically best EAs go into stuff like grant making, philosophy, general longtermist research, etc. which leaves a gap of really epistemically good people focusing full-time on AI. And I think the current epistemic situation in the AI alignment field (both technical and governance) is pretty bad in part due to this.

Linch @ 2022-10-18T18:25 (+5)

Interestingly, I have the opposite intuition, that entire subareas of EA/longtermism are kinda plodding along and not doing much because our best people keep going into AI alignment. Some of those areas are plausibly even critical for making the AI story go well.

Still, it's not clear to me whether the allocation is inaccurate, just because alignment is so important.

Technical biosecurity and maybe forecasting might be exceptions though.

MichaelPlant @ 2022-10-10T15:24 (+23)

A couple of comments.

(1), I found this post quite hard to understand - it was quite jargon-heavy.

(2) I'd have appreciated it if you'd located this in what you take to be the relevant literature. I'm not sure if you're making an argument about (A) why you might want to diversify resources across various causes, even if certain in some moral view (for instance because there are diminishing marginal returns, so you fund option X up to some point and then switch to Y) or (B) why you might want to diversify because you are morally uncertain.

(3), because of (2), I'm not sure what your objection to 'argmax' is. You say 'naive argmax' doesn't work. But isn't that a reason to do 'non-naive argmax' rather than do something else? Cf. debates where people object to consequentialism by claiming it implies you ought to kill people and harvest their organs, and the consequentialist says that's naive and not actually what consequentialism would recommend.

Fwiw, the standard approaches to moral uncertainty ('my favourite theory' and 'maximise expected choiceworthiness') provide no justification in themselves for splitting your resources. In contrast, the 'worldview diversification' approach does do this. You say that worldview diversification is ad hoc, but I think it can be justified by a non-standard approach to moral uncertainty, one I call 'internal bargaining' and have written about here.

Jan_Kulveit @ 2022-10-14T08:51 (+19)

(1) The post attempts to skirt between being completely non-technical, and being very technical. It's unclear if successfully.

(2) The technical claim is mostly that argmax(actions) is a dumb decision procedure in the real world for boundedly rational agents, if the actions are not very meta.

Softmax is one of the more principled alternative choices (see eg here)

(3) That argmax(actions) is not the optimal thing to do for boundedly rational agents is perhaps best illuminated by information-theoretic bounded rationality.

In my view the technical research useful for developing good theory of moral uncertainty for bounded agents in the real world is currently mostly located in other fields of research (ML, decision theory, AI safety, social choice theory, mechanism design, etc), so I would not expect lack of something in the moral uncertainty literature to be evidence of anything.

E.g., the internal bargaining you link is mostly simply OCB and HG applying bargaining theory to bargaining between moral theories.

We say worldview diversification is less ad hoc than the other things: worldview diversification is mostly Thompson sampling.

(4) You can often "rescue" some functional form if you really want. Love argmax()? Well, do argmax(ways how to choose actions) or something. Really attached to the label of utilitarianism, but in practice want to do something closer to virtues? Well, do utilitarianism but just on the set of actions of the type "select your next self" or similar.

jh @ 2022-10-10T16:24 (+19)

A comment and then a question. One problem I've encountered in trying to explain ideas like this to a non-technical audience is that actually the standard rationales for 'why softmax' are either a) technical or b) not convincing or even condescending about its value as a decision-making approach. Indeed, the 'Agents as probabilistic programs' page you linked to introduces softmax as "People do not always choose the normatively rational actions. The softmax agent provides a simple, analytically tractable model of sub-optimal choice." The 'Softmax demystified' page offers relatively technical reasons (smoothing is good, flickering bad) and an unsupported claim (it is good to pick lower utility options some of the time). Implicitly this makes presentations of ideas like this have the flavor of "trust us, you should use this because it works in practice, even if it has origins in what we think is irrational or that we can't justify". And, to be clear, I say that as someone who's on your side, trying to think of how to share these ideas with others. I think there is probably a link between what I've described above and Michael Plant's point (3).

So, I'm wondering if 'we can do better' in justifying softmax (and similar approaches). What is the most convincing argument you've seen?

I feel like the holy grail would be an empirical demonstration that an RL agent develops softmax like properties across a range of realistic environments. And/or a theoretical argument for why this should happen.

anonymous6 @ 2022-10-12T17:26 (+18)

One justification might be that in an online setting where you have to learn which options are best from past observations, the naive "follow the leader" approach -- exactly maximizing your action based on whatever seems best so far -- is easily exploited by an adversary.

This problem resolves itself if you make actions more likely if they've performed well, but regularize a little to smooth things out. The most common regularizer is entropy, and then as described on the "Softmax demystified" page, you basically end up recovering softmax (this is the well-known "multiplicative weight updates" algorithm).

jh @ 2022-10-20T00:44 (+8)

Yes, and is there a proof of this that someone has put together? Or at least a more formal justification?

anonymous6 @ 2022-10-20T13:02 (+1)

Here's one set of lecture notes (don't endorse that they're necessarily the best, just first I found quickly) https://lucatrevisan.github.io/40391/lecture12.pdf

Keywords to search for other sources would be "multiplicative weight updates", "follow the leader", "follow the regularized leader".

Note that this is for what's sometimes called the "experts" setting, where you get full feedback on the counterfactual actions you didn't take. But the same approach basically works with some slight modification for the "bandit" setting, where you only get to see the result of what you actually did.

MichaelStJules @ 2022-10-10T17:01 (+16)

Do we have reason to believe softmax is a better approximation to "Enlightened argmax" than just directly trying to approximate Enlightened argmax or its outputs?

NunoSempere @ 2022-10-10T14:12 (+11)

See also the multi-armed bandit <https://en.wikipedia.org/wiki/Multi-armed_bandit> problem.

Benjamin_Todd @ 2022-10-20T11:50 (+9)

Upvoted, though I was struck by this part of the appendix:

Appendix: Other reasons to diverge from argmax

In order of how much we endorse them:

- Value of information is usually incredibly high

- You don’t know the whole option set

- Moral uncertainty

- Concave altruism (i.e. Jensen’s inequality!)

- The optimiser’s curse

- Worldview diversification

- Principled risk aversion, as at GiveWell

- Strategic skulduggery

- Decrease variance of your portfolio for more impact compounding(?)

While I totally agree with the conclusion of the post (the community should have a portfolio of causes, and not invest everything in the top cause), I feel very unsure that a lot of these reasons are good ones for spreading out from the most promising cause.

Or if they do imply spreading out, they don't obviously justify the standard EA alternatives to AI Risk.

I noticed I felt like I was disagreeing with your reasons for not doing argmax throughout the post, and this list helped to explain why.

1. Starting with VOI, that assumes that you can get significant information about how good a cause is by having people work on it. In practice, a ton of uncertainty is about scale and neglectedness, and having people work on the cause doesn't tell you much about that. Global priorities research usually seems more useful.

VOI would also imply working on causes that might be top, but that we're very uncertain about. So, for example, that probably wouldn't imply that longtermist-interested people should work on global health or factory farming, but rather spread out over lots of weirder small causes, like those listed here: https://80000hours.org/problem-profiles/#less-developed-areas

2. "You don't know the whole option set" sounds like a similar issue to VOI. It would imply trying to go and explore totally new areas, rather than working on familiar EA priorities.

3. Many approaches to moral uncertainty suggest that you factor in uncertainty in your choice of values, but then you just choose the best option with respect to those values. It doesn't obviously suggest supporting multiple causes.

4. Concave altruism. Personally I think there are increasing returns on the level of orgs, but I don't think there are significant increasing returns at the level of cause areas. (And that post is more about exploring the implications of concave altruism rather than making the case it actually applies to EA cause selection.)

5. Optimizer's curse. This seems like a reason to think your best guess isn't as good as you think, rather than to support multiple causes.

6. Worldview diversification. This isn't really an independent reason to spread out – it's just the name of Open Phil's approach to spreading out (which they believe for other reasons).

7. Risk aversion. I don't think we should be risk averse about utility, so agree with your low ranking of it.

8. Strategic skullduggery. This actually seems like one of the clearest reasons to spread out.

9. Decreased variance. I agree with you this is probably not a big factor.

You didn't add diminishing returns to your list, though I think you'd rank it near the top. I'd also agree it's a factor, though I also think it's often oversold. E.g. if there are short-term bottlenecks in AI that create diminishing returns, it's likely the best response is to invest in career capital and wait for the bottlenecks to disappear, rather than to switch into a totally different cause. You also need big increases in resources to get enough diminishing returns to change the cause ranking e.g. if you think AI safety is 10x as effective as pandemics at the margin, you might need to see the AI safety community roughly 10x in size relative to biosecurity before they'd equalise.
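To make the 10x arithmetic concrete, here is a minimal sketch. It assumes logarithmic returns (impact = scale * ln(resources)), which is an illustrative assumption and not something argued for above; the numbers and the `marginal_return` helper are hypothetical.

```python
def marginal_return(scale: float, resources: float) -> float:
    """Marginal impact under assumed log returns: d/dx [scale * ln(x)] = scale / x."""
    return scale / resources

# Hypothetical numbers, chosen so AI safety starts out 10x as effective at the margin.
ai_scale, ai_resources = 10.0, 1.0
bio_scale, bio_resources = 1.0, 1.0

# Ratio of marginal returns today.
ratio_now = marginal_return(ai_scale, ai_resources) / marginal_return(bio_scale, bio_resources)

# Ratio after AI safety grows 10x while biosecurity stays the same size.
ratio_after = marginal_return(ai_scale, 10 * ai_resources) / marginal_return(bio_scale, bio_resources)

print(ratio_now, ratio_after)
```

Under this assumed curve the margins only equalise once one field is 10x larger relative to the other. With more sharply diminishing returns the required growth would be smaller, and with flatter returns it would be larger.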

I tried to summarise what I think the good reasons for spreading out are here.

For a longtermist, I think those considerations would suggest a picture like:

- 50% into the top 1-3 issues

- 20% into the next couple of issues

- 20% into exploring a wide range of issues that might be top

- 10% into other popular issues

If I had to list a single biggest driver, it would be personal fit / idiosyncratic opportunities, which can easily produce orders of magnitude differences in what different people should focus on.

The question of how to factor in neartermism (or other alternatives to AI-focused longtermism) seems harder. It could easily imply still betting everything on AI, though putting some % of resources into neartermism in proportion to your credence in it also seems sensible.

Some more here about how worldview diversification can imply a wide range of allocations depending on how you apply it: https://twitter.com/ben_j_todd/status/1528409711170699264

Gavin @ 2022-10-24T16:49 (+2)

3. Tarsney suggests one other plausible reason moral uncertainty is relevant: nonunique solutions leaving some choices undetermined. But I'm not clear on this.

Gavin @ 2022-10-24T16:44 (+2)

Excellent comment, thanks!

Yes, wasn't trying to endorse all of those (and should have put numbers on their dodginess).

1. Interesting. I disagree for now but would love to see what persuaded you of this. Fully agree that softmax implies long shots.

2. Yes, new causes and also new interventions within causes.

3. Yes, I really should have expanded this, but was lazy / didn't want to disturb the pleasant brevity. It's only "moral" uncertainty about how much risk aversion you should have that changes anything. (à la this.)

4. Agree.

5. Agree.

6. I'm using (possibly misusing) WD to mean something more specific like "given cause A, what is best to do?; what about under cause B? what about under discount x?..."

7. Now I'm confused about whether 3=7.

8. Yeah it's effective in the short run, but I would guess that the loss of integrity hurts us in the long run.

Will edit in your suggestions, thanks again.

Gavin @ 2022-10-22T14:09 (+7)

Looking back two weeks later, this post really needs

- to discuss the cost of prioritisation (we use softmax because we are boundedly rational) and the Price of Anarchy;

- to have separate sections for individual prioritisation and collective prioritisation;

- to at least mention bandits and the Gittins index, which is optimal where softmax is highly principled suboptimal cope.

MichaelStJules @ 2022-10-22T15:52 (+2)

FWIW, I didn't get the impression there's a very principled justification for softmax in this post, if that's what you intended by "highly principled". That it might work better than naive argmax in practice on some counts isn't really enough, and there wasn't really much comparison to enlightened argmax, which is optimal in theory.

I'd probably require being provably (approximately) optimal for a principled justification. Quickly checking bandits and the Gittins index on Wikipedia, bandits are general problems and the Gittins index is just the value of the aggregate reward. I guess you could say "maximize Gittins index" (use the Gittins index policy), but that's, imo, just a formal characterization of what enlightened argmax should be under certain problem assumptions, and doesn't provide much useful guidance on its own. Like what procedure should we follow to maximize the Gittins index? Is it just calculate really hard?

Also, according to the Wikipedia page, the Gittins index policy is optimal if the projects are independent, but not necessarily if they aren't, and the problem is NP-hard in general if they can be dependent.

Gavin @ 2022-10-24T16:34 (+2)

Not in this post, we just link to this one. By "principled" I just mean "not arbitrary, has a nice short derivation starting with something fundamental (like the entropy)".

Yeah, the Gittins stuff would be pitched at a similar level of handwaving.

Buhl @ 2022-10-13T00:01 (+5)

Curious what people think of the argument that, given that people in the EA community have different rankings of the top causes, a close-to-optimal community outcome could be reached if individuals argmax using their own ranking?

(At least assuming that the number of people who rank a certain cause as the top one is proportional to how likely it is to be the top one.)
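A toy version of this argument, as a sketch only: the population, the cause names, and the `individual_argmax` helper are all hypothetical, and the population is constructed so that the share of people ranking a cause top is the assumed credence that it is the top cause.

```python
from collections import Counter

# Hypothetical community of ten people, each with their own scores for three causes.
people = (
    [{"A": 3, "B": 2, "C": 1}] * 5    # five people rank A top
    + [{"A": 2, "B": 3, "C": 1}] * 3  # three people rank B top
    + [{"A": 1, "B": 2, "C": 3}] * 2  # two people rank C top
)

def individual_argmax(scores: dict) -> str:
    """Each person goes all-in on the cause they personally rank highest."""
    return max(scores, key=scores.get)

# Count how many people end up working on each cause.
counts = Counter(individual_argmax(person) for person in people)

# Community-level allocation that emerges from purely individual argmax.
community_shares = {cause: counts[cause] / len(people) for cause in sorted(counts)}

print(community_shares)
```

Every individual is fully "maximizy", yet the community portfolio is spread in proportion to how many people back each cause. Whether that proportion tracks the real probability of each cause being best is exactly the assumption flagged above.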

Lakin @ 2022-10-12T22:24 (+5)

Animals do this intuitively:

Pigeons were presented with two buttons in a Skinner box, each of which led to varying rates of food reward. The pigeons tended to peck the button that yielded the greater food reward more often than the other button, and the ratio of their rates to the two buttons matched the ratio of their rates of reward on the two buttons.

Gavin @ 2022-10-10T12:55 (+5)

The above makes EA's huge investment in research seem like a better bet: "do more research" is a sort of exploration. Arguably we don't do enough active exploration (learning by doing), but we don't want less research.

MichaelStJules @ 2022-10-10T14:52 (+4)

Are there any principled probability assignments we could use? E.g., the probability that this would be my top choice after N further hours of investigation into it and alternatives (including realistically collecting data or performing experiments), maybe allowing N to be unrealistic?

From my understanding, softmax is formally sensitive to parametrizations, so the specific outputs seem pretty unprincipled unless you actually have feedback and are doing some kind of optimization like minimizing some kind of softmax loss.
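A small sketch of that sensitivity, using a standard softmax with a temperature parameter. The scores are made-up numbers, and the 10x rescaling stands in for an arbitrary change of units.

```python
import math

def softmax(values, temperature=1.0):
    """Standard softmax; subtracting the max keeps the exponentials numerically stable."""
    scaled = [v / temperature for v in values]
    top = max(scaled)
    exps = [math.exp(s - top) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical scores for three options.
scores = [1.0, 2.0, 3.0]

# Same options, same ranking, but measured in units ten times smaller.
rescaled = [10.0 * s for s in scores]

shares = softmax(scores)
shares_rescaled = softmax(rescaled)

print(shares)           # top option gets roughly two-thirds
print(shares_rescaled)  # top option gets almost everything
```

The ranking is identical in both cases, but the allocation goes from fairly spread out to nearly all-in. So the output shares depend on the choice of units (equivalently, the temperature), which has to be justified separately.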

MichaelStJules @ 2022-10-10T15:47 (+2)

On the other hand, I can see even using the credences like I proposed to be way too upside-focused, i.e. focused on picking the best interventions, but little concern for avoiding the worst (badly net negative in EV) interventions. Consider an intervention that has a 55% chance of being the best and vastly net positive in expectation after further investigation, but a 45% chance of being the worst and vastly net negative in expectation (of similar magnitude), and your current overall belief is that it's vastly net positive and highest in EV. It's plausible some high-leverage interventions are sensitive in this way, because they involve tradeoffs for existential risks (tradeoffs between different x-risks, but also within x-risks, like differential progress), or, in the near-term, because of wild animal effects dominating and having uncertain sign. Would we really want to put the largest share of resources, let alone most resources, into such an intervention?

Alternatively, we may have multiple choices, among which three, A, B and C are such that, for some c>0, after further investigation, we expect that:

- A is 40% likely to be the best, with EV=c, and 35% likely to be the worst, with EV=-c, and the rest of the time EV=0.

- B is 35% likely to be the best, with EV=c and 30% likely to be the worst, with EV=-c, and the rest of the time EV=0.

- C is 5% likely to be the best, with EV=c, and otherwise has EV=0 and has probability 0 of being the worst.

How should we weight our resources between these three (ignoring other options)? Currently, they all have the same overall EV (=5%*c). What if we increase the probability that A is best slightly, without changing anything else? Or, what if we increase the probability that C is best slightly, without changing anything else?
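The arithmetic for this example can be checked with a short sketch. It sets c = 1 for convenience, and for the "increase slightly" question it assumes the extra probability mass comes out of the EV=0 case; both are assumptions made only for illustration.

```python
def overall_ev(p_best: float, p_worst: float, c: float = 1.0) -> float:
    """Overall EV when the option is worth +c if best, -c if worst, and 0 otherwise."""
    return p_best * c - p_worst * c

# (probability of being best, probability of being worst) for each option.
options = {"A": (0.40, 0.35), "B": (0.35, 0.30), "C": (0.05, 0.00)}

evs = {name: overall_ev(p_best, p_worst) for name, (p_best, p_worst) in options.items()}

# Nudge A's chance of being best up by one percentage point (taken from its EV=0 mass).
bumped = dict(options, A=(0.41, 0.35))
bumped_evs = {name: overall_ev(p_best, p_worst) for name, (p_best, p_worst) in bumped.items()}
argmax_choice = max(bumped_evs, key=bumped_evs.get)

print(evs)
print(argmax_choice)
```

All three start at 0.05c, and the small nudge is enough for a pure EV argmax to go all-in on A, even though A still has a 35% chance of being the worst option.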

Gavin @ 2022-10-29T10:56 (+2)

Ord's undergrad thesis is a tight argument in favour of enlightened argmax: search over decision procedures and motivations and pick the best of those instead of acts or rules.

jh @ 2022-10-30T11:43 (+8)

Interesting thesis! Though, it's his doctoral thesis, not from one of his bachelor's degrees, right?

Gavin @ 2022-10-31T10:48 (+2)

Yep ta, even says so on page 1.

Benjamin_Todd @ 2022-10-20T11:54 (+2)

Also maybe of interest, I think the current EA portfolio is actually allocated pretty well in line with what this heuristic would imply:

I think the bigger issue might be that it's currently demoralising not to work on AI or meta. So I appreciate this post as an exploration of ways to make it more intuitive that everyone shouldn't work on AI.

Benjamin_Todd @ 2022-10-20T11:12 (+2)

Also see Brian Christian briefly suggesting a cause allocation rule a bit like this towards the end of 80k's interview with him.

We were discussing solutions to the explore-exploit problem, and one is that you allocate resources in proportion to your credence the option is best.
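One way to sketch that rule: estimate the credence that each option is best by sampling from uncertain estimates, then allocate in proportion. The normal distributions, the option names, and the `credence_best` helper are all hypothetical; this is a rough illustration, not a method taken from the interview.

```python
import random

def credence_best(estimates, n_samples=20_000, seed=0):
    """Monte Carlo estimate of the probability that each option has the highest value.

    `estimates` maps option name -> (mean, standard deviation) of an assumed
    normal distribution over that option's value.
    """
    rng = random.Random(seed)
    wins = {name: 0 for name in estimates}
    for _ in range(n_samples):
        # Draw one plausible value per option and record which one comes out on top.
        draws = {name: rng.gauss(mu, sigma) for name, (mu, sigma) in estimates.items()}
        wins[max(draws, key=draws.get)] += 1
    return {name: count / n_samples for name, count in wins.items()}

# Hypothetical options: X looks best, Y a bit worse, Z worst on average but very uncertain.
estimates = {"X": (1.0, 1.0), "Y": (0.5, 1.0), "Z": (0.0, 2.0)}

# Allocate resources in proportion to the credence that each option is best.
allocation = credence_best(estimates)

print(allocation)
```

Note that the high-variance long shot Z still gets a meaningful share despite the lowest mean, which is the sense in which this kind of rule funds exploration.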

Gavin @ 2022-10-11T14:56 (+2)

Oh full disclosure I guess: I am a well-known shill for argmin.

NunoSempere @ 2022-10-15T22:50 (+2)

Booo <3

niplav @ 2022-10-10T13:07 (+1)

In practice the thing that the EA community is doing is much closer to quantilization (video explanation) than maximization anyway, and that's okay. The goal could be an ever-increasing .

Gavin @ 2022-10-10T13:17 (+3)

Mostly true, but a string of posts about the risks attests to there being some unbounded optimisers. (Or at least that we are at risk of having some.)