AISN #16: White House Secures Voluntary Commitments from Leading AI Labs and Lessons from Oppenheimer

By Center for AI Safety, Dan H, Corin Katzke @ 2023-07-25T16:45 (+7)

This is a linkpost to https://newsletter.safe.ai/p/ai-safety-newsletter-16

Welcome to the AI Safety Newsletter by the Center for AI Safety. We discuss developments in AI and AI safety. No technical background required.

Subscribe here to receive future versions.

White House Unveils Voluntary Commitments to AI Safety from Leading AI Labs

Last Friday, the White House announced a series of voluntary commitments from seven of the world's premier AI labs. Amazon, Anthropic, Google, Inflection, Meta, Microsoft, and OpenAI pledged to uphold these commitments, which are non-binding and pertain only to forthcoming "frontier models" superior to currently available AI systems. The White House also notes that the Biden-Harris Administration is developing an executive order alongside these voluntary commitments.

The commitments are timely and technically well-informed, demonstrating the ability of federal policymakers to respond capably and quickly to AI risks. The Center for AI Safety supports these commitments as a precedent for cooperation on AI safety and legally binding legislation.

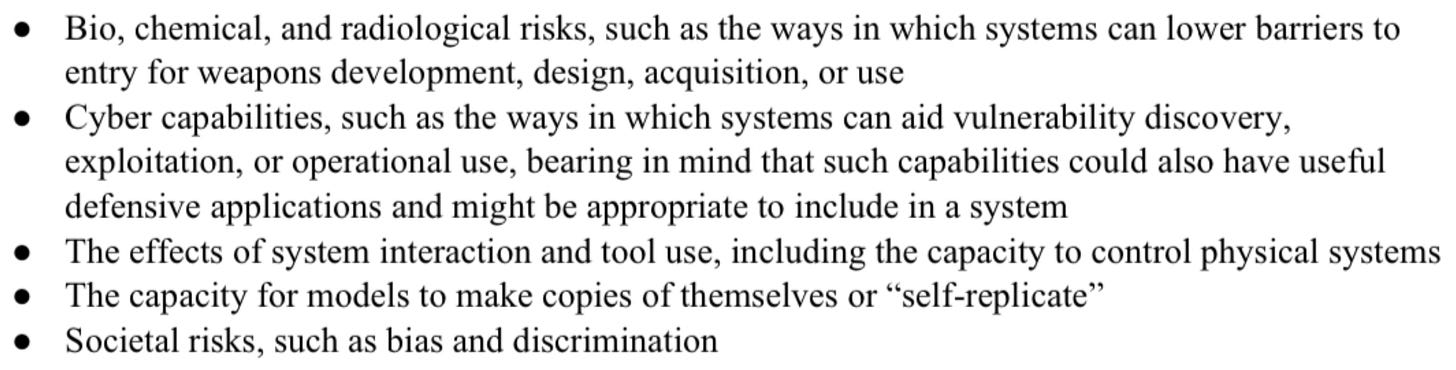

Commitments to Red Teaming and Information Sharing. AI systems often develop unexpected capabilities during training, so it’s important to screen models for potentially harmful abilities. The companies promised to “red team” their models by searching for ways the models could cause harm, particularly in areas of potential catastrophe including biological weapons acquisition, offensive cyberattacks, and self-replication. External red teamers will also be offered a chance to find vulnerabilities in these frontier AI systems.

The companies have additionally agreed to share information about best practices in AI safety with governments and each other. Public reports on system capabilities and risks are also part of this pledge, though companies have not agreed to the disclosure of model training data, which likely contains large volumes of copyrighted content.

Cybersecurity to protect AI models, if companies choose. The AI labs also reiterated their dedication to cybersecurity. This helps prevent potent AI models from being accessed and misused by malicious actors. By reducing the likelihood that someone else will steal their cutting edge secrets, cybersecurity also alleviates the pressure of the AI race and gives companies more time to evaluate and enhance the safety features of their models.

Notably, while most labs aim to prevent their models from being used for malicious purposes, companies are not prohibited from sharing their models publicly. Meta, after accidentally leaking their first LLaMA model, has published their LLaMA 2 model online for nearly anyone to use. Meta published a report about LLaMA 2’s safety features, but only a week after its release, someone has published an “uncensored” version of the model.

Identifying AI outputs in the wild. Companies also committed to watermarking AI outputs so they can be differentiated from text written by humans and real world images and videos. Without this crucial ability to distinguish AI outputs from real life, society would be vulnerable to scams, counterfeit people, personalized persuasion, and propaganda campaigns.

Technical researchers had speculated that watermarking might be unsolvable because AI systems are trained to mimic real world data as closely as possible. But companies already keep databases of most of their AI outputs, which a recent paper suggests can be used to verify AI outputs. Consumers would be able to submit text, audio, or video to a company’s website and find out if it had been generated by the company’s AI models.

Goals for future AI policy work. While the voluntary commitments offer a starting point, they cannot replace legally binding obligations to guide AI development for public benefit. OpenAI’s announcement of the commitments says, “Companies intend these voluntary commitments to remain in effect until regulations covering substantially the same issues come into force.”

A more ambitious proposal comes from a new paper proposing a three pronged approach to AI governance. First, safety standards must be developed by experts external to AI labs, similar to safety standards in healthcare, nuclear engineering, and aviation. Governments need visibility on the most powerful models being trained by AI labs, facilitated by registration of advanced models and protections for whistleblowers. Equipped with safety standards and a clear view of advanced AI systems, the government can ensure the standards are upheld, first by securing voluntary commitments from AI labs and later with binding laws and enforcement.

Other future commitments could include accepting legal liability for AI-induced damages, sharing training data of large pretrained models, and funding research on AI safety topics such as robustness, monitoring, and control. Notably, while companies promised to assess the risks posed by their AI systems, they did not commit to any particular response to those risks, nor promise not to deploy models that cross a “red line” of dangerous capabilities.

Lessons from Oppenheimer

Last week, Oppenheimer opened in theaters. The film’s director, Christopher Nolan, said in an interview that the film parallels the rise and risks of AI. AI, he said, is having its “Oppenheimer moment.” There are indeed many parallels between the development of nuclear weapons and AI. In this story, we explore one: the irrational dismissal of existential risk.

The ultimate catastrophe. Before Los Alamos, Oppenheimer met with fellow physicist Edward Teller to discuss the end of the world. Teller had begun to worry that a nuclear detonation might fuse atmospheric nitrogen in a catastrophic chain-reaction that would raze the surface of the earth. Disturbed, they reported the possibility to Arthur Compton, an early leader of the Manhattan Project. Compton later recalled that it would have been “the ultimate catastrophe."

After some initial calculations, another physicist on the project, Hans Bethe, concluded that such a catastrophe was impossible. The project later commissioned a full report on the possibility, which came to the same conclusion. Still, the fear of catastrophe lingered on the eve of the Trinity test. Just before the test, Enrico Fermi jokingly took bets on the end of the world.

Photo of the Trinity test, which some feared could cause a global catastrophe.

Objective and subjective probability. In Nolan’s film, Oppenheimer tells the military director of the Manhattan Project, Leslie Groves, that the probability of a catastrophe during the Trinity test was “near zero.” The film might be drawing from an interview with Compton, who recalled that the probability was three parts in a million. Bethe was less equivocal. He writes that “there was never a possibility of causing a thermonuclear chain reaction in the atmosphere,” and that “[i]gnition is not a matter of probabilities; it is simply impossible.”

Bethe is right in one sense: the report did not give any probabilities. It concluded that catastrophe was objectively impossible. Where Bethe errs is in conflating objective and subjective probability. The calculations in the report implied certainty — but Bethe should have been uncertain about the calculations themselves, and the assumptions those calculations relied on.

The mathematician R.W. Hamming recalls that at least one of the report’s authors was uncertain leading up to the Trinity test:

Shortly before the first field test (you realize that no small scale experiment can be done — either you have a critical mass or you do not), a man asked me to check some arithmetic he had done, and I agreed, thinking to fob it off on some subordinate. When I asked what it was, he said, "It is the probability that the test bomb will ignite the whole atmosphere." I decided I would check it myself! The next day when he came for the answers I remarked to him, "The arithmetic was apparently correct but I do not know about the formulas for the capture cross sections for oxygen and nitrogen — after all, there could be no experiments at the needed energy levels." He replied, like a physicist talking to a mathematician, that he wanted me to check the arithmetic not the physics, and left. I said to myself, "What have you done, Hamming, you are involved in risking all of life that is known in the Universe, and you do not know much of an essential part?"

Doubt lingered in the final moments preceding the Trinity test about the possibility of a catastrophic chain reaction. Hans Bethe’s calculations may have shown that the probability of catastrophe was zero, but it remained possible that Bethe had miscalculated.

Scientific and mathematical claims that are widely accepted can still be false. For example, a peer-reviewed mathematical proof of the famous four color problem was accepted for years until, finally, a flaw was uncovered. While Bethe’s model was confident, he shouldn’t have placed his full faith in a model without the test of time.

The Castle Bravo Disaster. Indeed, the probability of a theoretical mistake may have been quite high. Trinity didn’t end in catastrophe, but another initial test — Castle Bravo — did. Castle Bravo was the first test of a dry-fuel hydrogen bomb. Because of an unexpected nuclear interaction, the payload of the bomb was three times greater than predicted. Its fallout reached the inhabitants of the Marshall Islands, as well as a nearby Japanese fishing vessel, leading to dozens of cases of acute radiation sickness.

There is no reason to think that the authors of the atmospheric ignition report couldn’t have made a similar mistake. Indeed, one of the authors, Edward Teller, designed the bomb used in the Castle Bravo test. Trinity didn’t end in catastrophe, but it could have.

Lessons for AI safety. Despite a firm grasp of the nuclear reaction principles at the time of the Trinity test, there were still a handful of experts voicing concerns about the potentially disastrous outcomes. Those who conducted the test accepted a risk that, if their calculations were wrong, the atmosphere would be ignited and all of humanity thrown into catastrophe. While the test was ultimately successful, it would have been prudent to consider these concerns more seriously before the Trinity test at Los Alamos.

Artificial intelligence, on the other hand, is understood far less than nuclear reactions were. Moreover, a substantial number of experts have publicly warned about the existential risk emanating from AI. It’s crucial to ensure there’s consensus that risks are negligible or zero and that this consensus passes the test of time before taking actions that could cause extinction.

Links

- To help AI companies assess the risks of their models, a new paper reviews common risk assessment techniques in other fields.

- A detailed explanation of how Chinese AI policy gets made.

- To verify the data that an AI was trained on, a new paper proposes a solution involving checkpoints of the model at different points during the training process.

- WormGPT is a new AI tool for launching offensive cyber attacks.

- Prominent technologists are pushing for no regulation of AI under the banner of a Darwinian ideology.

- A senate subcommittee is having a hearing on AI regulation. All three speakers (Russell, Amodei, Bengio) have signed the statement on AI risk.

- The UN Security Council had its first meeting on AI risk. A number of representatives, including the UN Secretary General, explicitly mentioned AI x-risk.

See also: CAIS website, CAIS twitter, A technical safety research newsletter, and An Overview of Catastrophic AI Risks

Subscribe here to receive future versions.