Graphical Representations of Paul Christiano's Doom Model

By Nathan Young @ 2023-05-07T13:03 (+48)

Paul gives some numbers on AI doom (text below). Here they are in graphical form, which I find easier to understand. Please correct me if I've got anything wrong.

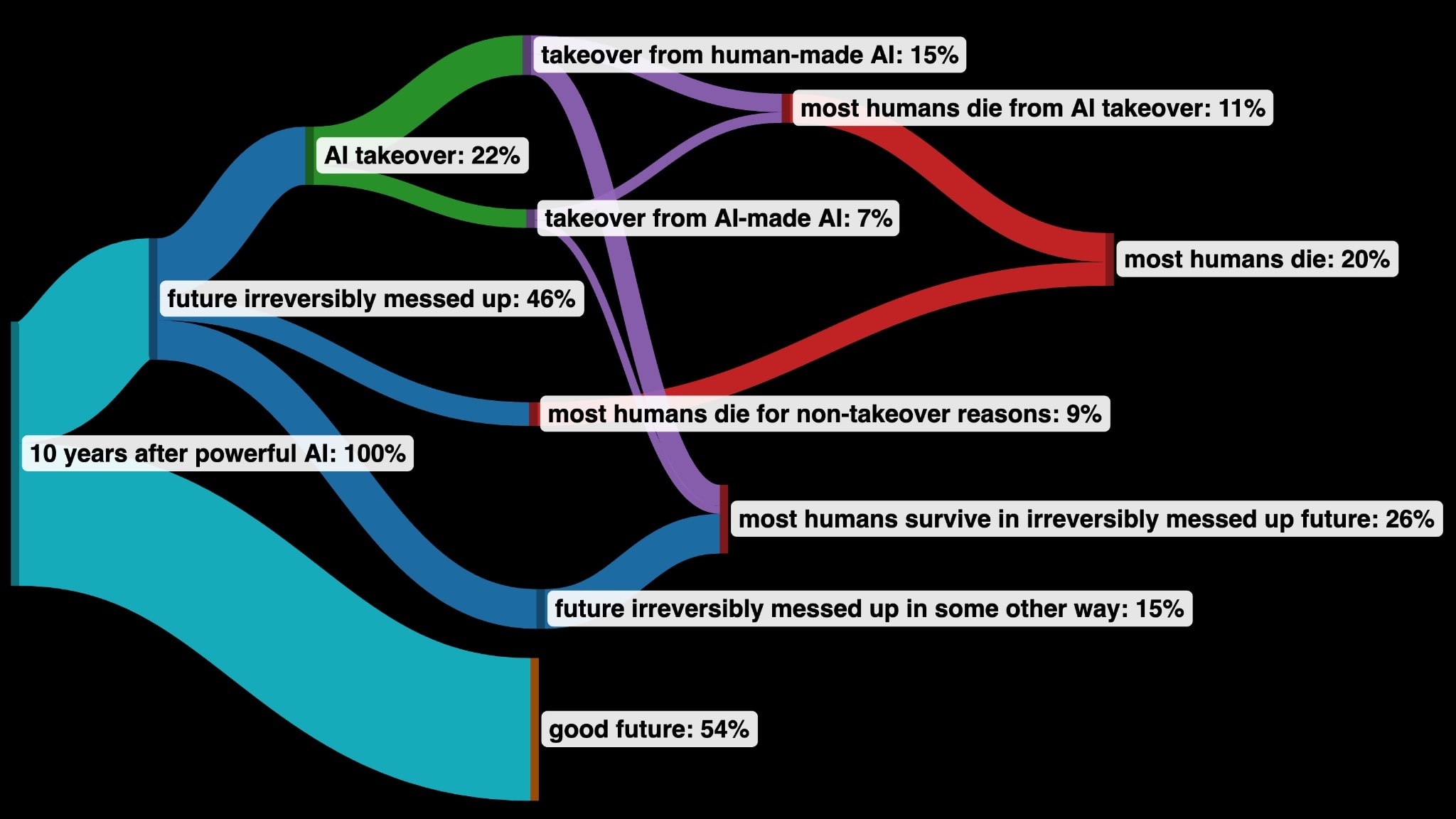

Michael Trazzi's Probability Flow Diagram

I really like this one. It makes it easy to read how Paul thinks future worlds are distributed. I guess the specific flows are guesses based on Paul's model, so they might be wrong, but I think that's fine.

Link to tweet: https://twitter.com/MichaelTrazzi/status/1651990282282631168/photo/1

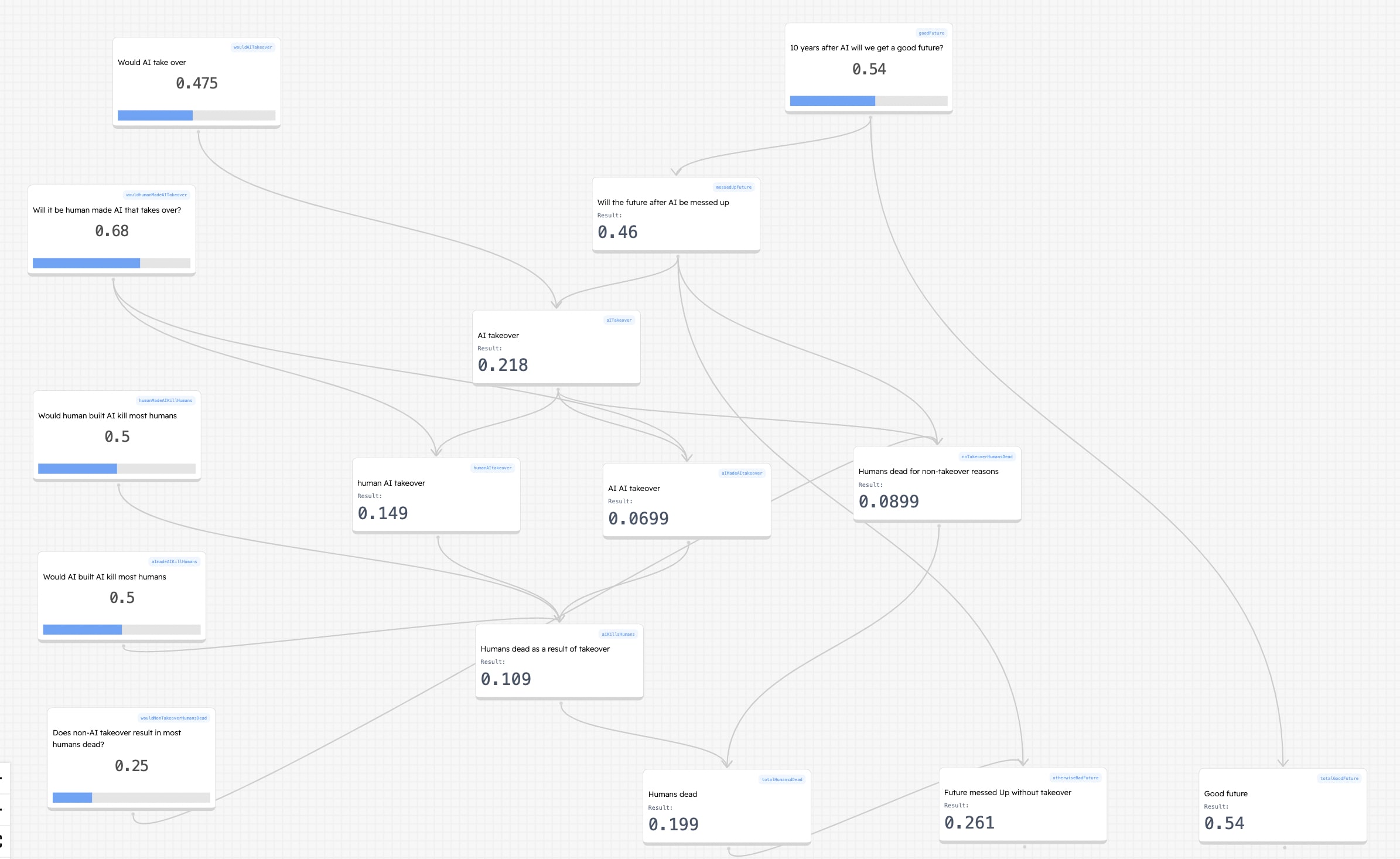

My probability model version

This is messier, but interactive. You get to see what chance Paul puts on each specific breakpoint. Do you disagree with any?

Link: https://bit.ly/AI-model-Chrisitaino

Paul's model in text

Probability of an AI takeover: 22%

- Probability that humans build AI systems that take over: 15% (Including anything that happens before human cognitive labor is basically obsolete.)

- Probability that the AI we build doesn’t take over, but that it builds even smarter AI and there is a takeover some day further down the line: 7%

Probability that most humans die within 10 years of building powerful AI (powerful enough to make human labor obsolete): 20%

- Probability that most humans die because of an AI takeover: 11%

- Probability that most humans die for non-takeover reasons (e.g. more destructive war or terrorism) either as a direct consequence of building AI or during a period of rapid change shortly thereafter: 9%

Probability that humanity has somehow irreversibly messed up our future within 10 years of building powerful AI: 46%

- Probability of AI takeover: 22% (see above)

- Additional extinction probability: 9% (see above)

- Probability of messing it up in some other way during a period of accelerated technological change (e.g. driving ourselves crazy, creating a permanent dystopia, making unwise commitments…): 15%
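The sub-items above are meant to add up to the headline figures, which can be checked with a quick sketch. The percentages are copied from Paul's text; the key names are just illustrative labels, and the final "left over" figure assumes the three components of the 46% don't overlap.

```python
# Percentages copied from Paul's text; the key names are illustrative labels.
takeover = {
    "humans_build_ai_that_takes_over": 15,
    "later_smarter_ai_takes_over": 7,
}
most_humans_die = {
    "because_of_ai_takeover": 11,
    "non_takeover_reasons": 9,
}
messed_up_future = {
    "ai_takeover": sum(takeover.values()),
    "additional_extinction": 9,
    "other_mistakes": 15,
}

totals = {
    "ai_takeover": sum(takeover.values()),
    "most_humans_die": sum(most_humans_die.values()),
    "irreversibly_messed_up": sum(messed_up_future.values()),
}
# Assumption: the components do not overlap, so the remainder is simply 100 minus the total.
totals["not_irreversibly_messed_up"] = 100 - totals["irreversibly_messed_up"]

for name, pct in totals.items():
    print(f"{name}: {pct}%")
```

The three sums come out to 22%, 20% and 46%, matching the headline numbers, which leaves 54% of the probability outside the "irreversibly messed up" bucket.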

yefreitor @ 2023-05-08T04:11 (+4)

> Do you disagree with any?

Treating "good future" and "irreversibly messed up future" as exhaustive seems clearly incorrect to me.

Consider for instance the risk of an AI-stabilized personalist dictatorship, in which literally all political power is concentrated in a single immortal human being.[1] Clearly things are not going great at this point. But whether they're irreversibly bad hinges on a lot of questions about human psychology - about the psychology of one particular human, in fact - that we don't have answers to.

- There's some evidence Big-5 Agreeableness increases slowly over time. Would the trend hold out to thousands of years?

- How long-term are long-term memories (augmented to whatever degree human mental architecture permits)?

- Are value differences between humans really insurmountable or merely very very very hard to resolve? Maybe spending ten thousand years with the classics really would cultivate virtue.

- Are normal human minds even stable in the very long run? Maybe we all wirehead ourselves eventually, given the chance.

So it seems to me that if we're not ruling out permanent dystopia we shouldn't rule out "merely" very long lived dystopia either.

This is clearly not a "good future", in the sense that the right response to "100% chance of a good future" is to rush towards it as fast as possible, and the right response to "10% chance of utopia 'till the stars go cold, 90% chance of spending a thousand years beneath Cyber-Caligula's sandals followed by rolling the dice again"[2] is to slow down and see if you can improve the odds a bit. But it doesn't belong in the "irreversibly messed up" bin either: even after Cyber-Caligula takes over, the long-run future is still almost certainly utopian.
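One way to read the dice-rolling example is as a repeated independent trial. A minimal sketch, assuming (this is not stated in the comment) that every re-roll has the same 10%/90% odds and that each dystopian spell lasts exactly a thousand years; the function names are made up for illustration.

```python
P_UTOPIA = 0.10         # chance of utopia on each roll (from the example)
DYSTOPIA_YEARS = 1_000  # length of one dystopian spell (from the example)


def p_still_dystopian(rolls: int, p_utopia: float = P_UTOPIA) -> float:
    """Probability that none of the first `rolls` rolls came up utopia."""
    return (1 - p_utopia) ** rolls


def expected_dystopia_years(p_utopia: float = P_UTOPIA,
                            years_per_spell: int = DYSTOPIA_YEARS) -> float:
    """Expected years spent in dystopia before the first utopian roll.

    The number of failed rolls before the first success is geometric,
    with mean (1 - p) / p.
    """
    expected_failed_rolls = (1 - p_utopia) / p_utopia
    return expected_failed_rolls * years_per_spell


for rolls in (1, 10, 50):
    print(rolls, p_still_dystopian(rolls))
print(expected_dystopia_years())
```

Under these assumptions the chance of still being stuck shrinks geometrically with each roll, which is the sense in which the long-run future is "almost certainly utopian", while the expected wait is on the order of nine thousand years, which is why slowing down to improve the odds still looks worthwhile.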

[1] Personally I think this is far less likely than AI-stabilized oligarchy (which, if not exactly a good future, is at least much less likely to go off into rotating-golden-statue-land) but my impression is that it's the prototypical "irreversible dystopia" for most people.

[2] Obviously our situation is much worse than this.

rime @ 2023-05-08T00:29 (+1)

Prime work. Super quick read and I gained some value out of it. Thanks!