Things CEA is not doing

By MaxDalton @ 2021-01-15T09:33 (+92)

This is a linkpost to https://www.centreforeffectivealtruism.org/blog/things-cea-is-not-doing/

There are many things in EA community-building that the Centre for Effective Altruism is not doing. We think some of these things could be impactful if well-executed, even though we don't have the resources to take them on. Therefore, we want to let people know what we're not doing, so that they have a better sense of how neglected those areas are.

To see more about what we are doing, look at our plans for 2021, and our summary of our long-term focus.

Things we're not actively focused on

We are not actively focusing on:

- Reaching new mid- or late-career professionals

- Reaching or advising high-net-worth donors

- Fundraising in general

- Cause-specific work (such as community building specifically for effective animal advocacy, AI safety, biosecurity, etc.)

- Career advising

- Research, except about the EA community

- Content creation

- Donor coordination

- Supporting other organizations

- Supporting promising individuals

By “not actively focusing on”, I mean that some of our work will occasionally touch on or facilitate some of the above (e.g. if groups run career fellowships, or city groups do outreach to mid-career professionals), but our main efforts will be spent on other goals.

One caveat to the below is that our community health team sometimes advises people who are working in the areas below (but doesn’t do the object-level work itself). For example, they will sometimes advise on projects related to policy (even though none of them work on policy).

Reaching new mid- or late-career professionals

As mentioned in our 2021 plans, we intend to focus our efforts to bring new people into the community on students (especially at top universities) and young professionals. We intend to work to retain mid- and late-career professionals who are already highly engaged in EA, but we do not plan to work to recruit more mid- or late-career people.

Reaching or advising high-net-worth donors

We haven't done this for a while, but other EA-aligned organizations are working in this area, including Longview Philanthropy and Effective Giving.

Fundraising in general

Not focusing on fundraising is a change for us; we used to run EA Funds and Giving What We Can. These projects have now spun out of CEA, and we hope that this will give both these projects and CEA a clearer focus.

Cause-specific work (such as community building specifically for effective animal advocacy, AI safety, biosecurity, etc.)

As part of our work with local groups, we may work with group leaders to support cause-specific fellowships, workshops, or 1:1 content. However, we do not have any other plans in this area.

Career advising

As part of our work with local groups, we may work with group leaders to support career fellowships, workshops, or 1:1 content. And at our events, we try to match people with mentors who can advise them on their careers. We do not have any other plans in this area.

80,000 Hours clarified what they are and aren’t doing in this post.

Research, except about the EA community

We haven't had full-time research staff since ~2017, although we did support the CEA summer research fellowship in 2018 and 2019. We’ll continue to work with Rethink Priorities on the EA Survey, and to do other research that informs our own work.

We’ll also continue to run the EA Forum and EA Global, which are venues where researchers can share and discuss their ideas. We believe that supporting these discussions ties in with our goals of recruiting students and young professionals and keeping existing community members up to speed with EA ideas.

Content creation

We curate content when doing so supports productive discussion spaces (e.g. inviting speakers to events, developing curricula for fellowships run by groups). We occasionally write content on community health issues. We also try to incentivize the creation of quality content by giving speakers a platform and giving out prizes on the EA Forum. Aside from this, we don’t have plans to create more content.

Donor coordination

As mentioned above, we have now spun out EA Funds, which has some products (e.g. the Funds and the donor lottery) that help with donor coordination.

Supporting other organizations

We do some work to support organizations as they work through internal conflicts and HR issues. We also provide operational support to 80,000 Hours, Forethought Foundation, EA Funds, Giving What We Can, and a longtermist project incubator. We also occasionally share ideas and resources — related to culture, epistemics, and diversity, equity, and inclusion — with other organizations. Other than this, we don’t plan to work in this space.

Supporting promising individuals

The groups team provides support and advice to group organizers, and the community health team provides support to people who experience problems within the community. We also run several programs for matching people up with mentors (e.g. as part of EA Global).

We do not plan to financially support individuals. Individuals can apply for financial support from EA Funds and other sources.

Conclusion

A theme in the above is that I view CEA as one organization helping to grow and support the EA community, not the sole organization which determines the community’s future. I think that this isn’t a real change: the EA community’s development was always influenced by a coalition of organizations. But I do think that CEA has sometimes aimed to determine the community’s future, or represented itself as doing so. I think this was often a mistake.

Starting a project in one of these areas

CEA has limited resources. In order to focus on some things, we need to deprioritize others.

We hope the information above encourages others to start projects in these areas. However, there are two things to consider before starting such a project.

First, we think that additional high-quality work in the areas listed above will probably be valuable, but we haven’t carefully considered this, and some projects within an area are probably much more promising than others. We recommend carefully considering which areas and approaches to pursue.

Second, we think there’s potential to cause harm in the areas above (e.g. by harming the reputation of the effective altruism movement, or poisoning the well for future efforts). However, if you take care to reflect on potential paths to harm and adjust based on critical feedback, we think you’re likely to avoid causing harm. (For more resources on avoiding harm, see these resources by Claire Zabel, 80,000 Hours, and Max Dalton and Jonas Vollmer.)

Finally, even if CEA is doing something, it doesn’t mean no one else should be doing it. There can be benefits from multiple groups working in the same area.

If you’d like to start something related to EA community building, let us know by emailing Joan Gass; we can let you know what else is going on in the area. Since we’re focused on our core projects, we probably won’t be able to provide much help, but we can let you know about our plans and about any other similar projects we’re aware of. Additionally, the Effective Altruism Infrastructure Fund at EA Funds might be able to provide funding and/or advice.

Ozzie Gooen @ 2021-01-15T17:59 (+40)

Happy to see this clarification, thanks for this post.

While I understand the reasoning behind this, part of me really wants to see some organization that can do many things here.

Right now things seem headed towards a world where we have a whole bunch of small/tiny groups doing specialized things for Effective Altruism. This doesn't feel ideal to me. It's hard to create new nonprofits, there's a lot of marginal overhead, and it will be difficult to ensure quality and prevent downside risks with a highly decentralized structure. It will make it very difficult to reallocate talent to urgent new programs.

Perhaps CEA isn't well positioned now to become a very large generalist organization, but I hope that either that changes at some point, or other strong groups emerge here. It's fine to continue having small groups, but I really want to see some large (>40 people), decently general-purpose organizations around these issues.

MaxDalton @ 2021-01-15T21:58 (+33)

Thanks for sharing this feedback Ozzie, and for acknowledging the tradeoffs too.

I have a different intuition on the centralization tradeoff - I generally feel like things will go better if we have a lot of more focused groups working together vs. one big organization with multiple goals. I don’t think I’ll be able to fully justify my view here. I’m going to timebox this, so I expect some of it will be wrong/confused/confusing.

Examples: I think that part of the reason why 80,000 Hours has done well is that they have a clear and relatively narrow mission that they’ve spent a lot of time optimizing towards. Similarly, I think that GWWC has a somewhat different goal from CEA, and I think both CEA and GWWC will be better able to succeed if they can focus on figuring out how to achieve their goals. I hope for a world where there are lots of organizations doing similar things in different spaces. I think that when CEA was doing grantmaking and events and a bunch of other things it was less able to learn and get really good at any one of those things. Basically, I think there are increasing returns to work on lots of these issues, so focusing more on fewer issues is good.

It matters to get really good at things because these opportunities can be pretty deep: I think returns don’t diminish very quickly. E.g. we’re very far from having a high-quality widely-known EA group in every highly-ranked university in the world, and that’s only one of the things that CEA is aiming to do in the long run. If we tried to do a lot of other things, we’d make slower progress towards that goal. Given that, I think we’re better off focusing on a few goals and letting others pick up other areas.

I also think that, as a movement, we have some programs (e.g. Charity Entrepreneurship, the longtermist entrepreneurship project, plus active grantmaking from Open Philanthropy and EA Funds) which might help to set up new organizations for success.

We will continue to do some of the work we currently do to help to coordinate different parts of the community - for instance the EA Coordination Forum (formerly Leaders Forum), and a lot of the work that our community health team do. The community health team and funders (e.g. EA Funds) also do work to try to minimize risks and ensure that high-quality projects are the ones that get the resources they need to expand.

I also think your point about ops overhead is a good one - that’s why we plan to continue to support 80k, Forethought, GWWC, EA Funds, and the longtermist incubator operationally. Together, our legal entities have over 40 full time staff, nearly 100 people on payroll, and turnover of over $20m. So I think we’re reaping some good economies of scale on that front.

Finally, I think a more decentralized system will be more robust - I think that previously CEA was too close to being a single point of failure.

Ozzie Gooen @ 2021-01-17T05:30 (+52)

Hi Max,

Thanks for clarifying your reasoning here.

Again, if you think CEA shouldn’t expand, my guess is that it shouldn’t.

I respect your opinion a lot here and am really thankful for your work.

I think this is a messy issue. I tried clarifying my thoughts for a few hours. I imagine what’s really necessary is broader discussion and research into expectations and models of the expansion of EA work, but of course that’s a lot of work. Note that I'm not particularly concerned with CEA becoming big; I'm more concerned with us aiming for some organizations to be fairly large.

Feel free to ignore this or just not respond. I hope it might provide information on a perspective, but I’m not looking for any response or to cause controversy.

What is organization centrality?

This is a complex topic, in part because the concept of “organizations” is a slippery one. I imagine what really matters is something like, “coordination ability”, which typically requires some kind of centralization of power. My impression is that there’s a lot of overlap in donors and advisors around the groups you mention. If a few people call all the top-level shots (like funding decisions), then “one big organization” isn’t that different from a bunch of small ones. I appreciate the point about operations sharing; I’m sure there are some organizations that have had subprojects that have shared fewer resources than what you described. It’s possible to be very decentralized within an organization (think of a research lab with distinct product owners) and to be very centralized within a collection of organizations.

Ideally I’d imagine that the choice of coordination centralization would be quite separate from that about the formal nonprofit structure. You’re already sharing operations in an unconventional way. I could imagine cases where it could make sense to have many nonprofits under a single ownership (even if this ownership is not legally binding), perhaps to help with targeted fundraising or to spread out legal liability. I know many people and companies own several sub LLCs and similar; I could see this being the main case.

“We will continue to do some of the work we currently do to help to coordinate different parts of the community - for instance the EA Coordination Forum (formerly Leaders Forum), and a lot of the work that our community health team do. The community health team and funders (e.g. EA Funds) also do work to try to minimize risks and ensure that high-quality projects are the ones that get the resources they need to expand.”

-> If CEA is vetting which projects get made and expand, and hosts community health and other resources, then it’s not *that* much different from technically bringing in these projects formally under its wing. I imagine that finding some structure where CEA continues to offer organizational and coordination services as the base of organizations grows will be pretty tricky.

Again, what I would like to see is lots of “coordination ability”, and I expect that this could go further with the centralization of power with capacity to act on it. (I could imagine funders who technically have authority, but don’t have the time to do much that’s useful with it). It’s possible that if CEA (or another group) is able to be a dominant decision maker, and perhaps grow that influence over time, then that would represent centralized control of power.

What can we learn from the past?

I’ve heard of the histories of CEA and 80,000 Hours being used in this way before. I agree with much of what you said here, but am unsure about the interpretations. What’s described is a very small sample size and we could learn different kinds of lessons from them.

Most of the non-EA organizations that I could point to that have important influence in my life are much bigger than 20 people. I’m very happy Apple, Google, The Bill & Melinda Gates Foundation, OpenAI, DeepMind, The Electronic Frontier Foundation, universities, The Good Food Institute, and similar, exist.

It’s definitely possible to have too many goals, but that’s relative to size and existing ability. It wouldn’t have made sense for Apple to start out making watches and speakers, but it got there eventually, and is now doing a pretty good job at it (in my opinion). So I agree that CEA seems to have over-applied itself, but don’t think that means it shouldn’t be aiming to grow later on.

Many companies have had periods where they’ve diversified too quickly and suffered: Apple, famously, before Jobs came back; Amazon, apparently, in a period after the dot-com bubble; arguably Google with Google X; the list goes on and on. But I’m happy these companies eventually fixed their mistakes and continued to expand.

“Many Small EA Orgs”

“I hope for a world where there are lots of organizations doing similar things in different spaces… I think we’re better off focusing on a few goals and letting others pick up other areas….”

I like the idea of having lots of organizations, but I also like the idea of having at least some really big organizations. The Good Food Institute now seems to have a huge team and was just created a few years ago, and they seem to correspondingly be taking big projects.

I’m happy that we have few groups that coordinate political campaigns. Those seem pretty messy. True, the DNC in the US might have serious problems, but I think the answer would be a separate large group, not hundreds of tiny ones.

I’m also positive about 80,000 Hours, but I feel like we should be hoping for at least some organizations (like The Good Food Institute) to have much better outcomes. 80,000 Hours took quite some time to get to where it is today (I think it started in around 2012?), and is still rather small in the scheme of things. They have around 14 full time employees; they seem quite productive, but not 2-5 orders of magnitude more than other organizations. GiveWell seems much more successful; not only did they also grow a lot, but they convinced a billionaire couple to help them spin off a separate entity which now is hugely important.

The costs of organizational growth vs. new organizations

Trust of key figures

It seems much more challenging to me to find people I would trust as nonprofit founders than people I would trust as nonprofit product managers. Currently we have limited availability of senior EA leaders, so it seems particularly important to select people in positions of power who already understand what these leaders consider to be valuable and dangerous. If a big problem happens, it seems much easier to remove a PM than a nonprofit Executive Director or similar.

Ease

Founding requires a lot of challenging tasks like hiring, operations, and fundraising, which many people aren’t well suited to. I’m founding a nonprofit now, and have been having to learn how to set up a nonprofit and maintain it, which has been a major distraction. I’d be happier at this stage making a department inside a group that would do those things for me, even if I had to pay a fee.

It seems great that CEA did operations for a few other groups, but my impression is that you’re not intending to do that for many of the new groups you are referring to.

One related issue is that it can be quite hard for small organizations to get talent. Typically they have poor brands and tiny reputations. In situations where these organizations are actually strong (which should be many), having them be part of the bigger organization in brand alone seems like a pretty clear win. On the flip side, if some projects will be controversial or done poorly, it can be useful to ensure they are not part of a bigger organization (so they don't bring it down).

Failure tolerance

Not having a “single point of failure” sounds nice in theory, but it seems to me that the funders are the main thing that matters, and they are fairly coordinated (and should be). If they go bad, then no amount of reorganization will help us. If they’re able to do a decent job, then they should help select leadership of big organizations that could do a good job, and/or help spin off decent subgroups in the case of emergencies.

I think generally effort going into “making sure things go well” is better than effort going into “making sure that disasters won’t be too terrible”; and that’s better achieved by focusing on sizable organizations.

Smaller failure tolerance could also be worse with a distributed system; I expect it to be much easier to fire or replace a PM than to kick out a founder or move them around.

Expectations of growth

One question might be how ambitious we are regarding the growth of meta and longtermist efforts. I could imagine a world where we’re 100x the size, 20 years from now, with a few very large organizations, but it’s hard to imagine how many people we could manage with tiny organizations.

TLDR

My read of your posts is that you are currently aiming for / expecting a future of EA meta where there are a bunch of very small (<20 person) organizations. This seems quite unusual compared to other similar movements I’m aware of. Very unusual actions often require much stronger cases than usual ones, and I don’t yet see it. The benefits of having at least a few very powerful meta organizations seem greater than the costs.

I’m thankful for whatever work you decide to pursue, and more than encourage trying stuff out, like trying to encourage many small groups. I think I mainly wouldn’t want us to over-commit to any strategy like that though, and I also would like to encourage some more reconsideration, especially as new evidence emerges.

MaxDalton @ 2021-01-18T19:29 (+20)

Thanks for the detailed reply! I'll give a few shorter comment responses. I'm not running these by people at CEA, so this is just my view.

Organizational growth

First, I want to clarify that I'm not saying that I want CEA/other orgs to stay small. I think we might well end up as one of those big orgs with >40 people.

Given that I think there are some significant opportunities here, choosing to be focused doesn't mean we can't get big. We'd just have lots of people focused on one issue rather than lots focused on lots of issues. For some of the reasons I gave above about learning how to do an area really well, I think that would go better.

I also think that as we grow, we might then saturate the opportunities in the area we're focused in and then decide to expand to a new area.

So then there are questions about how fast to grow and when to stop growing. I think that if you grow quickly it's harder to grow in a really high-quality way. So you need to make a decision about how much to focus on quality vs. size. I think this is a tricky question which doesn't have a great general answer. If I could make CEA big, really high quality, and focused on the most important things, I'd obviously do that in a heartbeat. But I think in reality it's more about navigating these tradeoffs and improving quality/size/direction as quickly as you can.

Ozzie Gooen @ 2021-01-18T23:52 (+2)

Thanks for all the responses!

I've thought about this a bit more. Perhaps the crux is something like this:

From my (likely mistaken) read of things, the community strategy seems to want something like:

1) CEA doesn't expand its staff or focus greatly in the next 3-10 years.

2) CEA is able to keep essential control and ensure quality of community expansion in the next 3-10 years.

3) We have a great amount of EA meta / community growth in the next 3-10 years.

I could understand strategies where one of those three is sacrificed for the other two, but having all three sounds quite tricky, even if it would be really nice ideally.

The most likely way I could see (3) and (1) both happening is if there is some new big organization that comes in and gains a lot of control, but I'm not sure if we want that.

My impression is that (3) is the main one to be restricted. We could try encouraging some new nonprofits, but it seems quite hard to me to imagine a whole bunch being made quickly in ways we would be comfortable with (not actively afraid of), especially without a whole lot of oversight.

I think it's totally fine, and normally necessary (though not fun) to accept some significant sacrifices as part of strategic decision making.

I don't particularly have an opinion on which of the three should be the one to go.

Ben_West @ 2021-01-20T01:48 (+6)

(These are personal comments, I'm not sure to what extent they are endorsed by others at CEA.)

Thanks for writing this up Ozzie! For what it's worth, I'm not sure that you and Max disagree too much, though I don't want to speak for him.

Here's my attempt at a crux: suppose CEA takes on some new thing, and as a result Max manages me less well, making my work worse, but does that new thing better (or at all) because Max is spending time on it.

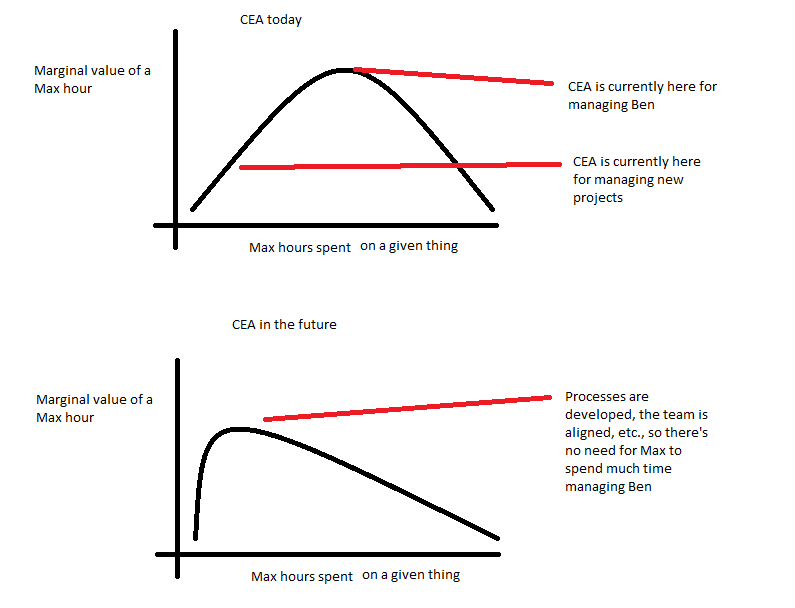

My view is that the marginal value of a Max hour is inverse U-shaped for both of these, and the maxima are fairly far out. (E.g. Max meeting with his directs once every two weeks would be substantially worse than meeting once a week.) As CEA develops, the maximum marginal value of his management hour will shift left while the curve for new projects remains constant, and at some point it will be more valuable for him to think about a new thing than speak with me about an old thing.

Please enjoy my attached paint image illustrating this:

I can think of two objections:

1. Management: Max is currently spending too much time managing me. Processes are well-developed and don't need his oversight (or I'm too stubborn to listen to him anyway or something) so there's no point in him spending so much time. (I.e. my "CEA in the future" picture is actually how CEA looks today for management.)

2. New projects: there is super low hanging fruit, and even doing a half-assed version of some new project would be way more valuable than making our existing projects better. (I.e. my "CEA in the future" picture is actually how CEA looks today for new projects.)

I'm curious if either of those seem right/useful to you?

Ozzie Gooen @ 2021-01-20T06:30 (+6)

Thanks for the diagrams and explanation!

When I see the diagrams, I think of these as "low overhead roles" vs "high overhead roles", where "low overhead roles" have peak marginal value much earlier than high overhead roles. If one is interested in scaling work, and assuming that requires also scaling labor, then scalable strategies would be ones that would have many low overhead roles, similar to your second diagram of "CEA in the Future".

That said, my main point above wasn't that CEA should definitely grow, but that if CEA is having trouble/hesitancy/it-isn't-ideal growing, I would expect the strategy of "encouraging a bunch of new external nonprofits" to be limited in potential.

If CEA thinks it could help police new nonprofits, that would also take Max's time or similar; the management time is coming from the same place, it's just being used in different ways and there would ideally be less of it.

In the back of my mind, I'm thinking that OpenPhil theoretically has access to +$10Bil, and hypothetically much of this could go towards promotion of EA or EA-related principles, but right now there's a big bottleneck here. I could imagine that it's possible it could make sense to be rather okay wasting a fair bit of money and doing things quite unusual in order to get expansion to work somehow.

Around CEA and related organizations in particular, I am a bit worried that not all of the value of taking in good people is transparent. For example, if an org takes in someone promising and trains them up for 2 years, and then they leave for another org, that could have been a huge positive externality, but I'd bet it would get overlooked by funders. I've seen this happen previously. Right now it seems like there are a bunch of rather young EAs who really could use some training, but there are relatively few job openings, in part because existing orgs are quite hesitant to expand.

I imagine that hypothetically this could be an incredibly long conversation, and you definitely have a lot more inside knowledge than I do. I'd like to personally do more investigation to better understand what the main EA growth constraints are; we'll see about this.

One thing we could make tractable progress in is in forecasting movement growth or these other things. I don't have things in mind at the moment, but if you ever have ideas, do let me know, and we could see about developing them into questions in Metaculus or similar. I imagine having a group understanding of total EA movement growth could help a fair bit and make conversations like this more straightforward.

MaxDalton @ 2021-01-19T09:27 (+4)

This all seems reasonable. Some scattered thoughts:

- To be clear, I'm not claiming 1), I'm more like "I'm still figuring out how fast/how to grow"

- I still think that "expanding staff/focus" is getting a bit too much emphasis: I think that if we focus on the right things we might be able to scale our impact faster than we scale our staff

Ozzie Gooen @ 2021-01-20T06:35 (+3)

Thanks!

Maybe I misunderstood this post. You wrote,

“Therefore, we want to let people know what we're not doing, so that they have a better sense of how neglected those areas are.”

When you said this, what timeline were you implying? I would imagine that if there were a new nonprofit focusing on a subarea mentioned here they would be intending to focus on it for 4-10+ years, so I was assuming that this post meant that CEA was intending to not get into these areas on a 4-10 year horizon.

Were you thinking of more of a 1-2 year horizon? I guess this would be fine as long as you're keeping in communication with other potential groups who are thinking about these areas, so we don't have a situation where there's a lot of overlapping (or worse, competing) work all of a sudden.

MaxDalton @ 2021-01-21T15:49 (+4)

No, I think you understood the original post right and my last comment was confusing. When I said "grow" I was imagining "grow in terms of people/impact in the areas we're currently occupying and other adjacent areas that are not listed as 'things we're not doing'".

I don't expect us to start doing the things listed in the post in the next 4-10 years (though I might be wrong on some counts). We'll be less likely to do something if it's less related to our current core competencies, and if others are doing good work in the area (as with fundraising).

MaxDalton @ 2021-01-18T20:00 (+6)

Another argument: I think that startup folk wisdom is pretty big on focus. (E.g. start with a small target audience, give each person one clear goal). I think it's roughly "only start doing new things when you're acing your old things". But maybe startup folk wisdom is wrong, or maybe I'm wrong about what startup folk wisdom says.

I also think most (maybe basically all?) startups that got big started by doing one smaller thing well (e.g. Google did search, Amazon did books, Apple did computers, Microsoft did operating systems). Again, I think this was something like "only start new products when your old products are doing really well" ("really well" is a bit vague: e.g. Amazon's distribution systems were still a mess when they expanded into CDs/toys, but they were selling a lot of books).

Ozzie Gooen @ 2021-01-18T23:34 (+4)

Having been in the startup scene, wisdom there is a bit of a mess.

It's clear that the main goal of early startups is to identify "product market fit", which to me seems like, "an opportunity that's exciting enough to spend effort scaling".

Startups "pivot" all the time. (See The Lean Startup, though I assume you're familiar)

Startups also experiment with a bunch of small features, listen to what users want, and ideally choose some to focus on. For instance, Instagram started with a general-purpose app; from this they found out that users just really liked the photo feature, so they removed the other stuff and just focussed on that. Airbnb started out in many cities, but was later encouraged to focus on one; in part because of their expertise (I imagine), they were able to make a good decision.

It's a known bug for startups to scale before "product market fit", or scale poorly (bad hires), both of which are quite bad.

However, it's definitely the intention of basically all startups to eventually get to the point where they have an exciting and scalable opportunity, and then to expand.

MaxDalton @ 2021-01-19T09:30 (+4)

Yeah, agree that experimentation and listening to customers is good. I mostly think this is a different dimension from things like "expand into a new area", although maybe the distinction is a bit fuzzy.

I also agree that aiming to find scalable things is a great model.

I do think of CEA as looking for things that can scale (e.g. I think Forum, groups, and EAGx could all be scaled relatively well once we work out the models) (but we also do things that don't scale, which I think is appropriate while we're still searching for a really strong product-market fit).

(Edited to add: below I draw a distinction between scaling programs and scaling staff numbers. My sense is that startups' first goal is to find a scalable business model/program, and that only after that should they focus on being able to scale staff numbers to capitalize on the opportunity.)

Ozzie Gooen @ 2021-01-20T06:38 (+2)

Happy to hear you're looking for things that could scale, I'd personally be particularly excited about those opportunities.

I'd guess that internet-style things could scale particularly well; like the Forum / EA Funds / online models, etc, but that's also my internet background talking :). In particular, things could be different if it makes sense to focus on a very narrow but elite group.

I agree that a group should scale staff only after finding a scalable opportunity.

MaxDalton @ 2021-01-18T19:55 (+6)

I think my view was that (while they still think they're cost effective), orgs should be on the Pareto frontier of {size, quality}. However, they should also try to communicate clearly about what they won't be able to take on, so that others can take on those areas.

I imagine your reply being something like "That's OK, but org-1 is essentially creating an externality on potential-org-2 by growing more slowly: it means that potential-org-2 has to build a reputation, set up ops etc, rather than just being absorbed into org-1. It's better for org-1 to not take in potential-org-2, but it's best for the world if org-1 takes in potential-org-2."

I think the externality point is correct, but I'm still not sure what's best for the world: you also need to account for the benefits of quality/focus/learning/independence, which can be pretty significant (according to me).

I think the answer is going to depend a bit on the situation: e.g. I think it's better for org-1 to take in potential-org-2 if potential-org-2 is doing something more closely related to org-1's area of expertise, and quite bad for it to try to do something totally different. (E.g. I think it would probably be bad for CEA to try to set up your org with you as PM.) I also think it's better for org-1 to take on new things if it's doing its current things well and covering more of the ground (since this means that the opportunity cost of its growth-attention is lower).

MaxDalton @ 2021-01-18T19:43 (+6)

I also agree with your point about different forms of centralization, and I think that we are in a world where e.g. funding is fairly centralized.

I also wanted to emphasize that I agree with Edo that it's good to have centralized discussion platforms etc. - e.g. I think it would probably be bad if someone set up a competitor to the Forum that took 50% of the viewers. (But maybe fine if they quickly stole 100% of the Forum's viewers by being much better.)

MaxDalton @ 2021-01-18T19:40 (+4)

I generally feel like there's a bit too much focus in your model on number of people vs. getting those people to do high-quality work, or directing those people towards important problems. I also think it's worth remembering that staff (time, compensation) come on the cost side of the cost-effectiveness calculation.

E.g. I don't think that GW succeeded because it had more staff than 80k. I think they succeeded because they were doing really high-quality work on an important problem. To do that they had to have a reasonable number of staff, but that was a cost they had to pay to do the high-quality work.

And then related to the question about how fast to grow, it looks like it took them 6 years to get to 10 staff, and 9 years to get to 20. They had 18 staff around the time that Good Ventures was founded. So I think that simply being big wasn't a critical factor in their success, and I suspect that this relatively slow growth was in fact critical to figuring out what the org was doing and keeping quality high.

Ozzie Gooen @ 2021-01-18T23:43 (+4)

Thanks for the details and calculation of GW.

It's of course difficult to express a complete worldview in a few (even long) comments. To be clear, I definitely acknowledge that hiring has substantial costs (I haven't really done it yet for QURI), and is not right for all orgs, especially at all times. I don't think that hiring is intrinsically good or anything.

I also agree that being slow, in the beginning in particular, could be essential.

All that said, I think something like "ability to usefully scale" is a fairly critical factor in success for many jobs other than, perhaps, theoretical research. I think the success of OpenPhil will be profoundly bottlenecked if it can't find some useful ways to scale much further (this could even be by encouraging many other groups).

It could take quite a while of "staying really small" to "be able to usefully scale", but "be able to usefully scale" is one of the main goals I'd want to see.

MaxDalton @ 2021-01-19T09:33 (+4)

Yeah, I agree that "be able to usefully scale" is a pretty positive instrumental goal (and maybe one I should pay attention to more).

Maybe there are different versions of "be able to usefully scale":

- I'm mostly thinking of this in terms of "work on area X such that you have a model you can scale to make X much higher impact". I think this generally doesn't help you much to explore/scale area Y. (Maybe it helps if X and Y are really related.)

- I think you're thinking of this mostly in terms of "Be able to hire, manage, and coordinate large numbers of people quickly". I think this is also useful, and it's also something I'm aiming towards (though probably less than more object-level goals, like figuring out how to make our programs great, at the moment).

MaxDalton @ 2021-01-18T19:48 (+2)

I agree with your points about it being easier to find a PM than an ED, brand, centralizing operations etc., and I think they're costs to setting up new non-profits.

vaidehi_agarwalla @ 2021-01-15T16:24 (+13)

I'm really glad to see this post and the clear list of topics you won't be working on, and the clear guidance/information for other community builders looking to work in this space.

Habryka @ 2021-01-15T20:00 (+12)

Thank you for writing this! I think this is quite helpful.