The “Moral Uncertainty” Rabbit Hole, Fully Excavated

By Lukas_Gloor @ 2022-04-01T13:14 (+23)

This is the ninth and final post in my moral anti-realism sequence.

In an earlier post, I argued that moral uncertainty and confident moral realism don’t go together. Accordingly, if we’re morally uncertain, we must either endorse moral anti-realism or at least put significant credence on it.

In previous posts, I also argued that moral anti-realism doesn’t imply that nothing matters (at least not for the common-sense way that “things matter”) and that the label “nihilism” is inappropriate. Therefore, the insight “moral uncertainty and confident moral realism don’t go together” has implications for our moral reasoning.

This post discusses those implications – it outlines how uncertainty over object-level moral views and metaethical uncertainty (here: uncertainty over moral realism vs. anti-realism) interact. I will present a proposal for replacing the broad concept “moral uncertainty” with related, more detailed ideas:

- Deferring to moral reflection (and uncertainty over one’s “idealized values”)

- Having under-defined values (deliberately or by accident)

- Metaethical uncertainty (and wagering on moral realism)

I’ll argue that these concepts (alongside some further context, distinctions, and caveats) add important nuances to “moral uncertainty.”

This post is the culmination of my sequence; it builds on insights from previous posts.

Specifically, readers may benefit from reading the following two posts:

- Moral Uncertainty and Moral Realism Are in Tension – why I think moral uncertainty implies metaethical uncertainty (or confident moral anti-realism)

- The Life-Goals Framework: Ethical Reasoning from an Anti-Realist Perspective – for context on how I approach moral reasoning

That said, it should be possible to understand the gist of this post without any prior reading.

Refinement concepts (summary)

Where precision is helpful, I propose to replace talk about “moral uncertainty” with one or several of the three concepts below:

- Deferring to moral reflection (and uncertainty over one’s “idealized values”): Deferring to moral reflection is an instrumental strategy to discount current intuitions or inklings about what to value and defer authority to one’s “idealized values” – the values one would arrive at in a state of “being perfectly wise and informed.” This strategy is robust under metaethical uncertainty: if an objective/speaker-independent moral reality exists, our “idealized values” can hopefully track it (otherwise, we never had a chance in the first place). Even without an objective/speaker-independent morality, “idealized values” still point to something meaningful and personally relevant.[1]

- Having under-defined values (deliberately or by accident): The appeal behind deferring to moral reflection is that idealized values are well-specified, “here for us to discover.” However, suppose we were “perfectly wise and informed.” It remains an open question whether, in that state, we would readily form specific convictions on what to value. If someone continues to feel uncertain about what to value, or if subtle differences in our operationalization of “being perfectly wise and informed” led them to confidently adopt very different values across runs of the moral reflection, then that person’s “idealized values” would be under-defined. (Under-definedness comes on a spectrum.) Someone holds under-defined values if there is no uniquely correct way to identify what they value, given everything there is to know about them. While people can accept the possibility of ending up with under-defined values deliberately when deferring to moral reflection, some may not be aware of that possibility (i.e., they may lock in potentially under-defined values unthinkingly, by accident).

- Metaethical uncertainty (and wagering on moral realism): Some people may act as though moral realism is true despite placing much less than total confidence in it. This is the strategy “wagering on moral realism.” Someone may wager on moral realism out of conviction that their actions matter less without it. They may think this for different reasons. In particular, there are two wager types. One wager is targeted at irreducible normativity (“moral non-naturalism”) and one at naturalist moral realism (“moral naturalism”), respectively. Those two wagers work very differently. The irreducible normativity wager mostly sidesteps the framework I’ve presented in this sequence (but it seems question-begging and has inadvisable implications). The moral naturalism wager is weaker (it doesn’t always dominate anti-realist/subjectivist convictions) and fits well within this sequence’s moral-reasoning framework.

In the body of this post, I’ll go through each of the above concepts and describe my thinking.

“Idealized values”

While anti-realists don’t believe in speaker-independent (“objective”) moral truths, that doesn’t mean they have no notion of moral insights or being wrong about morality in a for-them-relevant sense.

Personally, when I contemplate where I’ve changed my mind in the past, it doesn’t seem like I’ve stumbled randomly through the landscape. Instead, I aspired to be open to logical arguments and appeals that resonate with stable, deeply held intuitions related to the universalizability of moral judgments. I want to think I’ve made progress towards that, that I uncovered to-me-salient features in the option space. Likewise, and for the same reasons, I may expect similar updates in the future.

Without assuming moral realism, the critical question is, “What’s the object of our uncertainty? What is it that we can get right or wrong?”

One answer is that someone can be right or wrong about their “idealized values” – what they’d value if they were “perfectly wise and informed.” So idealized values are a tool to make sense of uncertainty over object-level moral views and the belief that changing one’s mind through moral arguments can (in many/most instances?) represent a moral insight and not a failure of goal preservation.

Reflection procedures

To specify the meaning of “perfectly wise and informed,” we can envision a suitable procedure for moral reflection that a person would hypothetically undergo. Such a reflection procedure comprises a reflection environment and a reflection strategy. The reflection environment describes the options at one’s disposal; the reflection strategy describes how a person would use those options.

An example reflection environment

Here’s one example of a reflection environment:

- My favorite thinking environment: Imagine a comfortable environment tailored for creative intellectual pursuits (e.g., a Google campus or a cozy mansion on a scenic lake in the forest). At your disposal, you find a well-intentioned, superintelligent AI advisor fluent in various schools of philosophy and programmed to advise in a value-neutral fashion. (Insofar as that’s possible – since one cannot do philosophy without a specific methodology, the advisor must already endorse certain metaphilosophical commitments.) Besides answering questions, they can help set up experiments in virtual reality, such as ones with emulations of your brain or with modeled copies of your younger self. For instance, you can design experiments for learning what you'd value if you first encountered the EA community in San Francisco rather than in Oxford or started reading Derek Parfit or Peter Singer after the blog Lesswrong, instead of the other way around.[2] You can simulate conversations with select people (e.g., famous historical figures or contemporary philosophers). You can study how other people’s reflection concludes and how their moral views depend on their life circumstances. In the virtual-reality environment, you can augment your copy’s cognition or alter its perceptions to have it experience new types of emotions. You can test yourself for biases by simulating life as someone born with another gender(-orientation), ethnicity, or into a family with a different socioeconomic status. At the end of an experiment, your (near-)copies can produce write-ups of their insights, giving you inputs for your final moral deliberations. You can hand over authority about choosing your values to one of the simulated (near-)copies (if you trust the experimental setup and consider it too difficult to convey particular insights or experiences via text). 
Eventually, the person with the designated authority has to provide to your AI assistant a precise specification of values (the format – e.g., whether it’s a utility function or something else – is up to you to decide on). Those values then serve as your idealized values after moral reflection.

(Two other, more rigorously specified reflection procedures are indirect normativity and HCH.[3] Indirect normativity outputs a utility function whereas HCH attempts to formalize “idealized judgment,” which we could then consult for all kinds of tasks or situations.)[4]

“My favorite thinking environment” leaves you in charge as much as possible while providing flexible assistance. Any other structure is for you to specify: you decide the reflection strategy.[5] This includes what questions to ask the AI assistant, what experiments to do (if any), and when to conclude the reflection.

Reflection strategies: conservative vs. open-minded reflection

Reflection strategies describe how a person would proceed within a reflection environment to form their idealized values. I distinguish between two general types of reflection strategies: conservative and open-minded. These reflection strategies lie on a spectrum. (I will later argue that neither extreme end of the spectrum is particularly suitable.)

Someone with a conservative reflection strategy is steadfast in their moral reasoning framework.[6] They guard their opinions, which turns these into convictions (“convictions” being opinions that one safeguards against goal drift). At its extreme, someone with a maximally conservative reflection strategy has made up their mind and no longer benefits from any moral reflection. People can have moderately conservative reflection strategies where they have formed convictions on some issues but not others.

By contrast, people with open-minded moral reflection strategies are uncertain about either their moral reasoning framework or (at least) their object-level moral views. As the defining feature, they take a passive (“open-minded”) reflection approach focused on learning as much as possible without privileging specific views[7] and without (yet) entering a mode where they form convictions.

That said, “forming convictions” is not an entirely voluntary process – sometimes, we can’t help but feel confident about something after learning the details of a particular debate. As I’ll elaborate below, it is partly for this reason that I think no reflection strategy is inherently superior.

Evaluating reflection strategies

A core theme of this post is comparing conservative vs. open-minded reflection strategies. (Again, note that we’re talking about a spectrum.) To start this section, I’ll give a preview of my position, which I’ll then explain more carefully as the post continues.

In short, I believe that no reflection strategy is categorically superior to the other, but that reflection strategies can be wrong for particular situations reasoners can find themselves in. Moreover, I believe that the extreme ends of the spectrum (maximally conservative or maximally open-minded moral reflection) are inadvisable for the vast majority of people and circumstances.

I think people fall onto different parts of the spectrum due to different intellectual histories (e.g., whether they formed a self-concept around a specific view/position) and differences in “cognitive styles” – perhaps on traits like need for cognitive closure.

However, metaethical beliefs can also influence people’s reflection strategies. Since people can be wrong about their metaethical beliefs,[8] such beliefs can cause someone to employ a situation-inappropriate reflection strategy.

The value of reflecting now

Reflection procedures are thinking-and-acting sequences we'd undergo if we had unlimited time and resources. While we cannot properly run a moral reflection procedure right now in everyday life, we can still narrow down our uncertainty over the hypothetical reflection outcome. Spending time on that endeavor is worth it if the value of information – gaining clarity on one’s values – outweighs the opportunity cost from acting under one’s current (less certain) state of knowledge.
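
One way to make the comparison above concrete is a toy value-of-information calculation. The sketch below is only illustrative: the two candidate views, the credences, the payoff numbers, and the function names are all hypothetical, and it assumes (contestably) that payoffs can be compared across views on a common scale and that reflection would fully resolve the uncertainty.

```python
def value_of_acting_now(credences, payoffs):
    """Best expected payoff from committing to one action under current credences.

    payoffs[view][action] is the (hypothetical) value of an action if that
    view turns out to match one's idealized values.
    """
    n_actions = len(payoffs[0])
    return max(
        sum(p * row[action] for p, row in zip(credences, payoffs))
        for action in range(n_actions)
    )


def value_after_reflection(credences, payoffs):
    """Expected payoff if reflection first reveals the view, then we pick the best action."""
    return sum(p * max(row) for p, row in zip(credences, payoffs))


def value_of_information(credences, payoffs):
    """Expected gain from resolving the uncertainty before acting."""
    return value_after_reflection(credences, payoffs) - value_of_acting_now(credences, payoffs)


# Hypothetical numbers: 60% credence in view A, 40% in view B.
credences = [0.6, 0.4]
payoffs = [
    [10.0, 2.0],  # view A: action 0 is much better
    [1.0, 8.0],   # view B: action 1 is much better
]
opportunity_cost = 1.5  # assumed cost of delaying action in order to reflect

voi = value_of_information(credences, payoffs)
worth_reflecting = voi > opportunity_cost
print(f"value of information: {voi:.2f}, worth reflecting: {worth_reflecting}")
```

In this toy setup, reflecting is worthwhile only because the two views recommend different actions; if both views favored the same action, the value of information would be zero and any opportunity cost would count against further reflection.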

Gaining clarity on our values is easier for those who would employ a more conservative reflection strategy in their moral reflection procedure. After all, that means their strategy involves guarding some pre-existing convictions, which gives them advance knowledge of the direction of their moral reflection.[9]

By contrast, people who would employ more open-minded reflection strategies may not easily be able to move past specific layers of indecision. Because they may be uncertain how to approach moral reasoning in the first place, they can be “stuck” in their uncertainty. (Their hope is to get unstuck once they are inside the reflection procedure, once it becomes clearer how to proceed.)

When is someone ready to form convictions?

The timing of when people form convictions distinguishes the two ends of the spectrum of reflection strategies. On the more open-minded side, people haven’t yet formed (m)any convictions. However, open-minded reflection strategies cannot stay that way indefinitely. Since a reflection strategy's goal is to output a reflection outcome eventually, it has to become increasingly conservative as the reflection gets closer to its endpoint.

If moral realism were true…

If moral realism were true, the timing of that transition (“the reflection strategy becoming increasingly conservative as the person forms more convictions”) would be obvious. It would happen once the person knows enough to see the correct answers, once they see the correct way of narrowing down their reflection or (eventually) the correct values to adopt at the very end of it.

In the moral realist picture, expressions like “safeguarding opinions” or “forming convictions” (which I use interchangeably) seem out of place. Obviously, the idea is to “form convictions” about only the correct principles!

However, as I’ve argued in previous posts, moral realism is likely false. Notions like “altruism/doing good impartially” are under-defined. There are several equally defensible options to systematize these notions and apply them to (“out-of-distribution”) contested domains like population ethics. (See this section in my previous post.)

That said, it is worth considering whether there’s a wager for acting as though naturalist moral realism is true. I will address that question in a later section. (In short, the answer is “sort of, but the wager only applies to people who lack pre-existing moral convictions.”)

Two versions of anti-realism: are idealized values chosen or discovered?

Under moral anti-realism, there are two empirical possibilities[10] for “When is someone ready to form convictions?” In the first possibility, things work similarly to naturalist moral realism but on a personal/subjectivist basis. We can describe this option as “My idealized values are here for me to discover.” By this, I mean that, at any given moment, there’s a fact of the matter to “What I’d conclude with open-minded moral reflection.” (Specifically, a unique fact – it cannot be that I would conclude vastly different things in different runs of the reflection procedure or that I would find myself indifferent about a whole range of options.)

The second option is that my idealized values aren’t “here for me to discover.” In this view, open-minded reflection is too passive – therefore, we have to create our values actively. Arguments for this view include that (too) open-minded reflection doesn’t reliably terminate; instead, one must bring normative convictions to the table. “Forming convictions,” according to this second option, is about making a particular moral view/outlook a part of one’s identity as a morality-inspired actor. Finding one’s values, then, is not just about intellectual insights.

I will argue that the truth is somewhere in between. Still, the second view, that we have to actively create our (idealized) values, definitely holds to a degree that I often find underappreciated. Admittedly, many things we can learn about the philosophical option space indeed function like “discoveries.” However, because there are several defensible ways to systematize under-defined concepts like “altruism/doing good impartially,” personal factors will determine whether a given approach appeals to someone. Moreover, these factors may change depending on different judgment calls taken in setting up the moral reflection procedure or in different runs of it. (If different runs of the reflection procedure produce different outcomes, it suggests that there’s something unreliable about the way we do reflection.)

Deferring to moral reflection

I use the phrase “deferring to moral reflection” (see, e.g., the mentions in this post’s introduction and its summary) to describe how much a person expects moral reflection to change their current guess on what to value. The more open-minded someone’s reflection strategy, the more they defer to moral reflection. Someone who defers completely would follow a maximally open-minded reflection strategy. Only people with maximally conservative reflection strategies don't defer at all.

No place on the reflection spectrum is categorically best/superior

In this section, I will defend the view that, because idealized values aren’t “here for us to discover” (at least not entirely), there’s no obvious/ideal timing for the transition from open-minded to more conservative moral reflection. In other words, there’s no obvious/ideal timing for “when to allow oneself to form convictions” (on either object-level moral views or moral reasoning principles). There’s no correct degree to which everyone should defer to moral reflection.

There are two reasons why I think open-minded reflection isn’t automatically best:

- We have to make judgment calls about how to structure our reflection strategy. Making those judgment calls already gets us in the business of forming convictions. So, if we are qualified to do that (in “pre-reflection mode,” setting up our reflection procedure), why can’t we also form other convictions similarly early?

- Reflection procedures come with an overwhelming array of options, and they can be risky (in the sense of having pitfalls – see later in this section). Arguably, we are closer (in the sense of our intuitions being more accustomed and therefore, arguably, more reliable) to many of the fundamental issues in moral philosophy than to matters like “carefully setting up a sequence of virtual reality thought experiments to aid an open-minded process of moral reflection.” Therefore, it seems reasonable/defensible to think of oneself as better positioned to form convictions about object-level morality (in places where we deem it safe enough).

Reflection strategies require judgment calls

In this section, I’ll elaborate on how specifying reflection strategies requires many judgment calls. The following are some dimensions alongside which judgment calls are required (many of these categories are interrelated/overlapping):

- Social distortions: Spending years alone in the reflection environment could induce loneliness and boredom, which may have undesired effects on the reflection outcome. You could add other people to the reflection environment, but who you add is likely to influence your reflection (e.g., because of social signaling or via the added sympathy you may experience for the values of loved ones).

- Transformative changes: Faced with questions like whether to augment your reasoning or capacity to experience things, there’s always the question “Would I still trust the judgment of this newly created version of myself?”

- Distortions from (lack of) competition: As Wei Dai points out in this Lesswrong comment: “Current human deliberation and discourse are strongly tied up with a kind of resource gathering and competition.” By competition, he means things like “the need to signal intelligence, loyalty, wealth, or other ‘positive’ attributes.” Within some reflection procedures (and possibly depending on your reflection strategy), you may not have much of an incentive to compete. On the one hand, a lack of competition or status considerations could lead to “purer” or more careful reflection. On the other hand, perhaps competition functions as a safeguard, preventing people from adopting values where they cannot summon sufficient motivation under everyday circumstances. Without competition, people’s values could become decoupled from what ordinarily motivates them and more susceptible to idiosyncratic influences, perhaps becoming more extreme.

- Lack of morally urgent causes: In the blogpost On Caring, Nate Soares writes: “It's not enough to think you should change the world — you also need the sort of desperation that comes from realizing that you would dedicate your entire life to solving the world's 100th biggest problem if you could, but you can't, because there are 99 bigger problems you have to address first.”

In that passage, Soares points out that desperation can strongly motivate why some people develop an identity around effective altruism. Interestingly enough, in some reflection environments (including “My favorite thinking environment”), the outside world is on pause. As a result, the phenomenology of “desperation” that Soares described would be out of place. If you suffered from poverty, illnesses, or abuse, these hardships are no longer an issue. Also, there are no other people to lift out of poverty and no factory farms to shut down. You’re no longer in a race against time to prevent bad things from happening, seeking friends and allies while trying to defend your cause against corrosion from influence seekers. This constitutes a massive change in your “situation in the world.” Without morally urgent causes, you arguably become less likely to go all-out by adopting an identity around solving a class of problems you’d deem urgent in the real world but which don’t appear pressing inside the reflection procedure. Reflection inside the reflection procedure may feel more like writing that novel you’ve always wanted to write – it has less the feel of a “mission” and more of “doing justice to your long-term dream.”[11]

- Ordering effects: The order in which you learn new considerations can influence your reflection outcome. (See page 7 in this paper. Consider a model of internal deliberation where your attachment to moral principles strengthens whenever you reach reflective equilibrium given everything you already know/endorse.)

- Persuasion and framing effects: Even with an AI assistant designed to give you “value-neutral” advice, there will be free parameters in the AI’s reasoning that affect its guidance and how it words things. Framing effects may also play a role when interacting with other humans (e.g., epistemic peers, expert philosophers, friends, and loved ones).

Pitfalls of reflection procedures

There are also pitfalls to avoid when picking a reflection strategy. The failure modes I list below are avoidable in theory,[12] but they could be difficult to avoid in practice:

- Going off the rails: Moral reflection environments could be unintentionally alienating (enormous option space; time spent reflecting could be unusually long). Failure modes related to the strangeness of the moral reflection environment include existential breakdown and impulsively deciding to lock in specific values to be done with it.

- Issues with motivation and compliance: When you set up experiments in virtual reality, the people in them (including copies of you) may not always want to play along.

- Value attacks: Attackers could simulate people’s reflection environments in the hope of influencing their reflection outcomes.

- Addiction traps: Superstimuli in the reflection environment could cause you to lose track of your goals. For instance, imagine you started asking your AI assistant for an experiment in virtual reality to learn about pleasure-pain tradeoffs or different types of pleasures. Then, next thing you know, you’ve spent centuries in pleasure simulations and have forgotten many of your lofty ideals.

- Unfairly persuasive arguments: Some arguments may appeal to people because they exploit design features of our minds rather than because they tell us “What humans truly want.” Reflection procedures with argument search (e.g., asking the AI assistant for arguments that are persuasive to lots of people) could run into these unfairly compelling arguments. For illustration, imagine a story like “Atlas Shrugged” but highly persuasive to most people. We can also think of “arguments” as sequences of experiences: Inspired by the Narnia story, perhaps there exists a sensation of eating a piece of candy so delicious that many people become willing to sell out all their other values for eating more of it. Internally, this may feel like becoming convinced of some candy-focused morality, but looking at it from the outside, we’ll feel like there’s something problematic about how the moral update came about.

- Subtle pressures exerted by AI assistants: AI assistants trained to be “maximally helpful in a value-neutral fashion” may not be fully neutral, after all. (Complete) value-neutrality may be an illusory notion, and if the AI assistants mistakenly think they know our values better than we do, their advice could lead us astray. (See Wei Dai’s comments in this thread for more discussion and analysis.)

Conclusion: “One has to actively create oneself”

“Moral reflection” sounds straightforward – naively, one might think that the right path of reflection will somehow reveal itself. However, as we think of the complexities of setting up a suitable reflection environment and how we’d proceed inside it, what it would be like and how many judgment calls we’d have to make, we see that things can get tricky.

Joe Carlsmith summarized it as follows in an excellent post (what Carlsmith calls “idealizing subjectivism” corresponds to what I call “deferring to moral reflection”):

My current overall take is that especially absent certain strong empirical assumptions, idealizing subjectivism is ill-suited to the role some hope it can play: namely, providing a privileged and authoritative (even if subjective) standard of value. Rather, the version of the view I favor mostly reduces to the following (mundane) observations:

- If you already value X, it’s possible to make instrumental mistakes relative to X.

- You can choose to treat the outputs of various processes, and the attitudes of various hypothetical beings, as authoritative to different degrees.

This isn’t necessarily a problem. To me, though, it speaks against treating your “idealized values” the way a robust meta-ethical realist treats the “true values.” That is, you cannot forever aim to approximate the self you “would become”; you must actively create yourself, often in the here and now. Just as the world can’t tell you what to value, neither can your various hypothetical selves — unless you choose to let them. Ultimately, it’s on you.

In my words, the difficulty with deferring to moral reflection too much is that the benefits of reflection procedures (having more information and more time to think; having access to augmented selves, etc.) don’t change what it feels like, fundamentally, to contemplate what to value. For all we know, many people would continue to feel apprehensive about doing their moral reasoning “the wrong way” since they’d have to make judgment calls left and right. Plausibly, no “correct answers” would suddenly appear to us. To avoid leaving our views under-defined, we have to – at some point – form convictions by committing to certain principles or ways of reasoning. As Carlsmith describes it, one has to – at some point – “actively create oneself.” (The alternative is to accept the possibility that one’s reflection outcome may be under-defined.)

It is possible to delay the moment of “actively creating oneself” to a time within the reflection procedure. (This would correspond to an open-minded reflection strategy; there are strong arguments to keep one’s reflection strategy at least moderately open-minded.) However, note that, in doing so, one “actively creates oneself” as someone who trusts the reflection procedure more than one’s object-level moral intuitions or reasoning principles. This may be true for some people, but it isn’t true for everyone. Alternatively, it could be true for someone in some domains but not others.[13]

Empirical expectations

Open-minded reflection seems unambiguously the right strategy when someone has an incomplete grasp of the option space. People defer to moral reflection because they expect (a significant chance of) discoveries that would radically change the way they reason.

Such expectations are by no means unreasonable. Civilizationally, our moral attitudes have undergone tremendous changes. We regard most of those changes as instances of moral progress. Sadly, many times, intelligent and otherwise decent-seeming people failed to recognize ongoing moral catastrophes. Unless we think our philosophical sophistication has reached the ceiling, we should expect new insights and perhaps even “moral revolutions” under improved thinking conditions.[14] More specifically, if the future goes well, we should be in a superior position to decide what to value. Scientific advances may feed into the discussion, making us aware that our previous conceptualization of the option space was incomplete or included confused categories.[15] In addition, philosophers of the future may illuminate the option space for us.

Having under-defined values

If idealized values aren’t “here for us to discover,” someone’s moral reflection could result in under-defined values. (I’ve already hinted at this in the preceding sections.) A person has under-defined values if, based on everything there is to know about their beliefs and psychology, there’s no unique set of values we can identify as theirs. Under-definedness comes on a spectrum.

Degrees of under-definedness

Under-definedness doesn’t necessarily pose a problem. For many dimensions along which someone's moral views may be under-defined, it wouldn’t make a difference to the person’s prioritization if they formed more detailed convictions. Instead, under-definedness is more interesting when a person ends up without any guidance on questions that would affect their day-to-day prioritization.[16]

Still, under-definedness only poses a problem if there’s reflective inconsistency, i.e., if someone would change their reflection approach if they became aware of implicit premises in their thinking. For example, consider someone who holds the (according to this sequence) mistaken metaethical belief that moral realism is true. Let’s say that based on that belief, they adopted a passive approach to moral reasoning. So, they rely less on their intuitions than they otherwise would, and they assume an identity as someone who doesn’t form convictions on matters where their epistemic peers may disagree. Now, upon learning that anti-realism is likely true, that forming convictions may be inevitable, and that they otherwise could end up with under-defined values, they then change their moral reasoning approach. (They would no longer reason themselves out of mental processes that could lead to them forming convictions early because they no longer hold some beliefs that discouraged it.)[17]

By contrast, if someone has a clear grasp of their options and the implications that come with them, then it seems perfectly unobjectionable to have under-defined values or defer to moral reflection knowing that the reflection outcome could be under-defined. For instance, all the following options are unobjectionable:

- People can decide not to have life goals. (For a discussion on the merits and drawbacks of adopting life goals, see the “Why have life goals?” section in my previous post.)

- Some people with the indirect life goal of deferring to moral reflection may be comfortable in accepting the possibility of an under-defined reflection outcome. For instance, they may care primarily about figuring out the correct moral theory in worlds where moral realism is true. By valuing open-minded moral reflection, they stay receptive to the recognizable features of the true moral reality, should it exist. I call this approach “wagering on moral realism.” I will discuss it in the next section.

- People may feel indifferent about the parts of morality contested among experts and not deem it essential that their moral views fulfill all the axioms about “rational preferences.”[18]

Wagering on moral realism

A common intuition says that if morality is under-defined, what we do matters a lot less. People with this intuition may employ the strategy “Wagering on moral realism” – they might act as though moral realism is true despite suspecting that it isn’t.

There are two wagers for two types of moral realism.

I have already discussed the wager for irreducible normativity and why I advise against it – see this post (“Why the Irreducible Normativity Wager (Mostly) Fails”) and its supplement (“Metaethical Fanaticism”).

I’ll focus on the wager that targets naturalist moral realism in the remaining sections.[19]

Reflection helps with uncovering the “true morality”…

The wager for naturalist moral realism is a wager for deferring strongly to moral reflection. By keeping ourselves as open-minded as possible (“avoiding forming convictions”) in the earlier stages of our moral deliberations, we maximize the chances of uncovering the moral reality inside the reflection procedure.

… and with many other things

That said, open-minded reflection also makes sense for other reasons, including some assumptions under moral anti-realism. (See the considerations I listed in the section “Empirical expectations.”) Wagers are only of practical significance if they cause us to act differently. Therefore, to assess the force of the naturalist moral realism wager, we have to investigate situations where someone decides against wanting more reflection based on their metaethical beliefs. These will generally be situations where the person feels like they have a solid grasp of the moral option space and already have convictions related to what to value. (If they didn’t already feel that way, moral reflection would be in their best interest already, regardless of their take on realism vs. anti-realism.)

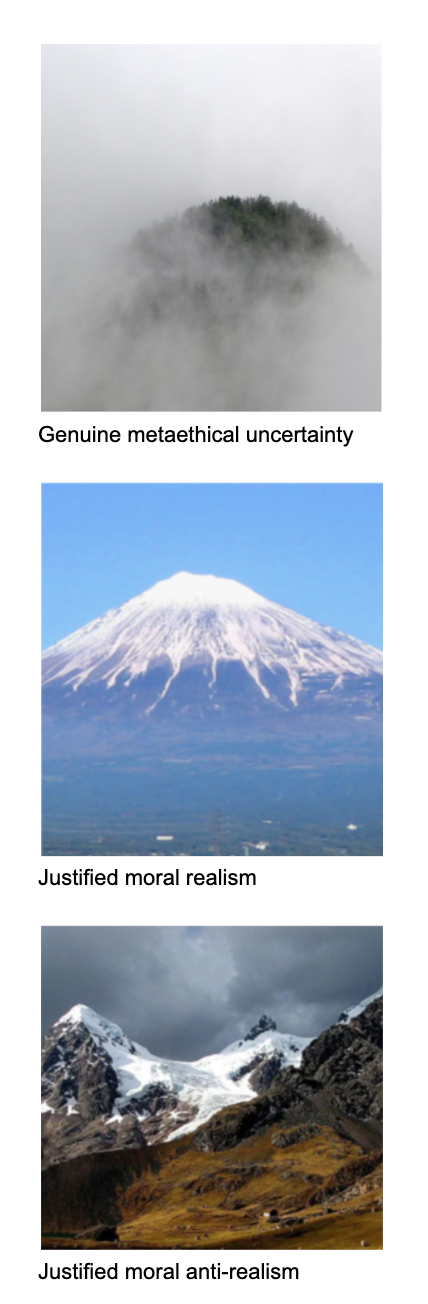

Genuine uncertainty, justified realism, justified anti-realism: What those epistemic states “look like”

In this subsection, I’ll introduce an analogy with the help of pictures. That analogy aims to describe what the corresponding epistemic states (“what we believe we know about the moral option space”) would look like for various metaethical positions.

(Image sources[20])

In the analogy, the fields of vision represent how the moral option space appears to someone based on everything the person knows. Clearly visible mountains represent complete and coherent moral frameworks. Rocks/mountain parts in the analogy represent salient features in the moral option space. (If all the rocks/mountain parts form a whole and unmistakable mountain, moral realism is true.)

In the first picture (“Genuine metaethical uncertainty”), a wall of fog hides the degree to which the “mountain space” (the moral option space) behind it has structure. Given the obscured field of vision, the appropriate metaethical position is uncertainty between realism and anti-realism.

In the second picture (“Justified moral realism”), we grasp the moral option space and can tell nothing is left to interpretation. One would have to squint and interpret things strangely to come away with a conclusion other than that there’s a single, well-specified mountain.

In the third picture (“Justified moral anti-realism”), our view over the option space is also clear, but the image before us is a more complicated one: we see multiple mountains. So the question “Which mountain is the correct one?” doesn’t make sense – there are several defensible options.

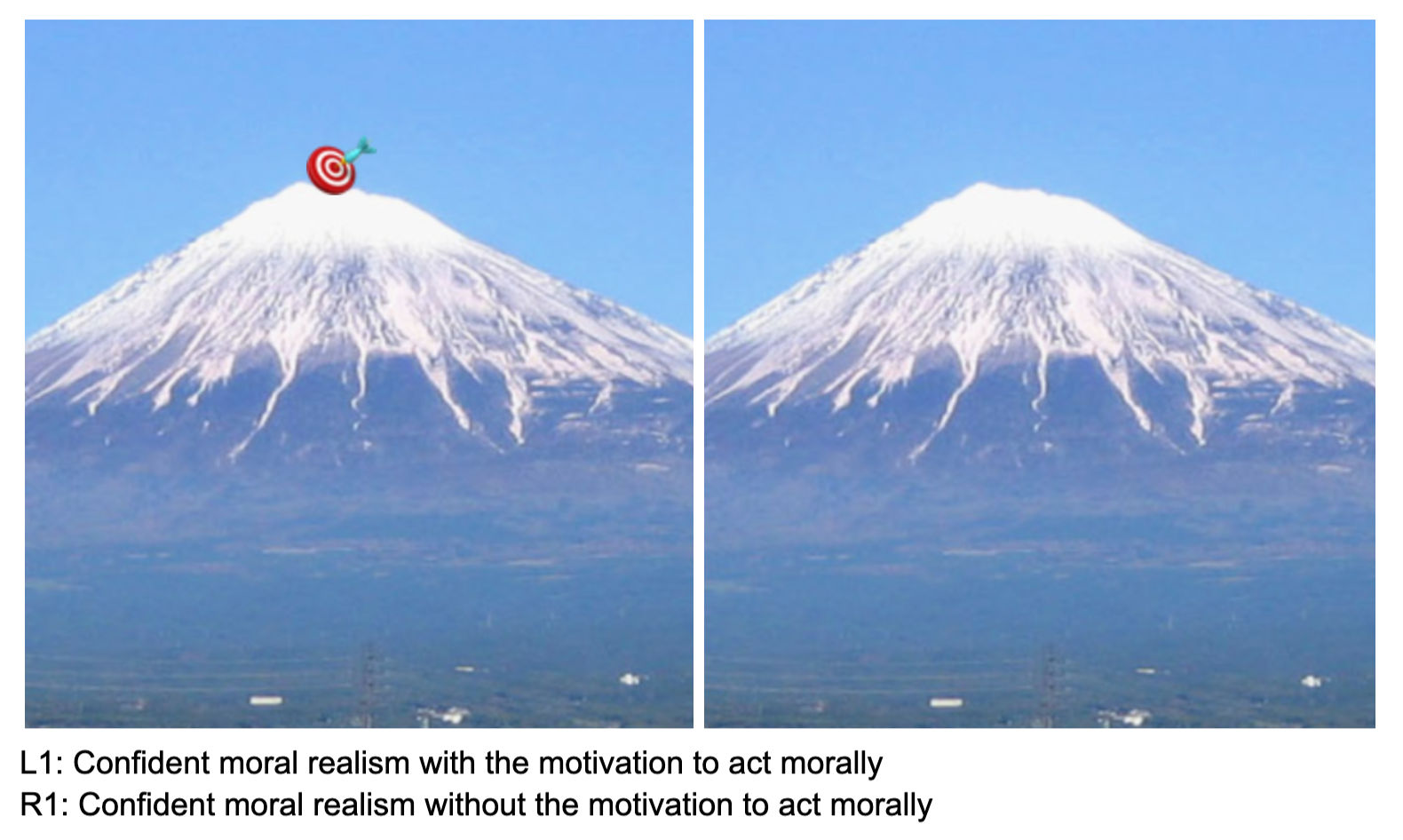

Moral motivation

In my post on life goals, I distinguished between morality as an academic interest and morality as a life goal. The distinction is particularly relevant in the context of the moral realism wager. There would be no naturalist moral realism wager for someone only interested in morality as an academic interest. The wager comes into play for people who are motivated to act morally. (By that, I mean that they want to adopt a life goal (or life goal component – it’s possible to have multiple life goals) about doing what’s best for others, taking an “impartial perspective.”)

Below, I will add arrows to the above pictures to illustrate how the motivation to act morally interacts with various perceptions of the moral option space.

(“L” stands for “left;” “R” stands for “right.”)

In the moral realism picture, the situation is straightforward. People either have moral motivation (L1), or they don’t.

(Image source[21])

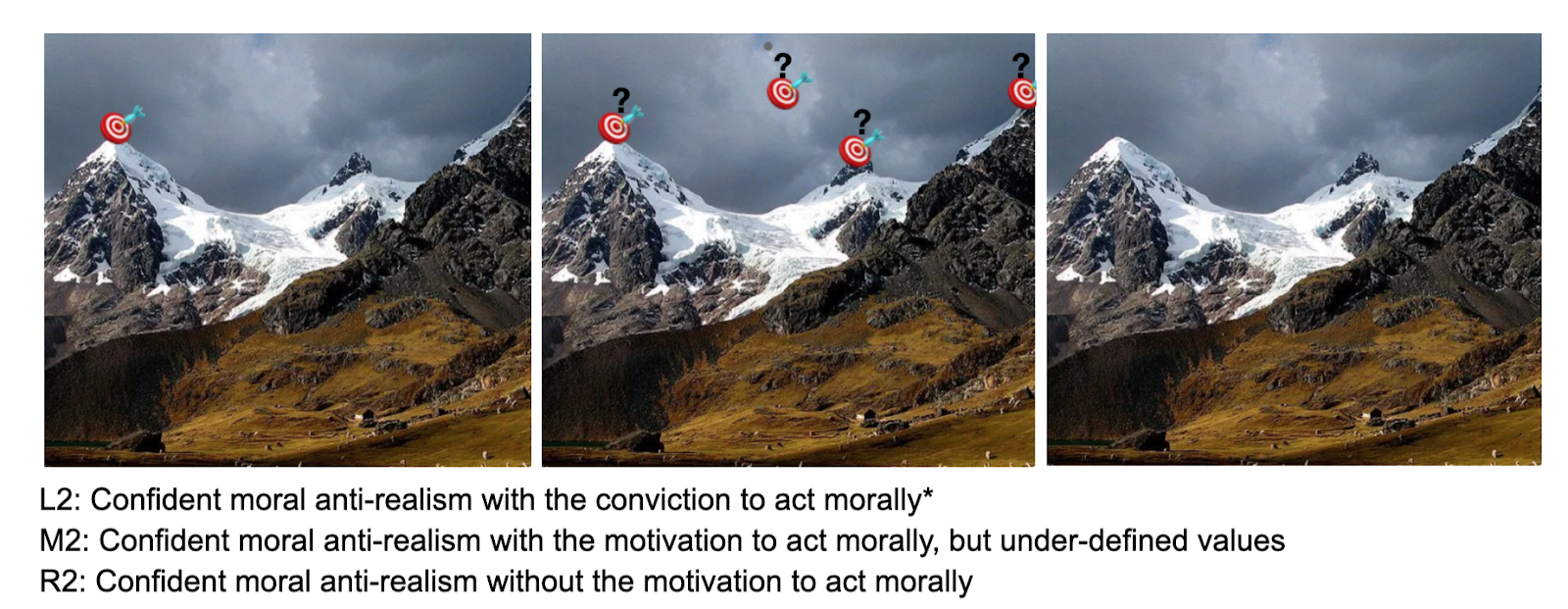

(“M” stands for “middle.”)

By contrast, if our mental picture suggests moral anti-realism, then there are more options, and the situation becomes more unusual.

One option (L2) is that someone may be aware of “other mountains” (other coherent and complete moral positions), but the person may have already found their mountain. This means that the person already has a well-specified life goal inspired by morality. Being convinced of moral anti-realism (and justifiably so if they are representing the option space correctly!), they can maintain that conviction despite knowing that others wouldn’t necessarily share it.

To give an analogy, consider someone motivated to improve their athletic fitness by steering toward the ideal body of a marathon runner, knowing perfectly well that other people may want to be “athletically fit” in the way of a 100m-sprinter instead. Since “athletic fitness” can be cashed out in multiple ways, it is defensible for different athletes to dedicate their training efforts in different directions. (See also the dialogue further below for a more detailed discussion of that analogy.)

A second option is the following (M2). Upon learning that there are multiple mountains, someone may feel indecisive or disoriented. When the person envisions adopting one of the defensible moral positions, the choice feels arbitrary. Maybe they even prefer to go with a mixture of views, such as, for instance, a moral parliament approach that gives weight to several mountains. (Note that using a moral parliament approach here wouldn’t reflect any factual uncertainty; instead, it would serve as an ad hoc strategy for dealing with indecisiveness.)

Thirdly, it’s possible (R2) for a person to see that there are multiple mountains without having any motivation to make one of them “theirs.” Instead, the person may hold exclusively non-moral life goals (or no life goals).

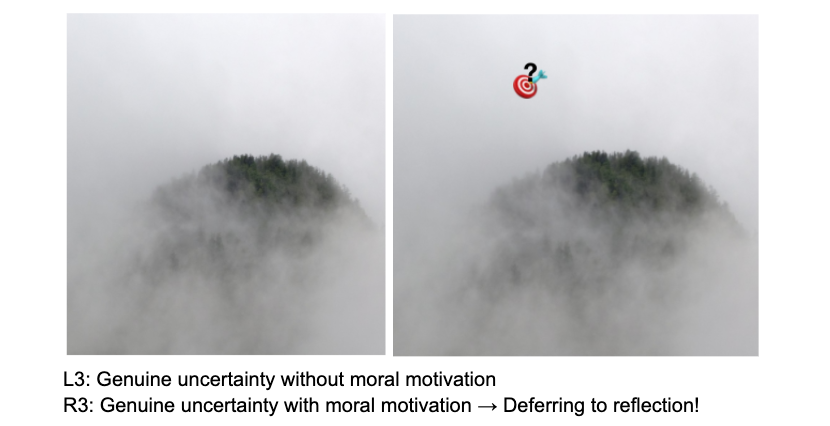

(Image source[22])

Lastly, we can picture how moral motivation interacts with genuine metaethical uncertainty. In the scenario where such motivation is present (R3), the motivation to act morally has to point at some fog (at least partly). In that situation, one would want to defer to moral reflection to better understand the option space.

How to end up with under-defined values

With the mountain analogy, we can now talk about what happens when people unintentionally end up with under-defined values. Starting at R3, unintentionally under-defined values can come about in two ways. First, someone could go from R3 to M2 via moral reflection. (“The fog goes away, but no answer jumps out at them.”) Alternatively, someone’s reflection procedure could take them from R3 to something like L2, except that the person’s moral motivation could point at different mountains after different runs of the reflection, depending on subtle changes to their reflection strategy (or execution thereof).

“Grasping the moral option space” comes in degrees

The mountain pictures in the analogy represent our map of the moral option space, not the territory. We cannot be sure whether the way we envision the moral option space is accurate or complete. There is always at least some fog – fog generates a pull toward deferring more to moral reflection. The strength of that pull depends on (1) how much fog there is and (2) how much our moral motivation is already attached to a clearly visible mountain(-part) vs. how much it points exclusively at the fog.

The analogy becomes more tricky when we add the possibility that we may have blind spots, hallucinate, or otherwise be mistaken about the mountain space. For instance, maybe we see a bunch of hills, which makes us think that moral anti-realism must be true, but in reality, the hills are just the outskirts of a massive mountain that we fail to recognize in the distance.

Examining the naturalist moral realism wager

Finally, we can now analyze the naturalist moral realism wager. That wager applies where someone has the motivation to act morally and suspects moral anti-realism. There are two ways this situation (this mental picture of the moral option space) could look (M2 and L2).

In M2, picking out a particular mountain feels arbitrary – therefore, we don’t have anything to lose from further reflection. The chance that we’re mistaken about the moral option space, that we should instead be seeing a picture like L1 (or perhaps also L2), is reason enough to want more moral reflection. If the situation looks to us like M2, we might say, “the naturalist moral realism wager goes through for me.”

By contrast, in situation L2, we already have our mountain. We’d be giving up that mountain if we went back to an open-minded moral reflection strategy. If we’re right about the picture as it appears to us, that’s an actual loss! I’ll now argue that the loss would be just as bad as missing out on the “true moral reality.”

Commensurate currencies

The differences between the L1 naturalist moral realism picture and the L2 moral anti-realism picture seem entirely incidental; the two pictures have a commensurate currency in people’s moral motivation.

What I mean by this is the following. Whether a person’s moral convictions describe the “true moral reality” (L1) or “one well-specified morality out of several defensible options” (L2) comes down to other people’s thinking. As far as that single person is concerned, the “stuff” moral convictions are made from remains the same. That “stuff,” the common currency, consists of features in the moral option space that the person considers to be the most appealing systematization of “altruism/doing good,” so much so that they deem them worthy of orienting their lives around. If everyone else has that attitude about the same exact features, then moral realism is true. Otherwise, moral anti-realism is true. The common currency – the stuff moral convictions are made from – matters in both cases.[23]

Conclusion

Unless we already have a conviction to dedicate our efforts to some object-level moral view, the naturalist moral realism wager pushes morally-motivated people toward more moral reflection. Conversely, if the conviction to act according to an object-level morality is already present, then there's no real “wager,” no extra boost in favor of assuming that naturalist moral realism is true. Because the conviction to act according to a given moral theory is the same in both naturalist moral realism[24] and the moral anti-realism I’ve defended in this sequence, neither metaethical view can overpower the other. Instead, how to act under metaethical uncertainty will depend on our respective credences (e.g., low credence in moral realism implies a correspondingly weak reason to defer to moral reflection more than one otherwise would).

Anticipating objections (Dialogue)

In the dialogue below, I try to anticipate and address objections to the mountain analogy and my analysis of the naturalist moral realism wager.

Critic: Why would moral anti-realists bother to form well-specified moral views? If they know that their motivation to act morally points in an arbitrary direction, shouldn’t they remain indifferent about the more contested aspects of morality? It seems that it’s part of the meaning of “morality” that this sort of arbitrariness shouldn’t happen.

Me: Empirically, many anti-realists do bother to form well-specified moral views. We see many examples among effective altruists who self-identify as moral anti-realists. That seems to be what people’s motivation often does in these circumstances.

Critic: Point taken, but I’m saying maybe they shouldn’t? At the very least, I don’t understand why they do it.

Me: You said that it’s “part of the meaning of morality” that arbitrariness “shouldn’t happen.” That captures the way moral non-naturalists think of morality. But in the moral naturalism picture, it seems perfectly coherent to consider that morality might be under-defined (or “indefinable”). If there are several defensible ways to systematize a target concept like “altruism/doing good impartially,” you can be indifferent between all those ways or favor one of them. Both options seem possible.

Critic: I understand being indifferent in the light of indefinability. If the true morality is under-defined, so be it. That part seems clear. What I don’t understand is favoring one of the options. Can you explain to me the thinking of someone who self-identifies as a moral anti-realist yet has moral convictions in domains where they think that other philosophically sophisticated reasoners won’t come to share them?

Me: I suspect that your beliefs about morality are too primed by moral realist ways of thinking. If you internalized moral anti-realism more, your intuitions about how morality needs to function could change.

Consider the concept of “athletic fitness.” Suppose many people grew up with a deep-seated need to study it to become ideally athletically fit. At some point in their studies, they discover that there are multiple options to cash out athletic fitness, e.g., the difference between marathon running vs. 100m-sprints. They may feel drawn to one of those options, or they may be indifferent.

Likewise, imagine that you became interested in moral philosophy after reading some moral arguments, such as Singer’s drowning child argument in Famine, Affluence and Morality. You developed the motivation to act morally as it became clear to you that, e.g., spending money on poverty reduction ranks “morally better” (in a sense that you care about) than spending money on a luxury watch. You continue to study morality. You become interested in contested subdomains of morality, like theories of well-being or population ethics. You experience some inner pressure to form opinions in those areas because when you think about various options and their implications, your mind goes, “Wow, these considerations matter.” As you learn more about metaethics and the option space for how to reason about morality, you begin to think that moral anti-realism is most likely true. In other words, you come to believe that there are likely different systematizations of “altruism/doing good impartially” that individual philosophically sophisticated reasoners will deem defensible. At this point, there are two options for how you might feel: either you’ll be undecided between theories, or you find that a specific moral view deeply appeals to you.

In the story I just described, your motivation to act morally comes from things that are very “emotionally and epistemically close” to you, such as the features of Peter Singer’s drowning child argument. Your moral motivation doesn’t come from conceptual analysis about “morality” as an irreducibly normative concept. (Some people do think that way, but this isn’t the story here!) It also doesn’t come from wanting other philosophical reasoners to necessarily share your motivation. Because we’re discussing a naturalist picture of morality, morality tangibly connects to your motivations. You want to act morally not “because it’s moral,” but because it relates to concrete things like helping people, etc. Once you find yourself with a moral conviction about something tangible, you don’t care whether others would form it as well.

I mean, you would care if you thought others not sharing your particular conviction was evidence that you’re making a mistake. If moral realism were true, it would be evidence of that. However, if anti-realism is indeed correct, then it wouldn’t have to weaken your conviction.

Critic: Why do some people form convictions and not others?

Me: It no longer feels like a choice when you see the option space clearly. You either find yourself having strong opinions on what to value (or how to morally reason), or you don’t.

Fanaticism vs. respecting others' views

Critic: If morality comes in multiple defensible options, isn’t it self-defeating when people form convictions about different interpretations? What about the potential for conflict in cases where the practical priorities of do-gooders diverge? Wouldn’t it be better if we remained moral realists?

Me: People becoming radicalized through moral philosophy seems more dangerous with moral realism. Being a moral anti-realist doesn’t mean you think “anything goes.” As an anti-realist, you believe yourself that there are several defensible options to cash out target concepts like “altruism/doing good impartially.” In that case, why would you think that your preferred option gives you the right to play dirty against others? Admittedly, having a preferred option in the form of a morality-inspired life goal means that you care deeply about doing good by those lights – it’s why you get up in the morning and give your best. So I understand that there’s tension if well-intentioned others work on things you think are bad. Still, people can care deeply about something and even care about it in a moral sense but maintain respect and civility toward well-intentioned others who disagree. The question is what place your personal moral views adopt in your thinking. Are they the only thing that matters to you? Or is there some higher-order “ethics of respect/coordination/cooperation” to which you subordinate your views?

It’s a mark of maturity when people can learn to compromise with well-intentioned and reasonable others.[25] Democrats and Republicans have different convictions, but that doesn’t mean they do right by their lights to play dirty. In the same way someone can have political views and still respect the overarching democratic process, someone can have morality-inspired life goals and still respect other people with different life goals.

As I outlined in the appendix of my last post, there are at least two aspects to morality. (1) What are my life goals? (2) What do I do about others not sharing my life goals? Moral realists may think that a single moral theory answers both questions. After all, if you have the right life goals, those who don’t share your thinking are making a mistake. That’s how moral realism may induce fanaticism. Arguably, if it were more obvious to people that moral realism is likely false, fewer people would go from “I’m convinced of some moral view” to “Maybe I should play dirty against people with other views.”

Selected takeaways: good vs. bad reasons for deferring to (more) moral reflection

To summarize a few takeaways from this post, I made a list of good and bad reasons for deferring (more) to moral reflection. (Note, again, that deferring to moral reflection comes on a spectrum.)

In this context, it’s important to note that deferring to moral reflection would be wise if moral realism is true or if idealized values are “here for us to discover.” In this sequence, I argued that neither of those is true – but some (many?) readers may disagree.

Assuming that I’m right about the flavor of moral anti-realism I’ve advocated for in this sequence, below are my “good and bad reasons for deferring to moral reflection.”

(Note that this is not an exhaustive list, and it’s pretty subjective. Moral reflection feels more like an art than a science.)

Bad reasons for deferring strongly to moral reflection:

- You haven’t contemplated the possibility that the feeling of “everything feels a bit arbitrary; I hope I’m not somehow doing moral reasoning the wrong way” may never go away unless you get into a habit of forming your own views. Therefore, you never practiced the steps that could lead to you forming convictions. Because you haven’t practiced those steps, you assume you’re far from understanding the option space well enough, which only reinforces your belief that it’s too early for you to form convictions.

- You observe that other people’s fundamental intuitions about morality differ from yours. You consider that an argument for trusting your reasoning and your intuitions less than you otherwise would. As a result, you lack enough trust in your reasoning to form convictions early.

- You have an unreflected belief that things don’t matter if moral anti-realism is true. You want to defer strongly to moral reflection because there’s a possibility that moral realism is true. However, you haven’t thought about the argument that naturalist moral realism and moral anti-realism use the same currency, i.e., that the moral views you’d adopt if moral anti-realism were true might matter just as much to you.

Good reasons for deferring strongly to moral reflection:

- You don’t endorse any of the bad reasons, and you still feel drawn to deferring to moral reflection. For instance, you feel genuinely unsure how to reason about moral views or what to think about a specific debate (despite having tried to form opinions).

- You think your present way of visualizing the moral option space is unlikely to be a sound basis for forming convictions. You suspect that it is likely to be highly incomplete or even misguided compared to how you’d frame your options after learning more science and philosophy inside an ideal reflection environment.

Bad reasons for forming some convictions early:

- You think moral anti-realism means there’s no for-you-relevant sense in which you can be wrong about your values.

- You think of yourself as a rational agent, and you believe rational agents must have well-specified “utility functions.” Hence, ending up with under-defined values (which is a possible side-effect of deferring strongly to moral reflection) seems irrational/unacceptable to you.

Good reasons for forming some convictions early:

- You can’t help it, and you think you have a solid grasp of the moral option space (e.g., you’re likely to pass Ideological Turing tests of some prominent reasoners who conceptualize it differently).

- You distrust your ability to guard yourself against unwanted opinion drift inside moral reflection procedures, and the views you already hold feel too important to expose to that risk.

Acknowledgments

Many thanks to Adriano Mannino and Lydia Ward for their comments on this post.

Relevant to many people, at least. People whose only life goal is following irreducible normativity – a stance I’ve called “metaethical fanaticism” in previous posts – wouldn’t necessarily consider their idealized values “personally relevant,” since these values may not track normative truth. ↩︎

For context, these examples describe popular pathways where people discovered effective altruist ideas. In particular, it seems noteworthy how people’s (meta)ethical views cluster geographically and reflect differences in introductory reading pathways. ↩︎

Both indirect normativity and HCH are reflection procedures according to my definition. In HCH, the reflection environment is people thinking inside boxes with varying equipment, decomposing questions or answering subquestions; the reflection strategy is how the people in the boxes actually operate (perhaps guided by an overseer’s manual). In indirect normativity, the reflection environment resembles “My favorite thinking environment,” and the reflection strategy the behavior of the person doing the reflection. ↩︎

These specifications are in some ways the most concrete proposals for ethics desiderata and ethical methodology that I know. The fact that an AI alignment researcher wrote both examples is a testament to Daniel Dennett’s slogan “AI makes philosophy honest.” ↩︎

We can conceive of reflection environments that impose more structure. For instance, a highly structured reflection environment (which would leave little control to people using their reflection strategies) would be a decision tree with pre-specified “yes or no” questions composed by a superintelligent AI advisor. Each set of answers would then correspond to a type/category of values – similar to how a personality quiz might tell you what “type” you are. (I assume that many people, myself included, would not want to undergo such a reflection procedure because it imposes too much structure / doesn’t give us enough understanding to control the outcome. However, it at least has the advantage that it can be designed to reliably avoid outputting fanaticism or unintended reflection results – see the section “Pitfalls of reflection procedures.”) ↩︎

What I mean by “moral-reasoning framework” is similar to what Wei Dai calls “metaphilosophy” – it implies having confidence in a particular metaphilosophical stance and using that stance to form convictions about one’s reasoning methodology or object-level moral views. ↩︎

Insofar as this is possible – in practice, even the most “open-minded” approach to philosophical learning will have some directionality. ↩︎

Some readers may point out that it’s questionable whether metaethical beliefs can be right or wrong. I grant that, when it comes to belief in non-naturalist moral realism, someone who uses irreducibly normative concepts in their philosophical repertoire cannot be wrong per se (irreducible normative facts don’t interact with physical facts, so one isn’t making any sort of empirical claim). Still, I’d argue that their stance could be ill-informed and reflectively inconsistent. If the person thought more carefully about how irreducible normativity is supposed to work as a concept, they might decide to give up on it. Then, when it comes to naturalist moral realism, I grant that not everything that typically goes under the label comes with empirical predictions. However, I’ve argued that a lot of what goes under “naturalist moral realism” is too watered down to count as moral realism proper – it seems identical to what I’m calling “moral anti-realism.” Insofar as we’re talking about moral realism that has important practical implications for effective altruists (“naturalist moral realism worthy of the name,” as I’ve called it), that position does make empirical predictions. Specifically, it predicts convergence among philosophically sophisticated reasoners in what they would come to believe after ideal moral reflection. In this sense, people can hold empirically wrong metaethical beliefs. ↩︎

Note that the option spaces for moral reasoning principles and object-level moral views are vast, and they contain many domains and subdomains, so that people may be certain in some (sub)parts but not others. ↩︎

The possibilities roughly correspond to Wei Dai’s option 4 on the one hand, and his options 5 and 6 on the other hand, in the post Six Plausible Metaethical Alternatives. ↩︎

Note that whether this is good or bad is an open question. It continues to matter what you decide in the reflection procedure. The stakes remain high because your deliberations determine how to allocate your caring capacity. Still, you’re deliberating from a perspective where all is well (“no moral urgency”). Unless you take counteracting measures, you’re more likely to form an identity as “someone who prevents plans for future utopia from going poorly” than “someone who addresses ongoing/immediately foreseeable risks or injustices.” For better or worse, the state of the world in the reflection procedure could change the nature of your moral reflection (as compared to how people adopt moral convictions in more familiar circumstances). ↩︎

One might argue that because these failure modes are avoidable, they shouldn’t count for a normative analysis on the appropriateness of forming convictions early. There’s some merit to this, but I also find myself unsure how to think about this in detail. Arguably, the fact that we’d likely make mistakes inside the reflection procedure isn’t something we can ignore by imagining ourselves as infallible practitioners of reflection. The fact that we aren’t infallible reasoners is an argument against forming convictions now. By the same logic, that we aren’t infallible reflectors is an argument against placing too much hope in open-minded reflection strategies. ↩︎

For instance, someone may be more certain about wanting other-regarding life goals than they feel confident they could reliably set up a reflection procedure for reaching the perfect decision on altruism vs. self-orientedness. Still, that same person could have the converse credences on some subquestion of “altruism/doing good impartially.” For instance, they may prefer open-ended learning about population ethics over locking in specific frameworks or theories. ↩︎

As a counterpoint, consider that while moral progress certainly seems impressive compared to the average past person’s views, it doesn’t necessarily look all that impressive compared to the thinking of Jeremy Bentham, who got many things “right” in the late 18th century already. From Bentham’s Wikipedia article: “He advocated individual and economic freedoms, the separation of church and state, freedom of expression, equal rights for women, the right to divorce, and (in an unpublished essay) the decriminalising of homosexual acts. He called for the abolition of slavery, capital punishment and physical punishment, including that of children. He has also become known as an early advocate of animal rights.” To get a sense for the clarity and moral thrust of Bentham’s reasoning, see this now-famous quote: “The day may come when the rest of the animal creation may acquire those rights which never could have been withholden from them but by the hand of tyranny. The French have already discovered that the blackness of the skin is no reason why a human being should be abandoned without redress to the caprice of a tormentor. It may one day come to be recognised that the number of the legs, the villosity of the skin, or the termination of the os sacrum, are reasons equally insufficient for abandoning a sensitive being to the same fate. What else is it that should trace the insuperable line? Is it the faculty of reason, or perhaps the faculty of discourse? But a fullgrown horse or dog is beyond comparison a more rational, as well as a more conversable animal, than an infant of a day, or a week, or even a month, old. But suppose they were otherwise, what would it avail? The question is not, Can they reason? nor Can they talk? but, Can they suffer?” ↩︎

For instance, the scientific insights summarized in the Lesswrong posts The Neuroscience of Pleasure and Not for the Sake of Pleasure Alone don’t directly imply any specific normative view on well-being. Still, people who used to think about human motivation in overly simplistic terms may have done their moral reasoning with assumptions that turned out to be empirically wrong or naively simplistic. In that case, learning more neuroscience (for instance) could cause them to change their framework. ↩︎

I could imagine that people are more likely to form convictions on matters they consider action-relevant. For instance, my impression is that few people are completely undecided about population ethics, despite it being a notoriously controversial subject among experts. ↩︎

Changing one’s moral-reasoning approach isn’t a forced action, however. Suppose someone’s aversion to forming convictions in the absence of expert agreement is a deeply held principle rather than a consequence of potentially false metaethical beliefs. In that case, the person could continue unchanged. ↩︎

I don’t have a strong view on this but suspect that it is philosophically defensible to reject axioms such as the ones in the von Neumann-Morgenstern utility theorem. (Note that this means I consider full-on realism about rationality implausible.) (By “philosophically defensible,” I mean that people may reject the axioms even after understanding them perfectly. It is uncontroversial that some philosophers reject these axioms, but we can wonder if their thinking might be confused.) ↩︎

I speak of naturalist moral realism the way I idiosyncratically defined it in earlier posts, e.g. here. In particular, note that I’m not making any linguistic claims about the meaning of moral claims. In metaethics, we can distinguish between analyzing moral claims on the linguistic and the substantive levels. The linguistic level concerns what people mean when they make moral claims, whereas the substantive level concerns whether there is a “moral reality.” When I speak of naturalist moral realism, I’m talking about the substantive level. I’ve argued in previous posts that the only way to cash out what “moral reality” could mean, in a way that produces action-relevant implications for effective altruists, is something like “That which is responsible for producing convergence in the judgments of philosophically sophisticated reasoners.” ↩︎

Image sources:

First picture: “Misty Mountain.” Author: XoMEoX. CC Licence (CC BY 2.0). Second picture: Mount Fuji, public domain. Third picture: Hillside of Peruvian Ausangate mountain. Marturius (own work), CC Licence (CC BY-SA 3.0). Edited to add targets and question marks further below. ↩︎

Image source: Hillside of Peruvian Ausangate mountain. Marturius (own work), CC Licence (CC BY-SA 3.0). Adapted in the first and second picture to add targets and question marks. ↩︎

“Misty Mountain.” Author: XoMEoX. CC Licence (CC BY 2.0). Adapted to add target and question mark in the second picture. ↩︎

Moral non-naturalists sometimes object that naturalist moral realism is too watered down. There’s truth to that, arguably. Naturalist moral realism seems close to moral anti-realism. According to naturalist moral realism, the “moral reality” isn’t made up of anything special – it consists of features in the moral option space that philosophically sophisticated reasoners would recognize as the best systematization of “altruism/doing good for others.” From there, it’s only a small step to moral anti-realism, where there are multiple defensible systematizations. ↩︎

When you ask a naturalist moral realist why they want to act morally, their answer cannot be “Because it’s what’s moral.” That’s what a non-naturalist would say. Instead, moral naturalists would refer to the tangible features of morality, and they could describe these features in non-moral terms. That’s why one’s motivation to act morally functions the same way also under moral anti-realism. ↩︎

Of course, if other people would defect against you, either consciously or because they’re self-deceiving, fighting back is usually the sensible option. ↩︎

Wei Dai @ 2024-03-27T04:28 (+4)

We have to make judgment calls about how to structure our reflection strategy. Making those judgment calls already gets us in the business of forming convictions. So, if we are qualified to do that (in “pre-reflection mode,” setting up our reflection procedure), why can’t we also form other convictions similarly early?

- I'm very confused/uncertain about many philosophical topics that seem highly relevant to morality/axiology, such as the nature of consciousness and whether there is such a thing as "measure" or "reality fluid" (and if so what is it based on). How can it be right or safe to form moral convictions under such confusion/uncertainty?

- It seems quite plausible that in the future I'll have access to intelligence-enhancing technologies that will enable me to think of many new moral/philosophical arguments and counterarguments, and/or to better understand existing ones. I'm reluctant to form any convictions until that happens (or the hope of it ever happening becomes very low).

Also I'm not sure how I would form object-level moral convictions even if I wanted to. No matter what I decide today, why wouldn't I change my mind if I later hear a persuasive argument against it? The only thing I can think of is to hard-code something to prevent my mind being changed about a specific idea, or to prevent me from hearing or thinking arguments against a specific idea, but that seems like a dangerous hack that could mess up my entire belief system.

Therefore, it seems reasonable/defensible to think of oneself as better positioned to form convictions about object-level morality (in places where we deem it safe enough).

Do you have any candidates for where you deem it safe enough to form object-level moral convictions?

Lukas_Gloor @ 2024-03-27T14:15 (+2)

Thank you for engaging with my post!! :)

Also I'm not sure how I would form object-level moral convictions even if I wanted to. No matter what I decide today, why wouldn't I change my mind if I later hear a persuasive argument against it? The only thing I can think of is to hard-code something to prevent my mind being changed about a specific idea, or to prevent me from hearing or thinking arguments against a specific idea, but that seems like a dangerous hack that could mess up my entire belief system.

I don't think of "convictions" as anywhere near as strong as hard-coding something. "Convictions," to me, is little more than "whatever makes someone think that they're very confident they won't change their mind." Occasionally, someone will change their mind about stuff even after they said it's highly unlikely. (If this happens too often, one has a problem with calibration, and that would be bad by the person's own lights, for obvious reasons. It seems okay/fine/to-be-expected for this to happen infrequently.)

I say "little more than [...]" rather than "is exactly [...]" because convictions are things that matter in the context of one's life goals. As such, there's a sense of importance attached to them, which will make people more concerned than usual about changing their views for reasons they wouldn't endorse (while still staying open for low-likelihood ways of changing their minds through a process they endorse!). (Compare this to: "I find it very unlikely that I'd ever come to like the taste of beetroot." If I did change my mind on this later because I joined a community where liking beetroot is seen as very cool, and I get peer-pressured into trying it a lot and trying to form positive associations with it when I eat it, and somehow this ends up working and I actually come to like it, I wouldn't consider this to be as much of a tragedy as if a similar thing happened with my moral convictions.)

Also I'm not sure how I would form object-level moral convictions even if I wanted to.

Some people can't help it. I think this has a lot to do with reasoning styles. Since you're one of the people on LW/EA forum who place the most value on figuring out things related to moral uncertainty (and metaphilosophy), it seems likely that you're more towards the far end of the spectrum of reasoning styles around this. (It also seems to me that you have a point, that these issues are indeed important/underappreciated – after all, I wrote a book-length sequence on something that directly bears on these questions, but coming from somewhere more towards the other end of the spectrum of reasoning styles.)

- I'm very confused/uncertain about many philosophical topics that seem highly relevant to morality/axiology, such as the nature of consciousness and whether there is such a thing as "measure" or "reality fluid" (and if so what is it based on). How can it be right or safe to form moral convictions under such confusion/uncertainty?

Those two are some good examples of things that I imagine most or maybe even all people* are still confused about. (I remember also naming consciousness/"Which computations do I care about?" in a discussion we had long ago on the same topic, as an example of something where I'd want more reflection.)

*(I don't say "all people" outright because I find it arrogant when people who don't themselves understand a topic declare that no one can understand it – for all I know, maybe Brian Tomasik's grasp on consciousness is solid enough that he could form convictions about certain aspects of it, if forming convictions there were something that his mind felt drawn to.)

So, especially since issues related to consciousness and reality don't seem too urgent for us to decide on, it seems like the most sensible option here, for people like you and me at least, is to defer.

Do you have any candidates for where you deem it safe enough to form object-level moral convictions?

Yeah; I think there are many other issues in morality/in "What are my goals?" that are independent of the two areas of confusion you brought up. We can discuss whether forming convictions early in those independent areas (and in particular, in areas where narrowing down our uncertainty would already be valuable** in the near term) is a defensible thing to do. (Obviously, it will depend on the person: it requires having a solid grasp of the options and the "option space" to conclude that you're unlikely to encounter view-shaking arguments or "better conceptualizations of what the debate is even about" in the future.)

**If someone buys into ECL, making up one's mind on one's values becomes less relevant because the best action according to ECL is to work on your comparative advantage among interventions that are valuable from the perspective of ECL-inclined, highly-goal-driven people around you. (One underlying assumption here is that, since we don't have much info about corners of the multiverse that look too different from ours, it makes sense to focus on cooperation partners that live in worlds relevantly similar to ours, i.e., worlds that contain the same value systems we see here among present-day humans.) Still, "buying into ECL" already involves having come to actionably-high confidence on some tricky decision theory questions. I don't think there's a categorical difference between "values" and "decision theory," so having confidence in ECL-allowing decision theories already involves having engaged in some degree of "forming convictions."

The most prominent areas I can think of where I think it makes sense for some people to form convictions early:

- ECL pre-requirements (per the points in the paragraph above).

- Should I reason about morality in ways that match my sequence takeaways here, or should I instead reason more like some moral realists would think that we should reason?

- Should I pursue self-oriented values or devote my life to altruism (or do something in between)?

- What do I think about population ethics and specifically the question of "How likely is it that I would endorse a broadly 'downside-focused' morality after long reflection?"

These questions all have implications for how we should act in the near future. Furthermore, they're the sort of questions where I think it's possible to get a good enough grasp on the options and option space to form convictions early.

Altruism vs self-orientedness seems like the most straightforward one. You gotta choose something eventually (including the option of going with a mix), and you may as well choose now because the question is ~as urgent as it gets, and it's not like the features that make this question hard to decide on have much to do with complicated philosophical arguments or future-technology-requiring new insights. (This isn't to say that philosophical arguments have no bearing on the question – e.g., "Famine, Affluence, and Morality," or Parfit on personal identity, contain arguments that some people might find unexpectedly compelling, so there's something that's lost if someone were to make up their mind without encountering those arguments. Or maybe some unusually privileged people would find themselves surprised if they read specific accounts of how hard life can be for non-privileged people, or if they became personally acquainted with some of these hardships. But all these things seem like things that a person can investigate right here and now, without the need to wait for future superintelligent AI philosophy advisors. [Also, some of these seem like they may not just be "new considerations," but actually "transformative experiences" that change you into a different person. E.g., encountering someone who is facing hardship, helping them, and feeling very fulfilled can become the seed you form your altruistic identity around.])

Next, for moral realism vs anti-realism (which is maybe more about “forming convictions on metaphilosophy” than about direct values, but just like with "decision theory vs values," I think "metaphilosophy vs values" is also a fluid/fuzzy distinction), I see it similarly. The issue has some urgent implications for EAs to decide on (though I don't think of it as the most important question), and there IMO are some good reasons to expect that future insights won't make it significantly easier/won't change the landscape in which we have to find our decision. Namely, the argument here is that this is a question that already permeates all the ways in which one would go about doing further reflection. You need to have some kind of reasoning framework to get started with thinking about values, so you can't avoid choosing. There's no "by default safe option." As I argued in my sequence, thinking that there's a committing wager for non-naturalist moral realism only works if you've formed the conviction I labelled "metaethical fanaticism" (see here), while the wager for moral naturalism (see the discussion in this post we're here commenting on) isn't strong enough to do all the work on its own.