Part 1: The AI Safety community has four main work groups: Strategy, Governance, Technical and Movement Building

By PeterSlattery @ 2022-11-25T03:45 (+73)

Epistemic status

Written as a non-expert to develop and get feedback on my views, rather than to persuade. It will probably be somewhat incomplete and inaccurate, but it should provoke helpful feedback and discussion.

Aim

This is the first part of my series ‘A proposed approach for AI safety movement building’. Through this series, I outline a theory of change for AI Safety movement building. I don’t necessarily want to immediately accelerate recruitment into AI safety because I take concerns (e.g., 1,2) about the downsides of AI Safety movement building seriously. However, I do want to understand how different viewpoints within the AI Safety community overlap and aggregate.

I start by attempting to conceptualise the AI Safety community. I originally planned to outline my theory of change in my first post. However, when I got feedback, I realised that i) I conceptualised the AI Safety community differently from some of my readers, and ii) I wasn’t confident in my understanding of all the key parts.

TLDR

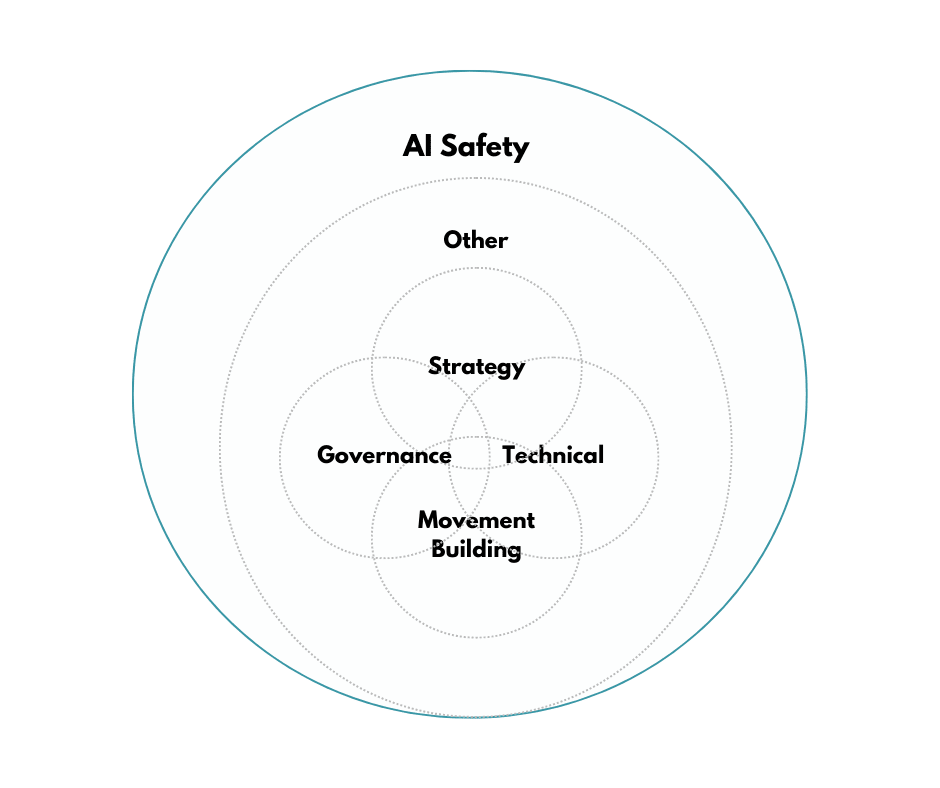

- I argue that the AI Safety community mainly comprises four overlapping, self-identifying groups: Strategy, Governance, Technical and Movement Building.

- I explain what each group does and what differentiates it from the other groups.

- I outline a few other potential work groups.

- I integrate these into an illustration of my current conceptualisation of the AI Safety community.

- I request constructive feedback.

My conceptualisation of the AI Safety community

At a high level of simplification and a low level of precision, the AI Safety community mainly comprises four overlapping, self-identifying groups working to prevent an AI-related catastrophe. These groups are Strategy, Governance, Technical and Movement Building. These are illustrated below.

We can compare the AI Safety community to a government and relate each work group to a government body. I think this helps clarify how the parts of the community fit together (though, of course, the analogies are imperfect).

Strategy

The AI Safety Strategy group seeks to mitigate AI risk by understanding and influencing strategy.

Their work focuses on developing strategies (i.e., plans of action) that maximise the probability that we achieve positive AI-related outcomes and avoid catastrophes. In practice, this includes researching, evaluating, developing, and disseminating strategy (see this for more detail).

They attempt to answer questions such as i) ‘how can we best distribute funds to improve interpretability?’, ii) ‘when should we expect transformative AI?’, or iii) ‘what is happening in areas relevant to AI?’.

Due to a lack of ‘strategic clarity/consensus’, most AI strategy work focuses on research. However, Toby Ord’s submission to the UK parliament is arguably an example of developing and disseminating an AI Safety-related strategy.

We can compare the Strategy group to the Executive Branch of a government, which sets a strategy for the state and parts of the government, while also attempting to understand and influence the strategies of external parties (e.g., organisations and nations).

AI Safety Strategy exemplars: Holden Karnofsky, Toby Ord and Luke Muehlhauser.

AI Safety Strategy post examples (1,2,3,4).

Governance

The AI Safety Governance group seeks to mitigate AI risk by understanding and influencing decision-making.

Their work focuses on understanding how decisions are made about AI and what institutions and arrangements help those decisions to be made well. In practice, this includes consultation, research, policy advocacy and policy implementation (see 1 & 2 for more detail).

They attempt to answer questions such as i) ‘what is the best policy for interpretability in a specific setting?’, ii) ‘who should regulate transformative AI and how?’, or iii) ‘what is happening in areas relevant to AI Governance?’.

AI strategy and governance overlap in cases where i) the AI Safety Governance group focuses on its internal strategy, or ii) AI Safety Governance work is relevant to the AI Safety Strategy group (e.g., when strategising about how to govern AI).

Outside this overlap, AI Safety Governance is distinct from AI Safety Strategy because it focuses more narrowly on relatively specific and concrete decision-making recommendations (e.g., ‘Organisation X should not export semiconductors to country Y’) rather than on relatively general and abstract strategic recommendations (e.g., ‘we should review semiconductor supply chains’).

We can compare the Governance group to a government’s Department of State, which supports the strategy (i.e., long-term plans) of the Executive Branch by understanding and influencing foreign affairs and policy.

AI Safety Governance group exemplars: Allan Dafoe and Ben Garfinkel.

AI Safety Governance group post examples (see topic).

Technical

The AI Safety Technical group seeks to mitigate AI risk by understanding and influencing AI development and implementation.

Their work focuses on understanding current and potential AI systems (i.e., interactions of hardware, software and operators) and developing better variants. In practice, this includes theorising, researching, evaluating, developing, and testing examples of AI (see this for more detail).

They attempt to answer questions such as i) ‘what can we do to improve interpretable machine learning?’, ii) ‘how can we build transformative AI safely?’, or iii) ‘what is happening in technical areas relevant to AI Safety?’.

AI technical work overlaps with AI strategy work in cases where i) the AI Technical group focuses on its internal strategy, or ii) AI Safety technical work is relevant to the AI Safety Strategy group (e.g., when strategising about how to prevent AI from deceiving us).

AI technical work overlaps with AI governance work where AI Safety technical work is relevant to decision-making and policy (e.g., policy to reduce the risk that AI will deceive us).

Outside these overlaps, AI Safety technical work is distinct from AI governance and strategy because it focuses on technical approaches (e.g., how to improve interpretable machine learning) rather than strategic approaches (e.g., how to distribute funds to improve interpretability) or governance approaches (e.g., what the best policy for interpretability is in a specific setting).

We can compare the Technical group to the US Cybersecurity and Infrastructure Security Agency (CISA), which supports the strategy (i.e., long-term plans) of the Executive Branch by identifying and mitigating technology-related risks. While CISA does not directly propose or drive external strategy or policy, it can have indirect influence by shaping the strategy of the Executive Branch and the policy of the Department of State.

AI Safety Technical group exemplars: Paul Christiano, Buck Schlegeris and Rohin Shah.

AI Safety Technical group post examples (1).

Movement Building

The AI Safety Movement Building group seeks to mitigate AI risk by helping the AI Safety community to succeed.

Their work focuses on understanding, supporting and improving the AI Safety community. In practice, this includes activities to understand community needs and values such as collecting and aggregating preferences, and activities to improve the community such as targeted advertising, recruitment and research dissemination. See 1 & 2 for more detail.

They attempt to answer questions such as i) ‘what does the AI Safety community need to improve its work on interpretable machine learning?’, ii) ‘when does the AI Safety community expect transformative AI to arrive?’, or iii) ‘what is happening within the AI Safety community?’.

AI Movement Building work overlaps with the work of the other groups where i) Movement Building work is relevant to their work, or ii) the other groups' work is relevant to Movement Building. It also overlaps with Strategy when the Movement Building group focuses on its internal strategy.

Outside these overlaps, movement building work is distinct from the work of other groups because it is focused on identifying and solving community problems (e.g., a lack of researchers, or coordination) rather than addressing strategy, governance or technical problems.

We can compare the Movement Building group to the Office of Administrative Services and USAJOBS, organisations that support the strategy (i.e., long-term plans) of the Executive Branch by identifying and solving resource and operational issues in government. Such organisations do not manage strategy, policy, or technology but indirectly affect each via their impacts on the Executive Branch, Department of State and CISA.

AI Safety Movement Building group exemplars: Jamie Bernardi, AW, and Thomas Larsen.

AI Safety Movement Building group post examples (1).

Outline of the major work groups within the AI safety community

| Group | Focus | Government Analog |
| --- | --- | --- |
| Strategy | Developing strategies (i.e., plans of action) that maximise the probability that we achieve positive AI-related outcomes and avoid catastrophes | An Executive Branch (e.g., Executive Office of the President) |
| Governance | Understanding how decisions are made about AI and what institutions and arrangements help those decisions to be made well | A Foreign Affairs Department (e.g., Department of State) |
| Technical | Understanding current and potential AI systems (i.e., interactions of hardware, software and operators) and developing better variants | A Technical Agency (e.g., CISA) |
| Movement Building | Understanding, supporting and improving the AI Safety community | A Human Resources Agency (e.g., USAJOBS) |

Many people in the AI Safety community are involved in more than one work group. In rare cases, someone might have involvement in all four. For instance, they may do technical research, consult on strategy and policy and give talks to student groups. In many cases, movement builders are involved with at least one of the other work groups. As I will discuss later in the series, I think that cross-group involvement is beneficial and should be encouraged.

Other work groups

I am very uncertain here and would welcome thoughts/disagreement.

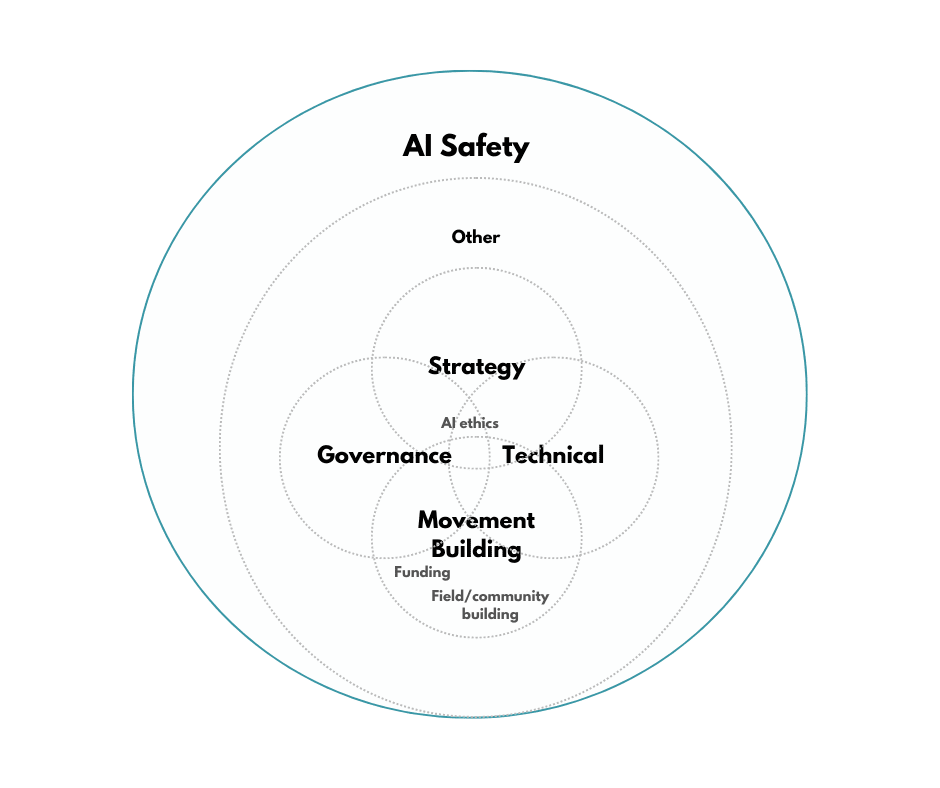

Field-builders

Field-building refers to influencing existing fields of research or advocacy or developing new ones, through advocacy, creating organisations, or funding people to work in the field. I regard field-building as a type of movement building with a focus on academic/research issues (e.g., increasing the number of supportive researchers, research institutes and research publications).

Community builders

I treat community building as a type of movement building focused on community growth (e.g., increasing the number of contributors and the sense of connection within the AI Safety community).

AI Funders

I regard any AI safety-focused funding as a type of movement building (potentially at the overlap of another work group), focused on providing financial support.

AI Ethics

The ethics of artificial intelligence is the study of the ethical issues arising from the creation of artificial intelligence. I regard safety-focused AI ethics as a subset of strategy, governance and technical work.

Summary of my conceptualisation of the AI Safety community

Based on the above, I conceptualise the AI Safety community as shown below.

Feedback

Does this all seem useful, correct and/or optimal? Could anything be simplified or improved? What is missing? I would welcome feedback.

What next?

In the next post, I will suggest three factors/outcomes that AI Safety Movement Building should focus on: contributors, contributions and coordination.

Acknowledgements

The following people helped review and improve this post: Amber Ace, Bradley Tjandra, JJ Hepburn, Greg Sadler, Michael Noetel, David Nash, Chris Leong, and Steven Deng. All mistakes are my own.

This work was initially supported by a grant from the FTX Regranting Program to allow me to explore learning about and doing AI safety movement building work. I don’t know if I will use it now, but it got me started.

Support

Anyone who wants to support me to do more of this work can help by offering:

- Feedback on this and future posts

- A commitment to reimburse me if I need to repay the FTX regrant

- Any expression of interest in potentially hiring or funding me to do AI Safety movement building work.

AlexanderSaeri @ 2022-11-25T04:12 (+13)

Thanks Peter! I appreciate the work you've put in to synthesising a large and growing set of activities.

Nicholas Moes and Caroline Jeanmaire wrote a piece, A Map to Navigate AI Governance, which set out Strategy as 'upstream' of typical governance activities. Michael Aird in a shortform post about x-risk policy 'pipelines' also set (macro)strategy upstream of other policy research, development, and advocacy activities.

One thing that could be interesting to explore is the current and ideal relationships between the work groups you describe here.

For example, in your government analogy, you describe Strategy as the executive branch, and each of the other work groups as agencies, departments, or specific functions (e.g., HR), which would be subordinate.

Does this reflect your thinking as well? Should AI strategy workers/organisations be deferred to by AI governance workers/organisations?

PeterSlattery @ 2022-11-25T04:52 (+3)

> For example, in your government analogy, you describe Strategy as the executive branch, and each of the other work groups as agencies, departments, or specific functions (e.g., HR), which would be subordinate.

> Does this reflect your thinking as well? Should AI strategy workers/organisations be deferred to by AI governance workers/organisations?

Thanks Alex! I agree that it could be interesting to explore the current and ideal relationships between the work groups. I'd like to see that happen in the future.

I think that deferring sounds a bit strong, but I suspect that many workers/organisations in AI governance (and in other work groups) would like strategic insights from people working on AI Strategy and Movement Building. For instance, on questions like:

- What is the AI Safety community's agreement with/enthusiasm for specific visions, organisations and research agendas?

- What are the key disagreements between competing visions for AI risk mitigation and the practical implications?

- Which outcomes are good metrics to optimise for?

- Who is doing/planning what in relevant domains, and what are the practical implications for the plans of a given subset of workers/organisations?

With that said, I don't really have any well-formed opinions about how things should work just yet!

gergogaspar @ 2022-11-30T19:18 (+4)

Thanks for writing this up!

As someone who is getting started in AIS movement building, this was great to read!

> i) I conceptualised the AI Safety community differently from some of my readers

I would be curious: how does your take differ from others' takes?

I have read Three pillars for avoiding AGI catastrophe: Technical alignment, deployment decisions, and coordination and I feel the two posts are trying to answer slightly different questions but would be keen to learn about some other way people have conceptualised the problem.

PeterSlattery @ 2022-12-07T08:22 (+3)

Hey, thanks for asking about this! I am writing this in a bit of a rush, so sorry if it's disjointed!

Yeah, I don't really have a good answer for that question. What happened was that I asked for feedback on an article outlining my theory of what AI Safety movement building should focus on.

The people who responded started mentioning terms like funding, field building, community building and 'buying time'. I was unsure if/where all of these concepts overlapped with AI community/movement building.

So it was less that I noticed clear and specific differences between my take and other people's takes, and more that I felt unclear about both.

When I posted this, it was partially to test my ideas on what the AI Safety community is and how it functions and learn if people had different takes from me. So far, Jonathan Claybrough is the only one who has offered a new take.

I am not sure how my take aligns with Three pillars for avoiding AGI catastrophe: Technical alignment, deployment decisions, and coordination etc.

At this stage, I haven't really been able to read and integrate much of the general perspective on what the AI Safety community as a whole should do. I think that this is OK for now anyway, because I want to focus on the smaller space of what movement builders should think about.

I am also unsure if I will explore the macro strategy space much in the future because of the trade-offs. I think that in the long term, people are going to need to think about strategy at different levels of scope (e.g., around governance, overall strategy, movement building in governance etc). It's going to be very hard for me to have a high fidelity model of macro-strategy and all the concepts and actors etc while also really understanding what I need to do for movement building.

I therefore suspect that in the future, I will probably rely on an expert source of information to understand strategy (e.g., what do these three people or this community survey suggest I should think?) rather than try to develop my own in-depth understanding. It's perhaps like how a recruiter for a tech company will probably just rely on a contact to learn what the company needs from its hires, rather than understanding the company's structure and goals in great detail. However, I could be wrong about all of that and may change my mind once I get more feedback!

gergogaspar @ 2022-12-07T14:55 (+3)

Fair enough, thank you!

Jonathan Claybrough @ 2022-11-27T01:08 (+3)

You explained the difference between strategy and governance as governance being the more specific of the two, but I'm not sure it's a good idea to separate and specialise in that way here. What good does it bring to have governance separated from strategy? Should experts in governance not communicate directly with experts in strategy (e.g., should they only interface through public reports passed from one group to the other)?

It seems to me that governance was already a field thinking about the overall strategy as well as specific implementations. I personally think of AI safety as Governance, Alignment and field building, and the current post doesn't give me a reason to update that.

PeterSlattery @ 2022-11-28T02:01 (+3)

Thanks for commenting, Jonathan, it was helpful to hear your thoughts.

Sorry if I have been unclear. This post is not intended to show how I think things should be. I am instead trying to show how I think things are right now.

When doing that, I didn't mean to suggest that experts in governance don't communicate directly with experts in strategy.

As I said: "Many people in the AI Safety community are involved in more than one work group." I also tried to show that there is an overlap in my Venn diagram.

> I personally think of AI safety as Governance, Alignment and field building, and with the current post I don't see why I should update that.

I think that this is fine, and perhaps correct. However, right now, I still think that it is useful to tease those areas apart.

This is because I think that a lot of current work is related to strategy but not to governance or the other groups you mentioned. Examples include work on questions like “What is happening in areas relevant to AI?” or on how we should forecast progress based on biological anchors.

Let me know what you think.