How could we have avoided this?

By Nathan Young @ 2022-11-12T12:45 (+116)

It seems to me that betting so heavily on FTX and SBF was an avoidable failure. So what could we have done ex ante to avoid it?

You have to suggest things we could have actually done with the information we had. Some examples of information we had:

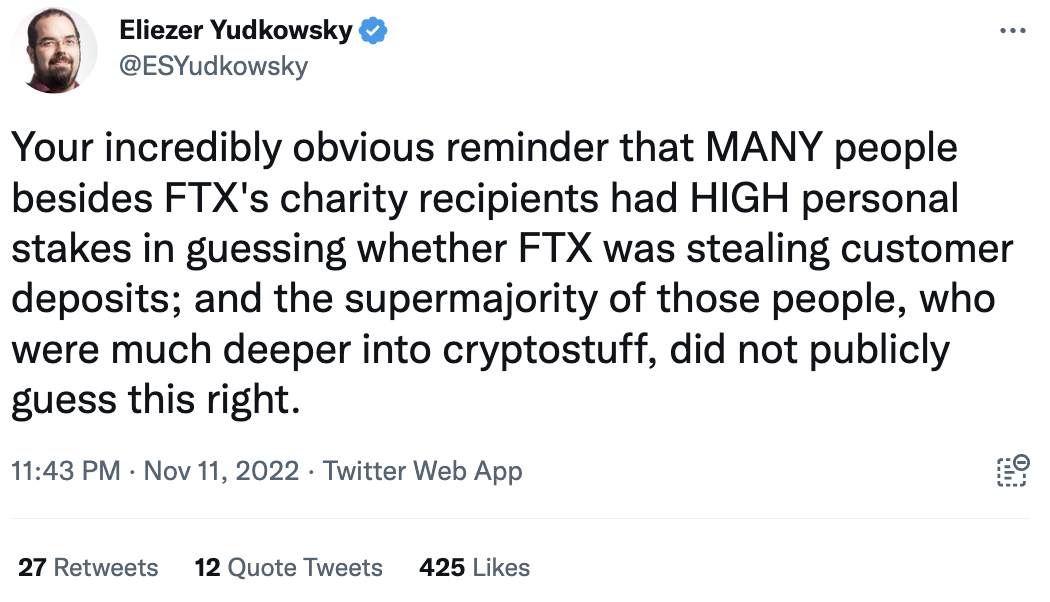

First, the best counterargument:

Then again, if we think we are better at spotting x-risks than these people, maybe this should make us update towards being worse at predicting things.

Also I know there is a temptation to wait until the dust settles, but I don't think that's right. We are a community with useful information-gathering technology. We are capable of discussing here.

Things we knew at the time

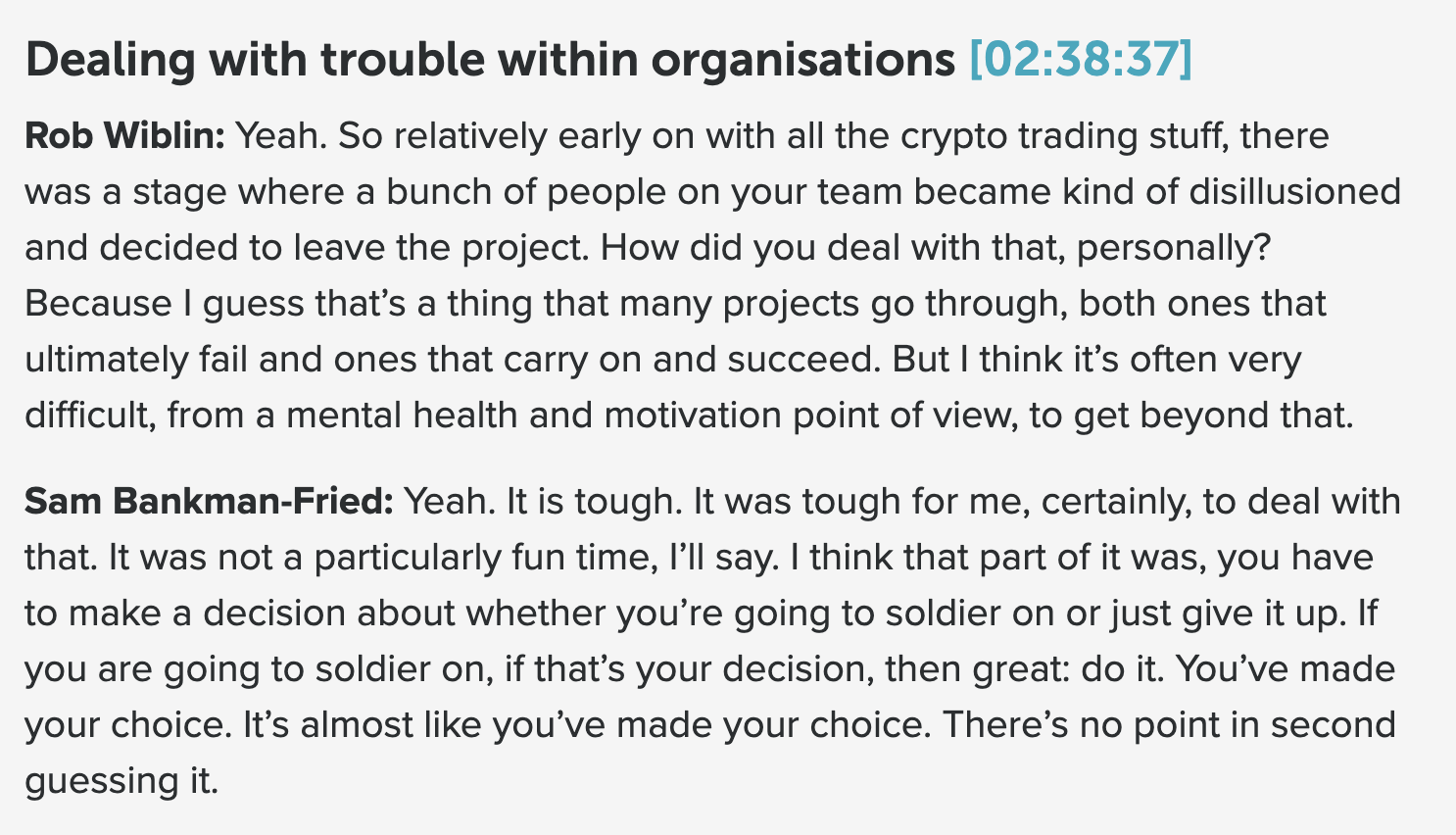

We knew that about half of Alameda left at one time. I'm pretty sure many are EAs or know them and they would have had some sense of this.

We knew that SBF's wealth was a very high proportion of effective altruism's total wealth. And we ought to have known that something that took him down would be catastrophic to us.

This was Charles Dillon's take, but he tweets behind a locked account and gave me permission to tweet it.

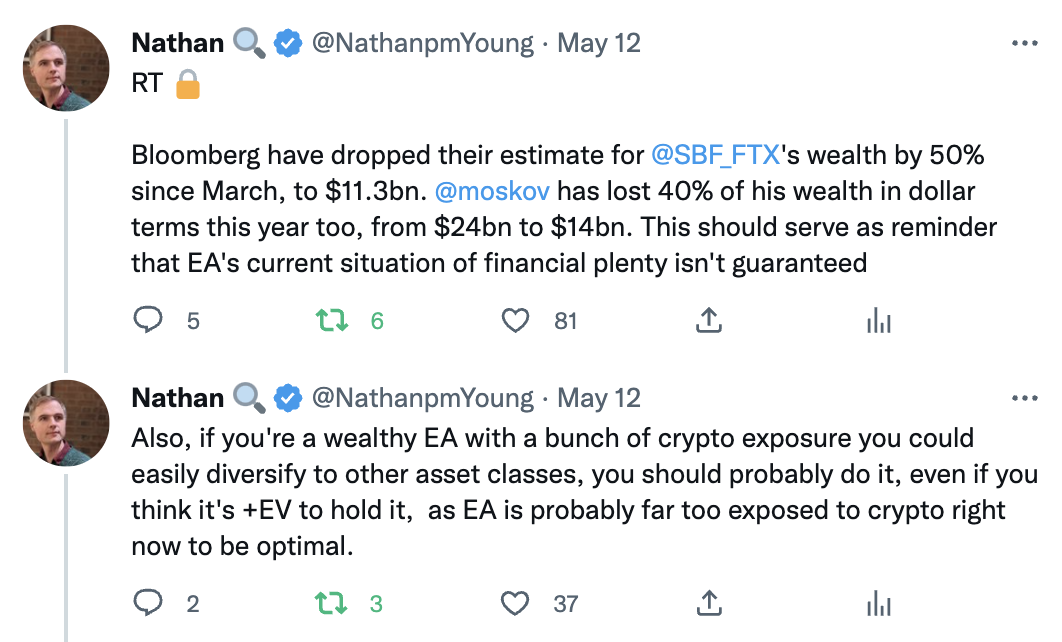

Peter Wildeford noted the possible reputational risk 6 months ago:

We knew that corruption is possible and that large institutions need to work hard to avoid being coopted by bad actors.

Many people found crypto distasteful or felt that crypto could have been a scam.

FTX's Chief Compliance Officer, Daniel S. Friedberg, had behaved fraudulently in the past. This is from August 2021.

In 2013, an audio recording surfaced that made mincemeat of UB’s original version of events. The recording of an early 2008 meeting with the principal cheater (Russ Hamilton) features Daniel S. Friedberg actively conspiring with the other principals in attendance to (a) publicly obfuscate the source of the cheating, (b) minimize the amount of restitution made to players, and (c) force shareholders to shoulder most of the bill.

freedomandutility @ 2022-11-12T14:34 (+95)

Discourage EA billionaires from being public advocates for EA since this makes them the face of EA and increases reputational risk. (Musk tweeted about EA once and seems like the face of EA to some people!)

I’ve seen numerous articles about SBF’s EA involvement and very few about Dustin Moskovitz, so I think Dustin Moskovitz made the right call here.

MichaelStJules @ 2022-11-15T18:13 (+3)

Dustin tweets about EA often. I think the difference is that the public is just generally less interested in him and he doesn't say or do crazy or outrageous things to attract tons of attention. Maybe he's also turning down interviews or avoiding EA in them.

Vasco Grilo @ 2022-11-12T20:12 (+2)

I tend to agree. That being said, it is also important to recognise that Musk being public about EA will eventually push people towards learning more about EA, which is good.

freedomandutility @ 2022-11-12T21:24 (+15)

I don't think Musk talking about EA is clearly net-positive; lots of people are clearly put off EA by the association.

Nathan Young @ 2022-11-11T16:33 (+66)

Updated

We unreasonably narrowed our probability space. As Violet Hour says below, we somehow discounted this whole hypothesis without even considering it. Even a 1% chance of this massive scandal was worth someone thinking about it full-time. And we dropped the ball.

Violet Hour @ 2022-11-12T13:48 (+90)

Upvoted, but I disagree with this framing.

I don't think our primary problem was with flawed probability assignments over some set of explicitly considered hypotheses. If I were to continue with the probabilistic metaphor, I'd be much more tempted to say that we erred in our formation of the practical hypothesis space; that is, the finite set of hypotheses that the EA community considered to be salient, and worthy of extended discussion.

Afaik (and at least in my personal experience), very few EAs seemed to think the potential malfeasance of FTX was an important topic to discuss. Because the topics weren't salient, few people bothered to assign explicit probabilities. To me, the fact that we weren't focusing on the right topics, in some nebulous sense, is more concerning than the ways in which we erred in assigning probabilities to claims within our practical hypothesis space.

Yonatan Cale @ 2022-11-13T08:52 (+28)

(disagree)

Nathan (or anyone who agrees with the comment above), can you even list all the things that, if they have even a 1% chance of failing, are "worth someone thinking about full-time"?

Can you find at least 1 (or 3?) people who'd be competent at looking at these topics, and that you'd realistically remove from their current roles to look at the things-that-might-fail?

Saying you'd look specifically at the FTX-fraud possibility is hindsight bias. In reality, we'd have to look at many similar topics in advance, without knowing which of them is going to fail. Will you bite the bullet?

Yonatan Cale @ 2022-11-13T08:59 (+5)

Reminds me of this post:

https://slatestarcodex.com/2018/05/23/should-psychiatry-test-for-lead-more/

Which I recommend as "A Scott Alexander post that stuck with me and changes the way I think sometimes"

Nathan Young @ 2022-11-13T10:56 (+4)

The failure rate of crypto exchanges is like 5%. I think this was a really large risk.

Neel Nanda @ 2022-11-13T21:53 (+11)

I think that the core scandal is massive fraud, not exchange failure. The base rate of the fraud is presumably lower (though idk the base rates - wouldn't surprise me if in crypto the exchange failure rate was more like 30% and fraud rate was 5% - 5% failure seems crazy low)

Yonatan Cale @ 2022-11-13T12:22 (+9)

Yeah, but this ignores my question.

(For example, how many other things are there with a failure rate of like 5% and that would be very important if they failed, to EA?)

Miguel @ 2022-11-12T14:22 (+10)

Hi Nathan,

Fraud is actually a high-probability event. Over the last 20 years, especially with cases like Enron in 2001 and Lehman Brothers in 2008, traditional institutions (e.g. publicly held corporations or banks) have coped with this through governance boards and internal audit departments.

I think newer industries like crypto, AI and space are slower to adopt these concepts, as new pioneers are setting up management teams that are unaware of past frauds and of the corrupting nature of being able to acquire money and hide or misappropriate it. I can understand why: startups won't spend more money on governance and reviews because these have no direct impact on the overall product or service, and as they get bigger they fail to realize the need to review the controls that worked when they were still small...

I would put fraud risk as high as 10%; it could go higher if the internal controls are weak or non-existent. Who checks SBF, or any of the funders? That is a great thing to start reviewing going forward, in my opinion. The cost of any scandal is so large that it will affect the EA community's ability to deliver its objectives.

All the best,

Miguel

Sharmake @ 2022-11-12T14:13 (+8)

So putting it another way, we failed at recursively applying it to ourselves. We didn't apply the logic of x-risk and GCRs to ourselves, and didn't go far enough.

Vasco Grilo @ 2022-11-12T20:04 (+3)

Yes, as Scott Alexander put it in the context of Covid, a failure, but not of prediction.

And if the risk was 10%, shouldn’t that have been the headline. “TEN PERCENT CHANCE THAT THERE IS ABOUT TO BE A PANDEMIC THAT DEVASTATES THE GLOBAL ECONOMY, KILLS HUNDREDS OF THOUSANDS OF PEOPLE, AND PREVENTS YOU FROM LEAVING YOUR HOUSE FOR MONTHS”? Isn’t that a better headline than Coronavirus panic sells as alarmist information spreads on social media? But that’s the headline you could have written if your odds were ten percent!

Ubuntu @ 2023-03-31T23:57 (+1)

I know some people think EAs should have been far more suspicious of SBF than professional investors, the media etc were, but this stat from Benjamin_Todd nevertheless seems relevant:

in early 2022, Metaculus had a 1% chance of FTX making any default on customer funds over the year with ~40 forecasters

Nathan Young @ 2022-11-12T13:09 (+57)

There have been calls for a proper whistleblowing system within EA. I guess if I'd had someone I could report to as there is for community issues, then perhaps I would have.

I made a prediction market, did a little investigating, but I didn't really see a way to do things with the little suspicions I had. That seems pretty damning.

John_Maxwell @ 2022-11-12T17:38 (+14)

This comment seems to support the idea that a whistleblowing system would've helped: https://forum.effectivealtruism.org/posts/xafpj3on76uRDoBja/the-ftx-future-fund-team-has-resigned-1?commentId=NbevNWixq3bJMEW7b

Miguel @ 2022-11-12T14:31 (+8)

The whistleblower system is a good idea. But Internal Audit Reviews done through International Standards for the Professional Practice of Internal Auditing by an Independent EA Internal Audit Team is still the best solution in ensuring the quality of EA procedures across all EA organizations.

Nathan Young @ 2022-11-12T15:43 (+4)

If you want I'd like to see what you think the most important points of that doc are.

Miguel @ 2022-11-12T16:14 (+16)

Hi Nathan,

Here are the key features from the standards that EA might take seriously if setting up an Internal Audit Team is in its future plans:

- The internal audit activity must be independent, and internal auditors must be objective in performing their work. (Attribute Standards)

- The chief audit executive must report to a level within the organization that allows the internal audit activity to fulfill its responsibilities. The chief audit executive must confirm to the board, at least annually, the organizational independence of the internal audit activity. (Attribute Standards)

- The chief audit executive must effectively manage the internal audit activity to ensure it adds value to the organization. (Performance Standards)

- The internal audit activity must evaluate the effectiveness and contribute to the improvement of risk management processes. (Performance Standards)

There are many more standards that act as safeguards, ensuring that internal audit activity is done objectively and preventing large-scale errors or fraud from happening.

These SOPs in internal audit are proven methods run by almost all large corporations and banks all over the world.

All the best,

Miguel

esc12a @ 2022-11-12T20:55 (+2)

I know very little about these things, but yeah I would think if serious money is involved, it may be good to have someone with experience at one of the Big 4 accounting firms (or similar) have a position in these orgs.

Dancer @ 2022-11-12T13:56 (+8)

I don't know how much effort it would be to set up a "proper" community-wide whistleblowing system across many organisations, but perhaps some low-hanging fruit would be CEA's community health team having a separate anonymous form specifically for anonymous suspicions of this kind that wouldn't require legal protection (as in your case? because you're not an FTX employee?).

Maybe the team already has an anonymous form but I couldn't find it from a quick look at the CEA website, so maybe it should be more prominent, and in any case I think it may be worth encouraging sharing this kind of info more explicitly now.

Aleks_K @ 2022-11-12T15:13 (+11)

It would have to be significantly more independent than the CEA community health team for it to be valuable.

Dancer @ 2022-11-12T15:26 (+3)

Ah yes - makes sense!

Julia_Wise @ 2022-11-22T16:41 (+7)

The community health team does have an anonymous form. Thanks for the observation that it wasn't that easy to find - we'll be working on this.

freedomandutility @ 2022-11-12T14:38 (+50)

Make it clearer that EA is not just classical utilitarianism and that EA abides by moral rules like not breaking the law, not lying and not carrying out violence.

(Especially with short AI timelines and the close to infinite EV of x-risk mitigation, classical utilitarianism will sometimes endorse breaking some moral rules, but EA shouldn’t!)

Pablo @ 2022-11-13T06:45 (+27)

You keep saying that classical utilitarianism combined with short timelines condones crime, but I don't think this is the case at all.

The standard utilitarian argument for adhering to various commonsense moral norms, such as norms against lying, stealing and killing, is that violating these norms would have disastrous consequences (much worse than you naively think), damaging your reputation and, in turn, your future ability to do good in the world. A moral perspective, such as the total view, for which the value at stake is much higher than previously believed, doesn't increase the utilitarian incentives for breaking such moral norms. Although the goodness you can realize by violating these norms is now much greater, the reputational costs are correspondingly large. As Hubinger reminds us in a recent post, "credibly pre-committing to follow certain principles—such as never engaging in fraud—is extremely advantageous, as it makes clear to other agents that you are a trustworthy actor who can be relied upon." Thinking that you have a license to disregard these principles because the long-term future has astronomical value fails to appreciate that endangering your perceived trustworthiness will seriously compromise your ability to protect that valuable future.

freedomandutility @ 2022-11-13T10:27 (+3)

But the short timelines mean the chances of getting caught are a lot smaller because the world might end in 20-30 years. As we get closer to AGI, the chances of getting caught will be smaller still.

freedomandutility @ 2022-11-13T10:30 (+2)

FWIW obviously people with the same utilitarian views will disagree on what is positive EV / a good action under the same set of premises / beliefs.

But even then, I think the chances you condone fraud to fund AI safety are very high under the combo of pure / extreme classical total utilitarianism + longtermism + short AI timelines, even if some people who share these beliefs might not condone fraud.

Vasco Grilo @ 2022-11-12T20:26 (+10)

EA is not just classical utilitarianism

I agree.

classical utilitarianism will sometimes endorse breaking some moral rules

Classical utilitarianism endorses maximising total wellbeing. Whether this involves breaking "moral rules" or not is, in my view, an empirical question. For the vast majority of cases, not breaking "moral rules" leads to more total wellbeing than breaking them, but we should be open to the contrary. There are many examples of people breaking "moral rules" in some sense (e.g. lying) which are widely recognised as good deeds (widespread fraud is obviously not one such example).

freedomandutility @ 2022-11-12T21:28 (+1)

I think classical total utilitarianism + short-ish AI timelines + longtermism unavoidably endorses widespread fraud to fund AI safety research.

Agree that there are examples of when common-sense morality endorses breaking moral rules and even breaking the law, e.g. illegal protests against authoritarian leaders, nationalist violence against European colonialists, and violence against Nazi soldiers.

Nathan Young @ 2022-11-11T16:29 (+44)

A coordination mechanism around rumours.

I now hear that there were many rumours around bad behaviour at Alameda. If there are similar rumours around another EA org or an individual, how will we ensure they are taken seriously?

Had we spent $10M investigating stuff like this, had it only found this one case, it would have been worth it (without second order effects, which are hard).

David Mears @ 2022-11-12T23:21 (+36)

A good policy change should be at least general enough to prevent Ben Delo-style catastrophes too (a criminal crypto EA billionaire from a few years back.) Let's not overfit to this data point!

One takeaway I'm tossing about is adopting a much more skeptical posture towards the ultra-wealthy, by which I mean always having your guard up, even if they seem squeaky-clean (so far). With the ultra-wealthy/ultra-powerful, don't start from a position of trust and adjust downwards when you hear bad stories; do the opposite.

It can’t be right to cut all ties to the super-rich, for all the classic reasons (eg the feminist movement was sped up a lot by the funding which created the pill). Hundreds of thousands of people would die if we did this. Ambitious attempts to fix the world’s pressing problems depend on having resources.

But there are plenty of moderate options, too.

Here are three ways I think learning to mistrust the ultra-wealthy could help matters.

A general posture of skepticism towards power might have helped whenever someone raised qualms about FTX, or SBF himself, such that the warnings wouldn't have fallen on deaf ears and could be pieced together over time.

It would also motivate infrastructure to protect EA against SBFs and Delos: for example, ensuring good governance, and whistleblowing mechanisms, or asking billionaires to donate their wealth to separate entities now in advance of grants being allocated, so we know they're for real / the commitment is good. (Each of these mechanisms might not be a good idea, I don't know, I just mean that the general category probably has useful things in it.)

It would also imply 'stop valorizing them'. If we hadn't got to the point where I have a sticker by my bed reading 'What Would SBF Do?' (from EAG SF 2022*), EAs like me could hold our heads higher (I should probably remove that). Other ways to valorize billionaires include inviting them to events, podcasts, parties, working for them, and doing free PR for them. Let's think twice about that next time.

Not saying 'stop talking to Vitalik', but: the alliance with billionaires has been very cosy, and perhaps it should cool, or become uneasy.

The above is strongly expressed, but I'm mainly trying this view on for size.

*produced by a random attendee rather than the EAG team

EliezerYudkowsky @ 2022-11-13T02:10 (+38)

My current understanding is that Ben Delo was convicted over failure to implement a particular regulation that strikes me as not particularly tied to the goodness of humanity; I know very little about him one way or the other but do not currently have him in my "poor moral standing" column on the basis of what I've heard.

Obvious potential conflicts of interest are obvious: MIRI received OP funding at one point that was passed through by way of Ben Delo. I don't recall meeting or speaking with Ben Delo at any length and don't make positive endorsement of him.

Yitz @ 2022-11-13T00:57 (+4)

I have a sticker by my bed reading 'What Would SBF Do?' (from EAG SF 2022) (I should probably remove that)

Maybe don't remove that; this seems emblematic of a category of mistakes worth remembering, if only so we don't repeat it.

Nathan Young @ 2022-11-12T14:47 (+27)

Hedge on reputation

Even if we took SBF's money at the time we didn't need to double down by connecting ourselves to his reputation. He was just a man. In this way we reinvested capital in something we were already overinvested in.

(This is similar to freedomandutility's point)

EliezerYudkowsky @ 2022-11-13T02:06 (+25)

Well, obvious thought in hindsight #1: Manifold scandal prediction markets with anonymous trading and rolling expiration dates.

I don't know how else you'd aggregate a lot of possible opinions that people possibly might not be incentivized to say openly, into a useful probability estimate that anyone could actually act on.

Nathan Young @ 2022-11-13T03:11 (+12)

I have been suggesting this for like a year, but everyone I talked to told me it would cause problems so I wasn't confident enough to do it unilaterally.

That's no longer the case.

Edouard Harris @ 2022-11-15T02:24 (+5)

Isn't this trivially exploitable? If a market like this existed, then surely my first move as a corrupt billionaire would be to place rolling bets on my own innocence. Seems like I should be able to manipulate the estimate by risking only a small fraction of my charitable allocation in most realistic cases. Especially since the lower the market probability looks, the less likely anyone is to actually investigate me carefully.

EliezerYudkowsky @ 2022-11-15T02:38 (+13)

Standard reply is that a visible bet of this form would itself be sus and would act as a subsidy to the prediction market that means bets the other way would have a larger payoff and hence warrant a more expensive investigation. Though this alas does not work quite the same way on Manifold since it's not convertible back to USD.

Nathan Young @ 2022-11-15T10:29 (+6)

It is by us though. All bets are convertible to charity donations and a lot of effective organisations are on there. So for many EAs it's effectively just cash.

EliezerYudkowsky @ 2022-11-15T22:18 (+2)

Golly, I didn't even realize that.

Lorenzo Buonanno @ 2022-11-15T11:01 (+2)

Honest question: could betting on FTT have been modeled as a prediction market on FTX? (vaguely similar to stock, even with important differences)

I see many crypto tokens as bets on the value of X, and they have been very highly manipulated for the past ~8 years (at least), why would USD prediction markets be different?

Nathan Young @ 2022-11-15T11:37 (+12)

Yes, I think so. And in that sense we were racing against a huge prediction market.

Nathan Young @ 2022-11-12T13:18 (+23)

We were narrow-minded in the kinds of catastrophic risks to look at and so some high-probability risks were ignored because they weren't the right type.

I sense that far more of us are happy to take on the burden of learning more about AI risk than financial risk. I've heard many people trying to upskill in AI. I've never heard someone say they wanted to learn more about FTX's finances to avoid a catastrophic meta risk.

Sabs @ 2022-11-12T16:38 (+22)

Did people in the general EA polysphere know about the FTX polycule environment, and in particular that the CEOs of FTX and Alameda were sleeping together on a regular basis? If so, this probably should have raised a lot of people's estimates of financial misconduct. I wouldn't expect EAs to be especially savvy as to all the weird arbitrage trades in the crypto space and how they could go wrong, so missing that is very forgivable, but I would expect high-level EAs to be extremely aware of who is fucking who.

aogara @ 2022-11-13T01:35 (+17)

Normally I would downvote a claim without evidence. But if this is true, it would help explain why FTX bailed out Alameda using customer funds. And it would have important implications for how EA views conflicts of interest from romantic relationships in other areas.

AI Safety has plenty of romantic relationships between important people. To name just one relationship that has been made fully public and transparent, Holden Karnofsky is married to Daniela Amodei, President of Anthropic and sister to Dario Amodei, an advisor to OpenPhil on AI Safety. I think it’s reasonable to believe that Dario has had more influence over OpenPhil’s institutional views, and that OpenPhil has a higher opinion of Anthropic, than would be true without this romantic relationship.

My perception is that many EAs think romantic relationships don’t often cause problematic conflicts of interest. Sabs’ comment was downvoted heavily for reasons such as Lukas’s below. I have previously drafted posts similar to this one and not published them because of fear of backlash.

I don’t want to name other relationships mostly because it wouldn’t solve the problem. I don’t think romance between coworkers is always wrong — I’m currently dating someone I used to work with, though our relationship only started after she left the company. But when grantmakers are taking advice from or giving grants to people they’re romantically involved with, I think there’s a lot of room for compromised decision making. These conflicts of interest should at least be public, and better yet avoided entirely.

Julia Wise writes about this a bit here: https://forum.effectivealtruism.org/posts/fovDwkBQgTqRMoHZM/power-dynamics-between-people-in-ea

Jason @ 2022-11-13T02:12 (+4)

I'd add that some (maybe even many) potential conflicts of interest can be legitimately waived, but that it is not the decision of the person with the potential conflict to make. Furthermore, the waiver decision should generally be based on the best interests of the organization, employer, community, mission, etc. rather than the conflicted party's own interests.

Lukas Trötzmüller @ 2022-11-12T18:44 (+6)

Downvoted for several reasons: because I would expect colleagues in any work environment to hook up, because I think it's very unkind to assume sexual relations in the workplace are indicative of a problem, because I'm against outing people's sex lives unless directly relevant to a scandal. And finally, because it seems unnecessary to mention polyamory when talking about two people hooking up.

(Retracted after more consideration. I still disagree with the wording of the comment I responded to but can now see it points towards a real problem)

Sabs @ 2022-11-12T18:50 (+40)

This is nonsense. Financial firms typically have strict disclosure rules about relationships between colleagues because ppl will commit fraud out of loyalty to ppl they're fucking. As, y'know, may well have happened here!

Jason @ 2022-11-13T01:54 (+21)

This would be reportable and disqualifying in a lot of industries. For instance, if a prosecutor is having (or recently had) a sexual relationship with a defense attorney, it would be wildly inappropriate for them to be on the same case. It is impossible to maintain objectivity in that kind of dual relationship. A foundation employee should not be evaluating a grant proposal from someone they are sleeping with. And so on. So one doesn't even have to have considered the possibility of fraud to realize how improper this would have been if true.

To generalize your earlier comment, and to probably sound older than I am, it sounds like FTX/Alameda was not at all run in a professionally appropriate or "grown up" manner. How aware was the community of that characteristic more generally?

Nathan Young @ 2022-11-12T18:34 (+3)

I sense there wasn't an FTX polycule. Though disagreevote if you think there was.

Thomas Kwa @ 2024-03-17T19:19 (+2)

There was likely no FTX polycule (a Manifold question resolved 15%) but I was aware that the FTX and Alameda CEOs were dating. I had gone to a couple of FTX events but try to avoid gossip, so my guess is that half of the well-connected EAs had heard gossip about this.

Nathan Young @ 2024-04-18T18:39 (+2)

It resolved to my personal credence so you shouldn’t take that more seriously than “nathan thinks it unlikely that”

Vasco Grilo @ 2022-11-12T20:52 (+1)

I do not know whether there was a polycule environment in FTX. However, if there was and such an environment is materially correlated with financial misconduct, I think it is legitimate to use that as evidence for financial misconduct.

Nathan Young @ 2022-11-12T15:39 (+22)

Set a higher bar for what good business practices are

By lionising someone involved in Ponzi schemes and who handled money they should have known came from crime, we sent a credible signal that it was okay to break norms of morality as long as one didn't get caught.

It should not be entirely surprising they went on to commit fraud.

sawyer @ 2022-11-11T16:35 (+17)

What if there were a norm in EA of not accepting large amounts of funding unless a third-party auditor of some sort has done a thorough review of the funder's finances and found them to be above-board? Obviously lots of variables in this proposal, but I think something like this is plausibly good and would be interested to hear pushback.

Habryka @ 2022-11-12T18:24 (+26)

I disagree with this. I think we should receive money from basically arbitrary sources, but I think that money should not come with associated status and reputation from within the community. If an old mafia boss wants to buy malaria nets, I think it's much better if they can than if they cannot.

I think the key thing that went wrong was that in addition to Sam giving us money and receiving charitable efforts in return, he also received a lot of status and in many ways became one of the central faces of the EA community, and I think that was quite bad. I think we should have pushed back hard when Sam started being heavily associated with EA (and e.g. I think we should have not invited him to things like coordination forum, or had him speak at lots of EA events, etc.)

emre kaplan @ 2022-11-12T18:56 (+9)

I guess it also depends on where the funding is going. If a bloody dictator gives a lot of money to GiveDirectly or another charity that spends the money on physical goods (anti-malaria nets) which are obviously good, then it's still debatable but there's less concern. But if the money is used in an outreach project to spread ideas then it's a terrible outcome. It's similarly dangerous for research institutions.

ChanaMessinger @ 2022-11-12T22:27 (+2)

What's the specific mistake you think was made? Do you think e.g. "being very good at crypto / trading / markets" shouldn't be on its own sufficient to have status in the community?

Edit: Answered elsewhere

AllAmericanBreakfast @ 2022-11-12T21:30 (+2)

"Old mafia don?" How about Vladimir Putin?

I tend to lean in your direction, but I think we should base this argument on the most radioactive relevant modern case.

Habryka @ 2022-11-12T21:51 (+6)

I would be glad to see Putin have less resources and to see more bednets being distributed.

I do think the influence angle is key here. I think if Putin was doing a random lottery where he chose any organization in the world to receive a billion dollars from him, and it happened to be my organization, I think I should keep the money.

I think it gets trickier if we think about Putin giving money directly to me, because like, presumably he wants something in return. But if there was genuine proof he didn't want anything in return, I would be glad to take it, especially if the alternative is that it fuels a war with Ukraine.

AllAmericanBreakfast @ 2022-11-12T21:58 (+9)

Right, I agree that it's good to drain his resources and turn them into good things. The problem is that right now, our model is "status is a voluntary transaction." In that model, when SBF, or in this example VP, donates, they are implicitly requesting status, which their recipients can choose to grant them or not.

I don't think grantees - even whole movements - necessarily have a choice in this matter. How would we have coordinated to avoid granting SBF status? Refused to have him on podcasts? But if he donates to EA, and a non-EA podcaster (maybe Tyler Cowen) asks him, SBF is free to talk about his connection and reasoning. Journalists can cover it however they see fit. People in EA, perhaps simply disagreeing, perhaps because they hope to curry favor with SBF, may self-interestedly grant status anyway. That wouldn't be very altruistic, but we should be seriously examining the degree to which self-interest motivates people to participate in EA right now.

So if we want to be able to accept donations from radioactive (or potentially radioactive) people, we need some story to explain how that avoids granting them status in ways that are out of our control. How do we prevent journalists, podcasters, a fraction of the EA community, and the donor themselves from constructing a narrative of the donor as a high-status EA figure?

Habryka @ 2022-11-12T22:20 (+10)

I don't think grantees - even whole movements - necessarily have a choice in this matter. How would we have coordinated to avoid granting SBF status? Refused to have him on podcasts? But if he donates to EA, and a non-EA podcaster (maybe Tyler Cowen) asks him, SBF is free to talk about his connection and reasoning. Journalists can cover it however they see fit. People in EA, perhaps simply disagreeing, perhaps because they hope to curry favor with SBF, may self-interestedly grant status anyway. That wouldn't be very altruistic, but we should be seriously examining the degree to which self-interest motivates people to participate in EA right now.

I think my favorite version of this is something like "You can buy our scrutiny and time". Like, if you donate to EA, we will pay attention to you, and we will grill you in the comments section of our forum, and in some sense this is an opportunity for you to gain status, but it's also an opportunity for you to lose a lot of status, if you don't hold yourself well in those situations.

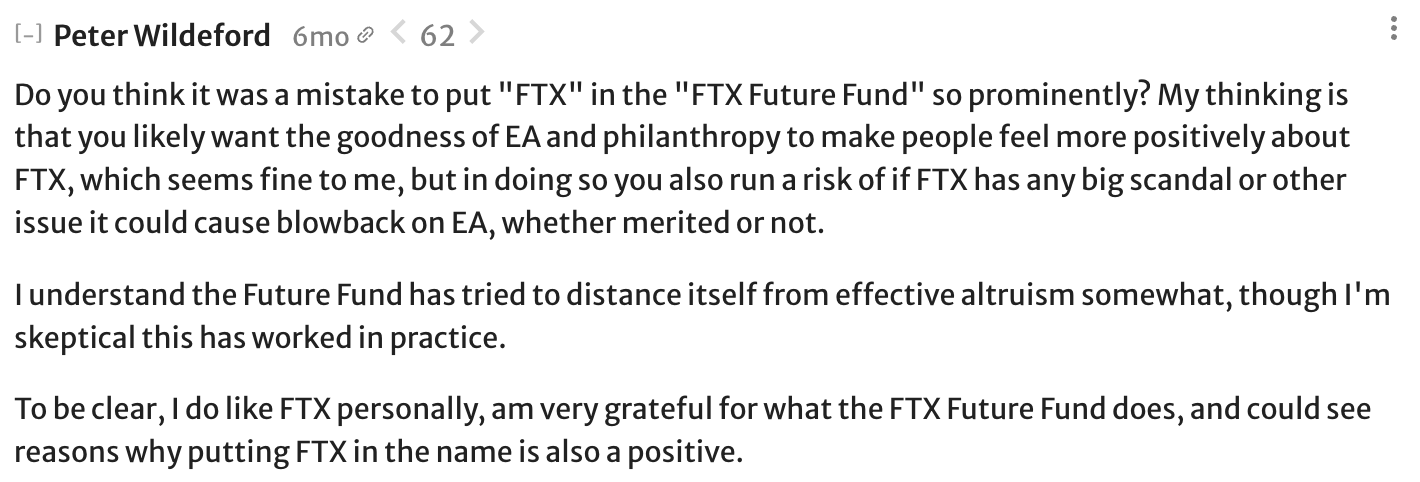

I think a podcast with SBF where someone would have grilled him on his controversial stances would have been great. Indeed, I was actually planning to do a public debate with him in February where I was planning to bring up his reputation for lack of honesty and his involvement in politics that seemed pretty shady to me, but some parts of EA leadership actively requested that I don't do that, since it seemed too likely to explode somehow and reflect really badly on EA's image.

I also think repeatedly stating that we don't think he is a good figurehead of the EA community, not inviting him to coordination forum and other leadership events, etc. would have been good and possible.

Indeed, right now I am involved with talking to a bunch of people about similar situations, where we are associated with a bunch of AI capabilities companies and there are a bunch of people in policy that I don't want to support, but they are working on things that are relevant to us and that are useful to coordinate with (and sometimes give resources to). And I think we could just have a public statement being like "despite the fact that we trade with OpenAI, we also think they are committing a terrible atrocity and we don't want you to think we support them". And I think this would help a lot, and doesn't seem that hard. And if they don't want to take the other side of that deal and only want to trade with us if we say that we think they are great, then we shouldn't trade with them.

Sharmake @ 2022-11-12T22:38 (+7)

I think a podcast with SBF where someone would have grilled him on his controversial stances would have been great. Indeed, I was actually planning to do a public debate with him in February where I was planning to bring up his reputation for lack of honesty and his involvement in politics that seemed pretty shady to me, but some parts of EA leadership actively requested that I don't do that, since it seemed too likely to explode somehow and reflect really badly on EA's image.

This is an issue I have with optimizing for image: you aren't able to speak out against a thought leader because they're successful, and EA optimizing for seeming good is how we got into this mess in the first place.

AllAmericanBreakfast @ 2022-11-12T22:40 (+2)

I support these actions, conditional on them becoming common knowledge community norms. However, it's strictly less likely for us to trade with bad actors and project that we don't support them than it is for us to just trade with bad actors.

Dancer @ 2022-11-12T13:03 (+26)

I don't know much about how this all works but how relevant do you think this point is?

If Sequoia Capital can get fooled - presumably after more due diligence and apparent access to books than you could possibly have gotten while dealing with the charitable arm of FTX FF that was itself almost certainly in the dark - then there is no reasonable way you could have known.

[Edit: I don't think the OP had included the Eliezer tweet in the question when I originally posted this. My point is basically already covered in the OP now.]

elifland @ 2022-11-12T13:56 (+64)

It's a relevant point but I think we can reasonably expect EA leadership to do better at vetting megadonors than Sequoia due to (a) more context on the situation, e.g. EAs should have known more about SBF's past than Sequoia and/or could have found it out more easily via social and professional connections (b) more incentive to avoid downside risks, e.g. the SBF blowup matters a lot more for EA's reputation than Sequoia's.

To be clear, this does not apply to charities receiving money from FTXFF; that is a separate question from EA leadership.

timunderwood @ 2022-11-13T10:59 (+8)

You expect the people being given free cash to do a better job of due diligence than the people handing someone a giant cash pile?

Not to mention that the Future Fund donations probably did more good for EA causes than the reputational damage is going to do harm to them (making the further assumption that this is actually net reputational damage, as opposed to a bunch of free coverage that pushes some people off and attracts some other people).

Nathan Young @ 2022-11-12T14:51 (+4)

Also, to be pithy:

If we are so f*****g clever as to know what risks everyone else misses and how to avoid them, how come we didn't spot that one of our best and brightest was actually a massive fraudster?

PeterMcCluskey @ 2022-11-12T15:41 (+9)

I haven't expected EAs to have any unusual skill at spotting risks.

EAs have been unusual at distinguishing risks based on their magnitude. The risks from FTX didn't look much like the risk of human extinction.

Nathan Young @ 2022-11-12T15:44 (+8)

But half our resources to combat human extinction were at risk due to risks to FTX. Why didn't we take that more seriously?

Lukas_Gloor @ 2022-11-12T18:35 (+5)

And also the community's reputation to a very significant degree. It was arguably the biggest mistake EA has made thus far (or the biggest one that has become obvious – could imagine we're making other mistakes that aren't yet obvious).

Lukas_Gloor @ 2022-11-12T18:32 (+4)

Do you think EAs should stop having opinions on extinction risks because they made a mistake in a different relevant domain (insufficient cynicism in the social realm)? I don't see the logic here.

Dancer @ 2022-11-12T14:15 (+1)

I think a) and b) are good points. Although there's also c) it's reasonable to give extra trust points to a member of the community who's just given you a not-insignificant part of their wealth to spend on charitable endeavours as you see fit.

Note that I'm obviously not saying this implied SBF was super trustworthy on balance, just that it's a reasonable consideration pushing in the other direction when making the comparison with Sequoia who lacked most of this context (I do think it's a good thing that we give each other trust points for signalling and demonstrating commitments to EA).

Greg_Colbourn @ 2022-11-12T18:38 (+4)

The thing is, whilst SBF pledged ~all his wealth to EA causes, he only actually gave ~1-2% before the shit hit the fan. It seems doubtful that any significant amounts beyond this were ever donated to a non-SBF/FTX-controlled separate legal entity (e.g. the Future Fund or FTX Foundation). That should've raised some suspicions for those in the know. (Note this is speculation based on what is said in the Future Fund resignation post; would be good to actually hear from them about this).

AllAmericanBreakfast @ 2022-11-12T21:26 (+2)

The quote you're citing is an argument for abject helplessness. We shouldn't be so confident in our own utter lack of capacity for risk management that we fund this work with $0.

Miguel @ 2022-11-12T14:24 (+1)

This is actually the best practice in banks and publicly held corporations...

AllAmericanBreakfast @ 2022-11-12T20:19 (+16)

I have been quietly thinking "this is crypto money and it could vanish anytime." But I never said it out loud, because I knew people like Eliezer would say the kind of thing Eliezer said in the tweet above: "you're no expert, people way deeper into this stuff than you are putting their life savings in FTX, trust the market." It's a strangely inconsistent point of view from Eliezer in particular, who's expressed that his "faith has been shaken" in the EMH.

What Eliezer's ignoring in his tweet here is that the people who were skeptical of FTX, or crypto generally, mostly just didn't invest, and thus had no particular incentive to scrutinize FTX for wrongdoing. As it turns out, the only people looking closely enough at FTX were their rivals, who may have been doing this strategically in order to exploit vulnerabilities, and thus were incentivized not to spread this information until they were ready to trigger catastrophe. If there's money in scrutinizing a company, there's no money in releasing that information until after you've profited from it.

In my opinion, we need dedicated risk management for the EA community. The express purpose of risk management would be to start with the assumption that markets are not efficient, to brainstorm all the hazards we might face, without a requirement to be rigorously quantitative, to try and prioritize them according to severity and risk, and figure out strategies to mitigate these risks. And to be rude about it.

I think this does point to a serious failure mode within EA. Deference to leadership + insistence on quantitative models + norms of collegiality + lack of formal risk assessment + altruistic focus on other people's problems -> systemic risk of being catastrophically blindsided more than once.

Lukas_Gloor @ 2022-11-12T20:26 (+13)

I have been quietly thinking "this is crypto money and it could vanish anytime."

Yeah but that's different from fraud. I think a 99% crypto crash would've been a lot easier to handle. (While still being very disruptive for EA.)

I feel like the OP ("How could we have avoided this?") is less about the collapse of pledged funding and more about fraud.

AllAmericanBreakfast @ 2022-11-12T21:14 (+8)

I’m not trying to take credit for my silent suspicion. One of the reasons the crypto industry is notorious is fraud. I think that’s a natural case a dedicated risk team could have considered if we’d had one.

Lukas_Gloor @ 2022-11-12T21:39 (+9)

Feel free to take credit for it!

I initially interpreted your comment as being only about things other than fraud. You're right that "person makes a lot of money in crypto" probably boosts the base rate for fraud by more than 10x, so your point is great. I think a lot of people, myself included, thought "surely he wouldn't do anything too blatant (despite crypto)."

AllAmericanBreakfast @ 2022-11-12T21:22 (+5)

Also, the point of risk management isn't to identify, with confidence, what will happen. It almost certainly was not possible to predict FTX's collapse, much less the possibility of fraud, with high confidence.

What we probably could have done is find ways to mitigate that risk. For example, it sounds possible that money disbursed from the Future Fund could be clawed back. Was there an appropriate mechanism by which we could have avoided disbursing money until we were sure that grantees could feel totally secure that this would not happen? In fact, is there a way this could be implemented at other grantmaking organizations?

Could we have put the brakes on incorporating FTX Future Fund as an EA-affiliated grantmaker until it had been around for a while?

There are probably prudent steps we could start taking in the future to mitigate such damages without having to be oracles.

Sam Elder @ 2022-11-14T02:28 (+12)

Let me answer prospectively:

To vet charity destinations, we have GiveWell. Individual donors don't need to do their own research; they can consult GiveWell's recommendations, which include extensive, publicly documented deep dives into those organizations that make fraud overwhelmingly unlikely.

This experience shows that we need something analogous to vet charity sources, ReceiveWell, say. Individual recipients shouldn't need to do their own research; they would consult ReceiveWell's ratings of the funders in question, which would include extensive, publicly documented deep dives into the sources and risks associated with each of them.

freedomandutility @ 2022-11-12T14:39 (+10)

Make it clearer that earning to give shouldn’t involve straightforward EV maximisation because charities / NGOs benefit from certainty and stability, and you shouldn’t make decisions which have a high risk of losing money which has already been promised to charities even if the decision is positive EV.

Vasco Grilo @ 2022-11-12T20:59 (+1)

I agree certainty and stability are quite relevant, but these can be integrated into expected value thinking. So I would say "straightforward money [not EV] maximisation", as "value" should account for the risk of the assets.

freedomandutility @ 2022-11-12T21:31 (+3)

Yes that makes sense to me!

freedomandutility @ 2022-11-12T14:38 (+10)

Make it clearer that EA is not just about maximising expected value since maximising EV will sometimes involve dishonesty and illegality.

Vasco Grilo @ 2022-11-12T20:39 (+8)

Hi,

I agree EA is not just about maximising expected value, but I think that is a great principle. Connecting it to dishonesty and illegality seems pretty bad. Moreover, there are some rare examples where illegal actions are recognised as good, and one should be open to such cases.

Trish @ 2022-11-13T21:41 (+9)

I've commented on a separate post here.

In short: I'm not sure EA could have prevented or predicted this particular event with FTX blowing up.

However, EA did know that its funding sources were very undiversified and volatile, and could have thought more about the risks of an expected source of funding drying up and how to mitigate those risks.

Nathan Young @ 2022-11-12T16:04 (+9)

Have a place to store concerns about stuff like this publicly.

There were lots of people with different information. We could have put it all in the FTX forum article.

freedomandutility @ 2022-11-12T14:41 (+9)

Encourage more EAs to form for-profit start-ups and aim to diversify donors - lots of multi-millionaires > 2 billionaires

Nathan Young @ 2022-11-12T14:48 (+2)

I think I'd aim for just a lot more billionaires but yes, your point stands.

Pat Myron @ 2022-11-12T16:17 (+5)

There are ~2700 billionaires collectively worth ~$13T:

https://en.wikipedia.org/wiki/The_World's_Billionaires

While there are over 50M millionaires collectively worth ~$200T:

https://www.visualcapitalist.com/distribution-of-global-wealth-chart/
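As a rough back-of-the-envelope check, the sketch below works only from the approximate figures quoted above. The variable and function names are illustrative, and the inputs are the rounded numbers from this comment rather than fresh data. Note that the millionaire total presumably includes the billionaires as well, so the two groups overlap.

```python
# Approximate, rounded figures quoted in the comment above (not fresh data).
BILLIONAIRE_COUNT = 2_700
BILLIONAIRE_WEALTH_USD = 13e12      # ~$13T
MILLIONAIRE_COUNT = 50_000_000
MILLIONAIRE_WEALTH_USD = 200e12     # ~$200T


def average_wealth(total_usd: float, count: int) -> float:
    """Mean wealth per person for a group."""
    return total_usd / count


# How many times larger the millionaire pool is than the billionaire pool.
wealth_ratio = MILLIONAIRE_WEALTH_USD / BILLIONAIRE_WEALTH_USD

avg_billionaire = average_wealth(BILLIONAIRE_WEALTH_USD, BILLIONAIRE_COUNT)
avg_millionaire = average_wealth(MILLIONAIRE_WEALTH_USD, MILLIONAIRE_COUNT)

print(f"Millionaire pool is about {wealth_ratio:.1f}x the billionaire pool")
print(f"Average billionaire: about ${avg_billionaire / 1e9:.1f}B")
print(f"Average millionaire: about ${avg_millionaire / 1e6:.1f}M")
```

On these figures the total pool held by millionaires is roughly fifteen times larger, but it is spread across tens of millions of people, so reaching it means many more donor relationships.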

Jesus de Sivar @ 2022-11-13T08:21 (+4)

There was no avoiding this.

The only way would've been with:

- A professional third-party audit, which requires

  - Regulatory norms for avoiding frauds such as these.

- Forbidding SBF from associating himself with EA.

The first option is a non-starter because there are no such crypto regulations.

I don't know about the finances of FTX, but I do know about audits. Auditors work based on norms[1], and those norms get better at predicting new disasters by learning from previous disasters (such as Enron). The same is true of air travel, one of "the safest ways of travel".

The second option is also a non-starter because of ... ¿The First Amendment?

Therefore, we couldn't have "avoided" ... What exactly?

- ... SBF's fraud? or

- ... SBF harming the EA "brand"?

If what we want is to avoid future fraud in the Crypto community, then the goal of the Crypto community should be to replicate the air travel model for air safety[2]:

- Strong (and voluntary) inter-institutional cooperation, and

- A post-mortem of every single disaster in order to incorporate not regulation but "best practices".

However, if the goal is to avoid harming the EA "brand", then there's a profession for that. It's called "Public Relations".

PR is also the reason why big companies have rules that prevent them from (publicly) doing business with people suspected of illegal activities. ("The wife of Caesar must not only be pure, but also be free of any suspicion of impurity.")

For example, EA institutions could from now on:

- Copy GiveDirectly's approach and prevent any single donor from representing more than 50% of their income.

  - Perhaps increase or decrease that percentage, depending on the impact on SBF-supported charities.

- Reject money that comes from Tax Havens.

  - FTX was a business based mainly in The Bahamas.

  - I don't know the quality of the Bahamas' standard for financial audits. In fact, I don't even know if they demand financial audits at all... but I know that The Bahamas is sometimes classed as a Tax Haven, and it is more likely that we find criminals and frauds with money in Tax Havens than outside of them.

- Incentivize their own supported charities to reject dependence on a single donor, and to reject money that comes from Tax Havens.

- Perhaps also...

  - ... Campaign against Tax Havens?

    - Tax Havens crowd out money that would otherwise be given to tax-deductible charities, and therefore to EA charities.

    - There is an economic benefit to some of the citizens of the Tax Haven countries, but when weighed against the criminal conduct that they enable... are they truly more good than bad?

  - ... Create a certification for NGOs to be considered "EA"?

    - Most people know that some causes (Malaria treatments, Deworming...) are well-known EA causes.

    - They are causes that attract millions of dollars in funding.

    - Since there is no certification for NGOs to use the name "EA", a fraudster-in-waiting can just

      - Start a new NGO tomorrow.

      - "Brand" itself as an EA charity

      - See the donations begin to pour in, and

      - Commit fraud in a new manner that avoids existing regulation

      - Profit

      - Give the news cycle an exciting new story, and the EA community another sleepless night.

In fact, fraud in NGOs happens all the time. One of the reasons why Against Malaria Foundation had trouble implementing their first GiveWell charity is that they were too stringent on the anti-corruption requirements for governments.

It's in the direct interest of the EA community to minimize the number of fraudulent NGOs, and to minimize the number of EA-branded fraudulent NGOs.
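The single-donor cap suggested earlier in this comment is simple to check mechanically. Here is a minimal sketch, assuming an organization can tally its income per donor over some period. The function names, the donor labels, and the example amounts are all hypothetical; only the 50% threshold comes from the suggestion above.

```python
def donor_shares(donations: dict[str, float]) -> dict[str, float]:
    """Return each donor's fraction of total income."""
    total = sum(donations.values())
    if total <= 0:
        raise ValueError("total donations must be positive")
    return {donor: amount / total for donor, amount in donations.items()}


def donors_over_cap(donations: dict[str, float], cap: float = 0.5) -> list[str]:
    """List donors whose share of income strictly exceeds the cap."""
    shares = donor_shares(donations)
    return sorted(donor for donor, share in shares.items() if share > cap)


# Made-up example data, for illustration only.
example = {"donor_a": 700_000.0, "donor_b": 200_000.0, "donor_c": 100_000.0}
print(donors_over_cap(example))
```

With the made-up amounts above, `donor_a` supplies 70% of income and is flagged. Whether 50% is the right threshold is a judgment call, as the comment itself notes.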

- ^

My guess is that the only way in which alarms would've rung for FTX investors was by realizing that there was a "back door" where somebody could just steal all the money.

Would a financial auditor have looked into that, the programming aspect of the business?

I doubt it, unless specific norms were in place to look for just that.

- ^

In fact, at the same time that we're discussing this, a tragic air travel accident happened at a Dallas airshow. Our efforts might be more "effective" by not discussing SBF and instead discussing airshow security and its morality.

David Mears @ 2022-11-13T09:38 (+15)

The second option is also a non-starter because of ... ¿The First Amendment?

Disagree on two counts. Firstly, EA is a trademark belonging to CEA. Secondly, the association with SBF was bilateral - EA lauded SBF, not just vice versa.

We can take billionaires’ money without palling up.

Jesus de Sivar @ 2022-11-14T01:38 (+2)

EA is a trademark belonging to CEA

I didn't know that EA was a trademark. And if I (who am involved in EA) didn't know, then the public certainly doesn't know.

What we need is not a trademark but a "certification" or "seal of quality" to certify NGOs that actually fulfill EA principles, so that we can disown future frauds.

Max Ghenis @ 2022-11-12T23:10 (+4)

We could cultivate for-profit entrepreneurship in fields with clearer social benefit. I've seen arguments that people can make a bigger impact by focusing on social impact or profitability rather than trying to do both, and I think that's true on the margin for most people, but embracing crypto may have overcorrected.

Sharmake @ 2022-11-12T16:12 (+4)

A few thoughts on how to avoid this:

- Focus on rule utilitarianism, and stop normalizing unilateral actions that have a chance to burn the commons like lying or stealing. I wouldn't go as far as freedomandutility would, but we need to be clear that lying and stealing are usually bad, and shouldn't be normal.

Speaking of that, that leads to my next suggestion:

- We need to have a whistleblower hotline.

Specifically, we need to make sure that we catch unethical behavior early, before things get big. Here's the thing: a lot of big failures are preceded by smaller failures, as Dan Luu says.

Here's a link:

We really need to move beyond the model of EA posts being a whistleblower's main place, even in the best case scenario.

And 3. We should be much more risk-averse to actions that involve norm violations, because they usually do more harm than good. One of SBF's flaws (and partially EA's) was not realizing what the space of outcomes could be.

Quoting here:

It is important to distinguish different types of risk-taking here. (1) There is the kind of risk taking that promises high payoffs but with a high chance of the bet falling to zero, without violating commonsense ethical norms, (2) Risk taking in the sense of being willing to risk it all secretly violating ethical norms to get more money. One flaw in SBF's thinking seemed to be that risk-neutral altruists should take big risks because the returns can only fall to zero. In fact, the returns can go negative - eg all the people he has stiffed, and all of the damage he has done to EA.

Yeah, the idea that everything would fall to zero only makes sense if we take a very narrow view of who was harmed. Once we include depositors, EAs and more, the value turned negative.

Yonatan Cale @ 2022-11-13T08:43 (+2)

IF we had a failure of "this was predictable", then I'd point some of my blame at how EA hires people, and specifically our tendency to not try to hire very senior people who can do such very complicated things.

In any case, I think this is a mistake we are making as a movement.

Jason @ 2022-11-13T01:33 (+2)

I expect the best output we could reasonably hope for from any improved detection system would be relatively modest. For example: "several community members have come forward with specific allegations of past serious misbehavior by megadonor X, and so we estimate that there is a 20% chance that X's company will be revealed as (or end up committing) massive fraud in the next ten years." If someone has strongly probative evidence of fraud, that person should not be going to an outfit set up by the EA community with that information . . . they should be going to the appropriate authorities.

Let's say a detection system had discerned a 20% chance of significant fraud by SBF -- this seems to be at least several times better performance than the results obtained by organizations with better access to FTX's internal accounting and lots of resources/motivation. What then? Does the community turn down any FTX-related money, even though there is an 80% chance there is nothing scandalous about FTX? How does that get communicated in a decentralized community where everyone makes their own decisions about who to accept funding from?

And how is that communicated -- especially in a PR/optics fashion -- in a way that doesn't create a serious risk of slander/libel liability? "We think Megadonor X poses an unacceptable risk of causing grave reputational harm to the community" sure sounds like an opinion based on undisclosed facts, which is a potentially slanderous form of opinion even in the free-speech friendly USA.

It was widely known that crypto-linked assets are inherently volatile and can disappear in a flash, so while better intel on SBF would have better informed the odds of catastrophic funding loss it was not necessary to understand that this risk existed.

All that is to say that the better approach might be more focused on what healthcare workers would call universal precautions than on attempting to identify the higher-risk individuals. Wear gloves with all patients. Always "hedge on reputation" as Nathan put it below.