What a compute-centric framework says about AI takeoff speeds

By Tom_Davidson @ 2023-01-23T04:09 (+189)

This is a linkpost to https://www.lesswrong.com/posts/Gc9FGtdXhK9sCSEYu/what-a-compute-centric-framework-says-about-ai-takeoff

As part of my work for Open Philanthropy I’ve written a draft report on AI takeoff speeds, the question of how quickly AI capabilities might improve as we approach and surpass human-level AI. Will human-level AI be a bolt from the blue, or will we have AI that is nearly as capable many years earlier?

Most of the analysis is from the perspective of a compute-centric framework, inspired by that used in the Bio Anchors report, in which AI capabilities increase continuously with more training compute and work to develop better AI algorithms.

This post doesn’t summarise the report. Instead I want to explain some of the high-level takeaways from the research which I think apply even if you don’t buy the compute-centric framework.

The framework

h/t Dan Kokotajlo for writing most of this section

This report accompanies and explains https://takeoffspeeds.com (h/t Epoch for building this!), a user-friendly quantitative model of AGI timelines and takeoff, which you can go play around with right now. (By AGI I mean “AI that can readily[1] perform 100% of cognitive tasks” as well as a human professional; AGI could be many AI systems working together, or one unified system.)

Takeoff simulation with Tom’s best-guess value for each parameter.

The framework was inspired by and builds upon the previous “Bio Anchors” report. The “core” of the Bio Anchors report was a three-factor model for forecasting AGI timelines:

Dan’s visual representation of Bio Anchors report

- Compute to train AGI using 2020 algorithms. The first and most subjective factor is a probability distribution over training requirements (measured in FLOP) given today’s ideas. It allows for some probability to be placed in the “no amount would be enough” bucket.

- The probability distribution is shown by the coloured blocks on the y-axis in the above figure.

- Algorithmic progress. The second factor is the rate at which new ideas come along, lowering AGI training requirements. Bio Anchors models this as a steady exponential decline.

- It’s shown by the falling yellow lines.

- Bigger training runs. The third factor is the rate at which FLOP used on training runs increases, as a result of better hardware and more $ spending. Bio Anchors assumes that hardware improves at a steady exponential rate.

- The FLOP used on the biggest training run is shown by the rising purple lines.

Once there’s been enough algorithmic progress, and training runs are big enough, we can train AGI. (How much is enough? That depends on the first factor!)

This draft report builds a more detailed model inspired by the above. It contains many minor changes and two major ones.

The first major change is that algorithmic and hardware progress are no longer assumed to have steady exponential growth. Instead, I use standard semi-endogenous growth models from the economics literature to forecast how the two factors will grow in response to hardware and software R&D spending, and forecast that spending will grow over time. The upshot is that spending accelerates as AGI draws near, driving faster algorithmic (“software”) and hardware progress.

The key dynamics represented in the model. “Software” refers to the quality of algorithms for training AI.
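To make the semi-endogenous dynamic concrete, here is a minimal sketch. It assumes the textbook Jones-style law dA/dt = delta * R^lam * A^phi, where A is the technology level and R is R&D spending. The function name and every parameter value are hypothetical choices for illustration; the report's actual equations and calibration may differ.

```python
import math

def simulate_technology(spend_growth, years=10, steps_per_year=100,
                        delta=0.1, lam=0.5, phi=0.5):
    """Euler-integrate dA/dt = delta * R**lam * A**phi.

    A is the technology level (e.g. software or hardware quality).
    R is R&D spending, growing at the continuous rate `spend_growth` per year.
    delta, lam and phi are illustrative assumptions, not the report's values.
    Returns the technology level at the end of each year (index 0 is the start).
    """
    dt = 1.0 / steps_per_year
    level, spending = 1.0, 1.0
    yearly_levels = [level]
    for step in range(1, years * steps_per_year + 1):
        level += delta * spending**lam * level**phi * dt
        spending *= math.exp(spend_growth * dt)
        if step % steps_per_year == 0:
            yearly_levels.append(level)
    return yearly_levels

slow_spending = simulate_technology(spend_growth=0.1)
fast_spending = simulate_technology(spend_growth=0.5)
print(f"Level after 10 years, slow spending growth: {slow_spending[-1]:.2f}")
print(f"Level after 10 years, fast spending growth: {fast_spending[-1]:.2f}")
```

In this toy version, progress is no longer a fixed exponential: it depends on how quickly spending grows, which is the qualitative point of the first major change.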

The second major change is that I model the effects of AI systems automating economic tasks – and, crucially, tasks in hardware and software R&D – prior to AGI. I do this via the “effective FLOP gap”: the gap between AGI training requirements and training requirements for AI that can readily perform 20% of cognitive tasks (weighted by economic-value-in-2022). My best guess, defended in the report, is that you need 10,000X more effective compute to train AGI than to train AI that can readily perform 20% of cognitive tasks. To estimate the training requirements for AI that can readily perform x% of cognitive tasks (for 20 < x < 100), I interpolate between the training requirements for AGI and the training requirements for AI that can readily perform 20% of cognitive tasks.
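As a rough illustration of the interpolation, the sketch below assumes it is linear in log-FLOP across the task fraction. That functional form is an assumption made here; the post only says "interpolate". The two constants are the author's stated best guesses (1e36 FLOP median AGI requirement with 2020 algorithms, 10,000X gap); the function name is hypothetical.

```python
import math

AGI_FLOP = 1e36            # author's median AGI training requirement (2020 algorithms)
EFFECTIVE_FLOP_GAP = 1e4   # author's best-guess gap between 20%-AI and AGI

def training_requirement(task_fraction, agi_flop=AGI_FLOP, gap=EFFECTIVE_FLOP_GAP):
    """Effective FLOP to train AI that can readily perform `task_fraction` of tasks.

    Assumes log-linear interpolation between 20%-AI and 100%-AI (an assumption
    of this sketch, not necessarily the report's method).
    """
    if not 0.2 <= task_fraction <= 1.0:
        raise ValueError("task_fraction must be between 0.2 and 1.0")
    share_of_gap_crossed = (task_fraction - 0.2) / 0.8
    log10_requirement = math.log10(agi_flop) - (1.0 - share_of_gap_crossed) * math.log10(gap)
    return 10 ** log10_requirement

for fraction in (0.2, 0.6, 1.0):
    print(f"{fraction:.0%}-AI: {training_requirement(fraction):.1e} effective FLOP")
```

Under these assumptions 20%-AI needs about 1e32 effective FLOP, and each additional 20 percentage points of tasks costs one more order of magnitude.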

Modeling the cognitive labor done by pre-AGI systems makes timelines shorter. It also gives us a richer language for discussing and estimating takeoff speeds. The main metric I focus on is “time from AI that could readily[2] automate 20% of cognitive tasks to AI that could readily automate 100% of cognitive tasks”. I.e. time from 20%-AI to 100%-AI.[3] (This time period is what I’m referring to when I talk about the duration of takeoff, unless I say otherwise.)

My personal probabilities[4] are still very much in flux and are not robust.[5] My current probabilities, conditional on AGI happening by 2100, are:

- ~10% to a <3 month takeoff [this is especially non-robust]

- ~25% to a <1 year takeoff

- ~50% to a <3 year takeoff

- ~80% to a <10 year takeoff

Those numbers are time from 20%-AI to 100%-AI, for cognitive tasks in the global economy. One factor driving fast takeoff here is that I expect AI automation of AI R&D to happen before AI automation of the global economy.[6] So by the time that 20% of tasks in the global economy could be readily automated, I expect that more than 20% of AI R&D will be automated, which will drive faster AI progress.

If I instead start counting from the time at which 20% of AI R&D can be automated, and stop counting when 100% of AI R&D can be automated, this factor goes away and my takeoff speeds are slower:

- ~10% to a <1 year takeoff

- ~30% to a <3 year takeoff

- ~70% to a <10 year takeoff

(Unless I say otherwise, when I talk about the duration of takeoff I’m referring to the time from 20%-AI to 100%-AI for cognitive tasks in the global economy, not AI R&D.)

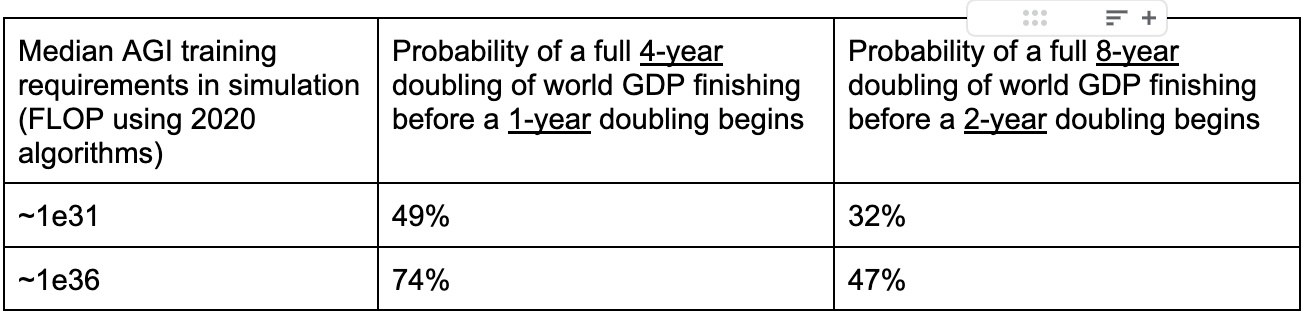

It’s important to note that my median AGI training requirements are pretty large - 1e36 FLOP using 2020 algorithms. Using lower requirements makes takeoff significantly faster. If my median AGI training requirements were instead ~1e31 FLOP with 2020 algorithms, my takeoff speeds would be:

- ~40% to a <1 year takeoff

- ~70% to a <3 year takeoff

- ~90% to a <10 year takeoff

The report also discusses the “time from AGI to superintelligence”. My best guess is that this takes less than a year absent humanity choosing to go slower (which we definitely should!).

Takeaways about capabilities takeoff speed

I find it useful to distinguish capabilities takeoff – how quickly AI capabilities improve around AGI – from impact takeoff – how quickly AI’s impact on a particular domain grows around AGI. For example, the latter is much more affected by deployment decisions and various bottlenecks.

The metric “time from 20%-AI to 100%-AI” is about capabilities, not impact, because 20%-AI is defined as AI that could readily automate 20% of economic tasks, not as AI that actually does automate them.

Even without any discontinuities, takeoff could last < 1 year

Even if AI progress is continuous, without any sudden kinks, the slope of improvement could be steep enough that takeoff is very fast.

Even in a continuous scenario, I put ~15% on takeoff lasting <1 year, and ~60% on takeoff lasting <5 years.[7] Why? On a high level, because:

- It might not be that much harder to develop 100%-AI than 20%-AI.

- AI will probably be improving very quickly once we have 20%-AI.

Going into more detail:

- It might not be that much harder to develop 100%-AI than 20%-AI.

- Perhaps chimps couldn’t perform 20% of tasks, even if they’d been optimized to do so. Humans have ~3X bigger brains than chimps by synapse count. That could mean that you only need to increase model size by 3X to go from 20%-AI to 100%-AI which, with Chinchilla scaling, would take 10X more training FLOP.

- You might need to increase model size by less than 3X.

- With Chinchilla scaling, a 3X bigger model gets 3X more data during training. But human lifetime learning only lasts 1-2X longer than chimp lifetime learning.[8]

- So intelligence might improve more from a 3X increase in model size with Chinchilla scaling than from chimps to humans.

- Brain size - IQ correlations suggest a similar conclusion. A 10% bigger brain is associated with ~5 extra IQ points. This isn't much, but extrapolating the relationship implies that a 3X bigger brain would be ~60 IQ points smarter; and ML models may gain more from scale than humans as bigger models will be trained on more data (unlike bigger-brained humans).

- It is pretty hard to partially automate a job, e.g. for AI to automate 20% of the tasks. All of the tasks are interconnected in a messy way! Everything is set up for one human, with full context, to do the work.

- Normally, we restructure business processes to allow for partial automation. But this takes a lot of effort and time - typically decades! If the transition from 20%-AI to 100%-AI happens in just a few years (as implied by the other arguments in this section) there won’t be time for this kind of restructuring to happen.

- In this case, I still expect partial automation to happen earlier than full automation because it will still be somewhat easier to develop AI that can partially automate a job (with only small efforts restructuring processes) than AI that can fully automate the job (with similarly small efforts restructuring processes). But it might only be slightly easier.

- In other words, the lack of time for restructuring processes narrows the difficulty gap between developing 20%-AI and 100%-AI, but doesn’t eliminate it entirely.

- (This point is closely related to the “sonic boom” argument for fast takeoff.)

- AI will probably be improving very quickly once we have 20%-AI.

- Algorithmic progress is already very fast. OpenAI estimates a 16 month doubling time for algorithmic efficiency on ImageNet; a recent Epoch analysis estimates just 10 months for the same quantity. My sense is that progress is if anything faster for LMs.

- Hardware progress is already very fast. Epoch estimates that FLOP/$ has been doubling every 2.5 years.

- Spending on AI development – AI training runs, AI software R&D, and hardware R&D – might rise rapidly after we have 20%-AI and the strategic and economic benefits of AI are apparent.

- 20%-AI could readily add ~$10tr/year to global GDP.[9] Compared to this figure, investments in hardware R&D (~$100b/year) and AI software R&D (~$20b/year) are low.

- For <1 year takeoffs, fast scale-up of spending on AI training runs, simply by using a larger fraction of the world’s chips, plays a central role.

- Once we have 20%-AI (AI that can readily[10] automate 20% of cognitive tasks in the general economy), AI itself will accelerate AI progress. The easier AI R&D is to automate compared to the general economy, the bigger this effect.

- How big might this effect be? This is a massive uncertainty for me but here are my current guesses. By the time we have 20%-AI I expect:

- Conservatively, AI will have automated 20% of cognitive tasks in AI R&D, speeding up AI R&D progress by a factor of ~1.3. I think it’s unlikely (~15%) the effect is smaller than this.

- Somewhat aggressively, AI will have automated 40% of cognitive tasks in AI R&D, speeding up AI R&D progress by a factor of ~1.8. I think there’s a decent chance (~30%) of getting bigger effects than this.

- The speed up increases over time as AI automates more of AI R&D. When we simulate this dynamic we find AI automation reduces “time from 20%-AI to 100%-AI” by ~2.5X.

- Combining the above, I think the “effective compute” on training runs (which incorporates better algorithms) will probably rise by >5X each year between 20%-AI and 100%-AI, and could rise by 100X each year.
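The back-of-envelope figures in this section can be checked with a few lines of arithmetic. The sketch below relies on assumptions stated in the comments: training FLOP is taken as proportional to parameters times tokens, the IQ relationship is assumed to compound per 10% of brain size, and doubling times are converted to annual growth factors. The variable and function names are illustrative.

```python
import math

# Chinchilla-style scaling: training FLOP ~ parameters * tokens, with tokens
# scaled in proportion to parameters. A 3X bigger model then gets 3X more data.
model_scale = 3.0
flop_multiplier = model_scale * model_scale  # 9X, i.e. roughly "10X"

# Brain size vs IQ: ~5 IQ points per 10% increase in brain size.
# Assumption of this sketch: the relationship compounds, so a 3X bigger brain
# corresponds to log(3) / log(1.1) successive 10% increases.
iq_gain = 5 * math.log(3.0) / math.log(1.1)

def annual_growth(doubling_time_months):
    """Growth factor per year implied by a doubling time given in months."""
    return 2 ** (12 / doubling_time_months)

algorithms_openai = annual_growth(16)  # OpenAI's ImageNet estimate
algorithms_epoch = annual_growth(10)   # Epoch's estimate for the same quantity
hardware = annual_growth(30)           # FLOP/$ doubling every 2.5 years

print(f"FLOP multiplier for a 3X bigger model: {flop_multiplier:.0f}X")
print(f"Extrapolated IQ gain for a 3X bigger brain: {iq_gain:.0f} points")
print(f"Algorithmic progress: {algorithms_openai:.2f}X to {algorithms_epoch:.2f}X per year")
print(f"Hardware progress: {hardware:.2f}X per year")
print(f"Algorithms and hardware combined: up to {algorithms_epoch * hardware:.1f}X per year")
```

On these assumptions, algorithmic and hardware progress alone compound to roughly 2X to 3X per year; the >5X figure additionally relies on rising spending and AI automation of AI R&D.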

We should assign some probability to takeoff lasting >5 years

I have ~40% on takeoff lasting >5 years. On a high level, my reasons are:

- It might be a lot harder to develop 100%-AI than 20%-AI.

- AI progress might be slower once we reach 20%-AI than it is today.

Going into more detail:

- It might be a lot harder to develop 100%-AI than 20%-AI.

-

The key reason is that AI may have a strong comparative advantage at some tasks over other tasks, compared with humans. Its comparative advantages might allow it to automate 20% of tasks long before it can automate the full 100%. The bullets below expand on this basic point.

-

AI, and computers more generally, already achieve superhuman performance in many domains by exploiting massive AI-specific advantages (lots of experience/data, fast thinking, reliability, memorisation). It might be far harder for AI to automate tasks where these advantages aren’t as relevant.

-

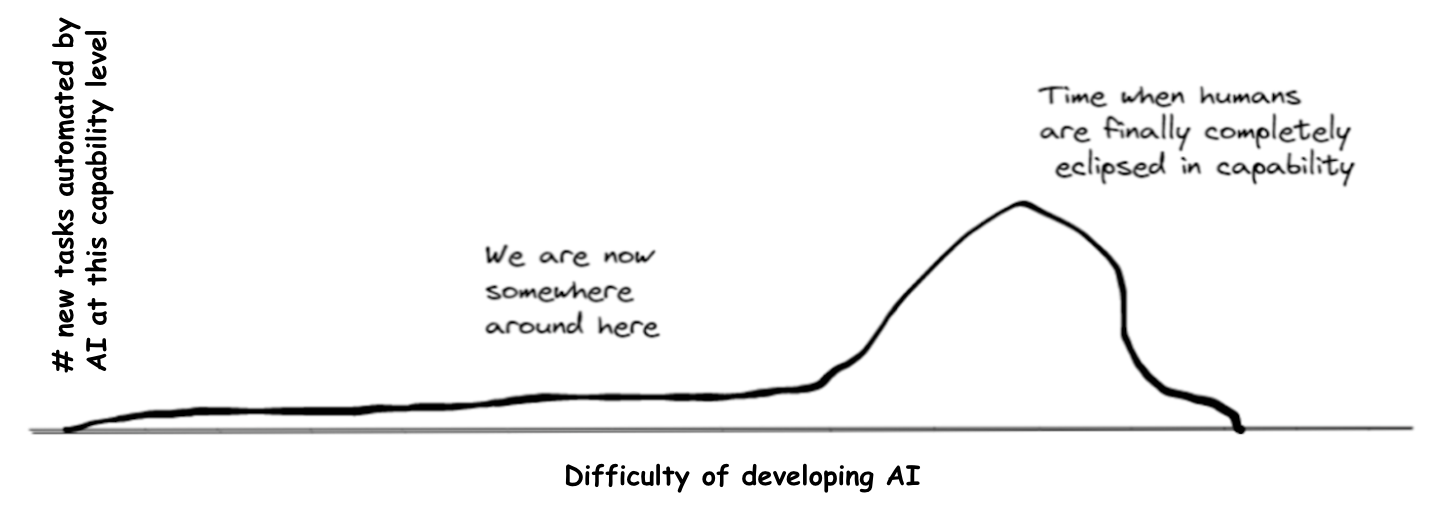

We can visualise this using (an adjusted version of) the graph Dan Kokotajlo drew in his review of Joe Carlsmith’s report on power-seeking AI.

We’re currently in the left tail, where AI’s massive comparative advantages allow it to automate certain tasks despite being much less capable than humans overall. If AI automates 20% of tasks before the big hump, or the hump is wide, it will be much easier to develop 20%-AI than 100%-AI.

We’re currently in the left tail, where AI’s massive comparative advantages allow it to automate certain tasks despite being much less capable than humans overall. If AI automates 20% of tasks before the big hump, or the hump is wide, it will be much easier to develop 20%-AI than 100%-AI. -

Outside of AI, there’s generally a large precedent for humans finding simple, dumb ways to automate significant fractions of labour.

- We may have automated >80% of the cognitive tasks that humans performed as of 1700 (most people worked in agriculture), but using methods that don’t get us close to automating 100% of them.

- By analogy, AI may automate 20% of 2020 cognitive tasks using methods that don’t get AI close to automating 100% of them. If this happens gradually over many decades, it might feel like “progress as normal” rather than “AI is on the cusp of having a transformative economic impact”.

- Within AI, there are many mechanisms that could give AI comparative advantages at some tasks but not others. AI is better placed to perform tasks with the following features:

- AI can learn to perform the task with “short horizon training”, without requiring “long horizon training”.[11]

- The task is similar to what AI is doing during pre-training (e.g. similar to “next word prediction”, in the case of large language models).

- It’s easier to get large amounts of training data for the task, e.g. from human demonstrations.

- Memorising lots of information improves task performance.

- It’s important to “always be on” (no sleep), or to consistently maintain focus (no getting bored or slacking).

- It’s easier to verify that an answer is correct than to generate the correct answer. (This helps to generate training data and allows us to trust AI outputs.)

- The task doesn’t require strong sim2real transfer.

- The downside of poor performance is limited. (We might not trust AI in scenarios where a mistake is catastrophic, e.g. driving.)

- Human brains were “trained” by evolution and then lifetime learning in a pretty different way to how AIs are trained, and humans seem to have pretty different brain architectures to AIs in many ways. So humans might have big comparative advantages over AIs in certain domains. This could make it very difficult to develop 100%-AI.

- GPT-N looks like it will solve some LM benchmarks with ~4 OOMs less training FLOP than others. In other words, it has strong “comparative advantages” at some benchmarks over others. I expect cognitive tasks throughout the entire economy to have more variation along many dimensions than these LM benchmarks, suggesting this example underestimates the difficulty gap between developing 20%-AI and 100%-AI.

- It’s notable that most of the evidence discussed above for a small difficulty gap between 20%-AI and 100%-AI (in particular “chimps vs humans” and “brain size - IQ correlations”) completely ignores this point about “large comparative advantage at certain tasks” by assuming intelligence is on a one-dimensional spectrum.[12]

- I find it most plausible that there’s a big difficulty gap between 20%-AI and 100%-AI if 100%-AI is very difficult to develop.

- AI progress might be slower once we reach 20%-AI than it is today (though my best guess is that it will be faster).

- A lot of recent AI progress has come from increasing the fraction of computer chips used to train AI. This can only go on for so long!

- Hardware progress might be much more difficult by this time, as we approach the ultimate limits of the current hardware paradigm.

- Both of these reasons are more likely to apply if 20%-AI is hard to develop, i.e. if timelines are long.

- Above I discussed reasons why AI progress will probably be faster once we have 20%-AI: larger total $ investments in AI and AI automation. But these reasons may not apply strongly:

- It might be hard to quickly convert “more $” into faster AI progress.

- It may take years for new talent to be able to contribute to the cutting edge (especially with hardware R&D). So growing the total quality-adjusted talent in AI R&D might be slow.

- Even if you could quickly double the amount of quality-adjusted R&D talent, that less-than-doubles the rate of progress due to duplication of effort and difficulties parallelising work (“nine mothers can’t make a baby in one month”).

- It might be hard to scale up global production of AI chips, due to the immense complexity of the supply chain.

- Limited effects of AI automation on AI R&D progress.

- There will be some lags before AI is deployed in AI R&D.

- Progress will be bottlenecked by the tasks AI can still not perform.

- After we reach 20%-AI, we may become more concerned about various AI risks and deliberately slow down.

Takeoff won’t last >10 years unless 100%-AI is very hard to develop

As discussed above, AI progress is already very fast and will probably become faster once we have 20%-AI. If you think that even 10 years of this fast rate of progress won’t be enough to reach 100%-AI, that implies that 100%-AI is way harder to develop than 20%-AI.

In addition, I think that today’s AI is quite far from 20%-AI: its economic impact is pretty limited (<$100b/year), suggesting it can’t readily[13] automate even 1% of tasks. So I personally expect 20%-AI to be pretty difficult to develop compared to today’s AI.

This means that, if takeoff lasts >10 years, 100%-AI is a lot harder to develop than 20%-AI, which is itself a lot harder to develop than today’s AI. This all only works out if you think that 100%-AI is very difficult to develop. Playing around with the compute-centric model, I find it hard to get >10 year takeoff without assuming that 100%-AI would have taken >=1e38 FLOP to train with 2020 algorithms (which was the conservative “long horizon” anchor in Bio Anchors).

Time from AGI to superintelligence is probably less than 1 year

Recall that by AGI I mean AI that can readily perform ~100% of cognitive tasks as well as a human professional. By superintelligence I mean AI that very significantly surpasses humans at ~100% of cognitive tasks. My best guess is that the time between these milestones is less than 1 year, the primary reason being the massive amounts of AI labour available to do AI R&D, once we have AGI. More.

Takeaways about impact takeoff speed

Here I mostly focus on economic impact.

If we align AGI, I weakly expect impact takeoff to be slower than capabilities takeoff

I think there will probably just be a few years (~3 years) from 20%-AI to 100%-AI (in a capabilities sense). But, if AI is aligned, I think going from actually deploying AI in 20% of economic tasks to deploying it in >95% will take many years (~10 years):

- Standard deployment lags. It typically takes decades for new technologies to noticeably affect GDP growth, e.g. computers and the internet.

- Political economy. Workers and organizations on course to be replaced by AI will attempt to block its deployment.

- Caution. We should be, and likely will be, very cautious about handing over ~all decision making to advanced AI, even if we have strong evidence that it’s safe and aligned. (E.g. imagine the resistance to letting AI run the government.) This would probably mean that humans remain a “bottleneck” on AI’s economic impact for some time.

- Even if we have compelling reasons to hand over all decisions to AI, I still expect there to be a lot of (perhaps unreasonable) caution – AIs making decisions will feel creepy and weird to many people.

I’m not confident about this. Here are some countervailing considerations:

- Less incentive to deploy AI before superintelligence.

- If a lab faces a choice between deploying their current SOTA AIs in the economy vs investing in improving SOTA, they may choose the latter if they think they could automate AI R&D and thereby accelerate their lab’s AI progress.

- Eventually though, after the “better AI → faster AI R&D progress” feedback loop has fizzled out (perhaps after they’ve developed superhuman AI), labs’ incentive will simply be to deploy. There could be an extremely fast impact takeoff when labs suddenly start trying to deploy their superhuman AIs.

- Superhuman AIs quickly circumvent barriers to deployment. Perhaps pre-AGI systems mostly aren’t deployed due to barriers like regulations. But superhuman aligned AI might be able to quickly navigate these barriers, e.g. good-faith convincing human regulators to deploy it more widely so it can cure diseases.

Many of the above points, on both sides, apply more weakly to the impact of AI on AI R&D than on the general economy. For example, I expect regulation to apply less strongly in AI R&D, and also for lab incentives to favour deployment of AIs in AI R&D (especially software R&D). So I expect impact takeoff within AI R&D to match capabilities takeoff fairly closely.

If we don’t align AGI, I expect impact takeoff to be faster than capabilities takeoff

If AGI isn’t aligned, then AI’s impact could increase very suddenly at the point when misaligned AIs first collectively realise that they can disempower humanity and try to do so. Before this point, human deployment decisions (influenced by regulation, general caution, slow decision making, etc) limit AI’s impact; afterwards AIs forcibly circumvent these decisions.[14]

Some chance of <$3tr/year economic impact from AI before we have AI that could disempower humanity

I’m at ~15% for this. (For reference, annual revenues due to AI today are often estimated at ~$10-100b,[15] though this may be smaller than AI’s impact on GDP.)

Here are some reasons this could happen:

- Fast capabilities takeoff. There might be only a few years from “AI that could readily add $3tr/year to world GDP” to AI that could disempower humanity. See above arguments.

- Significant lags to deploying AI systems in the broader economy. See above points.

- As above, I expect there will be fewer lags to deployment in AI R&D. I’m not counting work done by AI within AI R&D towards the “$3tr”.

- Labs may prioritise improving SOTA AI over deploying it. Discussed above; this could continue until labs develop AI that could disempower humanity.

- On the other hand, even if leading labs strongly prioritise deploying AIs internally, they’ll probably expend some effort plucking the low-hanging fruit for deploying it in the broader economy to make money and generate more investment. And even if leading labs don’t do this, other labs may specialise in training AI to be deployed in the broader economy.

Why am I not higher on this?

- $3tr/year only corresponds to automating ~6% of cognitive tasks;[16] I expect AI will be able to perform >60%, and probably >85% of cognitive tasks before it can disempower humanity. That’s a pretty big gap in AI capabilities!

- People will be actively trying to create economic value from AI and also actively trying to prevent AI from being able to disempower humanity.

- We’ll train AI specifically to be good at economically valuable tasks and not train AI to be good at “taking over the world” tasks (modulo the possibility of using AI in the military).

- We’ll make adjustments to workflows etc. to facilitate AI having economic impact, and (hopefully!) make adjustments to protect against AI takeover.

- I have a fairly high estimate of the difficulty of developing AGI. I think we’re unlikely to develop AGI by 2030, by which time AI may already be adding >$3tr/year to world GDP.

My “15%” probability here feels especially non-robust, compared to the others in this post.
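For reference, the dollar figures used in this section and in the notes follow from one line of arithmetic: world GDP of ~$100tr, about half of which is paid to human labour. The sketch below reproduces it; the function names are hypothetical, and it treats the value of automating a fraction of tasks as that fraction of the labour share, as the notes do.

```python
import math

WORLD_GDP_TR = 100.0   # world GDP, ~$100tr/year (from the notes)
LABOUR_SHARE = 0.5     # about half of GDP is paid to human labour (from the notes)

def value_of_automation_tr(task_fraction):
    """Annual value ($tr) of automating `task_fraction` of cognitive tasks."""
    return WORLD_GDP_TR * LABOUR_SHARE * task_fraction

def implied_task_fraction(value_tr):
    """Fraction of cognitive tasks corresponding to an annual value in $tr."""
    return value_tr / (WORLD_GDP_TR * LABOUR_SHARE)

print(f"20%-AI: ~${value_of_automation_tr(0.20):.0f}tr/year")
print(f"6% of tasks: ~${value_of_automation_tr(0.06):.0f}tr/year")
print(f"$0.1tr/year of impact: ~{implied_task_fraction(0.1):.1%} of tasks")
```

The last line corresponds to the earlier remark that today’s impact of <$100b/year suggests AI can’t yet readily automate even 1% of tasks.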

Takeaways about AI timelines

Multiple reasons to have shorter timelines compared to what I thought a few years ago

Here’s a list (including some repetition from above):

- Growing $ investment in training runs, software R&D and hardware R&D, once AI can readily automate non-trivial fractions of cognitive labour (e.g. >3%).

- AI automation of AI R&D accelerating AI progress. Firstly, it may be easier to fully automate AI R&D than to fully automate cognitive labour in general. Secondly, even partial R&D automation can significantly speed up AI progress.

- “Swimming in runtime compute”.

- If AGI can’t be trained by ~2035 (as I think is likely), then we’ll have a lot of runtime compute lying around, e.g. enough to run 100s of millions of SOTA AIs.[17]

- It may be possible to leverage this runtime compute to “boost” the capabilities of pre-AGI systems. This would involve using existing techniques for this like “chain of thought”, “best of N sampling” and MCTS, as well as finding novel techniques. As a result, we might fully automate AI R&D much sooner than we otherwise would.

- I think this factor alone could easily shorten timelines by ~5 years if AGI training requirements are my best guess (1e36 FLOP with 2020 algorithms). It shortens timelines more(/less) if training requirements are bigger(/smaller).

- Faster software progress. I put more probability on algorithmic progress for training AGI being very fast than previously. This is from fast software progress for LMs (e.g. Chinchilla scaling) and recent analysis from Epoch.

Harder than I thought to avoid AGI by 2060

To avoid AGI by 2060, we must avoid developing, before 2040, “AI that is so good that AGI follows within a couple of decades due to [rising investment and/or AI itself accelerating AI R&D progress]”. As discussed above, this latter target might be much easier to hit. So my probability of AGI by 2060 has risen.

Relatedly, I used to update more on growth economist-y concerns like “ah but if AI can automate 90% of tasks but not the final 10%, that will bottleneck its impact”. Now I think “well if AI automates 90% of cognitive tasks that will significantly accelerate AI R&D progress and attract more investment in AI, so it won’t be too long before AI can perform 100%”.

Takeaways about the relationship between takeoff speed and AI timelines

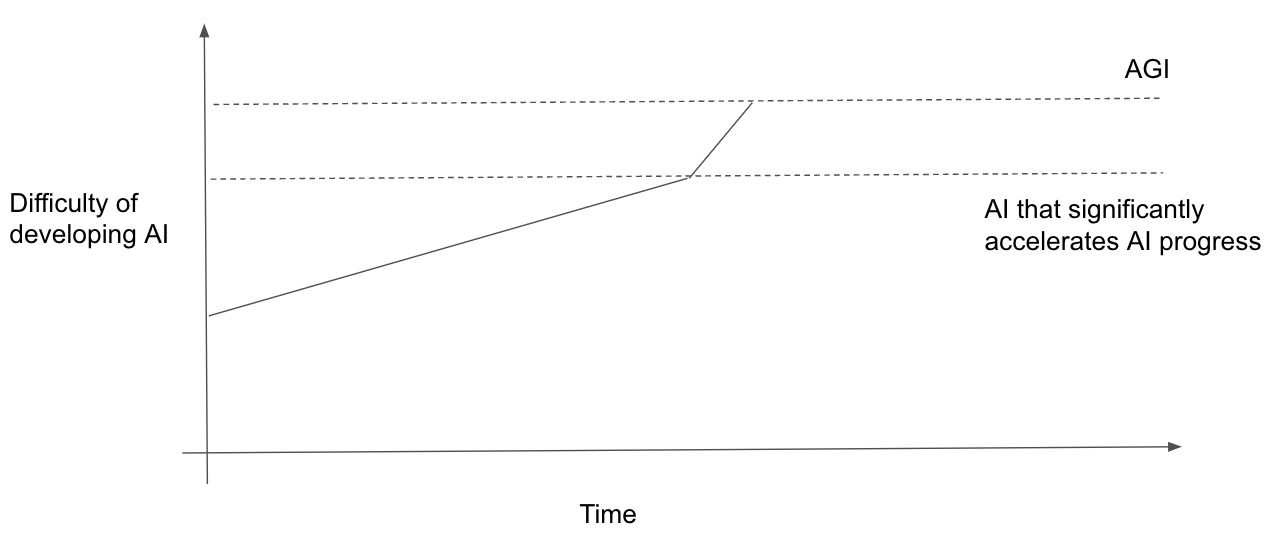

The easier AGI is to develop, the faster takeoff will be

Probably the biggest determinant of takeoff speeds is the difficulty gap between 100%-AI and 20%-AI. If you think that 100%-AI isn’t very difficult to develop, this upper-bounds how large this gap can be and makes takeoff faster.

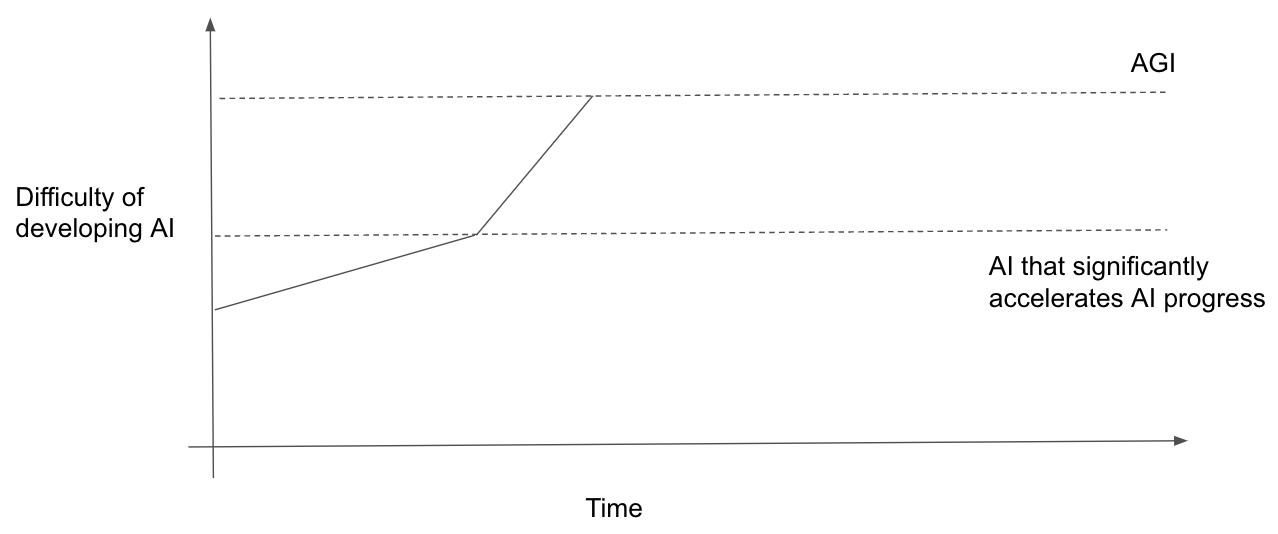

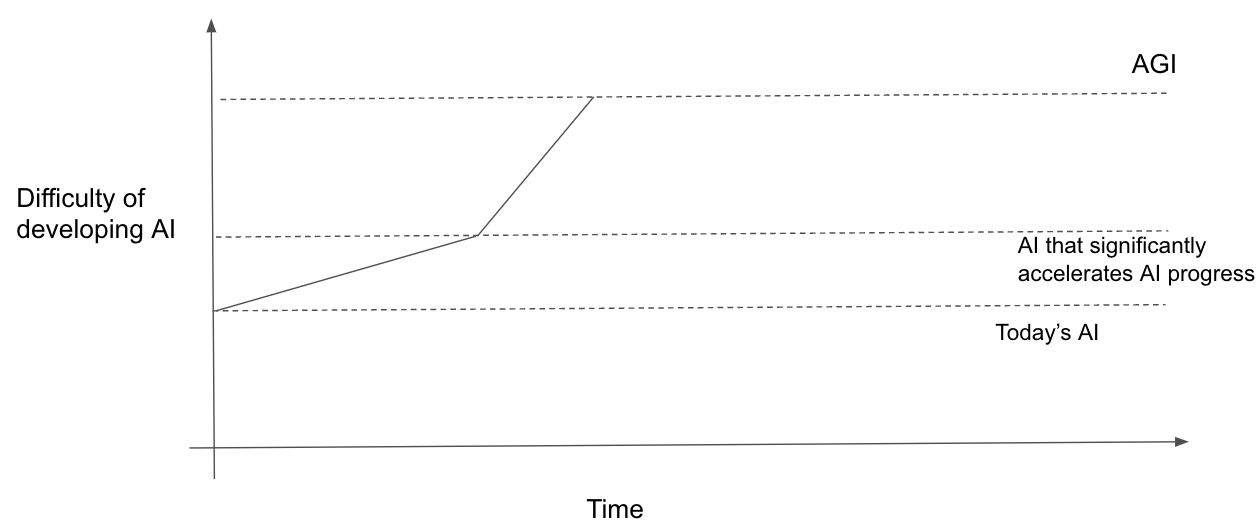

In the lower scenario AGI is easier to develop and, as a result, takeoff is faster.

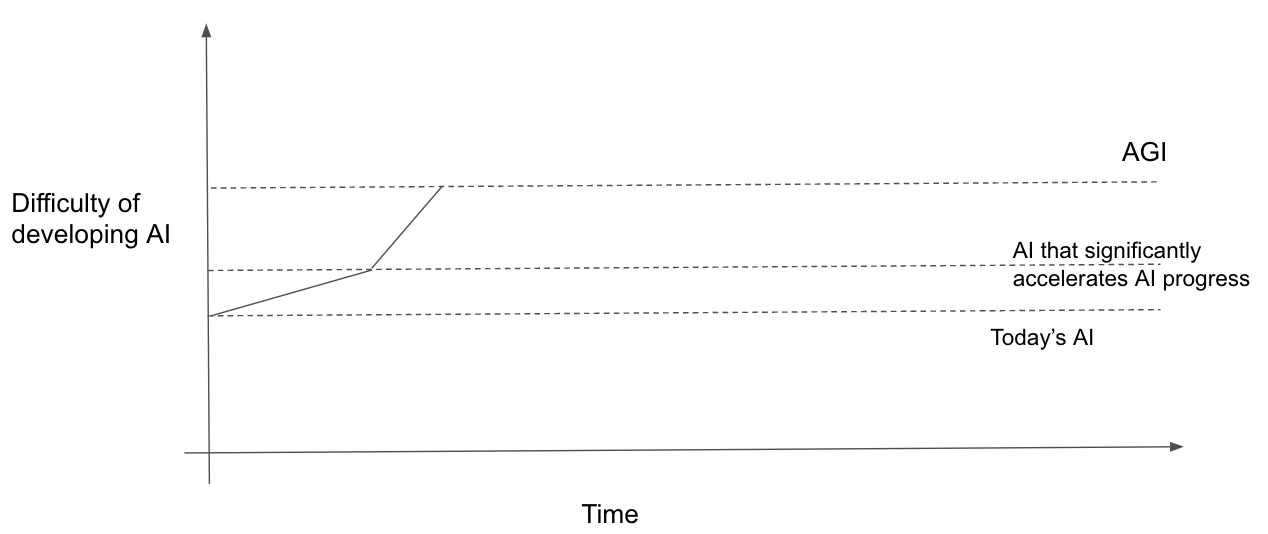

Holding AGI difficulty fixed, slower takeoff → earlier AGI timelines

If takeoff is slower, there is a bigger difficulty gap between AGI and “AI that significantly accelerates AI progress”. Holding fixed AGI difficulty, that means “AI that significantly accelerates AI progress” happens earlier. And so AGI happens earlier. (This point has been made before.)

Two scenarios with the same AGI difficulty. In the lower scenario takeoff is slower and, as a result, AGI happens sooner.

The model in the report quantifies this tradeoff. When I play around with it I find that, holding the difficulty of AGI constant, decreasing the time from 20%-AI to 100%-AI by two years delays 100%-AI by three years.[19] I.e. make takeoff two years shorter → delay 100%-AI by three years.

How does the report relate to previous thinking about takeoff speeds?

Eliezer Yudkowsky’s Intelligence Explosion Microeconomics

- Intelligence explosion microeconomics (IEM) doesn’t argue for takeoff happening in weeks rather than in years, so doesn’t speak to whether takeoff is faster or slower than I conclude. More.

- I think of my report as providing one possible quantitative framework for Intelligence Explosion Microeconomics. It makes IEM’s qualitative claims quantitative by drawing on empirical evidence about the returns to hardware + software R&D, how intelligence scales with additional compute and better algorithms, and how cognitive output scales with intelligence. More.

- I think Eliezer’s thinking about takeoff speeds is influenced by his interpretation of the chimp-human transition. I chatted to Nate Soares about this transition and its implications for AI takeoff speeds; I describe my understanding of Nate’s view here.

- I currently and tentatively put ~6% on a substantial discontinuity[20] in AI progress around the human range; but this was not the main focus of my research. More.

Paul Christiano

I think Paul Christiano’s 2018 blog post does a good job of arguing that takeoff is likely to be continuous. It also claims that takeoff will probably be slow. My report highlights the possibility that takeoff could be continuous but still be pretty fast, and the Monte Carlo analysis spits out the probability that takeoff is “fast” according to the definitions in the 2018 blog post.

More.

Notes

By “AI can readily perform a task” I mean “performing the task with AI could be done with <1 year of work spent engineering and rearranging workflows, and this would be profitable”. ↩︎

Recall that “readily” means “automating the task with AI would be profitable and could be done within 1 year”. ↩︎

100%-AI is different from AGI only in that 100%-AI requires that we have enough runtime compute to actually automate all instances of cognitive tasks that humans perform, whereas AGI just requires that AI could perform any (but not all) cognitive tasks. ↩︎

To arrive at these probabilities I took the results of the model’s Monte Carlo analysis and adjusted them based on the model’s limitations – specifically the model excluding certain kinds of discontinuities in AI progress and ignoring certain frictions to developing and deploying AI systems. ↩︎

Not robust means that further arguments and evidence could easily change my probabilities significantly, and that it’s likely that in hindsight I’ll think these numbers were unreasonable given the current knowledge available to me. ↩︎

Why? Firstly, I think the cognitive tasks in AI R&D will be particularly naturally suited for AI automation, e.g. because there is lots of data for writing code, AI R&D mostly doesn’t require manipulating things in the real world, and indeed AI is already helping with AI R&D. Secondly, I expect AI researchers to prioritise automating AI R&D over other areas because they’re more familiar with AI R&D tasks, there are fewer barriers to deploying AI in their own workflows (e.g. regulation, marketing to others), and because AI R&D will be a very valuable part of the economy when we’re close to AGI. ↩︎

These probabilities are higher than the ones above because here I’m ignoring types of discontinuities that aren’t captured by having a small “effective FLOP gap” between 20%-AI and 100%-AI. ↩︎

Chimps reach sexual maturity around 7 and can live until 60, suggesting humans have 1-2X more time for learning rather than 3X. ↩︎

World GDP is ~$100tr, about half of which is paid to human labour. If AI automates 20% of that work, that’s worth ~$10tr/year. ↩︎

Reminder: “AI can readily automate X” means “automating X with AI would be profitable and could be done within 1 year”. ↩︎

The “horizon length” concept is from Bio Anchors. Short horizons means that each data point requires the model to “think” for only a few seconds; long horizons means that each data point requires the model to “think” for months, and so training requires much more compute. ↩︎

Indeed, my one-dimensional model of takeoff speeds predicts faster takeoff. ↩︎

Reminder: “AI can readily automate X” means “automating X with AI would be profitable and could be done within 1 year”. ↩︎

This falls under “Superhuman AIs quickly circumvent barriers to deployment”, from above. ↩︎

E.g. here, here, here, here. I don’t know how reliable these estimates are, or even their methodologies. ↩︎

World GDP is ~$100tr, about half of which is paid to human labour. If AI automates 6% of that work, that’s worth ~$3tr/year. ↩︎

Here's a rough BOTEC (h/t Lukas Finnveden). ↩︎

Reminder: x%-AI is AI that could readily automate x% of cognitive tasks, weighted by their economic value in 2020. ↩︎

By “substantial discontinuous jump” I mean “>10 years of progress at previous rates occurred on one occasion”. (h/t AI Impacts for that definition) ↩︎
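The two GDP footnotes above rest on the same back-of-the-envelope calculation. A minimal sketch of that arithmetic, using only the round numbers stated in the notes (the constant and function names are illustrative, not from the report):

```python
# Round numbers taken from the footnotes; names are hypothetical.
WORLD_GDP_TR = 100.0   # world GDP, ~$100tr per year
LABOUR_SHARE = 0.5     # "about half of which is paid to human labour"

def automated_value_tr(fraction_automated):
    """Yearly value ($tr) of the human labour that AI would replace."""
    return WORLD_GDP_TR * LABOUR_SHARE * fraction_automated

for fraction in (0.20, 0.06):
    print(f"{fraction:.0%} of tasks -> ~${automated_value_tr(fraction):.0f}tr/year")
```

This treats every task as worth its share of the total wage bill, which is the same simplification the footnotes make.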

Benjamin Hilton @ 2023-01-23T16:16 (+34)

This looks really cool, thanks Tom!

I haven't read the report in full (just the short summary) - but I have some initial scepticism, and I'd love answers to some of the following questions, so I can figure out how much evidence this report is on takeoff speeds. I've put the questions roughly in order of subjective importance to my ability to update:

- Did you consider Baumol effects, the possibility of technological deflation, and the possibility of technological unemployment, and how they affect the profit incentive as tasks are increasingly automated? [My guess is that the effect of all of these is to slow takeoff down, so I'd guess a report that uses simpler models will be noticeably overestimating takeoff speeds.]

- How much does this rely on the accuracy of semi-endogenous growth models? Does this model rely on exponential population growth? [I'm asking because as far as I can tell, work relying on semi-endogenous growth models should be pretty weak evidence. First, the "semi" in semi-endogenous growth usually refers to exogenous exponential population growth, which seems unlikely to be a valid assumption. Second, endogenous growth theory has very limited empirical evidence in favour of it (e.g. 1, 2) and I have the impression that this is true for semi-endogenous growth models too. This wouldn't necessarily be a problem in other fields, but in general I think that economic models with little empirical evidence behind them provide only very weak evidence overall.]

- In section 8, the only uncertainty pointing in favour of fast takeoff is "there might be a discontinuous jump in AI capabilities". Does this mean that, if you don't think a discontinuous jump in AI capabilities is likely, you should expect slower take-off than your model suggests? How substantial is this effect?

- How did you model the AI production function? Relatedly, how did you model constraints like energy costs, data costs, semiconductor costs, silicon costs etc.? [My thoughts: looks like you roughly used a task-based CES model, which seems like a decent choice to me, knowing not much about this! But I'd be curious about the extent to which using this changed your results from Cobb-Douglas.]

- I'm vaguely worried that the report proves too much, in that I'd guess that the basic automation of the industrial revolution also automated maybe 70%+ of tasks by pre-industrial revolution GDP. (Of course, generally automation itself wasn't automated - so I'd be curious on your thoughts about the extent to which this criticism applies at least to the human investment parts of the report.)
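For readers unfamiliar with the semi-endogenous growth models raised in the second question, here is a minimal sketch of the textbook (Jones-style) law of motion, dA/dt = delta * L^lam * A^phi with phi < 1. It is not the report's implementation, and all parameter values and names are illustrative assumptions. In steady state the growth rate of technology A is lam * n / (1 - phi), where n is the growth rate of research input L, so long-run progress depends entirely on input growth.

```python
import math

def long_run_growth(input_growth, phi=0.5, lam=1.0, delta=0.02,
                    years=300, steps_per_year=10):
    """Simulate dA/dt = delta * L**lam * A**phi; return the final growth rate of A.

    A is the technology level and L the research input, which grows
    exponentially at `input_growth` per year. phi < 1 means ideas get
    harder to find as A rises. All defaults are illustrative.
    """
    dt = 1.0 / steps_per_year
    A, L = 1.0, 1.0
    for _ in range(years * steps_per_year):
        A += dt * delta * L ** lam * A ** phi   # Euler step for technology
        L *= math.exp(input_growth * dt)        # exogenous input growth
    return delta * L ** lam * A ** (phi - 1.0)  # instantaneous (dA/dt) / A

for n in (0.0, 0.02, 0.04):
    print(f"input growth {n:.0%}/yr -> technology growth {long_run_growth(n):.2%}/yr")
```

With these assumed parameters, doubling input growth roughly doubles long-run technology growth, and with zero input growth progress slowly peters out. That is why the assumption about how research inputs grow matters so much for this class of model.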

That's all the thoughts that jumped into my head when I read the summary and skimmed the report - sorry if they're all super obvious if I'd read it more thoroughly! Again, super excited to see models with this level of detail, thanks so much!

Tom_Davidson @ 2023-01-23T18:19 (+31)

Thanks for these great questions Ben!

To take them point by point:

- The CES task-based model incorporates Baumol effects, in that after AI automates a task the output on that task increases significantly and so its importance to production decreases. The tasks with low output become the bottlenecks to progress.

- I'm not sure what exactly you mean by technological deflation. But if AI automates therapy and increases the number of therapists by 100X then my model won't imply that the real $ value of the therapy industry increases 100X. The price of therapy falls and so there is a more modest increase in the value of therapy.

- Re technological unemployment, the model unrealistically assumes that when AI automates (e.g.) 20% of tasks, human workers are immediately reallocated to the remaining 80%. I.e. there is no unemployment until AI automates 100% of tasks. I think this makes sense for things like Copilot that automates/accelerates one part of a job; but is wrong for a hypothetical AI that fully automates a particular job. Modelling delays to reallocating human labour after AI automation would make takeoff slower. My guess is that this will be a bigger deal for the general economy than for AI R&D. Eg maybe AI fully automates the trucking industry, but I don't expect it to fully automate a particular job within AI R&D. Most of the action with capabilities takeoff speed is with AI R&D (the main effect of AI automation is to accelerate hardware and software progress), so I don't think modelling this better would affect takeoff speeds by much.

- Profit incentives. This is a significant weakness of the report - I don't explicitly model the incentives faced by firms to invest in AI R&D and do large training runs at all. (More precisely, I don't endogenise investment decisions as being made to maximise future profits, as happens in some economic models. Epoch is working on a model along these lines.) Instead I assume that once enough significant actors "wake up" to the strategic and economic potential of AI, investments will rise faster than they are today. So one possibility for slower takeoff is that AI firms just really struggle to capture the value they create, and can't raise the money to go much higher than (e.g.) $5b training runs even after many actors have "woken up".

- I am using semi-endogenous growth models to predict the rate of future software and hardware progress, so they're very important. I don't know of a better approach to forecasting how investments in R&D will translate to progress, without investigating the details of where specifically progress might come from (I think that kind of research is very valuable, but it was far beyond the scope of this project). I think semi-endogenous growth models are a better fit to the data than the alternatives (e.g. see this). I do think it's a valid perspective to say "I just don't trust any method that tries to predict the rate of technological progress from the amount of R&D investment", but if you do want to use such a method then I think this is the ~best you can do. In the Monte Carlo analysis, I put large uncertainty bars on the rate of returns to future R&D to represent the fact that the historical relationship between R&D investment and observed progress may fail to hold in the future.

- I don't expect the papers you link to to change my mind about this, from reading the abstracts. It seems like your second link is a critique of endogenous growth theory but not semi-endog theory (it says "According to endogenous growth theory, permanent changes in certain policy variables have permanent effects on the rate of economic growth" but this isn't true of semi-endog theories). It seems like your first link is either looking at ~irrelevant evidence or drawing the incorrect conclusion (here's my perspective on the evidence mentioned in its abstract: "the slowing of growth in the OECD countries over the last two decades [Tom: I expect semi-endog theories can explain this better than the neoclassical model. The population growth rate of the scientific workforce has been slowing so we'd expect growth to slow as well; the neoclassical model has (as far as I'm aware) no comparable mechanism for explaining the slowdown.]; the acceleration of growth in several Asian countries since the early 1960s [this is about catch-up growth so I wouldn't expect semi-endog theories to explain it; semi-endog theories are designed to explain growth of the global technological frontier]; studies of the determinants of growth in a cross-country context [again, semi-endog growth models aren't designed to explain this kind of thing at all]; and sources of the differences in international productivity levels [again again, semi-endog growth models aren't designed to explain this kind of thing at all]").

- You could see this as an argument for slower takeoff if you think "I'm pretty sure that looking into the details of where future progress might come from would conclude that progress will be slower than is predicted by the semi-endogenous model", although this isn't my current view.

- One way to think about this is to start from a method you may trust more than using semi-endog models: just extrapolating past trends in tech progress. But you might worry about this method if you expect R&D inputs to the relevant fields to rise much faster than in recent history (because you expect people to invest more and you expect AI to automate a lot of the work). Naively, your method is going to underestimate the rate of progress. So then using a semi-endog model addresses this problem. It matches the predictions of your initial method when R&D inputs continue to rise at their recent historical rate, but predicts faster progress in scenarios where R&D inputs rise more quickly than in recent history.

- > "Does this mean that, if you don't think a discontinuous jump in AI capabilities is likely, you should expect slower take-off than your model suggests? How substantial is this effect?" The results of the Monte Carlo don't include any discontinuous jumps (beyond the possibility that there's a continuous but very-fast transition from "AI that isn't economically useful" to AGI). So adjusting for discontinuities would only make takeoff faster. My own subjective probabilities do increase the probability of very fast takeoff by 5-10% to account for the possibility of other discontinuities.

- "In section 8, the only uncertainty pointing in favour of fast takeoff is "there might be a discontinuous jump in AI capabilities"" There are other ways that I think my conclusions might be biased in favour of slower takeoff, in particular the ones mentioned here.

- "How did you model the AI production function? Relatedly, how did you model constraints like energy costs, data costs, semiconductor costs, silicon costs etc.?"

- In the model the capability of the AI trained just depends on the compute used in training and the quality of AI algorithms used; you combine the two multiplicatively. I didn't model energy/semiconductor/silicon costs (except as implicit in FLOP/$ trends); I didn't model data costs (which feels like a significant limitation).

- The CES task-based model is used as the production function for R&D to improve AI algorithms ("software") and AI chips ("hardware"), and for GDP. It gives slower takeoff than if you used Cobb Douglas bc you get more bottlenecked by the tasks AI still can't perform (e.g. tasks done by humans, or tasks done with equipment like experiments).

- There's a parameter rho that controls how close the behaviour is to Cobb Douglas vs a model with very binding bottlenecks. I ultimately settled on values that make GDP much more bottlenecked by physical infrastructure than R&D progress. This was based on it seeming to me that you could speed up R&D a lot by uploading the smartest minds and running billions of them at 100X speed, but couldn't increase GDP by nearly as much by having those uploads try to provide people with goods and services (holding the level of technology fixed).

- "I'm vaguely worried that the report proves too much, in that I'd guess that the basic automation of the industrial revolution also automated maybe 70%+ of tasks by pre-industrial revolution GDP." I agree with this! I don't think it undermines the report - I discuss it here. Interested to hear pushback if you disagree.
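The bottleneck behaviour described in the points above about Baumol effects and rho can be illustrated with a toy CES aggregate. This is a simplified sketch, not the report's production function: it assumes equal task weights and made-up task outputs, and the function name is hypothetical. As rho approaches 0 the aggregate approaches Cobb-Douglas (a geometric mean); as rho becomes more negative, the tasks with low output bind more tightly.

```python
import math

def ces_output(task_outputs, rho):
    """Equal-weight CES aggregate of per-task outputs (illustrative only).

    rho -> 0 approaches Cobb-Douglas (geometric mean);
    rho -> -infinity approaches min(task_outputs), i.e. fully binding bottlenecks.
    """
    n = len(task_outputs)
    if abs(rho) < 1e-12:
        return math.exp(sum(math.log(x) for x in task_outputs) / n)
    return (sum(x ** rho for x in task_outputs) / n) ** (1.0 / rho)

# Ten tasks with output 1 each; "AI" then raises output on two of them 100X.
baseline = [1.0] * 10
automated = [100.0] * 2 + [1.0] * 8

for rho in (0.0, -0.5, -2.0):
    gain = ces_output(automated, rho) / ces_output(baseline, rho)
    print(f"rho={rho:+.1f}: total output rises {gain:.2f}x")
```

In this toy setup a 100X boost on 20% of tasks raises total output far less than 100X, and the gain shrinks as rho becomes more negative, because the unautomated tasks become the constraint. This is the sense in which a CES model gives slower takeoff than Cobb-Douglas.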

kokotajlod @ 2023-01-23T04:13 (+32)

I'm so excited to see this go live! I've learned a lot from it & consider it to do for takeoff speeds what Ajeya's report did for timelines, i.e. it's an actual fucking serious-ass gears-level model, the best that exists in the world for now. Future work will critique it and build off it rather than start from scratch, I say. Thanks Tom and Epoch and everyone else who contributed!

I strongly encourage everyone reading this to spend 10min playing around with the model, trying out different settings, etc. For example: Try to get it to match what you intuitively felt like timelines and takeoff would look like, and see how hard it is to get it to do so. Or: Go through the top 5-10 variables one by one and change them to what you think they should be (leaving unchanged the ones about which you have no opinion) and then see what effect each change has.

Almost two years ago I wrote this story of what the next five years would look like on my median timeline. At the time I had the bio anchors framework in mind with a median training requirement of 3e29. So, you can use this takeoff model as a nice complement to that story:

- Go to takeoffspeeds.com and load the preset: best guess scenario.

- Set AGI training requirements to 3e29 instead of 1e36

- (Optional) Set software returns to 2.5 instead of 1.25 (I endorse this change in general, because it's more consistent with the empirical evidence. See Tom's report for details & decide whether his justification for cutting it in half, to 1.25, is convincing.)

- (Optional) Set FLOP gap to 1e2 instead of 1e4 (In general, as Tom discusses in the report, if training requirements are smaller then probably the FLOP gap is smaller too. So if we are starting with Tom's best guess scenario and lowering the training requirements we should also lower the FLOP gap.)

- The result:

In 2024, 4% of AI R&D tasks are automated; then 32% in 2026, and then singularity happens around when I expected, in mid 2028. This is close enough to what I had expected when I wrote the story that I'm tentatively making it canon.

Oh, also, a citation about my contribution to this post (Tom was going to make this a footnote but ran into technical difficulties): The extremely janky graph/diagram was made by me in May 2021, to help explain Ajeya's Bio Anchors model. The graph that forms the bottom left corner came from some ARK Invest webpage which I can't find now.

Greg_Colbourn @ 2023-01-23T10:21 (+6)

Very worrying! Can you get OpenAI to do something!? What's the plan? Is a global moratorium on AGI research possible? Should we just be trying for it anyway at this point? Are Google/DeepMind and Microsoft/OpenAI even discussing this with each other?

Vasco Grilo @ 2024-04-09T16:33 (+2)

Hi Daniel,

> In 2024, 4% of AI R&D tasks are automated; then 32% in 2026, and then singularity happens around when I expected, in mid 2028. This is close enough to what I had expected when I wrote the story that I'm tentatively making it canon.

Relatedly, what is your median time from now until human extinction? If it is only a few years, I would be happy to set up a bet like this one.

jacobpfau @ 2023-01-24T20:51 (+11)

My deeply concerning impression is that OpenPhil (and the average funder) has timelines 2-3x longer than the median safety researcher. Daniel has his AGI training requirements set to 3e29, and I believe the 15th-85th percentiles among safety researchers would span 1e31 +/- 2 OOMs. On that view, Tom's default values are off in the tails.
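To make the comparison concrete, here is a rough sketch that places the two training-requirement settings mentioned in this thread (3e29, and the 1e36 of the best-guess preset) against the stated range of 1e31 +/- 2 OOMs. The range itself is the commenter's impression rather than survey data, and the variable names are illustrative.

```python
import math

median_flop = 1e31       # centre of the range stated in the comment
half_width_ooms = 2      # "+/- 2 OOMs"

low = median_flop / 10 ** half_width_ooms    # ~1e29
high = median_flop * 10 ** half_width_ooms   # ~1e33

def ooms_from_median(flop):
    """Signed distance from the stated median, in orders of magnitude."""
    return math.log10(flop) - math.log10(median_flop)

for label, flop in [("Daniel (3e29)", 3e29), ("best-guess preset (1e36)", 1e36)]:
    inside = low <= flop <= high
    print(f"{label}: {ooms_from_median(flop):+.1f} OOMs from median, "
          f"inside 15th-85th range: {inside}")
```

On these numbers 3e29 sits about 1.5 OOMs below the stated median and inside the range, while 1e36 sits about 5 OOMs above it and well outside.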

My suspicion is that funders write off this discrepancy, if noticed, as inside-view bias i.e. thinking safety researchers self-select for scaling optimism. My, admittedly very crude, mental model of an OpenPhil funder makes two further mistakes in this vein: (1) Mistakenly taking the Cotra report's biological anchors weighting as a justified default setting of parameters rather than an arbitrary choice which should be updated given recent evidence. (2) Far overweighting the semi-informative priors report despite semi-informative priors abjectly failing to have predicted Turing-test level AI progress. Semi-informative priors apply to large-scale engineering efforts which for the AI domain has meant AGI and the Turing test. Insofar as funders admit that the engineering challenges involved in passing the Turing test have been solved, they should discard semi-informative priors as failing to be predictive of AI progress.

To be clear, I see my empirical claim about disagreement between the funding and safety communities as most important -- independently of my diagnosis of this disagreement. If this empirical claim is true, OpenPhil should investigate cruxes separating them from safety researchers, and at least allocate some of their budget on the hypothesis that the safety community is correct.

Vasco Grilo @ 2024-01-08T22:00 (+2)

Hi Tom,

We have some reasons to care about takeoff speeds, but are there any plans to explicitly model how they impact AI risk? Are there any other relevant sections in the report besides the one I just linked?