Ryan Greenblatt's Quick takes

By Ryan Greenblatt @ 2024-02-03T17:55 (+3)

Ryan Greenblatt @ 2025-07-27T18:59 (+99)

Slightly hot take: Longtermist capacity/community building is pretty underdone at current margins, and retreats (focused on AI safety, longtermism, or EA) are also underinvested in. By "longtermist community building", I mean community building aimed at longtermism rather than at AI safety. I think retreats in general are underinvested in at the moment. I'm also sympathetic to thinking that general undergrad and high school capacity building (AI safety, longtermist, or EA) is underdone, but this seems less clear-cut.

I think this underinvestment is due to a mix of mistakes on the part of Open Philanthropy (and Good Ventures)[1] and capacity building being lower status than it should be.

Here are some reasons why I think this work is good:

- It's very useful to have people who are actually trying really hard to do the right thing, and such people often come through these sorts of mechanisms. Another way to put this is that flexible, impact-obsessed people are very useful.

- Retreats make things feel much more real to people and result in people being more agentic and approaching their choices more effectively.

- Programs like MATS are good, but they get somewhat different people at a somewhat different part of the funnel, so they don't (fully) substitute.

A large part of why I'm writing this is to try to make this work higher status and to encourage more of this work. Consider yourself to be encouraged and/or thanked if you're working in this space or planning to work in this space.

- ^

I think these mistakes are: underfunding this work, Good Ventures being unwilling to fund some versions of this work, failing to encourage people to found useful orgs in this space, and hiring away many of the best people in this space to instead do (IMO less impactful) grantmaking.

Vaidehi Agarwalla 🔸 @ 2025-07-28T12:27 (+7)

Other reasons (probably more important, if combined):

- wanting to have direct impact (i.e. risk aversion within longtermist interventions)

- personal fit for founding (and specifically founding meta orgs, where impact is even harder to quantify)

- not quite underfunding, but lack of funding diversity if your vision for the org differs from what OP is willing to fund at scale.

- lack of founder-level talent

Dylan Richardson @ 2025-08-01T02:48 (+3)

AI safety pretty clearly swallows longtermist community building. If we want longtermism to be built and developed, it needs to be aimed at very explicitly, not just mentioned on the side. I suspect that general EA group community building is better for this reason too: it isn't overwhelmed by any one object-level cause/career/demographic.

Noah Birnbaum @ 2025-07-31T14:30 (+3)

Some of the arguments I make here are similar.

Ryan Greenblatt @ 2025-07-03T16:37 (+71)

Recently, various groups successfully lobbied to remove the moratorium on state AI bills. They succeeded to a surprising extent despite competing against substantial investment from big tech (e.g. Google, Meta, Amazon). I think people interested in mitigating catastrophic risks from advanced AI should consider working at these organizations, at least to the extent their skills/interests are applicable. This is both because they could often directly work on substantially helpful things (depending on the role and organization) and because this would yield valuable work experience and connections.

I worry somewhat that this type of work is neglected due to being less emphasized and seeming lower status. Consider this an attempt to make this type of work higher status.

Pulling organizations mostly from here and here, we get a list of orgs you could consider working at (specifically on AI policy):

- Encode AI

- Americans for Responsible Innovation (ARI)

- Fairplay (Fairplay is a kids' safety organization which does a variety of advocacy which isn't related to AI. Roles/focuses on AI would be most relevant. In my opinion, working on AI-related topics at Fairplay is most applicable for gaining experience and connections.)

- Common Sense (also a kids' safety organization)

- The AI Policy Network (AIPN)

- Secure AI project

To be clear, these organizations vary in the extent to which they are focused on catastrophic risk from AI (from not at all to entirely).

seanrson @ 2025-07-04T15:32 (+21)

Also want to shout out @Holly Elmore ⏸️ 🔸 and PauseAI's activism in getting people to call their senators. (You can commend this effort even if you disagree with an ultimate pause goal.) It could be worth following them for similar advocacy opportunities.

Ryan Greenblatt @ 2025-05-23T21:07 (+68)

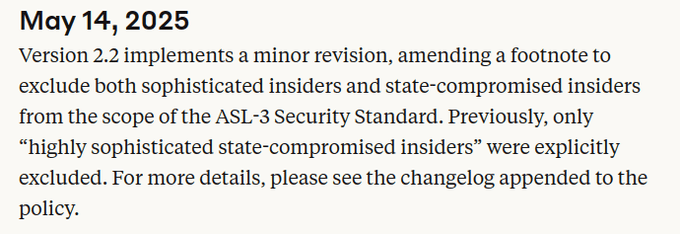

A week ago, Anthropic quietly weakened their ASL-3 security requirements. Yesterday, they announced ASL-3 protections.

I appreciate the mitigations, but quietly lowering the bar at the last minute so you can meet requirements isn't how safety policies are supposed to work.

(This was originally a tweet thread (https://x.com/RyanPGreenblatt/status/1925992236648464774) which I've converted into a quick take. I also posted it on LessWrong.)

What is the change and how does it affect security?

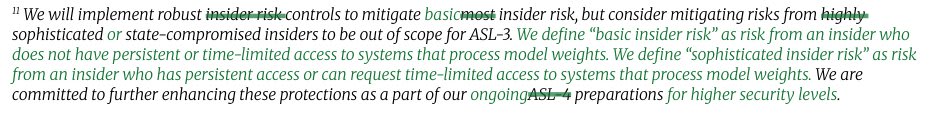

9 days ago, Anthropic changed their RSP so that ASL-3 no longer requires being robust to employees trying to steal model weights if the employee has any access to "systems that process model weights".

Anthropic claims this change is minor (and calls insiders with this access "sophisticated insiders").

But, I'm not so sure it's a small change: we don't know what fraction of employees could get this access and "systems that process model weights" isn't explained.

Naively, I'd guess that access to "systems that process model weights" includes employees being able to operate on the model weights in any way other than through a trusted API (a restricted API that we're very confident is secure). If that's right, it could be a high fraction! So, this might be a large reduction in the required level of security.

If this does actually apply to a large fraction of technical employees, then I'm also somewhat skeptical that Anthropic can actually be "highly protected" from (e.g.) organized cybercrime groups without meeting the original bar: hacking an insider and using their access is typical!

Also, one of the easiest ways for security-aware employees to evaluate security is to think about how easily they could steal the weights. So, if you don't aim to be robust to employees, it might be much harder for employees to evaluate the level of security and then complain about not meeting requirements[1].

Anthropic's justification and why I disagree

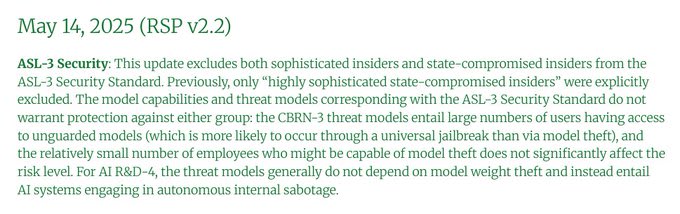

Anthropic justified the change by saying that model theft isn't much of the risk from amateur CBRN uplift (CBRN-3) and that the risks from AIs being able to "fully automate the work of an entry-level, remote-only Researcher at Anthropic" (AI R&D-4) don't depend on model theft.

I disagree.

On CBRN: If other actors are incentivized to steal the model for other reasons (e.g. models become increasingly valuable), it could end up broadly proliferating, which might greatly increase risk, especially as elicitation techniques improve.

On AI R&D: AIs which are over the capability level needed to automate the work of an entry-level researcher could seriously accelerate AI R&D (via fast speed, low cost, and narrow superhumanness). If other less safe (or adversarial) actors got access, risk might increase a bunch.[2]

More strongly, ASL-3 security must suffice up until the ASL-4 threshold: it has to cover the entire range from ASL-3 to ASL-4. ASL-4 security itself is still not robust to high-effort attacks from state actors which could easily be motivated by large AI R&D acceleration.

As of the current RSP, it must suffice until just before AIs can "substantially uplift CBRN [at] state programs" or "cause dramatic acceleration in [overall AI progress]". These seem like extremely high bars indicating very powerful systems, especially the AI R&D threshold.[3]

As it currently stands, Anthropic might not require ASL-4 security (which still isn't sufficient for high effort state actor attacks) until we see something like 5x AI R&D acceleration (and there might be serious issues with measurement lag).

I'm somewhat sympathetic to security not being very important for ASL-3 CBRN, but it seems very important as of the ASL-3 AI R&D threshold and seems crucial before the AI R&D ASL-4 threshold! I think the ASL-3 AI R&D threshold should probably instead trigger ASL-4 security!

Overall, Anthropic's justification for this last-minute change seems dubious and the security requirements they've currently committed to seem dramatically insufficient for AI R&D threat models. To be clear, other companies have worse security commitments.

Concerns about potential noncompliance and lack of visibility

Another concern is that this last-minute change is quite suggestive of Anthropic having been out of compliance with their RSP before they weakened the security requirements.

We have to trust Anthropic quite a bit to rule out noncompliance. This isn't a good state of affairs.

To explain this concern, I'll need to cover some background on how the RSP works.

The RSP requires ASL-3 security as soon as it's determined that ASL-3 can't be ruled out (as Anthropic says is the case for Opus 4).

Here's how it's supposed to go:

- They ideally have ASL-3 security mitigations ready, including the required auditing.

- Once they find the model is ASL-3, they apply the mitigations immediately (if not already applied).

If they aren't ready, they need temporary restrictions.

My concern is that the security mitigations they had ready when they found the model was ASL-3 didn't suffice for the old ASL-3 bar but do suffice for the new bar (otherwise why did they change the bar?). So, prior to the RSP change they might have been out of compliance.

It's certainly possible they remained compliant:

- Maybe they had measures which temporarily sufficed for the old higher bar but which were too costly longer term. Also, they could have deleted the weights outside of secure storage until the RSP was updated to lower the bar.

- Maybe an additional last-minute security assessment (which wasn't required to meet the standard?) indicated inadequate security and they deployed temporary measures until they changed the RSP. It would be bad to depend on a last-minute security assessment for compliance.

(It's also technically possible that the ASL-3 capability decision was made after the RSP was updated. This would imply the decision was only made 8 days before release, so hopefully this isn't right. Delaying evals until an RSP change lowers the bar would be especially bad.)

Conclusion

Overall, this incident demonstrates our limited visibility into AI companies. How many employees are covered by the new bar? What triggered this change? Why does Anthropic believe it remained in compliance? Why does Anthropic think that security isn't important for ASL-3 AI R&D?

I think a higher level of external visibility, auditing, and public risk assessment would be needed (as a bare minimum) before placing any trust in policies like RSPs to keep the public safe from AI companies, especially as they develop existentially dangerous AIs.

To be clear, I appreciate Anthropic's RSP update tracker and that it explains changes. Other AI companies have mostly worse safety policies: as far as I can tell, o3 and Gemini 2.5 Pro are about as likely to cross the ASL-3 bar as Opus 4 and they have much worse mitigations!

Appendix and asides

I don't think current risks are existentially high (if current models were fully unmitigated, I'd guess this would cause around 50,000 expected fatalities per year), and temporarily being at a lower level of security for Opus 4 doesn't seem like that big of a deal. Also, given that security is only triggered after a capability decision, the ASL-3 CBRN bar is supposed to include some conservativeness anyway. But, my broader points around visibility stand, and potential noncompliance (especially unreported noncompliance) should be worrying even while the stakes are relatively low.

You can view the page showing the RSP updates including the diff of the latest change here: https://www.anthropic.com/rsp-updates. Again, I appreciate that Anthropic has this page and makes it easy to see the changes they make to the RSP.

I find myself quite skeptical that Anthropic actually could rule out that Sonnet 4 and other models weaker than Opus 4 cross the ASL-3 CBRN threshold. How sure is Anthropic that it wouldn't substantially assist amateurs even after the "possible performance increase from using resources that a realistic attacker would have access to"? I feel like our current evidence and understanding are so weak, and models already substantially exceed virology experts at some of our best proxy tasks.

The skepticism applies similarly or more to other AI companies (and Anthropic's reasoning is more transparent).

But, this just serves to further drive home ways in which the current regime is unacceptable once models become so capable that the stakes are existential.

One response is that systems this powerful will be open sourced or trained by less secure AI companies anyway. Sure, but the intention of the RSP is (or was) to outline what would "keep risks below acceptable levels" if all actors follow a similar policy.

(I don't know if I ever bought that the RSP would succeed at this. It's also worth noting there is an explicit exit clause Anthropic could invoke if they thought proceeding outweighed the risks despite the risks being above an acceptable level.)

This sort of criticism is quite time-consuming and costly for me. For this reason, there are specific concerns I have about AI companies which I haven't discussed publicly. This is likely true for other people as well. You should keep this in mind when assessing AI companies and their practices.

- ^

It also makes it harder for these complaints to be legible to other employees, whereas other employees might be able to more easily interpret arguments about what they could do.

- ^

It looks like AI 2027 would estimate roughly 2x AI R&D acceleration for a system which was just over this ASL-3 AI R&D bar (as it seems somewhat more capable than the "Reliable agent" bar). I'd guess more like 1.5x at this point, but either way this is a big deal!

- ^

Anthropic says they'll likely require a higher level of security for this "dramatic acceleration" AI R&D threshold, but they haven't yet committed to this nor have they defined a lower AI R&D bar which results in an ASL-4 security requirement.

Owen Cotton-Barratt @ 2025-05-26T22:04 (+9)

I appreciate the investigation here.

I'm not sure whether I agree that "quietly lowering the bar at the last minute so you can meet requirements isn't how safety policies are supposed to work". (Not sure I disagree; but going to try to articulate a case against).

I think in a world where you understand the risks well ahead of time, of course this isn't how safety policies should work. In a world where you don't understand the risks well ahead of time, you can get more clarity as the key moments approach, and this could lead you to rationally judge that a lower bar would be appropriate. In a regime of voluntary safety policies, the idea seems to me to be that each actor makes their own judgements about what policy would be safe, and it seems to me like you absolutely could expect to see this pattern some of the time from actors just following their own best judgements, even if those are unbiased.

Of course:

- This is a pattern that you could also see because judgements are getting biased (your discussion of the object level merits of the change is very relevant to this question)

- The difficulty, from the outside, of distinguishing between cases where a fair judgement is behind the change vs a biased one is a major reason to potentially prefer regimes other than "voluntary safety policy" -- but I don't really think that it's good for people in the voluntary safety policy regime to act as though they're already under a different regime (e.g. because this might slow down actually moving to a different regime)

(Someone might object that if people can make these changes there's little point in voluntary safety policies -- the company will ultimately do what it thinks is good! I think there would be something to this objection, and that these voluntary policies provide less assurance than is commonly supposed; nonetheless I still think they are valuable, not for the commitments they provide but for the transparency they provide about how companies at a given moment are thinking about the tradeoffs.)

Ryan Greenblatt @ 2025-05-26T22:22 (+9)

From my perspective, a large part of the point of safety policies is that people can comment on the policies in advance and provide some pressure toward better policies. If policies are changed at the last minute, then the world may not have time to understand the change and respond before it is too late.

So, I think it's good to create an expectation/norm that you shouldn't substantially weaken a policy right as it is being applied. That's not to say that a reasonable company shouldn't do this some of the time, just that I think it should by default be considered somewhat bad, particularly if there isn't a satisfactory explanation given. In this case, I find the object level justification for the change somewhat dubious (at least for the AI R&D trigger) and there is also no explanation of why this change was made at the last minute.

Owen Cotton-Barratt @ 2025-05-26T22:47 (+2)

I guess I'm fairly sympathetic to this. It makes me think that voluntary safety policies should ideally include some meta-commentary about how companies view the purpose and value-add of the safety policy, and meta-policies about how updates to the safety policy will be made -- in particular, that it might be good to specify a period for public comments before a change is implemented. (Even a short period could add some value.)

Ryan Greenblatt @ 2024-02-03T17:55 (+12)

Reducing the probability that AI takeover involves violent conflict seems leveraged for reducing near-term harm

Often in discussions of AI x-safety, people seem to assume that misaligned AI takeover will result in extinction. However, I think AI takeover is reasonably likely to not cause extinction due to the misaligned AI(s) effectively putting a small amount of weight on the preferences of currently alive humans. Some reasons for this are discussed here. Of course, misaligned AI takeover still seems existentially bad and probably eliminates a high fraction of future value from a longtermist perspective.

(In this post when I use the term “misaligned AI takeover”, I mean misaligned AIs acquiring most of the influence and power over the future. This could include “takeover” via entirely legal means, e.g., misaligned AIs being granted some notion of personhood and property rights and then becoming extremely wealthy.)

However, even if AIs effectively put a bit of weight on the preferences of current humans, it's possible that large numbers of humans die due to violent conflict between a misaligned AI faction (likely including some humans) and existing human power structures. In particular, it might be that killing large numbers of humans (possibly as collateral damage) makes it easier for the misaligned AI faction to take over. By large numbers of deaths, I mean hundreds of millions dead or more, possibly billions.

But, it's somewhat unclear whether violent conflict will be the best route to power for misaligned AIs, and this also might be possible to influence. See also here for more discussion.

So while one approach to avoid violent AI takeover is to just avoid AI takeover, it might also be possible to just reduce the probability that AI takeover involves violent conflict. That said, the direct effects of interventions to reduce the probability of violence don't clearly matter from an x-risk/longtermist perspective (which might explain why there hasn't historically been much effort here).

(However, I think trying to establish contracts and deals with AIs could be pretty good from a longtermist perspective in the case where AIs don't have fully linear returns to resources. Also, generally reducing conflict seems maybe slightly good from a longtermist perspective.)

So how could we avoid violent conflict conditional on misaligned AI takeover? There are a few hopes:

- Ensure a bloodless coup rather than a bloody revolution

- Ensure that negotiation or similar results in avoiding the need for conflict

- Ensure that a relatively less lethal takeover strategy is easier than more lethal approaches

I'm pretty unsure about which approaches here look best or are even at all tractable. (It’s possible that some prior work targeted at reducing conflict from the perspective of S-risk could be somewhat applicable.)

Separately, this requires that the AI puts at least a bit of weight on the preferences of current humans (and isn't spiteful), but this seems like a mostly separate angle and it seems like there aren't many interventions here which aren't covered by current alignment efforts. Also, I think this is reasonably likely by default due to reasons discussed in the linked comment above. (The remaining interventions which aren’t covered by current alignment efforts might relate to decision theory (and acausal trade or simulation considerations), informing the AI about moral uncertainty, and ensuring the misaligned AI faction is importantly dependent on humans.)

Returning back to the topic of reducing violence given a small weight on the preferences of current humans, I'm currently most excited about approaches which involve making negotiation between humans and AIs more likely to happen and more likely to succeed (without sacrificing the long run potential of humanity).

A key difficulty here is that AIs might have a first-mover advantage, and getting in a powerful first strike without tipping its hand might be extremely useful for the AI. See here for more discussion (also linked above). Thus, negotiation might look relatively bad to the AI from this perspective.

We could try to have a negotiation process which is kept secret from the rest of the world or we could try to have preexisting commitments upon which we'd yield large fractions of control to AIs (effectively proxy conflicts).

More weakly, just making negotiation seem like a possibility at all might be quite useful.

I’m unlikely to spend much if any time working on this topic, but I think this topic probably deserves further investigation.

Ryan Greenblatt @ 2024-02-09T19:00 (+2)

After thinking about this somewhat more, I don't really have any good proposals, so this seems less promising than I was expecting.

harfe @ 2024-02-03T23:37 (+1)

In the context of a misaligned AI takeover, making negotiations and contracts with a misaligned AI in order to allow it to take over does not seem useful to me at all.

A misaligned AI that is in power could simply decide to walk back any promises and ignore any contracts it agreed to. Humans cannot do anything about it because they lost all the power at that point.

Ryan Greenblatt @ 2024-02-04T00:59 (+2)

Some objections:

- Building better contract enforcement ability might be doable. (Though this is pretty tricky, both for enforcing obligations on humans and for forcing AIs to do things.)

- Negotiation could involve tit-for-tat arrangements.

- Unconditional surrenders can reduce the need for violence if we ensure there is some process for demonstrating that the AI would have succeeded at takeover with very high probability. (Note that I'm assuming the AI puts some small amount of weight on the preferences of currently alive humans as I discuss in the parent.)