The availability bias in job hunting

By Vaidehi Agarwalla 🔸 @ 2022-04-30T14:53 (+106)

TL;DR: EA talent is currently suboptimally allocated, resulting in lower impact for the movement. There are many reasons for this, and in this post I talk about one of them: attention misallocation [1], and specifically the availability bias around certain careers and paths.

Description: A janky but entertaining image of impact lost due to attention misallocation over time.

Linguistic note: I will refer to the career paths that are given the most attention in EA as “highlighted paths”.

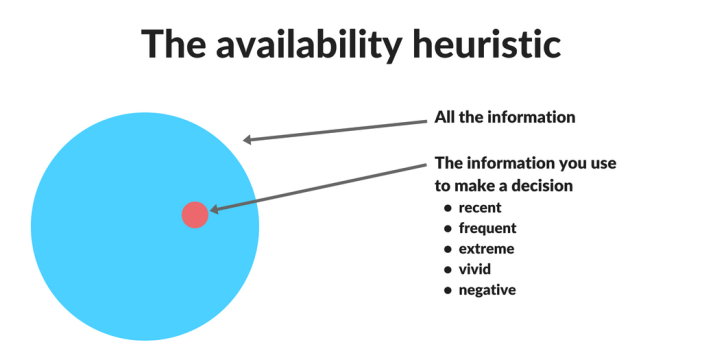

The availability bias is “a mental shortcut that relies on immediate examples that come to a given person's mind when evaluating a specific topic, concept, method or decision.” Many factors cause some facts to be recalled more easily than others (e.g. if the fact is recent, frequent, extreme, vivid, negative etc.)

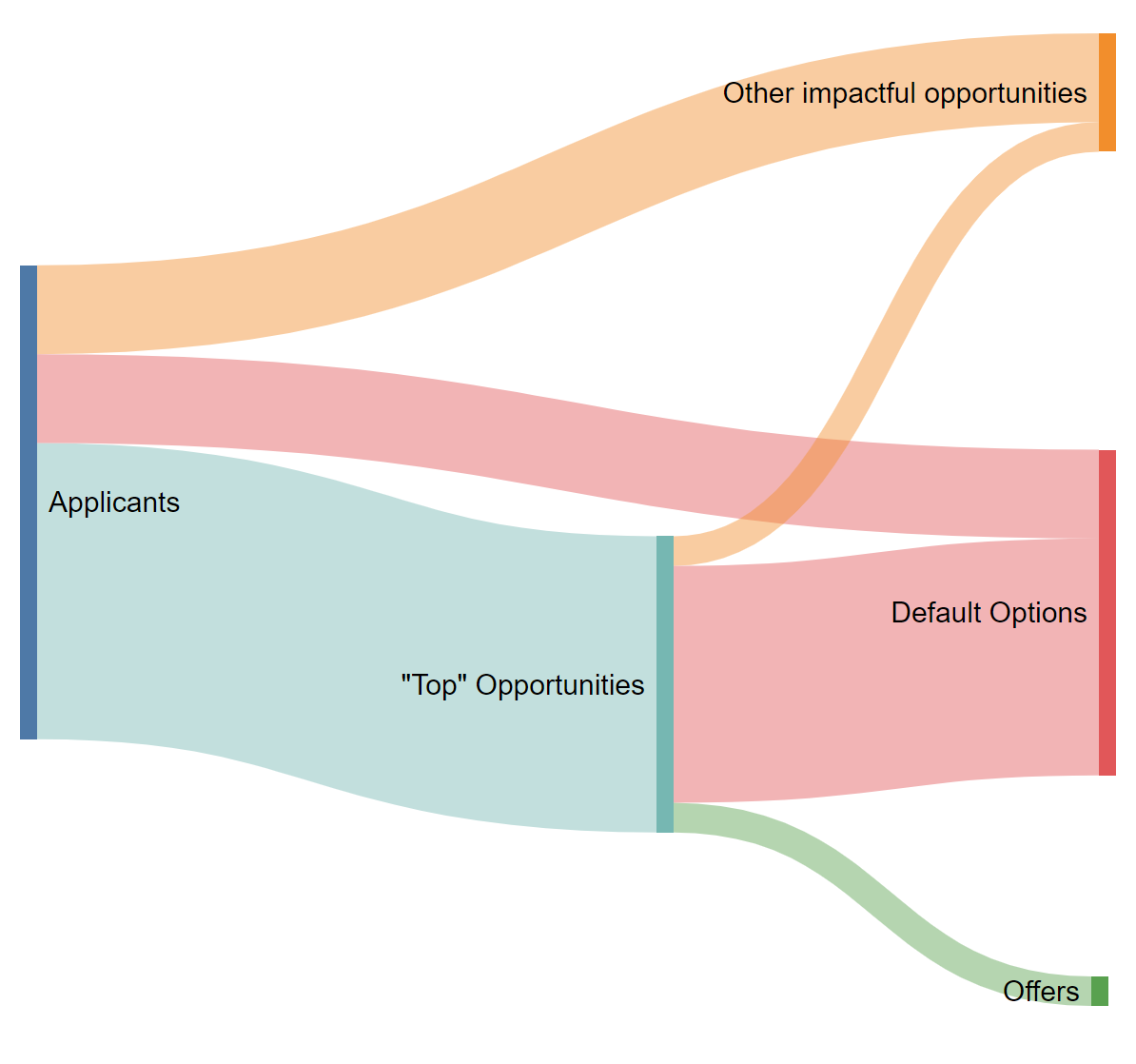

In the EA context, people exhibit the availability bias towards a small subset of very competitive, geography-limited, low-absorbency career paths and roles (henceforth highlighted paths). [2] How certain are we that this is actually happening? One proxy for understanding attention is to look at job applications, which are fairly strong signals of intent on the part of community members. Jobs at some EA-aligned organisations are notoriously hard to get, and getting rejected from such opportunities comes with costs. The mismatch between attention and opportunities is illustrated in the stylized Sankey plot below:

How does the availability bias work?

Highlighted paths get disproportionate attention and status from the community, but cannot absorb enough people. The way these opportunities are perceived and experienced creates the conditions for availability bias. Additionally, there are limited resources and support for other, higher-absorbency paths, and this lack of information and support further exacerbates the problem.

It makes highlighted paths...

socially acceptable

EA changes people’s perceptions of different opportunities. In particular, it raises the bar for what counts as effective. People consider a smaller set of opportunities to be acceptable and may feel that a given opportunity needs endorsement from the EA community to be acceptable.[3] Because so few opportunities are seen as clearing this bar, they get disproportionate attention.

(socially) desirable

The more people get involved with EA and deepen their engagement, the more likely they are to want direct impact as well. It actually is more desirable to work for an EA organisation, because EA jobs provide scarce non-monetary goods such as:

- Social status

- Meaning-making / life orientation

- A sense of having a near-maximal impact

- Being part of a value-aligned group

easier to access (not to actually get)

Job opportunities for highlighted paths at existing EA organisations feel more accessible because they are publicised repeatedly, concrete, and convenient.

- Repetition: You are much more likely to apply if you hear about a role at a careers fair, on job boards, on social media and the Forum, and through referrals from group organisers and friends.

- Concrete: working at an existing org is a clearer, more concrete option than creating your own role (which is possible in some fields, e.g. policy).

- Convenient: Some publicity methods, like job boards or careers fairs, make finding certain opportunities very convenient. Curated job boards present you with relevant and up-to-date openings, are easy to search, and appear comprehensive (sometimes listing hundreds of vacancies). Careers fairs let you network with staff from the represented organisations, which both increases the likelihood of people applying and may appear to (and actually) improve their chances of getting the job. This also makes it easy to anchor on such methods, because the alternative to a job board is to look up lots of organisations yourself, and the alternative to a careers fair is to proactively reach out and network with people.

It adds friction for people trying to pursue independent paths

People are always making decisions under imperfect information - there are good practical reasons to limit the pool of options considered - but I think that the above factors systematically bias us away from certain kinds of jobs.

They create a “headlight” effect [4]: some paths and roles are much better illuminated than they previously were, while others are left completely in the dark. If given a choice, individuals prefer jobs that are more accessible. So individuals who want to pursue their own paths actually have *more* work to do than before they discovered EA, because default career paths no longer cut it once the bar has been raised. If they want to go off the beaten path, they'll need to research their own opportunities, find ways to network or gather information about those jobs, and try to get warm referrals. This is hard work, and it's reasonable (albeit frustrating) that not everyone is willing to do it.

We haven’t made enough progress to address the availability bias

Nothing I’ve said here is particularly novel - there have been countless posts on this topic - the SHOW framework encourages EAs to go outside of EA, since EA can be a career endpoint. Multiple career advisors have said they think most impactful jobs are outside of EA. There’s a whole Forum tag discussing the question of EA vs non-EA jobs. It’s been 3 years since these arguments were first widely discussed by the community, but it seems like we still have a lot of progress to make.

I think we are broadly moving in the right direction, with new career organisations like Probably Good and Animal Advocacy Careers, and meta organisations like High Impact Professionals (and various professional EA groups) and Training for Good actively working on issues in this space. I think this is just the start.

What can we do?

Changing attitudes towards how people approach their careers

It seems the discourse so far has not been enough to bring about the deeper culture change needed to address issues like misplaced deference. Why could this be? Some hypotheses:

- There hasn’t been enough discussion: I think this is unlikely (see above). It seems like there has been a lot of private and public discussion about this issue, but there hasn’t been much resolution.

- There hasn’t been the right kind of discussion: This post is an attempt to reframe the discussion around concrete mechanisms (the availability bias) and solutions (this section). Perhaps there need to be more solution-proposals to help us map out the possible solution space?

- Discussing just isn’t sufficient - something else is needed: I think this is the most plausible explanation. Past discussions have not always called for concrete changes, but I think there are many things worth experimenting with, which many actors could plausibly take on.

Some very tentatively suggested experiments, in order of increasing resource intensity:

- Just tell people why being proactive is really important: Be really clear that to be successful, you need to be proactive and find opportunities yourself (not just apply for things within the EA community, but carve out your own path).

- Give people an accountability structure: Work together in a workshop to do career planning with a focus on the nitty-gritty (sending out networking emails, drafting and submitting applications, getting resume feedback).

- Career coaching / Personalised troubleshooting: 1-1 meetings to help coach people to overcome personal bottlenecks. I previously wrote a very rough proposal for a career coaching service.

- Create structures that enable people to create or identify opportunities for themselves. For example, we might teach people how to develop a compelling project proposal, organise a workshop, distil research in an EA-related field such as AI alignment, or identify a knowledge or resource gap in the EA community.

Improving careers advice information & opportunities for existing community members

How can we overcome the binary between a direct-work EA job and the default safe option for the existing community?

One option is to point out that other options exist and make them socially acceptable. There are many impactful opportunities that we have not yet discovered or defined, which we might want aligned people to enter, and where they can gain relevant career capital for the paths they want to pursue (e.g. build strong networks, have direct influence or provide information). We need to create respected, impactful, high-absorbency careers.

There is evidence that this approach can work. 80,000 Hours' emphasis on the need for operations staff in 2018 encouraged many people to try it out, and at least partially helped spark the operations camp. The idea of EA consultancies had been floating around for a while, but the "EA needs consultancies" post created a reference point for why they are valuable and validated this type of project. Anecdotally, the recent focus on PA work seems to have gotten some people considering this path.

The 80,000 Hours job board and other EA job boards could aim to list a wider variety of roles from non-EA-aligned organisations, perhaps first researching key institutions that could be good for gaining career capital (e.g. entry-level roles across a variety of career paths), and emphasising those through changes to the site's UX (e.g. a filtered page of “Jobs suitable for fresh graduates” showing a subset of jobs, strategically linked from articles targeted at early-career EAs). [5]

Regional and national groups can engage in local priorities research to develop more targeted, geography-specific careers advice. Field and profession building can also increase the number of people available to talk to about practical career advice (e.g. how do I get into a particular policy path?). It also provides more opportunities for networking and for accessing opportunities that may not be possible to highlight at the career-path level (e.g. those that are very connection-dependent).

Company-specific community building can help build within-cohort motivation for EAs pursuing these opportunities, and make it more likely that people feel these careers are socially accepted and okay.

All of the above make it easier for people to take risks - especially when doing something odd or unusual - by providing them with resources to help them feel less alone and more supported.

Targeted outreach for existing defined roles

Let's make the most of well-defined opportunities - both specific jobs and more vaguely defined roles like “entrepreneur” - through targeted outreach to relevant groups.

Let’s make it as easy as possible for relevant people to find the opportunities we think are really impactful and that they are good fits for, whether through targeted outreach to specific groups, getting opportunities listed on specific job boards, tabling at the right careers fairs, headhunting, or sending people personalised job recommendations.

This could mean starting recruiting agencies which do focused outreach via 1-1 advising, independently or collaboratively running introductory programs with existing groups (e.g. startup incubators like OnDeck), content outreach which could feed into regular community building efforts (e.g. 40,000 Hours) or something else entirely.

This also means building talent pipelines in advance. Historically, career advice seems to have been more reactive than proactive. How can we change that by modelling different possible talent needs ahead of time and building talent pipelines 1-3 years in advance, rather than trying to create an entrepreneurial culture in a matter of months?

Thanks to Benjamin Skubi, Arjun Khandelwal, Marisa Jurcyzk and Aaron Mayer for suggestions and feedback!

[1] We talk a lot about talent and funding allocation, but I think the dynamics around attention (of community members) are often overlooked in explanations. Understanding the mechanisms by which attention is allocated (consciously or not) can help prominent meta actors and organisations understand the impact their actions have on the community as a whole.

[2] Although the career paths promoted and discussed by 80,000 Hours are quite broad, they are 1) still quite competitive and 2) people anchor onto specific roles, jobs or opportunities within those paths, often those at explicitly EA-aligned organisations. Thus when I say "highlighted paths" I am referring to the bits that are actually highlighted, keeping in mind that this is not always a specific job or role, but some unrepresentative cluster of them which I group together as a "path".

[3] Note that the same phenomenon can occur with donation decisions.

[4] If there's an established term for this, please let me know - I don't think availability bias quite explains this effect. Maybe it does.

[5] I’ve shared this feedback with the new 80,000 Hours job board curator, who was very receptive to it - I encourage you to share your feedback on the job board if you have any!

samuel @ 2022-04-30T17:14 (+8)

Great post/suggestions; I especially agree with targeted outreach. I want to amplify something that's touched on but not explicitly stated:

EA is simply a lens/framework - you can apply these principles anywhere, and the impact may be significant! I work in environmental sustainability / climate change mitigation and notice that the movements closely mirror each other because:

- Maximizing impact is the overarching principle (at least theoretically...)

- It’s a rapidly growing and trendy field.

- Until now, amateurs/volunteers/hobbyists have done a lot of the work.

In both EA and sustainability, people clamor for high-profile direct impact roles, but they're incredibly competitive, the roles may lack the imagined leverage, and candidates spend an outsized amount of time trying to get them. It’s difficult to quantify, but many (most?) people will be more impactful applying an EA framework to non-EA-specific work. The EA movement is still nascent enough that it makes sense to encourage people to apply to EA-specific roles or start new organizations, but eventually the messaging will shift to how you can apply EA to any job you take, not how you can become an EA superstar.

samuel @ 2022-04-30T17:22 (+4)

Adding this as a separate comment to maintain some organization - I've mentioned this in comments on other posts, but I really think that there's room for an organization or mechanism that identifies and rewards undervalued EA-related work that's already being done at existing non-EA institutions. In the context of your post, it would further normalize the idea that plenty of good EA work happens outside of EA.

Linda Linsefors @ 2022-05-02T18:26 (+2)

80k podcast does this (more identify than reward, but still). But I agree that more would be good.

Elizabeth @ 2023-08-28T19:26 (+3)

I think this is an important point, and one I worry about a lot. But I worry that a naive switch in emphasis does more harm than good.

Your post focuses on people who could do well on a more independent path, if only they'd consider it. But there are a lot of people for whom that's not true: they lack the temperament, or the skills, or the knowledge. Some people's suitability will change with time, but I think a majority won't, and that's fine. Or at least, recognizing it as fine leads to better outcomes.

A complementary solution is to develop more work that can absorb people who aren't currently ready to leave highlighted paths. I think Charity Entrepreneurship does great work by creating an intermediate path and helping people transition. I know less about the implementation of the push for ops, but it seems like a conceptually good way to turn capable generalists into esoteric epistemic work. As a for-profit company, Wave[1] can offer lots of positions.

I think you're right that of the people who can create their own paths, most will do more good by doing so. But that's a really high bar, and I don't want people to rush for it prematurely.

[1] Full disclosure: I worked there 6 years ago.