Don't Be Bycatch

By DirectedEvolution @ 2021-03-10T05:28 (+387)

It's a common story. Someone who's passionate about EA principles, but has little in the way of resources, tries and fails to do EA things. They write blog posts, and nothing happens. They apply to jobs, and nothing happens. They do research, and don't get that grant. Reading articles no longer feels exciting; it feels like a chore, or worse, a reminder of their own inadequacy. To anybody who finds themselves in this place: I heartily sympathize, and I encourage you to disentangle yourself from this painful situation any way you can.

Why does this happen? Well, EA has two targets.

1. Subscribers to EA principles whom the movement wants to become big donors or effective workers.

2. Big donors and effective workers whom the movement wants to subscribe to EA principles.

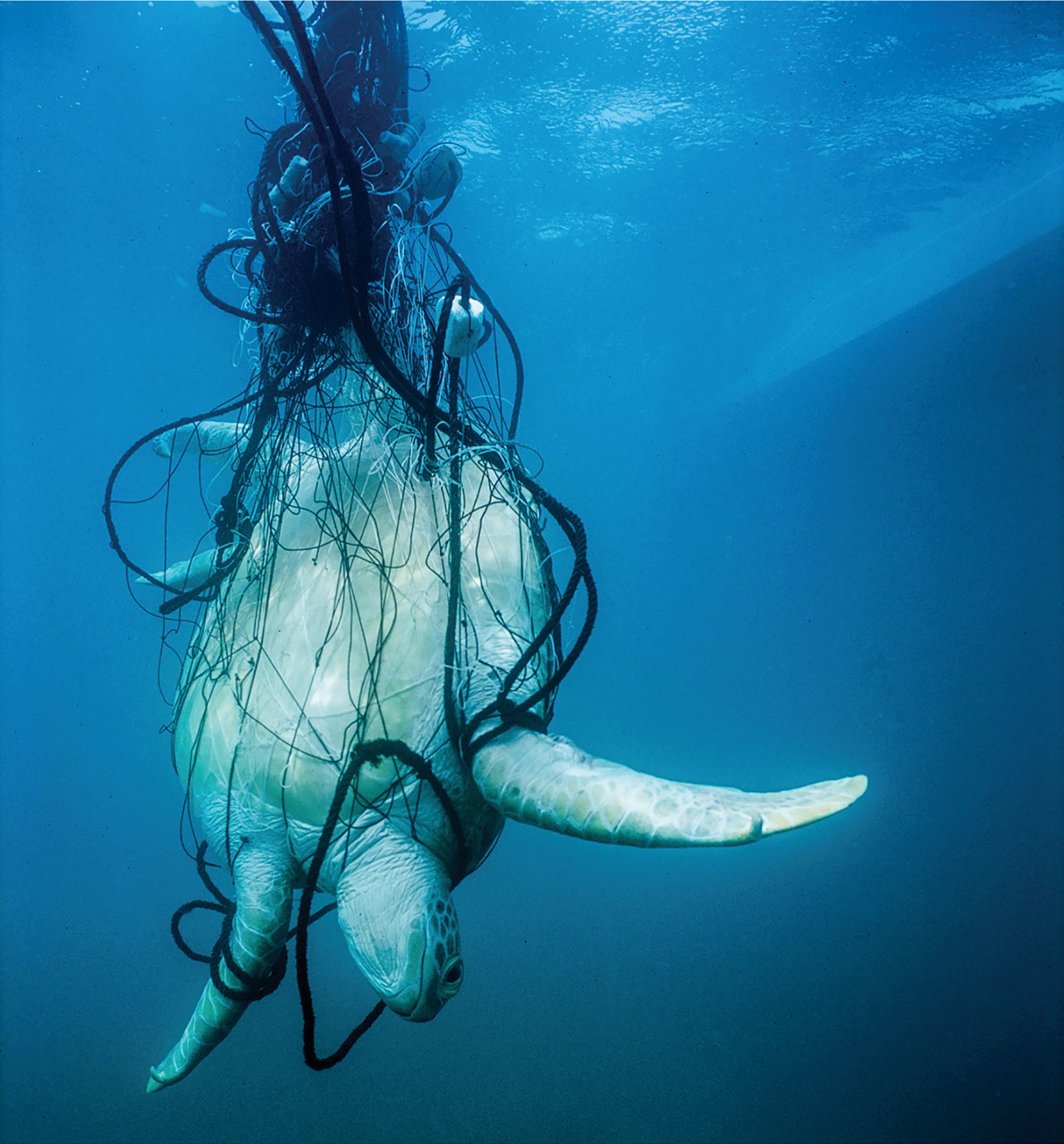

I won't claim to know what weight this community and its institutions give to (1) vs. (2). But when we set out to catch big fish, we risk turning the little fish into bycatch. The technical term for this is churn.

Part of the issue is the planning fallacy. When we're setting out, we underestimate how long and costly it will be to achieve an impact, and overestimate what we'll accomplish. The further above average you aim, the more likely you are to fall short.

And another part is expectation-setting. If the expectation right from the get-go is that EA is about quickly achieving big impact, almost everyone will fail, and think they're just not cut out for it. I wish we had a holiday that was the opposite of Petrov Day, where we honored somebody who went a little bit out of their comfort zone to try and be helpful in a small and simple way. Or whose altruistic endeavor was passionate, costly, yet ineffective, and who tried it anyway, changed their mind, and valued it as a learning experience.

EA organizations and writers are doing us a favor by presenting a set of ideas that speak to us. They can't be responsible for addressing all our needs; that's something we need to figure out for ourselves. EA is often criticized for its "think global" approach. But the EA community is our local, our global local. How do we help each other to help others?

From one little fish in the sEA to another, this is my advice:

- Don't aim for instant success. Aim for 20 years of solid growth. Alice wants to maximize her chance of a 1,000% increase in her altruistic output this year. Zahara's trying to maximize her chance of a 10% increase in her altruistic output. They're likely to do very different things to achieve these goals. Don't be like Alice. Be like Zahara.

- Start small, temporary, and obvious. Prefer the known, concrete, solvable problem to the quest for perfection. Yes, running an EA book club or, gosh darn it, picking up trash in the park is a fine EA project to cut our teeth on. If you donate 0% of your income, donating 1% of your income is moving in the right direction. Offer an altruistic service to one person. Interview one person to find out what their needs are.

- Ask, don't tell. When entrepreneurs do market research, it's a good idea to avoid telling the customer about the idea. Instead, they should ask the customer about their needs and problems. How do they solve their problems right now? Then they can go back to the Batcave and consider whether their proposed solution would be an improvement.

- Let yourself become something; just do it a little more gradually. It's good to keep your options open, but EA can be about slowing down and easing the process of commitment, increasing your ability to turn and bend. It doesn't have to be about hard stops and hairpin turns. It's OK to take a long time to make decisions and figure things out.

- Build each other up. Do Zoom calls. Ask each other questions. Send a message to a stranger whose blog posts you like. Form relationships, and care about those relationships for their own sake. That is literally what EA community development is about; a community of like-minded friends is far stronger than an organization of ideologues.

- You don't have to brand everything as EA. If you want to encourage your friends to donate to MIRI or GiveWell, you can just talk about those specific organizations. An argument that's true often doesn't need to be argued. "They work to keep AI technology safe for humanity" and "they give bed nets to prevent malaria" are causes that kind of sell themselves. If people want to know why you recommend them, you'll be able to answer very well.

- Be a founder and an instigator, even if the organization is temporary, the activity incomplete. Do a little bit of everything. Have the guts to write for this forum, if you have time. Organize an event with a friend. Buy a domain name and throw together a website.

- Stay true to the principles, even if you're not sure how to put them into practice.

- Don't be bycatch. It's OK to come in and out of EA, and back in again if you want to. The best thing you can possibly do for the community is make EA work for you, rather than just making yourself work for EA.

NunoSempere @ 2021-03-12T10:34 (+67)

tl;dr: I like the post. One thing that I think it gets wrong is that if "picking up trash in the park is a fine EA project to cut our teeth on", then that is a sorry state for EA to find itself in.

I think that a thing that this post gets wrong is that EA seems to be particularly prone to generating bycatch, and although there are solutions at the individual level, I'd also appreciate having solutions at higher levels of organization. For example, the solution to "you find yourself writing not-so-valuable blogposts" is probably to ask a mentor to recommend you valuable blog posts to write.

One proposal to do that was to build what this post calls a "hierarchical networked structure", in which people have someone to ask about which blog posts or research directions would be valuable, and Aaron Gertler is there to offer editing; further along the way, EA groups have mentors who have an idea of which EA jobs are particularly valuable to apply to and which are particularly likely to generate disillusionment, and EA group mentors themselves have someone to ask for advice, and so on. This to some extent already exists; I imagine that this post is valuable enough to get sent on the EA newsletter, which means that involved members in their respective countries will read it and maybe propagate its ideas. But there is still a way to go.

Another solution in that space would be to have a forecasting-based decentralized system, where essentially the same thing happens (e.g., good blog posts to write or small projects to do get recommended, career hopes get calibrated, etc.), but which I imagine could be particularly scalable.

We can also look at past movements in history. In particular, General Semantics also had this same problem, and a while ago I speculated that this led to its doom. Note also that religions don't have the problem of bycatch at all.

AllAmericanBreakfast @ 2021-03-12T15:50 (+8)

That’s good feedback and a complementary point of view! I wanted to check on this part:

“I think that a thing that this post gets wrong is that EA seems to be particularly prone to generating bycatch, and although there are solutions at the individual level, I'd also appreciate having solutions at higher levels of organization.”

Are you saying that you think EA is not particularly prone to generating bycatch? Or that it is, but it’s a problem that needs higher-level solutions?

NunoSempere @ 2021-03-12T16:35 (+21)

Yeah, that's not my proudest sentence. I meant the latter: that it is particularly prone to generating bycatch, and hence it would benefit from higher-level solutions. In your post, you try to solve this at the level of the little fish, but addressing it at the fisherman level strikes me as a better (though complementary) idea.

tessa @ 2021-03-11T03:13 (+59)

I really liked the encouraging tone of this― "from one little fish in the sEA to another" was so sweet― and like the suggestion to instigate small / temporary / obvious projects. It reminds me a bit of the advice in Dive In, which I totally failed to integrate when I first read it, but which now feels very spot on; I spent ages agonising over whether my project ideas were Effective Enough and lost months, even years, that could have been spent building imperfect things and nurturing competence and understanding.

MJusten @ 2021-03-11T21:35 (+51)

This really resonated with a young EA like myself. EA totally transformed the way I think about my career decisions, but I quickly realized how competitive jobs at the organizations I looked up to were. (The 80k job board is a tough place for an undergraduate to NOT feel like an imposter.) This didn't discourage me from EA in general, but it did leave me with some uncertainty about how much I should let EA dictate my pursuits. This post offered some excellent reassurances and reminders to stay grounded.

Inspired me to leave my first comment on the forum. Thank you for the lovely post :)

Peter_Hurford @ 2021-03-12T20:57 (+29)

Similar to "Effective Altruism is Not a Competition"

Neel Nanda @ 2021-03-11T11:21 (+29)

Thanks a lot for writing this! I think this is a really common trap to fall into, and I see it a lot, both in others and in myself.

To me, this feels pretty related to the trap of guilt-based motivation: taking the goals that I care about, thinking of them as 'I should do this' or as obligations, and feeling bad and guilty when I don't meet them. This combines with unrealistically high standards, based on a warped and perfectionist view of what I 'should' be capable of, shaped by hindsight bias, the planning fallacy, and what I think the people around me are capable of. Together, these mean that I set myself standards I can never really meet, feel guilty for failing to meet them, and ultimately build up aversions that stop me caring about whatever I'm working on and make me flinch away from it.

This is particularly insidious, because I find the intention behind this is often pure and important to me. It comes from a place of striving to be better, of caring about things, and wanting to live in consistency with my values. But in practice, this intention, plus those biases and failure modes, results in me doing far worse than I could.

I find a similar mindset to your first piece of advice useful: I imagine a future version of myself that is doing far better than I am today, and ask how I could have gotten there. And I find that I'd be really surprised and confused if I suddenly got way better one day. But that it's plausible to me that each day I do a little bit better than before, and that, on average, this compounds over time. Which means it's important to calibrate my standards so that I expect myself to do a bit better than what I have been realistically capable of before.

If you resonate with that, I wrote a blog post called Your Standards Are Too High on how I (try to) deal with this problem. And the Replacing Guilt series by Nate Soares is phenomenally good, and probably one of the most useful things I've ever read regarding my own mental health.

David_Kristoffersson @ 2021-03-15T23:02 (+28)

What I appreciate the most about this post is simply just the understanding it shows for people in this situation.

It's not easy. Everyone has their own struggles. Hang in there. Take some breaks. You can learn, you can try something slightly different, or something very different. Make sure you have a balanced life, and somewhere to go. Make sure you have good plan B's (e.g., myself, I can always go back to the software industry). In the for-profit and wider world, there are many skills you can learn better than you would working at an EA org.

Ben_West @ 2021-03-11T23:20 (+16)

Ask, don't tell.

This is really good advice, at least for a subset of people.

Whether someone is problem-oriented ("the shortage of widgets in EA") versus solution-oriented ("find a way to use my widget-making skills") often gives me a strong signal of how likely they are to be successful.

I'd add on that sharing the answers people give to your questions (e.g. on this forum) is helpful. The set of things EA needs is vast, and there's no reason for us to all start from scratch in figuring out how to help.

Milan_Griffes @ 2021-03-12T16:24 (+15)

Thank you for this wonderful post!

"Humans need places" came to mind as I read it. Alongside leveling up one's personal robustness & grit, I think there is much we can do at the systemic level to reduce the incidence of bycatch in EA.

OllieBase @ 2021-03-12T12:55 (+15)

Thanks for this really thoughtful post :)

Zahara's trying to maximize her chance of a 10% increase in her altruistic output.

I agree it's a good idea to focus on small gains. Even better advice might be to focus on learning or building skills which might give Zahara a 10% increase in her altruistic output later on (e.g. focus on studying, learn something new, try and find a job which means she'll learn a lot).

You could apply the same idea to donating 0% or 1% this year. It's also fine to donate 0% for several years and then give when you're more comfortable.

In general, I think EAs (myself included) are too focused on generating impact today and should focus more on building skills to generate impact later on.

lexande @ 2021-03-12T22:19 (+10)

Unfortunately this competes with the importance of interventions failing fast. If it's going to take several years before the expected benefits of an intervention are clearly distinguishable from noise, there is a high risk that you'll waste a lot of time on it before finding out it didn't actually help, you won't be able to experiment with different variants of the intervention to find out which work best, and even if you're confident it will help you might find it infeasible to maintain motivation when the reward feedback loop is so long.

OllieBase @ 2021-03-14T20:58 (+7)

Sorry, perhaps this wasn't clear - I'm not suggesting investing heavily in a single intervention or cause area for a long time, rather building skills that will be useful for solving a variety of problems (e.g. research skills, experience working in teams etc.).

RomeoStevens @ 2021-03-23T00:29 (+9)

I propose that March 26th (six months from Petrov Day on September 26th) be converse Petrov Day.

NunoSempere @ 2021-03-23T16:19 (+8)

Nemo day, perhaps

--alex-- @ 2021-05-23T17:16 (+8)

Is it true that some tech folks started up a low-impact-angst group for all the Zaharas out there? (Asking question, not telling!)

Daniel Tabakman @ 2021-05-23T18:55 (+7)

I have heard rumors about it, but it seems like it might be a little exclusive.

I heard that you might have to provide evidence that you are not already a highly impactful individual, and that you have some amount of angst in your life, so I'm not sure it's for everyone.

--alex-- @ 2021-05-23T22:49 (+5)

Good point! We wouldn't want to encourage people to lower their impact and become angsty to get into an EA Club -- that's what Clubhouse is for...

Tristan Katz @ 2025-07-25T09:22 (+5)

I found this post inspiring while reading it, but after reaching the end I realized that I have very little idea of what it's actually telling me to do. I'm writing this comment far too late in the hope that someone might tell me what I'm missing.

So, how do I avoid being bycatch? Here's my reconstruction of the argument and my evaluation of each point:

1. Build up career capital slowly, over the long-term - ok, but AI timelines are short, and the future is unpredictable. A lot of EAs originally did exactly this by going into careers like medicine only to later find that it doesn't help them, given their updated beliefs. Arguably, being able to pivot is better. ❌

2. Start with small & concrete actions that provide evidence of your altruistic efforts. Ok, but isn't that exactly the kind of bycatch activities this post is warning against? ❌

3. Ask questions to figure out what needs to be done. Ok this point I think is generally good advice, and could help ensure that one's skills end up being useful. ✅

4. Take your time to figure things out. This point counters (1). Taking time to figure things out likely means delaying any commitment to a long-term plan, or switching track several times. I've done this myself, but I think this is what leaves people as bycatch. ❌

5. Engage in the community. This again is good advice, but I'm not sure how it prevents someone becoming bycatch. ❔

6. Not everything needs to be branded as EA. Ok, I suppose if I have some skills or career capital, this helps ensure that they are recognized as useful instead of becoming 'churn'. ✅

7. I understand this one as: be brave and try things. Again, this seems to be exactly what was warned against: the EA who writes blog posts, applies for grants, etc., but nothing happens. Trying things has a high cost and can leave people very demotivated in a competitive ecosystem. ❌

8. Follow EA principles. This seems more like asking the bycatch not to give up hope, rather than real advice. ❌

9. The wording of this one was strange: not being bycatch is obviously a way to not be bycatch. But I suppose what is meant is: try to be valuable very generally, rather than just being a committed EA employee. This is useful, but the real question is how, and as I've indicated I feel fairly unconvinced by most of the answers in the rest of the post.

Emrik @ 2022-05-19T00:41 (+3)

I'm really sorry I downvoted... I love the tone, I love the intention, but I worry about the message. Yes, less ambition and more love would probably make us suffer less. But I would rather try to encourage ambition by emphasising love for the ambitious failures. I'm trying to be ambitious, and I want to know that I can spiritually fall back on goodwill from the community because we all know we couldn't achieve anything without people willing to risk failing.