Visualizations of the significance - persistence - contingency framework

By Jakob @ 2022-09-02T18:22 (+27)

Will MacAskill’s What We Owe the Future was published on Sep 1st in Europe. In chapter 2, You Can Shape the Course of History, Will presents “a framework for assessing the long term value of an event”, with the dimensions 1) significance, 2) persistence, and 3) contingency. Will explains the terms in the following way:

- Let’s start by assuming that some event or action will create a state of affairs, then

- Significance is the average value added by bringing about that state of affairs

- Persistence is how long the state of affairs is expected to last, once it is brought about

- Contingency considers, if the event or action didn’t occur, would the state of affairs eventually be brought about anyways? More precisely defined: in expectation, what % of the time spent in the state of affairs (defined as the persistence in the bullet above) would be expected anyways, in a world without the event or action?

In this post I’ll start by presenting some visualizations of this that hopefully can make these concepts intuitive, and then use those visualizations to discuss the claim that we’re living in a period of plasticity. Note that as I refer to the book throughout this post, it is based on my best reading of it, and any errors are my own (I have not been in touch with Will’s team).

The significance, persistence and contingency framework

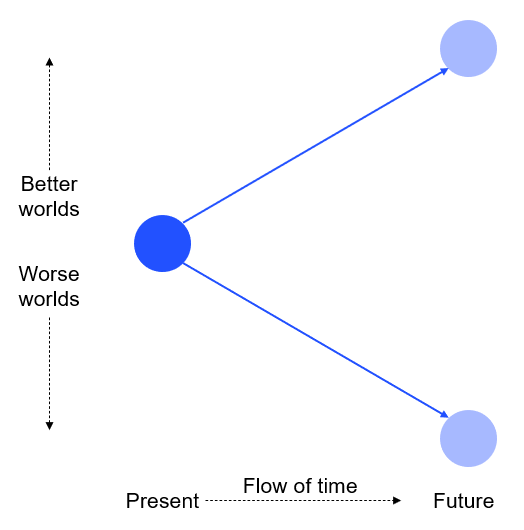

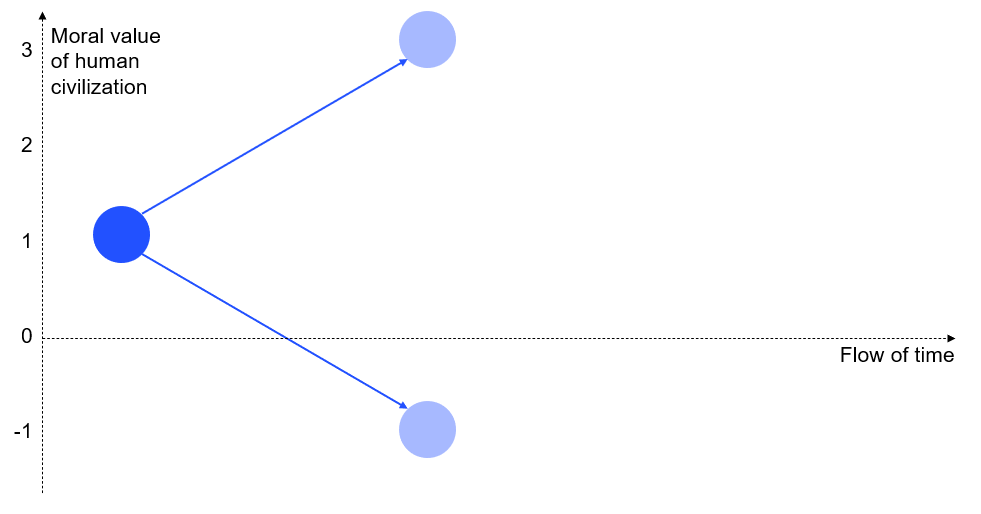

The basic tool I’ll use for the visualizations is a directed acyclic graph showing, on the x-axis, the flow of time (which could be measured in e.g., days, years, generations or millennia for each node, depending on what question you’re asking), and on the y-axis, some normative value of the world. This is a big simplification compared to how I read Will’s use of the framework. Notably, when he refers to a “state of affairs”, that may refer to the existence of some institution or technology, which is just one out of many variables that would determine my y-axis. Mapping all of this to one dimension may miss out on some nuance, so if you haven’t done it already, please buy Will’s book and read the more detailed discussion there!

What, exactly, you use to assign a value on the y-axis depends on your moral beliefs, but I won’t go into that discussion here. For now, all I’ll assume is that it is possible to assign some value on this axis, that the current value of the world is “1” (of some unit), and that going forward, the world can take higher or lower values, including values below “0”.

I will then assume that some event or action that we can choose to take can shift the probability of different future scenarios, and in particular, that we can make good outcomes more likely.

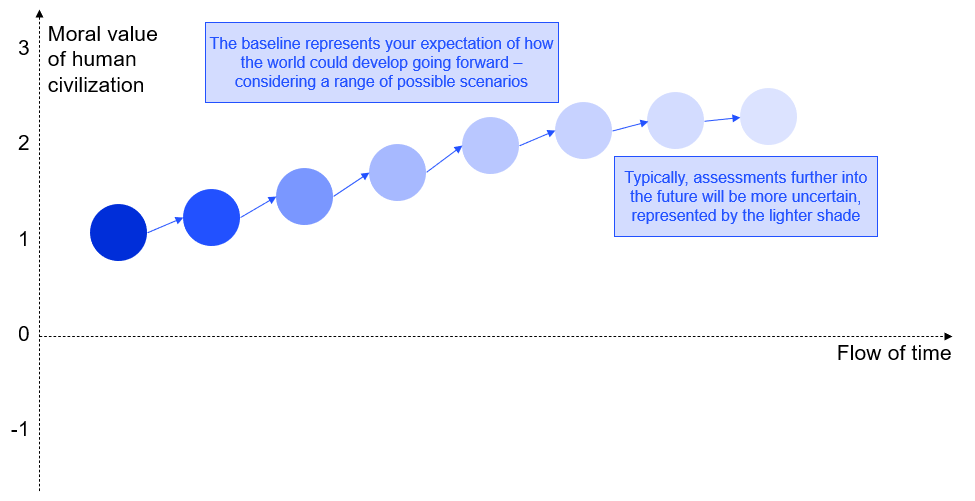

For simplicity, I’ll start out considering a deterministic world, where we know a baseline scenario for the future, and we know how the action we’re considering would change said baseline. For the probability enthusiasts, don’t worry, I’ll add back some uncertainty later.

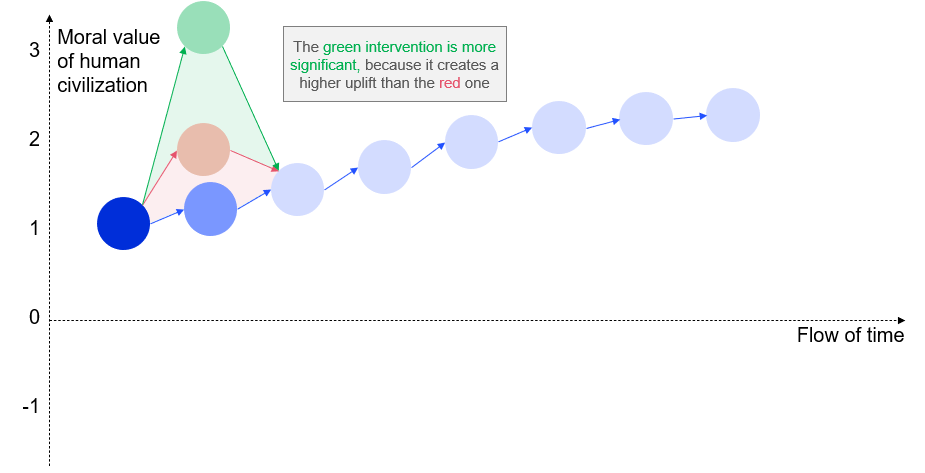

Under this framework, the significance of an action is illustrated by how much it lifts the value of civilization over its lifetime. In the chart below, this is represented by a green and a red action. If you prefer to think in examples, you can assume that the actions below are:

- Green: the city of Oslo, where I live, decides to pay for seasonal flu vaccines for all its citizens

- Red: the city of Oslo instead decides to pay the same amount for beautiful snow sculptures in public parks this winter

- Note that both actions are likely to only matter for the winter, but one is likely to matter more than the other

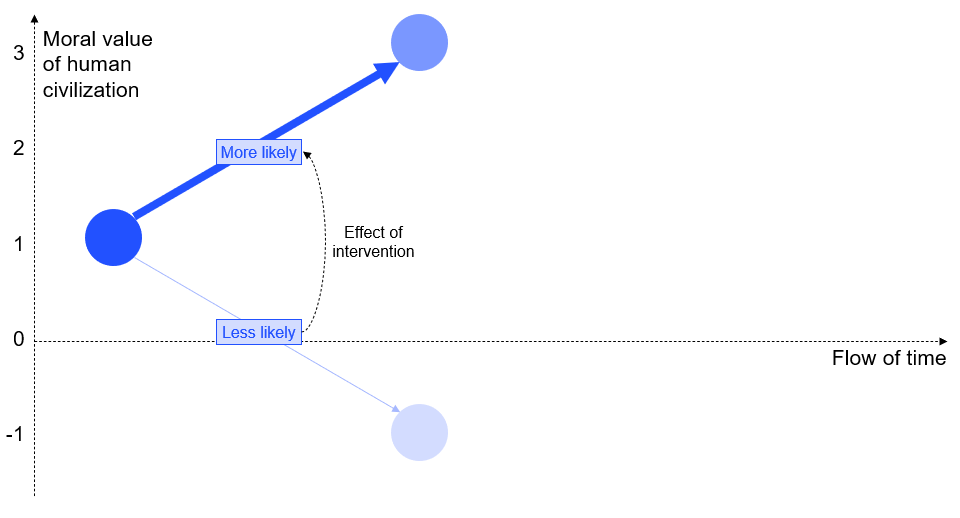

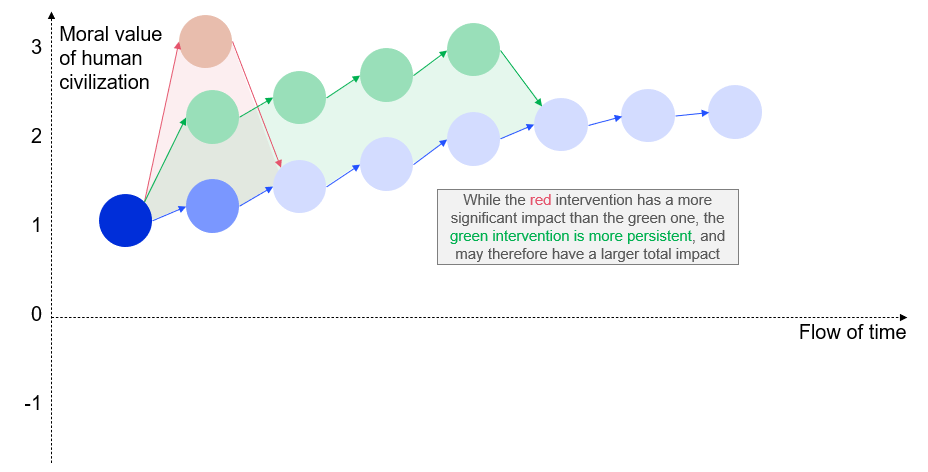

The persistence measures how long it takes before the value of civilization converges back to the baseline path. In the figure below, you may consider that the red action still is to pay for snow sculptures, while the green action is to pay for stone sculptures instead, which won’t be as pretty, but which will last longer.

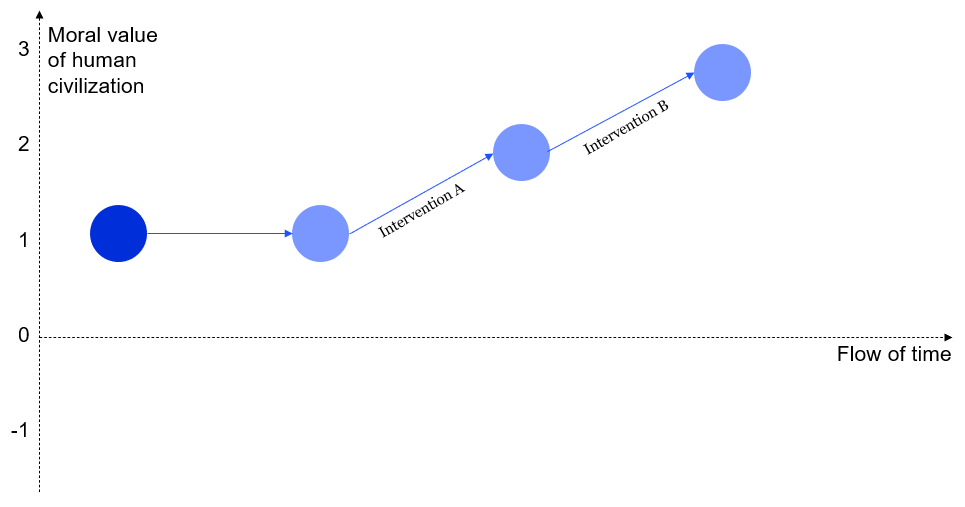

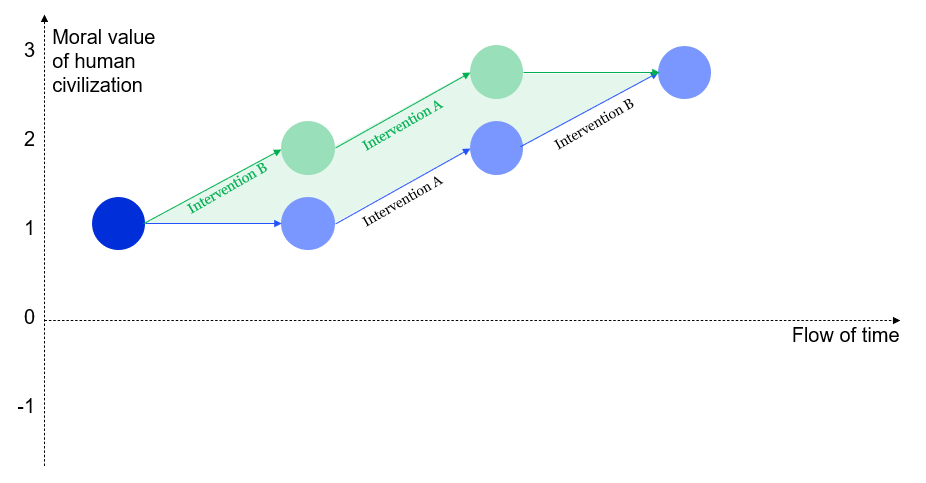
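For readers who prefer code to charts, the deterministic picture of significance and persistence can be sketched in a few lines of Python. This is only an illustration of my simplified one-dimensional model, not something from the book: the function names, the six-node horizon and the specific values for the snow and stone sculptures are all made-up assumptions.

```python
def effect_per_step(baseline, with_action):
    """Value added by the action at each node (action path minus baseline)."""
    return [a - b for a, b in zip(with_action, baseline)]


def persistence(baseline, with_action):
    """Number of nodes at which the action path differs from the baseline."""
    return sum(1 for d in effect_per_step(baseline, with_action) if d != 0)


def significance(baseline, with_action):
    """Average value added per node while the effect lasts."""
    steps = persistence(baseline, with_action)
    if steps == 0:
        return 0.0
    return sum(effect_per_step(baseline, with_action)) / steps


# Hypothetical numbers: the baseline stays at the current value of 1.
baseline = [1.0, 1.0, 1.0, 1.0, 1.0, 1.0]
# Snow sculptures: a larger lift, but only for one node.
snow_sculptures = [1.0, 1.5, 1.0, 1.0, 1.0, 1.0]
# Stone sculptures: a smaller lift that lasts four nodes.
stone_sculptures = [1.0, 1.25, 1.25, 1.25, 1.25, 1.0]

for name, path in [("snow", snow_sculptures), ("stone", stone_sculptures)]:
    s = significance(baseline, path)
    p = persistence(baseline, path)
    print(name, "significance:", s, "persistence:", p, "total:", s * p)
```

With these made-up numbers, the snow sculptures have the higher significance (0.5 versus 0.25), but the stone sculptures add more total value (1.0 versus 0.5) because they persist four times as long.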

The contingency is slightly harder to visualize, since it considers when specific changes would happen in a counterfactual scenario. Let’s therefore start by considering the following baseline, where we have added some notion of how the improvements in the consecutive time steps are brought about: first, intervention A would be implemented, and then, intervention B. For simplicity, let’s assume that both interventions have the same significance, and both would persist until the end of humanity (which, in expectation, happens at the same time irrespective of these interventions).

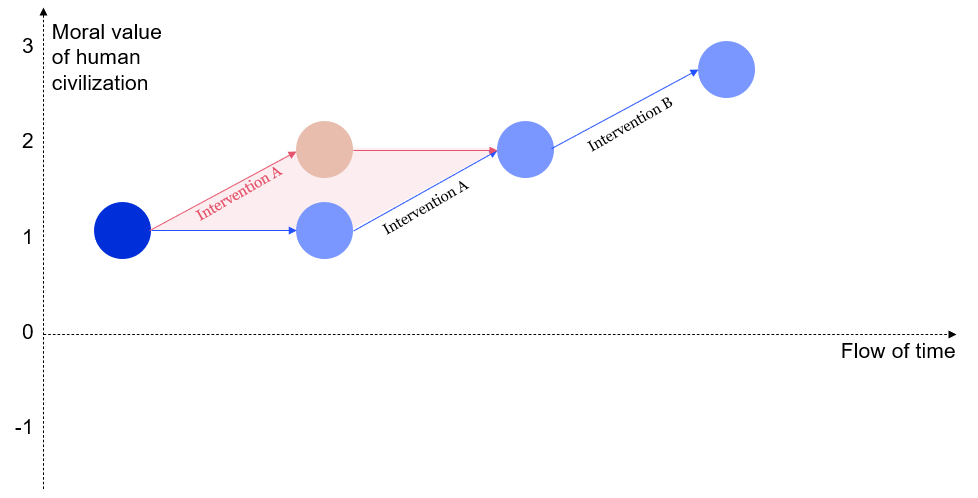

Assume that we can take one action today - either intervention A or B - and that is all we’re allowed to do. How would that look?

Taking action A today would only accelerate it by one node, since in the baseline A would be implemented at the very next step anyway. In contrast, taking action B today would accelerate it by two nodes. Therefore, the counterfactual impact of taking action B today is larger than that of taking action A.
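The same comparison can be written out as a small Python sketch. The horizon of ten nodes and the gain of 1 per node are hypothetical numbers I picked for illustration, as are the names `BASELINE_ARRIVAL`, `total_value_added` and `counterfactual_gain`. The only things taken from the scenario above are that A arrives one node from now, B two nodes from now, and both have the same significance and persist until the end.

```python
HORIZON = 10         # hypothetical number of nodes until the end of humanity
GAIN_PER_NODE = 1.0  # hypothetical; same significance for A and B

# Node at which each intervention arrives in the baseline (today is node 0).
BASELINE_ARRIVAL = {"A": 1, "B": 2}


def total_value_added(arrival):
    """Value added over the horizon, given the arrival node of each intervention.

    Each intervention adds GAIN_PER_NODE at every node from its arrival
    until the end of the horizon.
    """
    return sum(GAIN_PER_NODE * (HORIZON - node) for node in arrival.values())


def counterfactual_gain(intervention):
    """Extra value from doing `intervention` today instead of waiting for the baseline."""
    accelerated = dict(BASELINE_ARRIVAL)
    accelerated[intervention] = 0  # we do it today
    return total_value_added(accelerated) - total_value_added(BASELINE_ARRIVAL)


print("Gain from doing A today:", counterfactual_gain("A"))
print("Gain from doing B today:", counterfactual_gain("B"))
```

In this sketch, doing B today is worth twice as much as doing A today, purely because B would otherwise have arrived later. Neither intervention is better than the other once it is in place.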

This is a bit of a simplified model, and one may balk at some of the assumptions. For instance, if we do A today, why don’t we just do B tomorrow, and end up with the same improvements? This is a fair objection to my specific scenarios, which aren’t optimized for plausibility, but rather for ease of visualization. It is possible to address the assumptions, for instance by adding a fixed cost for each intervention you choose to accelerate, and setting a budget so that you can only accelerate one intervention (e.g., because it requires you to spend your entire career on it). However, I don’t think this is critical for our purposes - this is just a stylized example, and there are other examples of how contingency can arise, though most are harder to visualize.

Notes on uncertainty

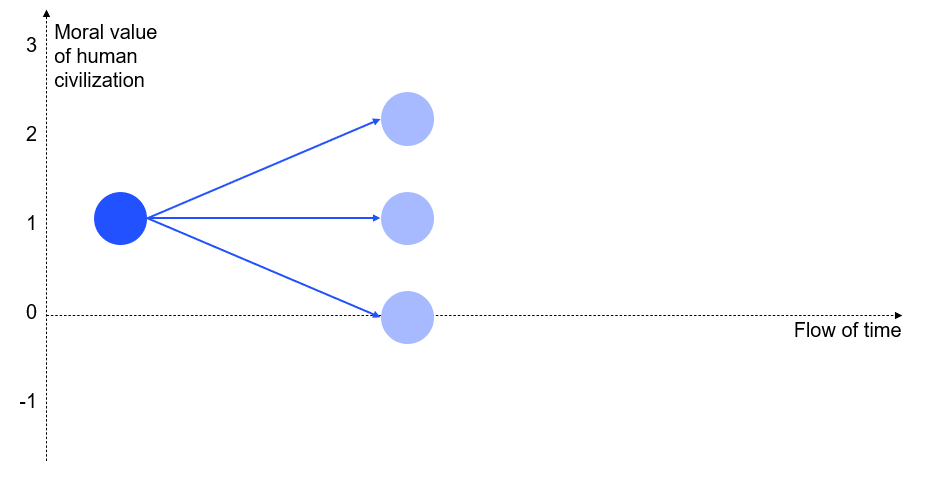

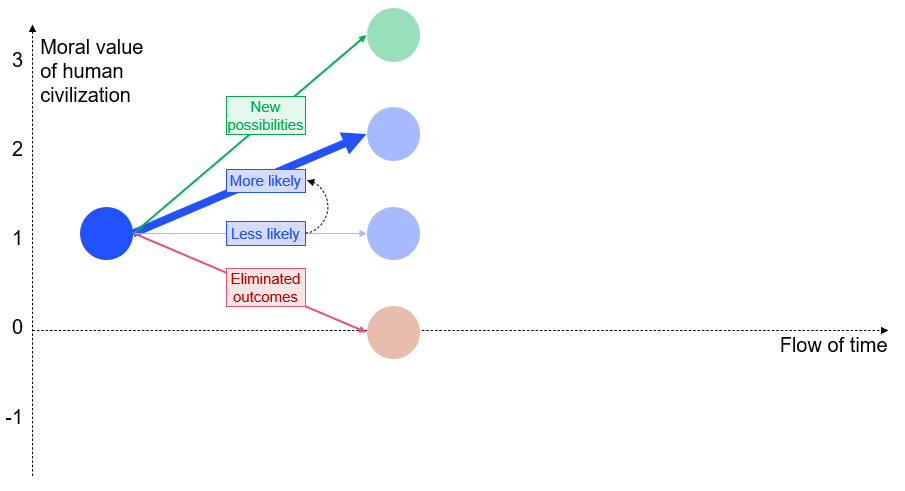

I will now switch back to visualizations with uncertainty. Consider then a baseline which includes different possible future scenarios, each with some estimated probability.

There are three ways an action could have positive significance compared to this baseline: 1) it could shift probabilities from the lower-valued to the higher-valued scenarios; 2) it could eliminate the lower-valued scenarios altogether; and 3) it could create new, higher-valued scenarios. These are illustrated below in blue, red and green respectively.

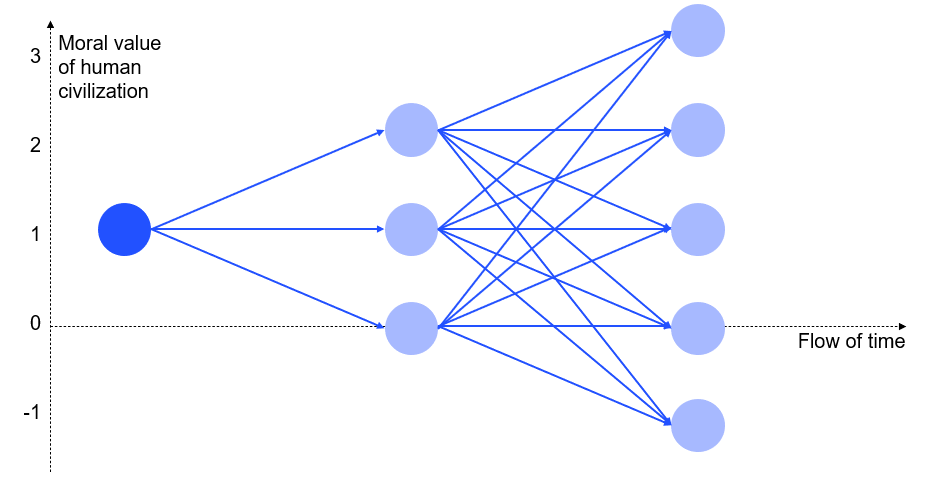
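To make the three routes concrete, here is a minimal Python sketch that computes the expected value of a baseline and of three modified sets of scenarios. All values and probabilities are invented for illustration, and `expected_value` is just a helper name I chose. Each scenario is a `(value, probability)` pair.

```python
def expected_value(scenarios):
    """Probability-weighted average value over (value, probability) pairs."""
    total_probability = sum(p for _, p in scenarios)
    # Guard against scenario sets whose probabilities do not sum to 1.
    assert abs(total_probability - 1.0) < 1e-9
    return sum(value * p for value, p in scenarios)


# Hypothetical baseline: a bad, a middling and a good scenario.
baseline = [(-1.0, 0.25), (1.0, 0.5), (2.0, 0.25)]

# 1) Shift probability from the lower-valued to the higher-valued scenario.
shifted = [(-1.0, 0.125), (1.0, 0.5), (2.0, 0.375)]

# 2) Eliminate the lowest-valued scenario (its probability moves to the middle one).
eliminated = [(1.0, 0.75), (2.0, 0.25)]

# 3) Create a new, higher-valued scenario that was not in the baseline.
new_scenario = [(-1.0, 0.25), (1.0, 0.5), (2.0, 0.125), (4.0, 0.125)]

for name, scenarios in [
    ("baseline", baseline),
    ("shifted", shifted),
    ("eliminated", eliminated),
    ("new scenario", new_scenario),
]:
    print(name, expected_value(scenarios))
```

Each of the three modified sets has a higher expected value than the baseline. Which route gives the largest improvement depends entirely on the numbers you plug in.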

This gets even more complex when considering multiple stages in time. Even when adding just one more stage, I wouldn’t know how to start visualizing the potential impacts of an intervention. The chart below is a simplification even though it only shows the baseline, since it makes quite limited assumptions about the possible future states of the world in each stage.

Complicating this picture even further, expected values can be dominated by tail outcomes - if an action could have a really large significance, persistence and/or contingency, this may dominate the moral calculus, even if the most likely outcomes of the action are more moderate. Some have objected that this has properties resembling Pascal’s Mugging, but the relevant probabilities may not be Pascalian - Will explains in the book that for some of the relevant actions we can consider today, the probabilities are significantly higher than e.g., the risk of your house burning down, and you presumably still have insurance against that. Still, if the expected significance, persistence and contingency of our actions is dominated by outlier scenarios - maybe in the 1-5% range of probability, but with at least 2 orders of magnitude larger impact than the most likely scenarios - it poses an epistemic challenge, since it is much harder to get good data on the tail. This is part of the reason why philosophers have argued that we are clueless about the long-run impact of our actions, and one of the arguments Will offers in the book for taking actions that are robustly good across plausible worldviews.
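A quick back-of-the-envelope calculation shows how strongly a tail in this range can dominate. The Python sketch below assumes a stylized action with just two outcomes: a typical outcome with impact 1 and a tail outcome with impact 100 (two orders of magnitude larger). The function name `tail_share` and the two-outcome setup are my own simplifications, not something from the book.

```python
def tail_share(p_tail, tail_impact, typical_impact=1.0):
    """Fraction of the expected impact that comes from the tail outcome."""
    tail_contribution = p_tail * tail_impact
    typical_contribution = (1.0 - p_tail) * typical_impact
    return tail_contribution / (tail_contribution + typical_contribution)


# Hypothetical tail probabilities at both ends of the 1-5% range.
for p_tail in (0.01, 0.05):
    share = tail_share(p_tail, tail_impact=100.0)
    print(f"tail probability {p_tail:.0%}: tail share of expected impact {share:.0%}")
```

Under these assumptions, a 1% tail already accounts for roughly half of the expected impact, and a 5% tail for roughly 84% of it. This means that most of the estimate rests on the part of the distribution we have the least data about.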

Are we living in a period of plasticity?

In the book, Will argues that people alive today may be unusually well positioned to influence the long-run trajectory of civilization. This is a relevant question when deciding whether to try to influence the future by action today, or through resource accumulation that can be used to take action in the future (potentially by your descendants in the very far future). It is also a relevant question when considering whether to use our resources today to try to help people in the future, or whether to use them to help people and animals today.

The book presents the following argument:

- Civilization can still follow many different future trajectories, so our actions today can drive contingent outcomes (chapter 3, Moral change)

- In the future, the rate of change in society may decrease, with several implications:

  - The outcomes we create with our actions today may get locked in for a long time, so our actions today can also drive persistent outcomes (chapters 4 Value lock-in and 5 Extinction, and partially also 6 Collapse and 7 Stagnation)

  - Increasing ossification may decrease the potential for significant action in the future compared to today

I will not add much to this argument, beyond noting the following:

- Historically, technology has typically increased our powers to shape the world. If technology continues getting more powerful, this would be an argument for assuming that the significance of our actions will increase going forward. This would favor a strategy of accumulating resources to take more action later.

- The counterpoint to this is that technology may make irreversible changes (e.g., there may be no catching up with the first AGI, in which case the values it is optimizing for are likely to dominate the future), or that technology may shift who wields influence (e.g., shift the power balance between liberal democracies vs. autocracies). Both of these are arguments in favor of taking action before the power to influence the future is lost (either because of irreversible changes or power shifts)

- Historically, our understanding of the world, the future, and the outcomes of our actions (in short, our “wisdom”) has also been increasing, although some have argued that our wisdom has not grown as fast as our powers (Bostrom, 2019). If this is the case, it would be an argument for accelerating our wisdom-building, as well as saving up to wield influence later.

- However, a strategy of delaying action may not be available to us in practice. We have few mechanisms to reliably wield power far into the future. In his book, Will explicitly mentions Scotscare, a charity that is still operating long after its founders died, but which presumably is no longer achieving their goals. The charity is focused on supporting Scots in London, which made sense when it was founded, since Scots in London were poor and discriminated against. That is no longer the case, so it is reasonable to assume the founders would want the organization to shift strategy, but that is no longer possible. Until we have institutions that can more reliably allow us to wield power in the future (in the way that we would actually want them to use it, and not just based on our best judgement today), we may be best off deploying our resources today rather than trying to save them for later.